Optimising how your stateless applications scale can cut costs by around 30% and improve performance by 25%. Here is how to refine your scaling strategy:

- Externalise state: Store session data in external systems such as databases or Redis caches. This keeps your servers stateless and ready to scale.

- Use auto-scaling: Monitor metrics such as CPU, memory, and response times, and configure auto-scaling with tools like AWS Auto Scaling Groups.

- Improve load balancing: Distribute traffic evenly across all servers. Use tokens instead of cookies to avoid sticky sessions.

- Use caching and CDNs: Keep frequently accessed data in memory and serve static content through CDNs. This reduces server load and speeds up responses.

- Monitor and adjust continuously: Track metrics and tune scaling thresholds to prevent waste and maintain performance.

Quick Reference Table

| Strategy | Key Benefit | Tools / Methods |
|---|---|---|
| Externalise state | Simplifies scaling | Redis, JWTs |
| Scale on demand | Matches resources to demand | AWS Auto Scaling |
| Balance loads | Prevents server overload | Round-robin, IP-based |
| Cache and use CDNs | Faster data access | Redis, Cloudflare |
| Monitor continuously | Lower costs, better uptime | Nagios, Apdex |

These steps help your app scale efficiently while keeping costs low.

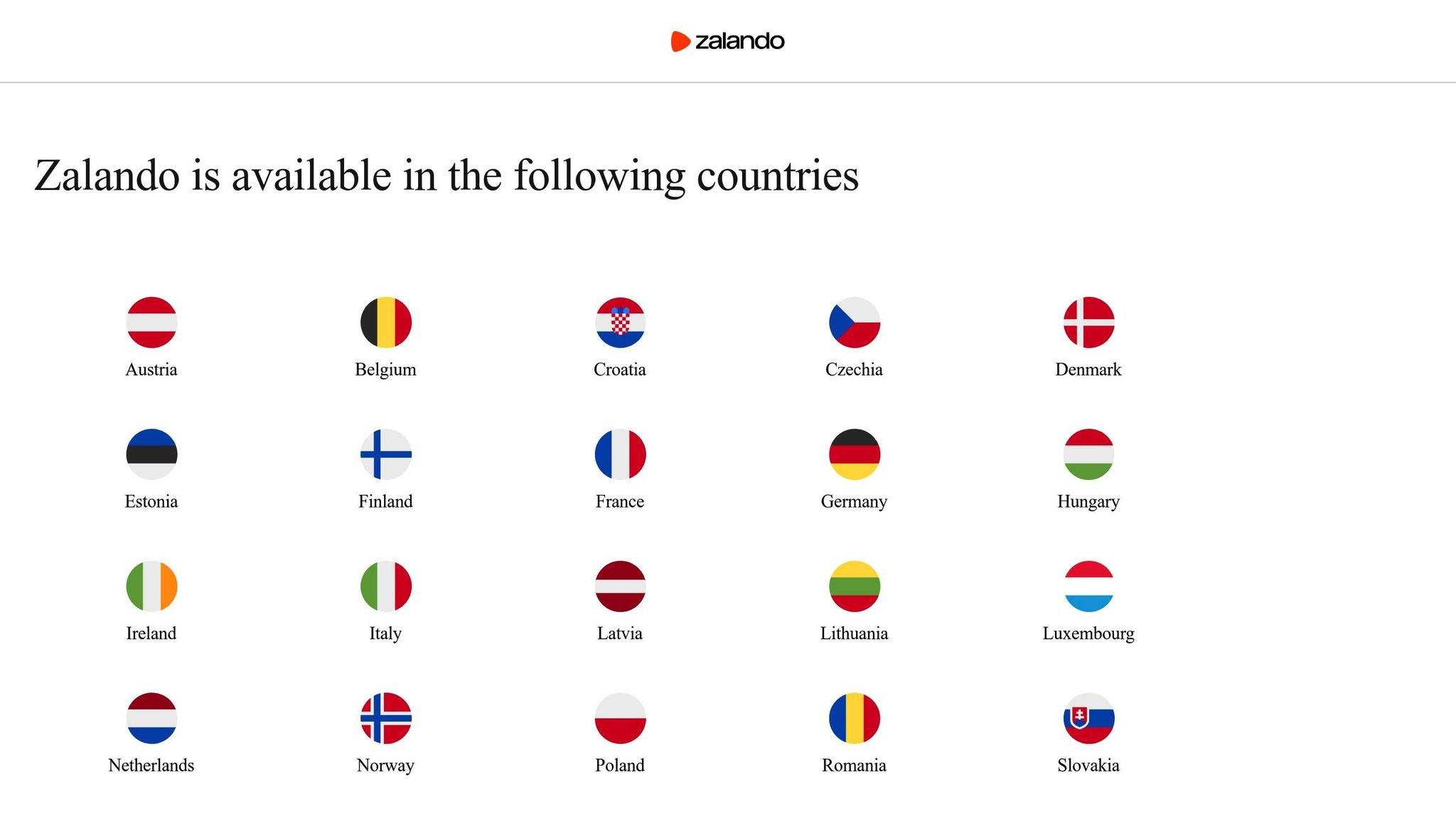

Autoscaling at Scale: How We Manage Capacity @ Zalando - Mikkel Larsen, Zalando SE

1. Externalise State From Your Application

To build large, high-performance systems, it's essential to separate application logic from stored data. When session or temporary data lives on a single server, every request from that user must go to that server, which limits scalability. Adding more servers won't help much if users remain tied to the one holding their data.

Modern stateless architectures externalise state, enabling any server to process requests and considerably simplifying scaling. - ByteByteGo [4]

When a server stores no local state, it can handle any new request without relying on data held in its own memory. This lets the service scale horizontally and reduces the risk of lost data or broken sessions.

Store State in Databases and Distributed Caches

Databases and distributed caches act as your application's memory. The application servers hold no state of their own, while these external systems manage persistent data [5].

Token-based authentication, such as JSON Web Tokens (JWTs), is a simple example. The user's identity and permissions are encoded in the token itself, so the server doesn't need to store that information.

Consider a shopping app. Instead of keeping cart data on the server, the app stores the cart in a Redis cache or a database, keyed by user ID. Any server that receives a cart request fetches the data from the external store, processes it, and returns the response.
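A minimal sketch of that cart flow follows. `InMemoryStore` is a hypothetical stand-in for Redis so the example runs on its own, and the `cart:<user_id>` key format is an assumption. `CartService` holds no cart data itself, so two instances behave like interchangeable servers.

```python
import json
from typing import Dict, Optional


class InMemoryStore:
    """Hypothetical stand-in for an external store such as Redis.

    Exposes get/set on string keys, similar in spirit to Redis GET and SET.
    In production this would run outside the app servers.
    """

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class CartService:
    """Stateless handler: the only thing it holds is a reference to the store."""

    def __init__(self, store: InMemoryStore) -> None:
        self.store = store

    @staticmethod
    def _key(user_id: str) -> str:
        return f"cart:{user_id}"  # hypothetical key naming scheme

    def get_cart(self, user_id: str) -> Dict[str, int]:
        raw = self.store.get(self._key(user_id))
        return json.loads(raw) if raw else {}

    def add_item(self, user_id: str, sku: str, qty: int = 1) -> Dict[str, int]:
        # Fetch from the external store, modify, and write back.
        cart = self.get_cart(user_id)
        cart[sku] = cart.get(sku, 0) + qty
        self.store.set(self._key(user_id), json.dumps(cart))
        return cart
```

The read-modify-write in `add_item` is not atomic. With real Redis you would typically store the cart as a hash and use `HINCRBY`, so concurrent requests handled by different servers don't overwrite each other.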

Your database design strongly affects how well it scales, and a well-designed database scales better [7]. Index frequently queried columns, shard data across multiple servers, and replicate data so it stays available.
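Sharding needs a stable way to map each key to a server. The sketch below hashes the user ID with SHA-256 and takes the result modulo the shard count; the shard connection strings are hypothetical placeholders. Python's built-in `hash()` is deliberately avoided because it is randomised per process, so different app servers would disagree about where a user lives.

```python
import hashlib
from typing import List

# Hypothetical shard connection strings; replace with your own.
SHARD_DSNS: List[str] = [
    "postgres://db-shard-0/app",
    "postgres://db-shard-1/app",
    "postgres://db-shard-2/app",
    "postgres://db-shard-3/app",
]


def shard_for(key: str, num_shards: int) -> int:
    """Deterministically map a key to a shard index in [0, num_shards)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def dsn_for_user(user_id: str) -> str:
    # Every app server computes the same answer, so no routing state is needed.
    return SHARD_DSNS[shard_for(user_id, len(SHARD_DSNS))]
```

Plain modulo hashing moves most keys when you add a shard; consistent hashing or a lookup directory reduces that reshuffling at the cost of extra complexity.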

Here is how different approaches to storing state compare:

| Storage Approach | Scalability | Fault Tolerance | Complexity |
|---|---|---|---|
| Local server state | Limited to that server | Lost if the server fails | Simple at first |
| External data store | Scales horizontally | Survives server failures | Moderate setup |
| Distributed cache | Highly scalable | Built-in redundancy | Fairly complex |

Externalising state not only scales well but also lowers running costs over time.

Caching matters here too. Storing frequently used data in memory reduces database load and speeds up responses [8]. Tools like Redis and Memcached let multiple servers share cached data, so the servers stay stateless while still getting a performance boost.

Separating state from application logic also makes it easier to adopt stateless communication protocols.

Use Stateless Communication Protocols

Externalised state pairs well with stateless protocols such as HTTP and RESTful APIs, which ensure that each request carries everything the server needs to handle it [9]. Under REST principles, API calls should include authentication tokens, request parameters, and any required data, so servers depend only on external stores.

For example, Twitter handles over 300,000 tweets every minute using REST APIs, showing how a stateless design can spread large workloads across many servers [9]. For consistency, make your APIs idempotent, so that repeating the same request produces the same result [6].
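Idempotency is often implemented with a client-supplied idempotency key. This sketch assumes a shared store with an atomic set-if-absent operation (Redis provides this through `SET` with the `NX` option; `SharedStore` here is an in-memory stand-in). The first request reserves the key, does the work, and saves the response; any retry, on any server, replays the saved response instead of charging twice. `handle_payment` and the `charge` callback are hypothetical.

```python
import json
from typing import Callable, Dict, Optional

IN_PROGRESS = "__in_progress__"


class SharedStore:
    """In-memory stand-in for a shared store such as Redis (hypothetical).

    put_if_absent mimics an atomic "set if not exists" (Redis SET ... NX).
    """

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def put_if_absent(self, key: str, value: str) -> bool:
        if key in self._data:
            return False
        self._data[key] = value
        return True

    def set(self, key: str, value: str) -> None:
        self._data[key] = value

    def delete(self, key: str) -> None:
        self._data.pop(key, None)


def handle_payment(store: SharedStore, idempotency_key: str, amount_cents: int,
                   charge: Callable[[int], dict]) -> dict:
    """Process a payment at most once per idempotency key."""
    # Reserve the key first so concurrent retries elsewhere back off.
    if not store.put_if_absent(idempotency_key, IN_PROGRESS):
        stored = store.get(idempotency_key)
        if stored is None or stored == IN_PROGRESS:
            return {"status": "in_progress"}  # client should retry later
        return json.loads(stored)  # replay the original response
    try:
        result = charge(amount_cents)
    except Exception:
        store.delete(idempotency_key)  # release the key so a retry can run
        raise
    store.set(idempotency_key, json.dumps(result))
    return result
```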

Apply rate limiting to prevent overload and maintain service quality [9]. Because stateless servers don't track each client's history, use an external store such as Redis to keep rate-limit counters shared by all servers.

Load balancers complement stateless designs by routing requests to servers with available capacity. This spreads the load, prevents any single machine from being overwhelmed, and makes scaling straightforward [9].
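Here is a minimal fixed-window rate limiter along those lines. `CounterStore` is a hypothetical in-memory stand-in for Redis `INCR` combined with `EXPIRE`, and the limit and window values are assumptions. Because the counter lives in the shared store and is keyed by client and time window, every server enforces the same limit.

```python
import time
from typing import Callable, Dict, Tuple


class CounterStore:
    """In-memory stand-in for Redis counters with expiry (hypothetical)."""

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        # key -> (count, expires_at)
        self._counters: Dict[str, Tuple[int, float]] = {}

    def incr_with_ttl(self, key: str, ttl_seconds: int) -> int:
        now = self._clock()
        count, expires_at = self._counters.get(key, (0, 0.0))
        if expires_at <= now:  # missing or expired: start a fresh counter
            count, expires_at = 0, now + ttl_seconds
        count += 1
        self._counters[key] = (count, expires_at)
        return count


def allow_request(store: CounterStore, client_id: str, limit: int,
                  window_seconds: int, clock: Callable[[], float] = time.time) -> bool:
    """Allow at most `limit` requests per client per fixed time window."""
    window = int(clock() // window_seconds)
    key = f"ratelimit:{client_id}:{window}"
    return store.incr_with_ttl(key, window_seconds) <= limit
```

Fixed windows allow short bursts at window boundaries; sliding-window or token-bucket limiters smooth this out at the cost of slightly more bookkeeping.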

2. Set Up Metrics and Auto-Scaling

Choosing the right metrics and configuring auto-scaling keeps your app running smoothly, even when many users arrive at once. Without good monitoring, you risk overprovisioning resources or running into sudden problems. Effective monitoring is the foundation of smart auto-scaling.

Choose the Right Metrics to Monitor

Monitoring the right metrics makes scaling cost-effective:

- CPU utilisation: Sustained high CPU indicates you need more capacity.

- Memory usage: Even when CPU is fine, high memory usage can slow things down.

- Response times: Longer-than-usual response times suggest you may need to scale.

- Queue lengths: Growing queues indicate your app is nearing its capacity.

- Request rates: Sharp increases in requests signal that you may need to scale up early.

For example, a media streaming company implemented auto-scaling for its EC2-based infrastructure. By closely monitoring CPU, memory, and queue metrics, it cut EC2 costs by 40%, maintained 99.9% uptime, and handled three times its normal load during peak periods [10].

Configure Auto-Scaling Correctly

AWS Auto Scaling Groups make it easy to match capacity to demand while keeping costs in check [12]. Each setup combines a launch template, the group itself, and scaling policies [11].

Common scaling policies include:

- Target tracking scaling: Set a target value for a metric, informed by historical data, and the system adjusts capacity to maintain it.

- Step scaling: Define thresholds that add capacity in steps as demand rises.

- Predictive scaling: Use historical data and machine learning to forecast future demand.

Configure a cooldown period so that new instances can start up before more are added. Enabling detailed monitoring, with metrics reported every minute, lets the system react quickly to changes [11].

Health checks are essential to ensure that only healthy instances count toward scaling decisions [11]. Setting up notifications for scaling events also helps you learn from traffic patterns and plan scaling more effectively.

When configured well, auto-scaling saves a lot. Poor scaling can increase cloud costs by up to 32%, while well-tuned auto-scaling can cut operating costs by about 30% and improve application performance by 25% [14][1]. Best of all, AWS Auto Scaling itself carries no extra charge: you pay only for the resources you use and standard monitoring fees [11][12]. A good auto-scaling plan keeps performance steady and spending under control, particularly for stateless apps.
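The sketch below illustrates the idea behind target tracking with a cooldown. It uses a simplified proportional rule (current capacity multiplied by actual metric over target), clamped to min/max bounds; this demonstrates the principle and is not AWS's internal algorithm. The 50% CPU target, the 2 to 20 instance bounds, and the 300-second cooldown are assumed values.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    target_cpu: float = 50.0        # assumed target average CPU, in percent
    min_instances: int = 2
    max_instances: int = 20
    cooldown_seconds: float = 300.0


class TargetTrackingScaler:
    def __init__(self, policy: ScalingPolicy, current: int) -> None:
        self.policy = policy
        self.current = current
        self.last_scaled_at = float("-inf")

    def desired_capacity(self, avg_cpu: float) -> int:
        # Scale capacity in proportion to how far the metric is from target.
        raw = math.ceil(self.current * avg_cpu / self.policy.target_cpu)
        return max(self.policy.min_instances, min(self.policy.max_instances, raw))

    def evaluate(self, avg_cpu: float, now: float) -> int:
        # During cooldown, hold capacity so new instances can warm up.
        if now - self.last_scaled_at < self.policy.cooldown_seconds:
            return self.current
        desired = self.desired_capacity(avg_cpu)
        if desired != self.current:
            self.current = desired
            self.last_scaled_at = now
        return self.current
```

In practice AWS manages target tracking for you; a model like this is mainly useful for reasoning about how the target, bounds, and cooldown interact before you pick values.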

3. Improve Load Balancing and Health Checks

Alongside auto-scaling, effective load balancing and thorough health checks keep stateless systems stable and responsive. Together they help applications handle growing traffic smoothly. Even with excellent auto-scaling, poor traffic distribution or undetected system problems can slow things down under heavy use.

Distribute Traffic Effectively

Load balancing spreads incoming requests across multiple servers so that no single server is overloaded. This is especially important for stateless apps, where each request is handled independently.

Load balancing is the method of distributing workloads, or incoming network traffic, between the available servers to handle traffic fluctuations and improve availability and reliability. - Volico [15]

Data from 2020 shows that an effective load balancing strategy can reduce server response times by up to 70%. A 2021 study of high-traffic websites also found that uptime rose from 99.74% to 99.99% with effective load balancing [16].

In cloud environments, software load balancers work well. They let you add, change, and repair servers without disrupting live traffic [15]. For stateless apps, avoid sticky sessions: instead of cookies that hold session information, use tokens. These tokens can be included in the request or in the URL (for example, jde-AIS-Auth-Device) [19].

Choosing the right load balancing algorithm matters. Round-robin and least-connections work well in many cases, while IP-based hashing routes each client according to its IP address for predictable distribution [16][20]. Once traffic is flowing evenly, make sure only healthy servers receive requests.
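For illustration, here are minimal versions of the three strategies mentioned. Server names are hypothetical, and a real load balancer would also remove unhealthy servers from the pool before picking one. IP hashing uses SHA-256 so the mapping is stable across processes and restarts.

```python
import hashlib
import itertools
from typing import Dict, List


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation."""

    def __init__(self, servers: List[str]) -> None:
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)


class LeastConnectionsBalancer:
    """Sends each request to the server with the fewest active connections."""

    def __init__(self, servers: List[str]) -> None:
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        # min() returns the first server among ties, in insertion order.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1


def ip_hash_pick(client_ip: str, servers: List[str]) -> str:
    """Route the same client IP to the same server while the pool is unchanged."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:4], "big") % len(servers)]
```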

Set Up Automated Health Checks

Automated health checks are essential for system stability, especially in dynamic environments. They monitor both internal components, such as databases, caches, and APIs, and external dependencies to confirm that everything is working.

Keep these checks lightweight, run them frequently, and focus on critical functionality. Secure health-check endpoints with HTTPS, API keys, or OAuth tokens [17], and choose the approach that suits your app [17][18].

It's also important to distinguish critical failures from minor ones. A broken payment system needs immediate attention, whereas an issue in a data lookup service may not. According to API7.ai, tools such as Nagios, integrated with Jenkins, can provide detailed insight into endpoint health [17].
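A minimal sketch of a health-check aggregator that separates critical from non-critical dependencies follows. The check names and the 200/503 convention are assumptions: a failed critical check (such as payments) marks the instance unhealthy so the load balancer stops routing to it, while a failed non-critical check (such as search) only reports `degraded`. Check names are assumed to be unique across both groups.

```python
from typing import Callable, Dict, Tuple

Check = Callable[[], bool]


def run_health_checks(critical: Dict[str, Check],
                      non_critical: Dict[str, Check]) -> Tuple[int, dict]:
    """Return an HTTP-style status code and a JSON-serialisable body."""
    results: Dict[str, bool] = {}
    for name, check in {**critical, **non_critical}.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False  # a check that raises counts as a failure
    if not all(results[name] for name in critical):
        # Critical failure: take this instance out of rotation.
        return 503, {"status": "unhealthy", "checks": results}
    degraded = not all(results[name] for name in non_critical)
    return 200, {"status": "degraded" if degraded else "healthy", "checks": results}
```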


4. Use Caching and Content Delivery Networks

Caching and content delivery networks (CDNs) work together to boost application performance. They reduce the strain on back-end systems and make apps faster and more pleasant to use. By keeping data close to where it's needed, they let your main servers handle more users without trouble.

Caching accelerates data retrieval times by storing frequently requested data in memory, allowing applications to access it much faster than querying databases or other slower data sources. [22]

Caching can cut API response times by 70–90% [24], letting stateless apps absorb more traffic without adding servers. Let's look at this in more detail.

Add In-Memory Caching

In-memory caching provides a fast path to frequently used data. Instead of querying the database repeatedly, your app reads that data directly from memory. Redis and Memcached are popular choices.

Consider a busy online store: each time a user views a product, the app retrieves product details, search data, and prices from the cache instead of querying the database. This reduces database load and speeds things up for shoppers [22].
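This pattern is usually called cache-aside: check the cache, fall back to the database on a miss, then populate the cache. In this sketch `TTLCache` is a hypothetical in-process stand-in for Redis or Memcached, `load_from_db` is whatever query function your app uses, and the five-minute TTL is an assumed value.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Minimal TTL cache standing in for Redis or Memcached (hypothetical)."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._entries: Dict[str, Tuple[Any, float]] = {}  # key -> (value, expires_at)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # expired: treat as a miss
            return None
        return value

    def set(self, key: str, value: Any, ttl_seconds: float) -> None:
        self._entries[key] = (value, self._clock() + ttl_seconds)


def get_product(product_id: str, cache: TTLCache,
                load_from_db: Callable[[str], dict], ttl_seconds: float = 300) -> dict:
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached  # cache hit: no database query
    product = load_from_db(product_id)  # cache miss: query the source of truth
    cache.set(key, product, ttl_seconds)
    return product
```

Because misses are detected by `None`, this version does not cache "not found" results; production code often stores a sentinel value for those so repeated lookups of missing items don't hit the database.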

Good candidates for caching include product listings, user profiles, and search results. Caching them improves both performance and the user experience [21][23].

For more advanced setups, managed services such as ElastiCache offer scalability and reliability. Unlike local caching, which holds data in a single server's memory, these services scale across many nodes. They provide replication, automatic failover, and tight integration with other cloud services [22].

Serve Static Content Through CDNs

CDNs excel at delivering static files such as images, CSS, and JavaScript. By serving these files from locations closer to your users, CDNs reduce latency and offload work from your application servers.

To improve your CDN setup, set appropriate cache control headers or enable automatic caching. A good cache hit ratio (the share of requests served from the cache) typically falls between 85% and 95%, depending on the content type [25]. Static files such as images and stylesheets usually reach these levels, while frequently changing content achieves lower ratios [25].

Here are some ways to improve performance further:

- Use versioned URLs so caches always serve the correct file version.

- Enable HTTP/3 and QUIC for faster, more reliable connections.

- Enable logging on CDN backends to identify and fix issues quickly.
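Versioned URLs are commonly implemented by fingerprinting each file with a hash of its contents, so any change produces a new URL and the CDN never serves a stale copy. The sketch below assumes that approach; the 12-character hash prefix and the specific `Cache-Control` values (a one-year `immutable` lifetime for fingerprinted files, `no-cache` for everything else) are common choices rather than requirements.

```python
import hashlib
import posixpath


def versioned_asset_path(path: str, content: bytes) -> str:
    """Insert a content hash before the extension, e.g. app.css -> app.<hash>.css."""
    digest = hashlib.sha256(content).hexdigest()[:12]
    root, ext = posixpath.splitext(path)
    return f"{root}.{digest}{ext}"


def cache_control_for(fingerprinted: bool) -> str:
    if fingerprinted:
        # The content at this URL never changes, so caches may keep it for a year.
        return "public, max-age=31536000, immutable"
    # HTML and other unversioned responses: store, but revalidate with the origin.
    return "no-cache"
```

Your HTML pages then reference the fingerprinted paths; because the HTML itself is revalidated, users pick up new asset URLs as soon as you deploy.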

For instance, Nike cut API response times by 40% [1] after adopting these practices.

Monitor key metrics such as response time, bandwidth usage, error rates, cache hit ratio, and geographic performance [26]. To see how your CDN really performs, monitor from multiple locations that match where your users are. Combining synthetic monitoring (scripted tests) with real user monitoring helps you catch issues before they affect users [25].

5. Monitor and Adjust Your Scaling Strategy

Scaling strategies are not set-and-forget. As your apps grow and traffic patterns change, you need to monitor performance closely and make adjustments quickly. This prevents waste and avoids unnecessary costs.

Poor scaling strategies can increase cloud costs by 32% [1]. By contrast, teams that continually fine-tune their setups often cut costs by 30% and improve application performance by 25% [1]. Regular reviews are key to effective scaling because they let you set precisely the thresholds you need.

Track Key Performance Metrics

Tracking the right metrics is vital for sound scaling decisions. Focus on metrics that directly affect user experience and business goals, not just technical numbers.

Start with response times and error rates. Slow responses or a sudden rise in 5xx errors can reveal problems immediately. Comparing response times across regions can also expose gaps worth investigating [27]. Set alerts for error rates above 1% to get early warning.
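As a sketch of that alert, the monitor below keeps a sliding window of recent responses and flags when the 5xx rate exceeds 1%. The window length and the minimum of 100 requests (which avoids alerting on tiny samples) are assumptions; in practice your monitoring platform would usually compute this for you.

```python
from collections import deque
from typing import Deque, Tuple


class ErrorRateMonitor:
    def __init__(self, window_seconds: float = 300.0, threshold: float = 0.01,
                 min_requests: int = 100) -> None:
        self.window_seconds = window_seconds
        self.threshold = threshold
        self.min_requests = min_requests
        self._events: Deque[Tuple[float, bool]] = deque()  # (timestamp, is_5xx)
        self._errors = 0

    def record(self, timestamp: float, status_code: int) -> None:
        is_error = 500 <= status_code <= 599
        self._events.append((timestamp, is_error))
        self._errors += int(is_error)
        self._evict(timestamp)

    def _evict(self, now: float) -> None:
        # Drop events that have fallen out of the sliding window.
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, was_error = self._events.popleft()
            self._errors -= int(was_error)

    def error_rate(self) -> float:
        return self._errors / len(self._events) if self._events else 0.0

    def should_alert(self) -> bool:
        # Alert only when the rate is strictly above the threshold.
        return len(self._events) >= self.min_requests and self.error_rate() > self.threshold
```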

Throughput shows the load your app is handling, while resource utilisation (CPU, memory, and network) indicates when it's time to scale. With APIs accounting for 83% of web traffic [14], it's important to track both successful and failed API calls. Identify peak usage periods, and use distributed tracing with clear tags to find problems tied to specific user groups or app versions [27].

User satisfaction metrics, such as Apdex scores, connect technical performance to business impact. Dynamic Apdex thresholds based on historical trends can give a clearer picture during peak periods and reduce false alerts.

Database performance is another key area to monitor. Many scaling problems stem from the database rather than server capacity [27], so spotting them early pays off. Analysing latency by request type also helps you fine-tune specific operations. For example, checkout may need different scaling thresholds than product browsing.
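The standard Apdex score counts responses at or below a threshold T as satisfied, those above T and up to 4T as tolerating (each worth half), and slower ones as frustrated, then divides by the total. The sketch below computes it and, as one hypothetical way to make T dynamic, derives it from the median of historical response times; other percentiles or seasonal baselines work too.

```python
import statistics
from typing import Sequence


def apdex(response_times_ms: Sequence[float], threshold_ms: float) -> float:
    """Apdex = (satisfied + tolerating / 2) / total."""
    if not response_times_ms:
        raise ValueError("need at least one sample")
    satisfied = sum(1 for t in response_times_ms if t <= threshold_ms)
    tolerating = sum(1 for t in response_times_ms
                     if threshold_ms < t <= 4 * threshold_ms)
    return (satisfied + tolerating / 2) / len(response_times_ms)


def dynamic_threshold(historical_ms: Sequence[float]) -> float:
    # Hypothetical choice: use the historical median as T.
    return statistics.median(historical_ms)
```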

Update Scaling Thresholds Based on Data

Once you have the data, use it to refine your scaling thresholds:

- Adjust thresholds regularly based on actual usage to improve both performance and cost efficiency.

- Fine-tune cooldown periods, using short delays to avoid scaling too early and incurring extra costs [13].

- Add capacity gradually to avoid sudden cost spikes and keep spending low [28].

- Incorporate business metrics. For instance, if your app handles 1,000 orders per minute at peak, your scaling triggers should align with that figure.
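To tie scaling to a business metric, you can convert expected order volume into an instance count. The per-instance capacity, 20% headroom, and two-instance floor below are hypothetical figures you would replace with numbers from your own load tests.

```python
def instances_for_orders(orders_per_minute: int, orders_per_instance_per_minute: int,
                         headroom_pct: int = 20, min_instances: int = 2) -> int:
    """Instances needed to serve the order rate plus a safety margin."""
    if orders_per_instance_per_minute <= 0:
        raise ValueError("per-instance capacity must be positive")
    # Integer ceiling division avoids floating-point rounding surprises.
    demand = orders_per_minute * (100 + headroom_pct)
    capacity = orders_per_instance_per_minute * 100
    needed = -(-demand // capacity)
    return max(min_instances, needed)
```

With 1,000 orders per minute at peak and an assumed 150 orders per minute per instance, this gives 1,000 × 1.2 / 150 = 8 instances.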

Real-world examples show the impact of these changes. A large online retailer adjusted its auto-scaling settings for major sales events, cutting compute costs by 65% while maintaining 99.99% uptime [28]. A financial services company tuned its thresholds to cut compute costs by 40% while still processing data in real time [28]. These cases show how continuous monitoring and timely adjustments can significantly improve both performance and savings.

Review your scaling strategy every month. Seasonal shifts, marketing campaigns, or company growth can change usage patterns. Analyse your spending to find improvements, and test new approaches on a small part of your infrastructure before rolling them out everywhere.

To stay on top of this, generate automated reports covering scaling activity, spending, and performance. These reports let you see quickly whether your strategy is working and saving money.

Conclusion: Building Cost-Effective Stateless Apps

Optimising scaling strategies for stateless apps improves performance and significantly reduces costs. By externalising state, right-sizing auto-scaling, distributing load intelligently, using caching and CDNs, and monitoring carefully, you can build stateless apps that perform well at low cost.

This approach reduces complexity and delivers measurable results. For example, in 2025 an online retailer made its pages load 40% faster, and a financial services firm cut its spending by 25%, by improving auto-scaling and data handling [29].

Scalability plays a critical role in maintaining both performance and financial control. A scalable cloud environment allows organisations to meet demand efficiently, avoid unnecessary spending, and align infrastructure with business needs. - Juliana Costa Yereb, Senior FinOps Specialist, ProsperOps [30]

Stateless apps are easy to modify, which makes them simple to scale horizontally and resilient to failure [3]. Applying all five strategies together creates a foundation that adapts well to real-world demand.

Auto-scaling, in particular, keeps costs matched to current demand, making spending more predictable and reducing energy use [2][31].

For organisations that want these benefits, working with specialists can speed up the process. Hokstad Consulting, for example, helps teams streamline their DevOps practices, reduce cloud costs, and lower hosting expenses. It has cut clients' cloud costs by 30–50% while improving performance. Its services range from DevOps transformation to cloud cost optimisation, strategic migrations, and custom automation [32].

Our proven optimisation strategies reduce your cloud spending by 30–50% whilst improving performance through right-sizing, automation, and smart resource allocation. - Hokstad Consulting [32]

These principles show why continuous optimisation is key to long-term success. Improving existing systems is often cheaper and less disruptive than a full rebuild [29]. By applying these practices consistently, companies can keep their systems performing well, resilient, and cost-effective as they grow.

FAQs

Why does separating state from application logic help with scaling and cost?

Separating state from your application logic makes large-scale growth much easier. Once the app no longer depends on internal state, you can add or remove resources as needed, so it handles large swings in workload without disruption.

This approach also cuts costs. Because state is managed externally, you don't need to overprovision resources and pay for capacity you don't use. Instead, you can use pay-as-you-go pricing and pay only for the resources you actually need.

How do caching and CDNs improve app performance?

Caching and CDNs work together to speed up apps by reducing latency and easing the load on origin servers. CDNs distribute content across many servers in different locations, so users receive data from a nearby server, which makes responses faster.

Caching builds on this by storing frequently used content, such as images, videos, and scripts, on those nearby servers. Users can then load static content quickly without repeatedly connecting to the origin server. Together, caching and CDNs use resources more efficiently, reduce bandwidth usage, and absorb large traffic spikes. The result is a faster, smoother, and more reliable experience for everyone.