Zero Trust security is essential for securing multi-cloud environments where traditional network-based defences fall short. This guide explains how Zero Trust, combined with service mesh technology, ensures secure communication between services by focusing on identity verification, granular access control, and encryption. Key highlights:

- Zero Trust Basics: "Never trust, always verify". Access decisions are based on identity, not network location.

- Challenges in Multi-Cloud: Flat Kubernetes networks and transient IPs make traditional security ineffective.

- Service Mesh Role: Sidecar proxies enable mutual TLS (mTLS), microsegmentation, and policy enforcement without altering application code.

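- Implementation Steps: Separate the control and data planes, adopt cross-cloud workload identities, enforce default-deny policies, and roll out strict mTLS gradually.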

- Performance Tips: Reduce latency with Ambient Mesh architecture, optimise resource usage, and monitor with OpenTelemetry.

Zero Trust in a service mesh provides scalable, consistent security across cloud platforms like AWS, Azure, and GCP, reducing risks of breaches and lateral movement.


Core Components of Zero Trust in Multi-Cloud Service Mesh

Implementing Zero Trust in a multi-cloud service mesh revolves around three key pillars: verifying identity, controlling access, and containing threats. These elements shift security away from traditional network-based assumptions, focusing instead on cryptographic validation and explicit policy enforcement.

Identity Verification and Authentication

Every service within the mesh must have a cryptographic identity that can be verified. This is achieved using SPIFFE-compliant X.509 certificates, issued and managed by a trusted Certificate Authority (CA) [12][13]. These certificates are automatically rotated - often every 12 hours - to minimise the damage if a private key is compromised [3][8].
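As a rough illustration of what a verifiable workload identity looks like, the sketch below parses a SPIFFE ID and decides when a short-lived certificate is due for renewal. The `WorkloadIdentity` type, the function names, the `example.org` trust domain, and the renew-at-half-lifetime rule are all assumptions made for this example; a real mesh handles this inside its CA and proxies.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from urllib.parse import urlparse


@dataclass(frozen=True)
class WorkloadIdentity:
    """Hypothetical container for the two parts of a SPIFFE ID."""
    trust_domain: str
    path: str


def parse_spiffe_id(uri: str) -> WorkloadIdentity:
    """Parse a SPIFFE ID of the form spiffe://<trust-domain>/<path>."""
    parsed = urlparse(uri)
    if parsed.scheme != "spiffe" or not parsed.netloc or not parsed.path.strip("/"):
        raise ValueError(f"not a valid SPIFFE ID: {uri!r}")
    return WorkloadIdentity(trust_domain=parsed.netloc, path=parsed.path)


def needs_rotation(issued_at: datetime, lifetime: timedelta, now: datetime,
                   renew_fraction: float = 0.5) -> bool:
    """Return True once the certificate has used renew_fraction of its lifetime.

    Renewing halfway through the lifetime is an assumption for this sketch,
    not a rule taken from any particular mesh.
    """
    return now >= issued_at + lifetime * renew_fraction
```

With a 12-hour certificate lifetime, `needs_rotation` starts returning `True` six hours after issuance, which leaves a comfortable margin before expiry.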

Mutual TLS (mTLS) plays a critical role here, authenticating both endpoints through these certificates and ensuring all connections are encrypted. Enforcing strict mTLS mode blocks any plain-text traffic, while exchanging long-lived external tokens for scoped, short-lived internal tokens at the mesh edge guards against token replay [9][10]. Data plane components in Cloud Service Mesh use FIPS 140-2 validated encryption modules, meeting federal security standards [3]. Google Cloud Documentation highlights the importance of this approach:

"In modern microservice-based architectures... these steps support the zero trust principle, in which all network traffic is assumed to be at risk, regardless of whether the traffic originates inside or outside the network" [7].

Once secure identities are in place, the next step is implementing precise access controls.

Access Control and Policy Enforcement

With identity verified, authorisation policies define what each service is allowed to do. These policies enforce the principle of least privilege through layered controls, such as IP/port restrictions at Layers 3/4 and application-specific rules at Layer 7. Role-Based Access Control (RBAC) and Attribute-Based Access Control (ABAC) mechanisms are used to enforce these rules [7][11][2]. Adopting a default-deny approach ensures that no unauthorised traffic is permitted [10][7].
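To make the default-deny idea concrete, here is a minimal sketch of a policy check that combines a Layer 4 condition (port) with Layer 7 conditions (HTTP method and path) and keys everything on the caller's verified identity. The `AllowRule` and `Request` types and the identities used are hypothetical; real meshes express this through their own authorisation policy resources.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    source_identity: str   # verified mTLS identity of the caller
    port: int              # Layer 4 condition
    methods: frozenset     # Layer 7 condition: permitted HTTP methods
    path_prefix: str       # Layer 7 condition: permitted URL path prefix


@dataclass(frozen=True)
class Request:
    source_identity: str
    port: int
    method: str
    path: str


def is_allowed(request: Request, rules: list) -> bool:
    """Default deny: a request passes only if one rule matches every field."""
    return any(
        rule.source_identity == request.source_identity
        and rule.port == request.port
        and request.method in rule.methods
        and request.path.startswith(rule.path_prefix)
        for rule in rules
    )


# Example: the frontend may only read orders; everything else is denied.
FRONTEND = "spiffe://example.org/ns/shop/sa/frontend"
RULES = [AllowRule(FRONTEND, 8080, frozenset({"GET"}), "/orders")]
```

Because the function returns `False` whenever no rule matches, an empty rule list denies everything, which is the least-privilege starting point described above.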

Such robust access controls are the foundation for microsegmentation, which helps isolate threats across multi-cloud environments.

Microsegmentation for Network Security

In dynamic multi-cloud setups, identity-based microsegmentation is crucial. It ensures consistent security policies even as containers migrate, limiting the impact of breaches by preventing lateral movement [3][4][11]. As noted in Cloud Service Mesh documentation:

"Traditionally, micro-segmentation that uses IP-based rules has been used to mitigate insider risks. However, the adoption of containers, shared services, and distributed production environments spread across multiple clouds makes this approach harder to configure and even harder to maintain" [3][4].

Emerging architectures like Ambient Mesh address these challenges by separating Layer 4 security (managed by ztunnels) from Layer 7 security (handled by waypoint proxies). This approach can reduce infrastructure costs by up to 92% compared to traditional sidecar deployments [11]. Applying policies at the earliest enforcement point in the traffic flow further strengthens security by minimising the risk of bypass [11].

How to Implement Zero Trust with Service Mesh

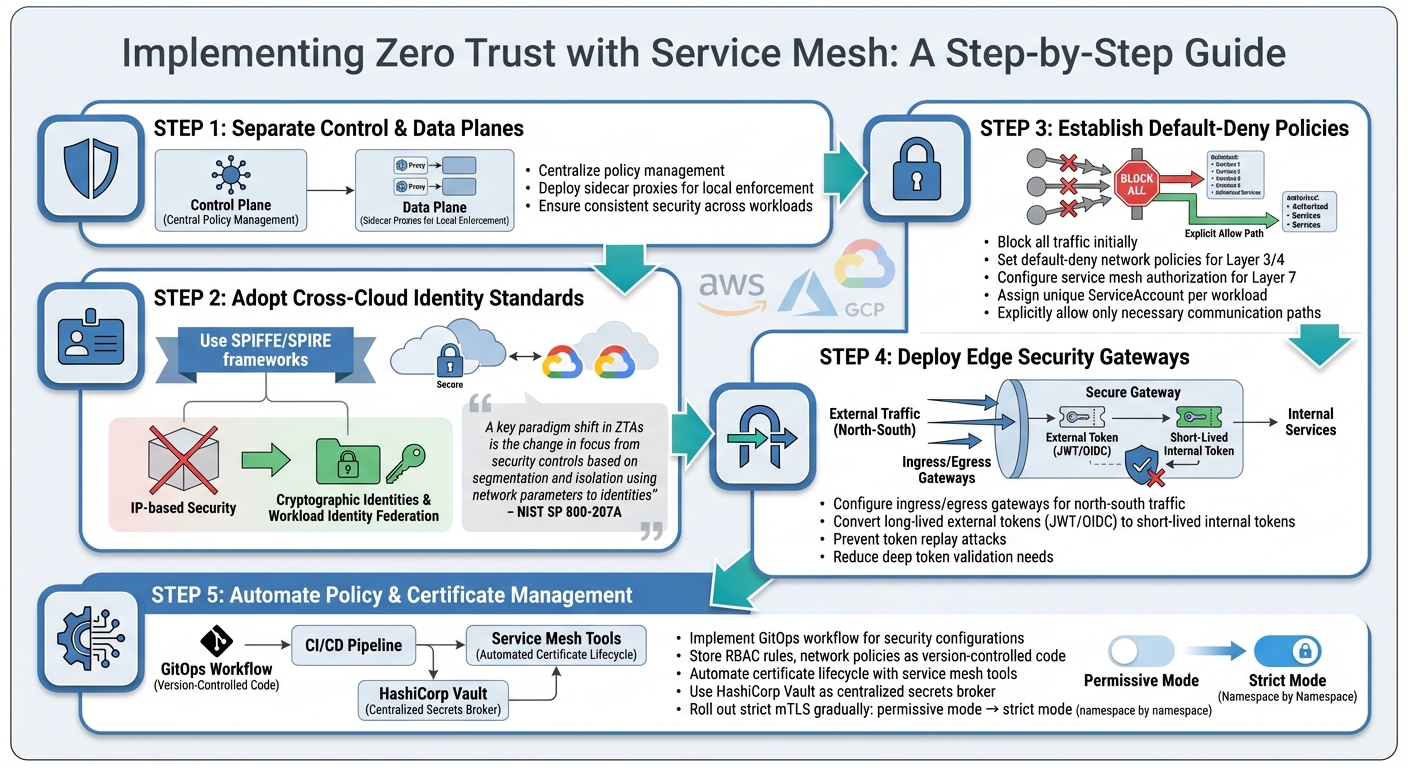

Figure: Zero Trust Implementation Steps for Multi-Cloud Service Mesh

To implement Zero Trust, organisations need to move away from traditional network-based controls and focus on identity-driven enforcement. This requires both technical adjustments and organisational commitment.

Designing for Zero Trust in Multi-Cloud

When applying Zero Trust principles across multi-cloud environments, several key steps can guide implementation.

Start by separating the control plane from the data plane. This means centralising policy management while allowing sidecar proxies to enforce those policies at the application level. This division ensures consistent security even as workloads shift between environments.

Next, adopt identity standards that work across clouds. Instead of relying on IP addresses or subnets, use frameworks like SPIFFE/SPIRE or cloud-native tools such as Workload Identity Federation. As NIST SP 800-207A highlights:

"A key paradigm shift in ZTAs is the change in focus from security controls based on segmentation and isolation using network parameters (e.g., Internet Protocol (IP) addresses, subnets, perimeter) to identities." [5]

Establish a default-deny policy by blocking all traffic initially and then explicitly allowing only necessary communication paths. For Kubernetes, this involves setting default-deny network policies for Layer 3/4 isolation and using service mesh authorisation policies for Layer 7 controls. Assign a unique ServiceAccount to each workload to provide precise identity and permissions.
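The Kubernetes side of this step can be sketched as two small manifests, built here as Python dictionaries and serialised to JSON (which `kubectl apply -f` accepts alongside YAML). The function names, the `default-deny-all` policy name, and the `payments` namespace are illustrative assumptions.

```python
import json


def default_deny_network_policy(namespace: str) -> dict:
    """NetworkPolicy that selects every pod and permits no ingress or egress."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        # An empty podSelector matches all pods in the namespace; listing both
        # policy types without any rules blocks traffic in both directions.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }


def service_account(name: str, namespace: str) -> dict:
    """Dedicated ServiceAccount so the workload gets its own identity."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {"name": name, "namespace": namespace},
    }


if __name__ == "__main__":
    for manifest in (default_deny_network_policy("payments"),
                     service_account("payments-api", "payments")):
        print(json.dumps(manifest, indent=2))
```

Note that denying all egress also blocks DNS lookups, so an explicit allow rule for the cluster DNS service is normally one of the first paths to open.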

For edge security, deploy ingress and egress gateways to manage north–south traffic. At ingress points, convert long-lived external tokens (like JWT or OIDC) into short-lived, scoped internal tokens. This prevents token replay attacks and reduces the need for deep token validation within the mesh.
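The token exchange at the ingress gateway might look roughly like the sketch below. It assumes the external token has already been validated and only shows the second half: minting a short-lived, audience-scoped internal token and checking it downstream. The HMAC-signed format, the function names, and the five-minute lifetime are simplifications chosen for this example; production gateways typically issue signed JWTs with asymmetric keys.

```python
import base64
import hashlib
import hmac
import json


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def mint_internal_token(subject: str, audience: str, scopes: set,
                        key: bytes, now: int, ttl_seconds: int = 300) -> str:
    """Issue a short-lived token bound to one audience and a set of scopes."""
    claims = {"sub": subject, "aud": audience,
              "scope": sorted(scopes), "exp": now + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).digest()
    return f"{_b64(payload)}.{_b64(signature)}"


def verify_internal_token(token: str, audience: str, key: bytes, now: int) -> dict:
    """Check signature, audience, and expiry; return the claims if all pass."""
    payload_b64, _, signature_b64 = token.partition(".")
    payload = base64.urlsafe_b64decode(payload_b64)
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, base64.urlsafe_b64decode(signature_b64)):
        raise PermissionError("bad signature")
    claims = json.loads(payload)
    if claims["aud"] != audience:
        raise PermissionError("wrong audience")
    if now >= claims["exp"]:
        raise PermissionError("token expired")
    return claims
```

Binding the token to a single audience means that a token captured on its way to one service cannot be replayed against another, and the short expiry limits how long it is useful at all.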

Dynamic Policy Management and Automation

Manual policy updates are impractical in a multi-cloud setup. A Zero Trust Architecture relies on a centralised Policy Engine and Policy Administrator to handle access decisions and enforcement across distributed Policy Enforcement Points. Service meshes often use constructs like 'intentions' or 'traffic permissions' to manage service-to-service communication.

Automate certificate lifecycle management using service mesh tools. Marco Palladino, CTO and Co-Founder of Kong, describes this as giving services a 'virtual passport' to prove their identity [15]. Tools like HashiCorp Vault can act as a centralised secrets broker, simplifying this process.

Adopting a GitOps workflow can further enhance automation. By storing security configurations (e.g., RBAC rules, network policies, authorisation policies) as code in version-controlled repositories, you can prevent configuration drift and quickly roll back changes if needed. Tools like Terraform or Jenkins can help maintain consistent security settings across multiple cloud providers.
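Drift detection, the core check behind a GitOps workflow, can be sketched as a comparison between the configuration stored in Git and the configuration observed in a cluster. The `fingerprint` and `find_drift` helpers and the dictionary-of-resources layout are assumptions for this example; GitOps controllers implement the same idea with far more nuance.

```python
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Stable hash of a config, independent of key order."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(desired: dict, live: dict) -> dict:
    """Map each drifted resource name to 'missing', 'unexpected', or 'modified'.

    desired: resources as declared in the Git repository.
    live:    resources as currently observed in the cluster.
    """
    drift = {}
    for name in desired.keys() | live.keys():
        if name not in live:
            drift[name] = "missing"
        elif name not in desired:
            drift[name] = "unexpected"
        elif fingerprint(desired[name]) != fingerprint(live[name]):
            drift[name] = "modified"
    return drift
```

An empty result means the cluster matches the repository; anything else is either reconciled automatically or flagged for review, depending on how strictly the team wants to enforce the source of truth.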

When rolling out strict mTLS, do so gradually. Start with permissive mode and transition to strict mode one namespace at a time. This phased approach allows for troubleshooting and ensures compatibility before enforcing stricter policies organisation-wide.
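A phased rollout of this kind can be planned as data before anything is applied. The sketch below assumes an Istio-based mesh, where a namespace-wide `PeerAuthentication` resource sets the mTLS mode; the helper names and namespace list are hypothetical, and other meshes use different resources for the same purpose.

```python
def peer_authentication(namespace: str, mode: str) -> dict:
    """Build a namespace-wide Istio PeerAuthentication manifest."""
    if mode not in {"PERMISSIVE", "STRICT"}:
        raise ValueError(f"unsupported mTLS mode for this rollout: {mode}")
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": mode}},
    }


def rollout_plan(namespaces: list, migrated: set) -> list:
    """STRICT for namespaces already verified, PERMISSIVE for the rest."""
    return [
        peer_authentication(ns, "STRICT" if ns in migrated else "PERMISSIVE")
        for ns in namespaces
    ]
```

Calling `rollout_plan(["payments", "orders"], {"payments"})` yields a strict policy for `payments` and a permissive one for `orders`; adding a namespace to the `migrated` set once its traffic has been checked moves it to strict mode on the next apply.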

With policies automated, maintaining system performance becomes a critical focus.

Managing Performance and Latency

Once policies are in place, optimising performance is essential for smooth multi-cloud operations.

Security enforcement can add overhead, but careful architecture can minimise this. Traditional sidecars often create multiple proxy hops, but Ambient Mesh architectures address this by using a shared ztunnel at the node level for Layer 4 security (mTLS). Layer 7 processing is handled by waypoint proxies only when needed, reducing service mesh infrastructure costs by up to 92% [18].

Distribute control and data plane tasks across cloud zones. This ensures efficient policy propagation while maintaining local enforcement. Set a minimum TLS version for mesh traffic so that proxies never negotiate outdated cipher suites, which keeps communication secure without the extra load of legacy cryptography. Offload observability tasks by exporting metrics and logs to external platforms like Prometheus or Grafana, which reduces strain on local resources.

Regularly audit the resource usage of sidecars, adjusting CPU and memory limits as necessary to balance security and performance. Observability tools can also help analyse service-network patterns, identifying and resolving bottlenecks. Speed of deployment matters as well: research shows that AKS clusters may face probing attempts within 18 minutes of deployment, highlighting the importance of swift yet efficient security measures [17].

Monitoring, Compliance, and Optimisation

Observability and Monitoring

When implementing Zero Trust policies, maintaining security and performance across multi-cloud environments requires strong monitoring practices. Instead of relying on IP addresses, Zero Trust monitoring focuses on telemetry based on verified identities. Access logs now include the mutual TLS (mTLS) identity of each client, making it possible to pinpoint which workload accessed a service - even in cases where deployments are temporary or spread across multiple clouds [3][4]. This approach ensures continuous identity verification, a key aspect of the Zero Trust model, even in distributed cloud setups.

OpenTelemetry has become the go-to standard for gathering traces, metrics, and logs across platforms like AWS, Azure, and GCP. Unified collectors configured with mTLS encrypt telemetry data during transit [19]. Centralised security dashboards provide a comprehensive view of policy settings, Kubernetes Network Policies, and the health of the mesh across clusters [10]. For multi-cloud environments, best practices suggest retaining logs for 90 days, metrics for 365 days, traces for 30 days, and audit logs for 2,555 days (roughly seven years) [19]. Real-time alerts should flag any unusual activity, such as repeated failed logins or attempts to escalate privileges. Meanwhile, memory limiters and batch processors in telemetry collectors help keep resource usage in check [19].
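The retention periods and the failed-login alert can be expressed as a couple of small checks. The retention values below come from the text; the function names, the five-minute window, and the threshold of five failures are assumptions picked for illustration.

```python
from datetime import datetime, timedelta, timezone

# Retention periods in days, as listed in the paragraph above.
RETENTION_DAYS = {"logs": 90, "metrics": 365, "traces": 30, "audit": 2555}


def is_expired(kind: str, recorded_at: datetime, now: datetime) -> bool:
    """True when a record is older than the retention period for its kind."""
    return now - recorded_at > timedelta(days=RETENTION_DAYS[kind])


def should_alert(failed_login_times: list, now: datetime,
                 window: timedelta = timedelta(minutes=5),
                 threshold: int = 5) -> bool:
    """True when at least `threshold` failed logins fall inside the window.

    The window and threshold defaults are illustrative, not recommendations.
    """
    recent = [t for t in failed_login_times
              if timedelta(0) <= now - t <= window]
    return len(recent) >= threshold
```

Keeping the retention table in one place makes it straightforward to apply the same periods to every cloud's storage backend instead of configuring each one by hand.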

Meeting Compliance and Regulatory Requirements

In the UK, organisations are expected to follow NCSC Principle 6, which calls for monitoring of users, devices, and services to ensure system health and configuration integrity [20]. Zero Trust service meshes can support regulations like GDPR (Articles 32–34), SOC 2 (Controls CC6.1–6.3), and HIPAA Technical Safeguards by enabling precise access control and maintaining detailed audit trails [19]. For sectors with strict regulatory demands, the data plane should use FIPS 140-2 validated encryption modules for all communications [3][4]. Switching from 'auto mTLS' mode to a strict 'mTLS only' mode ensures encryption-in-transit compliance. Managed Hardware Security Modules (HSMs) backing Certificate Authority services provide additional security for industries requiring custom root certificates [3][4].

Automated key rotation every 90 days, along with AES-256-GCM encryption for stored data and TLS 1.3 for data in transit, further strengthens security [19]. To protect sensitive monitoring traffic, private endpoints should be used to ensure telemetry data does not traverse the public internet between cloud providers [19]. Once regulatory and encryption standards are met, organisations can focus on improving performance within the mesh.

Service Level Objectives and Continuous Improvement

To measure performance in a secure mesh, organisations should monitor the four 'Golden Signals': latency, traffic volume, errors, and saturation [18]. These metrics should be accessible through unified dashboards that span all cloud environments, ensuring Service Level Objectives are consistently met [19]. Locality-aware load balancing can minimise cross-cloud data transfer costs and reduce latency by keeping traffic within the same region or cloud provider whenever possible [16].
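As a simple illustration, the four signals can be derived from a window of request samples plus a utilisation reading. The `RequestSample` type, the choice of the 95th percentile for latency, treating HTTP 5xx as errors, and using CPU utilisation as the saturation measure are all assumptions for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RequestSample:
    latency_ms: float
    status: int  # HTTP status code


def golden_signals(samples: list, window_seconds: float,
                   cpu_utilisation: float) -> dict:
    """Summarise latency, traffic, errors, and saturation for one window."""
    if not samples:
        raise ValueError("need at least one sample")
    latencies = sorted(s.latency_ms for s in samples)
    # Nearest-rank 95th percentile, using integer maths for ceil(0.95 * n).
    rank = (95 * len(latencies) + 99) // 100
    errors = sum(1 for s in samples if s.status >= 500)
    return {
        "latency_p95_ms": latencies[rank - 1],
        "traffic_rps": len(samples) / window_seconds,
        "error_rate": errors / len(samples),
        "saturation": cpu_utilisation,
    }
```

Computing the same summary per cloud and per region makes it easier to see whether a Service Level Objective is being missed everywhere or only along one cross-cloud path.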

Emerging architectures, such as Istio's Ambient Mesh, eliminate the need for sidecars, reducing operational complexity and enhancing performance [18]. Automated policy controllers can validate and audit mesh configurations in real time, helping to avoid accidental misconfigurations [14]. By adopting a GitOps approach, where security settings are stored as version-controlled code, teams can quickly roll back to previous configurations if needed, maintaining alignment with the intended 'source of truth' [10][14].

Conclusion: Zero Trust in Multi-Cloud Service Mesh

Modern multi-cloud environments demand a shift from traditional perimeter-based security to a model of continuous identity verification. As Ashher Syed from HashiCorp explains:

"The move to zero trust is not binary; it's an ongoing approach that requires a fundamental shift to your architecture" [1].

By embracing Zero Trust principles within a service mesh, organisations can maintain consistent security across platforms, whether they're operating on AWS, Azure, GCP, or even on-premises data centres.

The benefits are clear: organisations leveraging multi-cloud strategies are 1.6 times more likely to exceed performance goals [6], and those embedding security-by-design principles report 65% fewer security incidents [21]. The adoption of service mesh technology has surged by 42% year-on-year, largely due to security needs, with 79% of organisations using it specifically for mutual TLS (mTLS) authentication [21].

These figures highlight the importance of practical, ongoing security measures. Zero Trust isn't a one-time fix - it's a continuous process. Implementing strict mTLS, automating certificate rotations, and enforcing least-privilege access are key steps to securing service-to-service communications. Notably, mTLS adds only 3–5 ms of latency per request [21], making it an efficient choice for enhanced security.

For organisations navigating the complexities of multi-cloud security, DevOps transformation, and cost management, Hokstad Consulting provides tailored solutions. Their expertise spans DevOps automation, strategic cloud migration, and custom development, ensuring that Zero Trust principles are seamlessly integrated into cloud infrastructure while aligning with business goals and controlling costs across diverse environments.

This guide illustrates the critical role of integrating Zero Trust with a multi-cloud service mesh to achieve robust and adaptable security.

FAQs

How does a Zero Trust model improve security in multi-cloud environments?

Zero Trust strengthens security in multi-cloud environments by following the principle of "never trust, always verify". This means that every user, device, and application must be continuously authenticated and authorised before accessing any resource.

The core elements of this approach include rigorous identity verification, fine-tuned access controls, and ongoing monitoring of activity. This approach helps to lower the chances of unauthorised access, limits potential attack vectors, and stops threats from spreading within the network. On top of that, it ensures that all communications between workloads are encrypted and mutually authenticated, maintaining a strong security posture across various cloud platforms.

How does a service mesh support Zero Trust security in multi-cloud environments?

A service mesh plays an essential role in applying Zero Trust security within multi-cloud environments. It continuously ensures that every interaction between services is authenticated, authorised, and encrypted. This aligns perfectly with the key principles of Zero Trust, such as verifying identities and securing data access at every step.

By overseeing service-to-service communication policies and enforcing strict security measures, a service mesh helps block unauthorised access and minimises the chances of breaches in complex cloud setups. Its capability to offer detailed control and visibility keeps security strong across all cloud platforms.

How can organisations minimise performance issues when implementing Zero Trust in a multi-cloud environment?

To avoid performance hiccups when implementing Zero Trust in a multi-cloud setup, it's crucial to fine-tune your service mesh configuration. Opting for a lightweight service mesh can help minimise both latency and resource usage. On top of that, using latency-aware routing, strategically positioning services in different regions, and reserving Layer 7 policy processing for the services that need it, while keeping mutual TLS (mTLS) enforced throughout, can make a noticeable difference in performance.

Equally important is continuous monitoring. Keeping an eye on potential bottlenecks and regularly tweaking policies can help maintain a smooth system. By routinely evaluating how security measures affect latency and throughput, you can ensure your setup stays efficient without sacrificing security. Adopting an 'assume-breach' mindset and evolving your security practices over time is key to striking the right balance between strong protection and system performance.