Vulnerability scanning policies must be reviewed regularly to stay effective. Threats evolve quickly, and outdated policies can leave systems exposed. Here's what you need to know:

- Compliance standards like PCI DSS require quarterly scans, while others like NIST SP 800-53 allow risk-based schedules.

- High-risk systems (e.g., internet-facing assets) often need daily or weekly scans, whereas lower-risk systems might only need monthly or quarterly checks.

- Event-based updates are critical after major changes such as cloud migrations or infrastructure expansions, and whenever significant new vulnerabilities are disclosed.

- Automation can enable continuous monitoring and real-time updates, reducing manual effort and response delays.

Organisations should align review frequency with their risk profile, compliance needs, and infrastructure changes. Regular updates ensure policies remain relevant and effective in identifying and mitigating security risks.


Industry-Standard Review Frequencies

[Figure: Vulnerability Scanning Frequency Requirements by Compliance Framework]

Compliance Framework Requirements

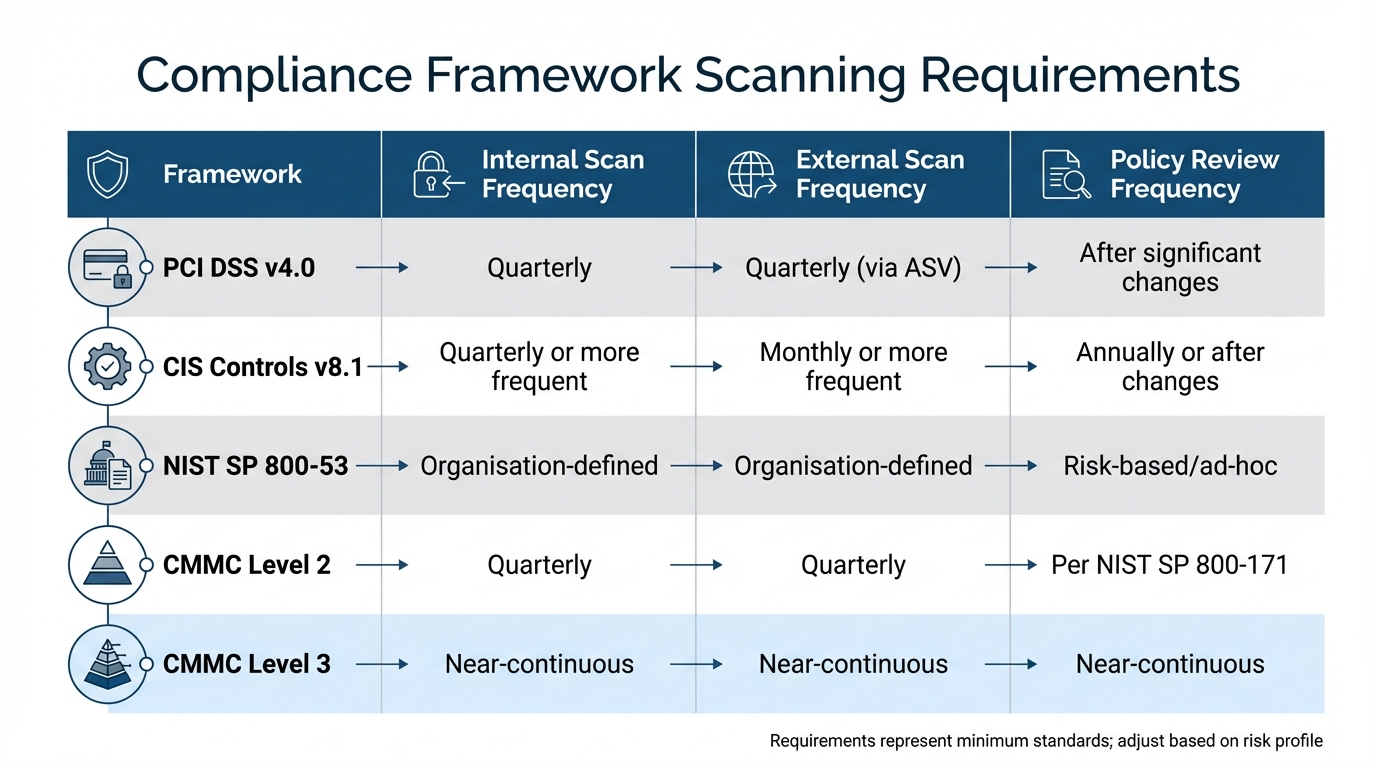

Compliance frameworks establish baseline scanning and review timelines to ensure consistent security practices. For instance, PCI DSS v4.0 requires quarterly scans (no more than 90 days apart) for both internal and external assets, and it applies to all organisations that store, process, or transmit cardholder data [8][9].

CIS Controls v8.1 suggests quarterly automated scans for internal assets and monthly scans for external ones [5]. Additionally, it recommends reviewing the vulnerability management process annually or whenever significant changes occur within the organisation [5]. Meanwhile, NIST SP 800-53 takes a flexible approach, allowing organisations to define their own scanning schedules based on risk assessments and security categorisation [10][2].

For organisations pursuing CMMC compliance, Level 2 requires quarterly scans, whereas Level 3 demands near-continuous monitoring [2]. HIPAA, on the other hand, does not specify exact intervals but mandates regular technical evaluations as part of an overarching risk management strategy [2].

Here’s a quick comparison of these requirements:

| Framework | Internal Scan Frequency | External Scan Frequency | Policy Review Frequency |
|---|---|---|---|
| PCI DSS v4.0 | Quarterly [9] | Quarterly (via ASV) [9] | After significant changes [9] |
| CIS Controls v8.1 | Quarterly or more frequent [5] | Monthly or more frequent [5] | Annually or after changes [5] |
| NIST SP 800-53 | Organisation-defined [10] | Organisation-defined [10] | Risk-based/ad-hoc [10] |
| CMMC Level 2 | Quarterly [2] | Quarterly [2] | Per NIST SP 800-171 [2] |
| CMMC Level 3 | Near-continuous [2] | Near-continuous [2] | Near-continuous [2] |

While these frameworks provide a foundation, organisations often adjust based on their own risk assessments.
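
One way to put the comparison to work is to encode it as data and let a script flag overdue scans. The following is a minimal sketch, not a reference implementation: it assumes "quarterly" means 90 days and "monthly" means 30 days, and the names `MAX_SCAN_GAP_DAYS`, `strictest_gap`, and `is_overdue` are illustrative only.

```python
from datetime import date

# Maximum days between scans, taken from the comparison table.
# None means the framework leaves the interval to the organisation.
# Assumption: quarterly = 90 days, monthly = 30 days.
MAX_SCAN_GAP_DAYS = {
    "PCI DSS v4.0": {"internal": 90, "external": 90},
    "CIS Controls v8.1": {"internal": 90, "external": 30},
    "NIST SP 800-53": {"internal": None, "external": None},
    "CMMC Level 2": {"internal": 90, "external": 90},
}


def strictest_gap(frameworks, scope):
    """Return the tightest scan interval in days across frameworks, or None."""
    gaps = [MAX_SCAN_GAP_DAYS[name][scope] for name in frameworks]
    defined = [gap for gap in gaps if gap is not None]
    return min(defined) if defined else None


def is_overdue(last_scan, today, frameworks, scope):
    """True if the last scan is older than the strictest applicable interval."""
    gap = strictest_gap(frameworks, scope)
    if gap is None:
        return False  # no fixed interval; the organisation must define one
    return (today - last_scan).days > gap
```

An organisation subject to several frameworks would pass all of them and follow the tightest interval; where every applicable framework is organisation-defined, the function returns `None` and the schedule has to come from a risk assessment.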

Adjusting Review Frequency Based on Risk

Compliance requirements are a starting point, but tailoring review schedules to an organisation’s risk profile is essential. As Kathy Collins from Secure Ideas explains:

"For most environments, monthly scanning is considered a reasonable baseline, with more frequent assessments for high-impact systems." [2]

Internet-facing assets, for example, often need daily or weekly scans, while lower-risk internal systems might only require monthly or quarterly checks [2][5].

The type of data an organisation handles also influences the frequency of reviews. Those managing sensitive information, such as credit card details, social security numbers, or health records, must adhere to stricter schedules than those dealing with less critical data [11]. Similarly, systems vital to business operations - like payment platforms or customer-facing applications - warrant more frequent policy reviews compared to non-essential infrastructure.

Organisations should also conduct out-of-cycle reviews following major infrastructure changes. Examples include expanding internet-exposed assets, migrating to cloud-based environments, or altering the architecture of web applications [4][9]. Increasingly, businesses are moving toward continuous vulnerability management to minimise exposure windows, especially as attackers exploit known vulnerabilities at an alarming pace [8][2].
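
The risk factors described in this section can be turned into a simple tiering rule. The sketch below is an assumption-laden illustration: the `Asset` fields and the exact tiers (1, 7, and 30 days) are hypothetical choices loosely based on the daily, weekly, and monthly figures above, and should be replaced with values from your own risk assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    internet_facing: bool = False
    sensitive_data: bool = False      # e.g. card details, health records
    business_critical: bool = False   # e.g. payment platforms


def scan_interval_days(asset: Asset) -> int:
    """Suggest a scan interval in days from a few coarse risk factors."""
    high_impact = asset.sensitive_data or asset.business_critical
    if asset.internet_facing:
        return 1 if high_impact else 7  # daily or weekly for exposed assets
    if high_impact:
        return 7                        # tighter than the monthly baseline
    return 30                           # monthly baseline for everything else
```

For example, `scan_interval_days(Asset("shop", internet_facing=True, sensitive_data=True))` suggests daily scans, while an internal asset with no flags set falls back to the monthly baseline.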

How to Review Vulnerability Scanning Policies

Annual and Bi-Annual Full Reviews

Keeping vulnerability management policies up to date is crucial for addressing new threats and aligning with organisational goals. According to CIS Controls v8.1, these policies should be reviewed at least once a year [5][12]. Some organisations, however, prefer twice-yearly (bi-annual) reviews to catch potential issues sooner. These reviews ensure that scanning schedules, remediation timelines, and asset coverage match the current risk environment.

Policies must also be updated immediately after major changes, such as moving services online or adopting cloud infrastructure [4][1]. Third-party penetration tests can add an extra layer of assurance, often uncovering gaps that internal assessments might overlook [4]. Regular updates like these help organisations stay ahead of evolving threats.

These reviews also pave the way for using automation to maintain compliance more effectively.

Using Automation and Real-Time Monitoring

Automation can transform vulnerability management by enabling continuous policy reviews. Today’s scanning tools can monitor IT environments in real time, automatically evaluating new assets as they are added [13]. By integrating with deployment pipelines through APIs, these tools can initiate scans whenever code changes are made, ensuring policies remain effective even in fast-paced development cycles [1].

Emerging Threat Scans take this one step further, triggering assessments as soon as new vulnerabilities are disclosed, rather than waiting for the next scheduled review [1]. The CIS highlights the importance of this approach:

"Cyber defenders must operate in a constant stream of new information: software updates, patches, security advisories, threat bulletins, etc. Understanding and managing vulnerabilities has become a continuous activity, requiring significant time, attention, and resources." [14]

Real-time dashboards offer a clear view of how well policies are working, while automated tracking ensures vulnerabilities are resolved within the required timeline [14]. To avoid alert fatigue, organisations should use tools that filter out less critical issues and focus on high-severity vulnerabilities [1].
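
Automated tracking of remediation timelines, combined with severity filtering, might look like the sketch below. The deadlines in `SLA_DAYS` and the shape of each finding (a dict with `id`, `severity`, `detected`, and `fixed`) are hypothetical; substitute the values and fields your policy and scanner actually use.

```python
from datetime import date

# Hypothetical remediation deadlines in days, per severity.
# Severities not listed here are ignored to limit alert noise.
SLA_DAYS = {"critical": 7, "high": 30}


def overdue_findings(findings, today):
    """Return IDs of open high-severity findings that are past their deadline."""
    overdue = []
    for finding in findings:
        limit = SLA_DAYS.get(finding["severity"])
        if limit is None or finding["fixed"]:
            continue  # low-priority or already remediated
        if (today - finding["detected"]).days > limit:
            overdue.append(finding["id"])
    return overdue
```

Restricting the check to the severities listed in `SLA_DAYS` is one simple way to keep attention on high-severity issues; a dashboard could call this function on each refresh.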

Reviews After Remediation and New Discoveries

Policies also need immediate updates following remediation efforts or the discovery of new vulnerabilities. After applying patches, verification scans should be performed to confirm the issue has been fully resolved [4][6]. This step is vital, as patches sometimes fail to address vulnerabilities completely.

If temporary fixes are used, policies should require ongoing monitoring until a permanent solution is implemented [4]. Attackers often exploit vulnerabilities within 7 to 14 days of public disclosure, yet the average organisation takes 74 days to fix critical issues [6].
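
The gap between those two figures can be made concrete with a little arithmetic. This sketch simply subtracts the time to exploit from the time to fix; the function name is illustrative, and the inputs are the figures quoted above rather than new data.

```python
def unpatched_exposure_days(days_to_fix: int, days_to_exploit: int) -> int:
    """Days a system stays exploitable after attackers have a working exploit."""
    return max(0, days_to_fix - days_to_exploit)


AVERAGE_FIX_DAYS = 74  # average time to fix critical issues, as cited above

for exploit_days in (7, 14):
    gap = unpatched_exposure_days(AVERAGE_FIX_DAYS, exploit_days)
    print(f"exploit after {exploit_days} days -> exposed for {gap} days")
```

With the cited numbers, the window in which an exploit exists but the fix does not is roughly 60 to 67 days, which is why verification scans and interim monitoring matter.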

As Intruder warns:

"New vulnerabilities are discovered every day, so even if no changes are deployed to your systems, they could become vulnerable overnight." [1]

Policies and scanning lists should be updated as soon as new CVEs are announced [7]. Subscribing to vendor alerts and threat intelligence services can also help trigger timely policy reviews whenever new advisories are released [4]. This event-driven approach ensures that policies remain relevant in a constantly shifting threat landscape [6].
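
An event-driven trigger of this kind can be sketched as a match between a new advisory and the asset inventory. Everything here is hypothetical: the advisory format, the `(host, product, version)` inventory tuples, and the exact-version matching are simplifications, since real advisories usually describe version ranges.

```python
def hosts_needing_rescan(advisory, inventory):
    """Return hosts running a product version named in the advisory.

    advisory:  dict with "product" and "affected_versions" (a set of strings)
    inventory: iterable of (host, product, version) tuples
    """
    affected = [
        host
        for host, product, version in inventory
        if product == advisory["product"]
        and version in advisory["affected_versions"]
    ]
    return sorted(affected)


# Placeholder data for illustration only; not a real advisory.
advisory = {"id": "EXAMPLE-0001", "product": "examplehttpd",
            "affected_versions": {"2.4.1", "2.4.2"}}
inventory = [
    ("web-01", "examplehttpd", "2.4.1"),
    ("web-02", "examplehttpd", "2.5.0"),
    ("db-01", "exampledb", "2.4.1"),
]
print(hosts_needing_rescan(advisory, inventory))
```

A non-empty result would be the cue for an out-of-cycle scan of those hosts and, if the exposure is significant, a review of the policy itself.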

Adding Vulnerability Scanning to DevOps Workflows

Adding Scans to CI/CD Pipelines

Integrating vulnerability scanning into DevOps workflows strengthens security across the development lifecycle. The best results come from a layered strategy: Static Application Security Testing (SAST) identifies insecure code patterns during the commit phase, Software Composition Analysis (SCA) evaluates third-party libraries during builds, and Dynamic Application Security Testing (DAST) detects runtime vulnerabilities in staging environments.

Automated security gates serve as checkpoints, halting deployments if vulnerabilities exceed acceptable thresholds. For instance, a pipeline might block deployments with any Critical vulnerabilities or more than three High-severity issues. Many organisations start with relaxed thresholds for older applications - failing only on Critical vulnerabilities - and tighten these controls over time as technical debt decreases.
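
The threshold rule in that example (no Critical findings, at most three High) is straightforward to express in code. This is a minimal sketch that assumes the scanner's results have already been reduced to a list of severity strings; `gate_passes` and its parameters are illustrative names, not part of any particular tool.

```python
from collections import Counter
from typing import Iterable, Optional


def gate_passes(
    severities: Iterable[str],
    max_critical: int = 0,
    max_high: Optional[int] = 3,
) -> bool:
    """Return True if the findings are within the allowed thresholds.

    Pass max_high=None to fail only on Critical findings, which mirrors
    the relaxed starting point described for older applications.
    """
    counts = Counter(s.strip().lower() for s in severities)
    if counts["critical"] > max_critical:
        return False
    if max_high is not None and counts["high"] > max_high:
        return False
    return True
```

In a pipeline step, the result would typically be converted into an exit code (non-zero to block the deployment). Tightening the gate over time then only requires lowering `max_high`.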

A shift-left approach brings security into earlier stages of development by using tools like IDE plugins and pre-commit hooks to catch vulnerabilities during coding. Early detection not only simplifies fixes but also reduces costs. Tools such as Open Policy Agent (OPA) and Kyverno facilitate Policy-as-Code, embedding compliance rules directly into pipelines to enforce consistent security standards.

Containers require special attention. Every component should be scanned during the build phase, and only images verified within the last 30 days should be deployed [3]. Using SHA256 digests instead of floating tags like :latest ensures that builds rely on specific, trusted images. Additionally, cleaning up outdated images from registries reduces both the attack surface and unnecessary storage costs.
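
Both container rules, digest pinning and the 30-day scan window, can be checked before deployment. The sketch below assumes you can look up when an image was last scanned; the function names and the `registry.example.com` reference are placeholders.

```python
import re
from datetime import datetime, timedelta, timezone

# An image reference pinned by digest ends in "@sha256:" plus 64 hex characters.
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def is_pinned(image_ref: str) -> bool:
    """True if the reference uses a SHA256 digest rather than a floating tag."""
    return bool(DIGEST_RE.search(image_ref))


def deployable(image_ref: str, last_scanned: datetime, now: datetime,
               max_age_days: int = 30) -> bool:
    """True if the image is digest-pinned and was scanned recently enough."""
    recent = (now - last_scanned) <= timedelta(days=max_age_days)
    return is_pinned(image_ref) and recent


now = datetime(2024, 6, 30, tzinfo=timezone.utc)
pinned = "registry.example.com/app@sha256:" + "a" * 64
print(deployable(pinned, datetime(2024, 6, 10, tzinfo=timezone.utc), now))
print(deployable("registry.example.com/app:latest", now, now))
```

The first call is accepted because the image is pinned and was scanned 20 days earlier; the second is rejected because `:latest` is a floating tag, regardless of how recently it was scanned.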

These technical practices can be further refined with expert services designed to integrate and enhance these processes.

Hokstad Consulting's DevOps Security Services

Hokstad Consulting specialises in embedding vulnerability management into DevOps workflows, offering tailored solutions to integrate security seamlessly into CI/CD pipelines. Their services include automating security checks at every stage of deployment, with tools like webhooks to trigger scans, HashiCorp Vault for identity and secrets management, and continuous asset discovery for dynamic cloud resources.

For businesses navigating UK regulations like GDPR, Hokstad Consulting provides guidance to align CI/CD security practices with compliance standards. Their custom automation services not only help tighten security but also reduce deployment times. Clients typically see improvements in both speed and security, whether implementing shift-left strategies for development teams or fine-tuning existing pipelines. Hokstad’s approach ensures that vulnerability scanning remains effective and adaptable in the fast-moving world of DevOps.

Conclusion

Vulnerability scanning policies need to keep pace with the ever-changing threat landscape. Modern infrastructures are dynamic, and attackers are quick to exploit vulnerabilities - often within 7 to 14 days of disclosure. This leaves organisations exposed for as long as 119 days if they rely solely on quarterly compliance scans [6]. The growing number of CVEs highlights the urgency to adapt scanning practices [6].

To stay effective, scanning frequency should match the speed of your infrastructure. For cloud-heavy environments, daily or continuous scans are a must, while stable on-premises systems may manage with weekly checks. However, it’s not just about frequency - policies must evolve alongside infrastructure changes and emerging threats [6]. Asset discovery should occur more frequently than the scans themselves, and event-triggered scans should follow key changes like patch deployments or security incidents [6].

"Your vulnerability management process should always be evolving to keep pace with changes in your organisation's estate, new threats or new vulnerabilities." - NCSC [4]

Integrating automated scanning into CI/CD pipelines shifts vulnerability management from a compliance task to a proactive development process. This shift-left strategy catches vulnerabilities early, reducing both remediation costs and the time systems remain exposed. Post-fix verification scans ensure vulnerabilities are resolved and temporary fixes are replaced with permanent solutions [4]. By embedding this approach into CI/CD workflows, vulnerability management becomes an ongoing practice.

Key Takeaways

Here are some essential practices to consider:

- Match scan frequency to compliance and threats: While frameworks like PCI DSS require quarterly scans and FedRAMP mandates monthly ones, these regulatory minimums often lag behind the speed of modern threats [2][3]. For cloud environments and internet-facing systems, daily or continuous scans are crucial, especially since attackers can scan the entire IPv4 space in under 24 hours [6].

- Automate wherever possible: Automation reduces manual workload and alert fatigue. Use APIs to integrate scanning tools with CI/CD pipelines, triggering scans after code changes or infrastructure updates. Emerging threat scans can proactively address high-profile vulnerabilities [1][3]. Machine-readable outputs (e.g., XML, CSV, JSON) streamline ticketing and speed up remediation workflows [1][3].

- Prioritise asset discovery: Run asset discovery more frequently than vulnerability scans. For instance, if you scan weekly, discover assets daily. Without active discovery, around 32% of cloud assets typically go unmonitored [6]. Policies must include feedback loops to verify remediation efforts and adapt to new threats, ensuring security remains an ongoing process rather than a scheduled task.
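
The asset discovery point lends itself to a simple check: compare what discovery finds with what the scanner is actually configured to cover. This is a sketch under the assumption that both lists are available as plain identifiers (hostnames, IPs, or cloud resource IDs); the function names are illustrative.

```python
def unmonitored_assets(discovered, scan_targets):
    """Assets found by discovery that are missing from the scan target list."""
    return sorted(set(discovered) - set(scan_targets))


def coverage_gap_percent(discovered, scan_targets):
    """Share of discovered assets that no scan currently covers, in percent."""
    found = set(discovered)
    if not found:
        return 0.0
    missing = found - set(scan_targets)
    return round(100 * len(missing) / len(found), 1)
```

Running this after each discovery pass, and feeding the result back into the scan target list, is one way to build the feedback loop described above.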

FAQs

What triggers an out-of-cycle policy review?

When major changes occur - like the discovery of new vulnerabilities, major system updates, or shifts in potential threats - an out-of-cycle policy review may be necessary. These situations demand immediate attention, bypassing the usual review timeline, to ensure policies stay effective and aligned with current needs.

How do I set scan frequency for mixed-risk systems?

The frequency of scanning mixed-risk systems largely hinges on their importance and the environment they operate in. For high-risk systems, such as internet-facing assets, a daily or weekly scan is advisable to stay ahead of potential vulnerabilities. Systems deemed less critical can typically be scanned on a monthly or quarterly basis without compromising security.

In dynamic environments, such as cloud infrastructure, the landscape changes rapidly. Here, daily or continuous scans are the best approach to catch vulnerabilities as they emerge.

Ultimately, the scanning schedule should align with your organisation's risk tolerance, the criticality of the systems, and the current threat landscape. This tailored approach helps ensure vulnerabilities are detected and addressed promptly.

How can CI/CD automate vulnerability scans and reviews?

CI/CD pipelines streamline the process of identifying vulnerabilities by embedding security tools directly into the development workflow. These tools automatically scan code, dependencies, and infrastructure at key stages, such as code commits or deployments. This proactive approach helps detect issues early, cutting down on manual effort and speeding up the process of fixing them. By integrating security into the development lifecycle, teams can ensure a more secure product. Additionally, automated alerts and detailed reports enable faster responses, supporting continuous and effective vulnerability management.
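
As an illustration of how automated reports can feed faster responses, a pipeline step might convert a scanner's JSON output into ticket payloads. The report schema here (a top-level `findings` list with `title`, `asset`, and `severity`) is entirely hypothetical, as is the ticket format; real scanners and ticketing systems each define their own.

```python
import json

SEVERITY_ORDER = ["low", "medium", "high", "critical"]


def tickets_from_report(report_json: str, min_severity: str = "high"):
    """Build ticket dicts for findings at or above min_severity."""
    threshold = SEVERITY_ORDER.index(min_severity)
    tickets = []
    for finding in json.loads(report_json)["findings"]:
        severity = finding["severity"].lower()
        if severity not in SEVERITY_ORDER:
            continue  # skip unknown severities rather than guess
        if SEVERITY_ORDER.index(severity) >= threshold:
            tickets.append({
                "title": f"[{severity.upper()}] {finding['title']} on {finding['asset']}",
                "severity": severity,
            })
    return tickets
```

The resulting list could then be posted to whatever ticketing API the team uses, so that high-severity findings reach an owner without manual triage.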