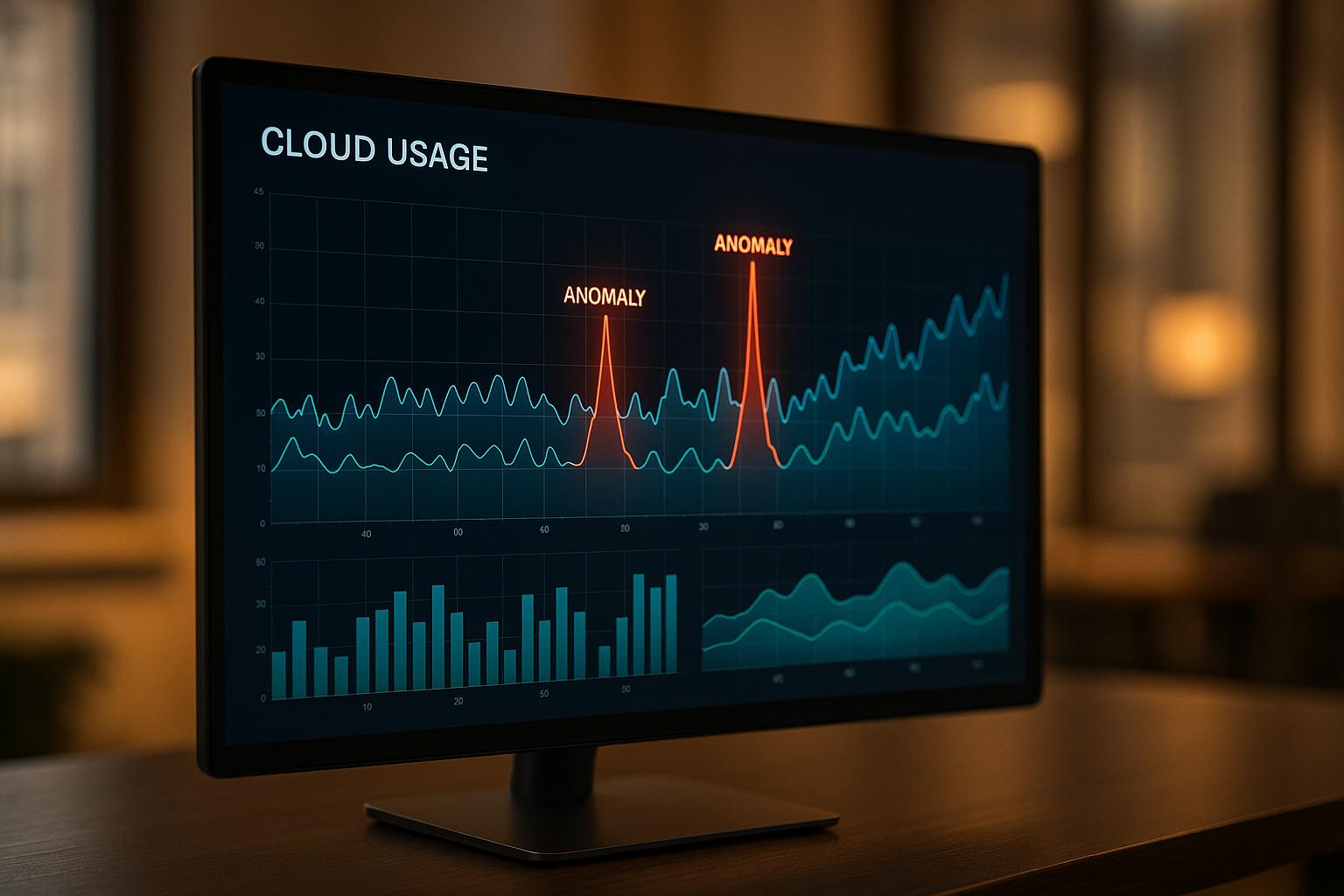

Cloud cost anomaly detection helps you spot unexpected spikes in your cloud expenses before they cause financial trouble. By analysing historical data, it identifies unusual patterns and sends alerts, saving time and resources compared to manual checks. Common causes include misconfigurations, storage inefficiencies, and unexpected usage spikes. Ignoring these anomalies can lead to financial strain, operational issues, and missed growth opportunities.

Key Takeaways:

- What to Monitor: Track compute, storage, network traffic, and service-specific metrics.

- Detection Methods: Use statistical techniques like Z-score or advanced machine learning for complex patterns.

- Tools: AWS, Azure, and Google Cloud offer native solutions, while third-party tools like nOps work well for multi-cloud setups.

- Best Practices: Set clear alert thresholds, use historical data for baselines, and regularly review detection systems.

Effective anomaly detection prevents budget overruns and helps optimise cloud spending, making it a critical tool for UK businesses.

Key Metrics and Data Requirements

Effectively detecting anomalies in cloud costs hinges on monitoring the right metrics and relying on high-quality data. Without these essentials, even the most advanced systems can miss real issues or, worse, overwhelm you with false alarms.

Key Metrics to Monitor

Compute resources typically account for a large share of cloud spend. Keep a close eye on virtual machine instances, serverless functions, and container services - not just the total spend but also usage patterns. Sudden spikes might indicate increased demand or resources that were launched and then left running.

Storage costs are another critical area. Monitor storage volumes across different tiers. Unexpected increases could stem from misconfigured backup settings or retention policies. Don’t overlook data transfer charges between storage tiers, as these can quietly drive up costs.

Network traffic is also a key contributor. Pay attention to inbound and outbound traffic, particularly cross-region transfers and external bandwidth usage. Inefficient API calls or poorly routed data can add up quickly.

Service-specific metrics deserve attention too. For example, database operations or API gateway requests can reveal hidden inefficiencies. Tailor your monitoring to match your environment - large databases, for instance, should be tracked for both compute and I/O operations.

Finally, break down costs by service, region, and time period. This granular approach uncovers patterns that might be hidden in overall spend figures. These insights lay the groundwork for the more advanced analyses discussed later.
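As a minimal sketch of that breakdown, the Python below sums cost line items per day, service and region, then ranks which slices moved most between two days. The record shape and example values are hypothetical. Billing exports from each provider use their own schemas, so you would map them onto this shape first.

```python
from collections import defaultdict
from datetime import date

# Hypothetical line-item shape: (day, service, region, cost in GBP).
LineItem = tuple[date, str, str, float]

def breakdown(items: list[LineItem]) -> dict[tuple[date, str, str], float]:
    """Sum costs per (day, service, region) so a spike in one slice is not hidden in the total."""
    totals: dict[tuple[date, str, str], float] = defaultdict(float)
    for day, service, region, cost in items:
        totals[(day, service, region)] += cost
    return dict(totals)

def top_movers(totals: dict[tuple[date, str, str], float],
               day_before: date, day_after: date,
               n: int = 3) -> list[tuple[tuple[str, str], float]]:
    """Rank (service, region) slices by absolute cost change between two days."""
    slices = {(service, region) for (_, service, region) in totals}
    changes = {}
    for service, region in slices:
        before = totals.get((day_before, service, region), 0.0)
        after = totals.get((day_after, service, region), 0.0)
        changes[(service, region)] = after - before
    return sorted(changes.items(), key=lambda kv: abs(kv[1]), reverse=True)[:n]
```

In a small example where compute rises by £5 and a new £90 network charge appears in another region, the network slice ranks first even though the overall total only grows modestly.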

The Role of Historical Data

Historical data plays a crucial role in cloud cost anomaly detection. It helps establish a baseline for normal spending patterns. Machine learning and statistical models rely on these past trends - often using a seven-day rolling average - to predict costs and flag anomalies when expenses deviate from expected ranges [2][1].

The quality of your historical data is just as important as the quantity. Clean, well-organised data delivers far better results than large, unstructured datasets. Use tagging to categorise spending by service, project, or cost centre, which will make investigations into anomalies far more precise.
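A short sketch of tag-based allocation is shown below. It assumes each resource record carries a `tags` mapping and a `cost` figure (both hypothetical field names) and uses `cost-centre` as an example tag key. Untagged spend is kept in its own bucket so gaps in tagging coverage show up rather than disappearing.

```python
from collections import defaultdict

# Example tag key; substitute the key your organisation standardises on.
COST_CENTRE_TAG = "cost-centre"

def allocate_by_tag(resources: list[dict], tag_key: str = COST_CENTRE_TAG) -> dict[str, float]:
    """Sum spend per tag value; resources without the tag go into 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for res in resources:
        owner = res.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += res["cost"]
    return dict(totals)

def untagged_share(totals: dict[str, float]) -> float:
    """Fraction of total spend that could not be attributed to a cost centre."""
    grand_total = sum(totals.values())
    return totals.get("untagged", 0.0) / grand_total if grand_total else 0.0
```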

Seasonality and Business Events

Cloud spending doesn’t exist in a vacuum. Seasonal trends and business events can cause predictable cost fluctuations. For example, retail sales, marketing campaigns, month-end cycles, or UK-specific events like bank holidays and school terms can all lead to temporary increases in cloud usage. Accounting for these events in your detection models can help reduce false alarms.

Integrating historical data with calendars of business and seasonal events can significantly improve accuracy [1]. Expert advice can further refine these models to better suit the unique needs of your organisation.
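One way to fold a business calendar into detection is sketched below. It assumes a per-weekday baseline, so weekend dips count as normal, plus a hand-maintained calendar of dates with an expected uplift factor. The calendar entries, uplift factors and 25% tolerance are illustrative assumptions, not recommended values.

```python
from datetime import date
from statistics import mean

# Illustrative calendar of dates with an expected uplift in spend (assumed values).
# In practice, populate it from marketing, finance and UK bank-holiday calendars.
EXPECTED_EVENTS: dict[date, float] = {
    date(2024, 11, 29): 1.8,  # e.g. a Black Friday campaign
    date(2024, 12, 31): 1.3,  # e.g. year-end batch processing
}

def weekday_baseline(history: dict[date, float]) -> dict[int, float]:
    """Average historical cost per weekday (0 = Monday ... 6 = Sunday)."""
    by_weekday: dict[int, list[float]] = {}
    for day, cost in history.items():
        by_weekday.setdefault(day.weekday(), []).append(cost)
    return {wd: mean(costs) for wd, costs in by_weekday.items()}

def is_anomalous(day: date, cost: float, baseline: dict[int, float],
                 events: dict[date, float] = EXPECTED_EVENTS,
                 tolerance: float = 0.25) -> bool:
    """Flag cost above the weekday baseline, scaled up on known event days, plus a tolerance."""
    expected = baseline[day.weekday()] * events.get(day, 1.0)
    return cost > expected * (1 + tolerance)
```

With a baseline of £100 on weekdays, £170 is flagged on an ordinary Friday but not on the campaign date, where the expected level is raised to £180.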

Methods and Technologies for Anomaly Detection

Once you have your metrics and historical data in place, the next step is to choose the detection methods that align with your needs. Broadly, there are two main approaches: statistical techniques and machine learning methods. Each has its strengths, depending on the nature of your data and how complex your requirements are.

Statistical Detection Techniques

Statistical methods are often the backbone of anomaly detection, especially for organisations in the early stages of FinOps maturity. These techniques rely on straightforward mathematical principles to spot sudden and significant deviations from established norms [3][1].

One of the most commonly used methods is Z-score analysis, which measures how far a data point is from the mean in terms of standard deviations. For instance, you might set an alert if daily cloud spending exceeds three standard deviations above the weekly average.
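The Z-score rule can be sketched in a few lines with Python's standard `statistics` module. This sketch assumes the baseline is the previous seven daily totals and only flags upward deviations, on the assumption that cost drops are less urgent. The threshold `k = 3` mirrors the example above.

```python
import math
from statistics import mean, stdev

def z_score(value: float, baseline: list[float]) -> float:
    """Number of (sample) standard deviations `value` sits above or below the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)  # requires at least two baseline points
    if sigma == 0:
        # A perfectly flat baseline: any deviation is treated as infinitely unusual.
        return 0.0 if value == mu else math.copysign(math.inf, value - mu)
    return (value - mu) / sigma

def is_spike(value: float, baseline: list[float], k: float = 3.0) -> bool:
    """One-sided check: only flag costs more than k standard deviations above the mean."""
    return z_score(value, baseline) > k
```

For a week of daily costs between £98 and £102, a £130 day is flagged, while £103 (roughly 2.3 standard deviations above the mean) is not.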

Other techniques, like IQR (interquartile range) methods, are also effective. These identify outliers by flagging data points that fall more than 1.5 times the IQR above the third quartile or below the first quartile. Because quartiles are less affected by extreme values than the mean and standard deviation, this approach is particularly useful for skewed or otherwise non-normal cost distributions.
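A minimal IQR sketch using `statistics.quantiles` (Python 3.8+) applied to a list of daily costs is shown below. `quantiles` defaults to its 'exclusive' interpolation method. Other tools may compute quartiles slightly differently, which shifts the fences a little.

```python
from statistics import quantiles

def iqr_outliers(costs: list[float], k: float = 1.5) -> list[int]:
    """Indices of costs outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _median, q3 = quantiles(costs, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, cost in enumerate(costs) if cost < low or cost > high]
```

For ten days of costs clustered between £97 and £103 plus one £250 day, only the £250 day falls outside the fences.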

Another approach is using moving averages, such as a seven-day rolling baseline. For example, you could trigger alerts when current costs exceed this average plus three standard deviations [1].
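The rolling variant can be sketched as a loop over a daily cost series. Each day is compared against the mean plus three standard deviations of the preceding seven days. This is a simplified illustration, not the exact method used by the tools cited.

```python
from statistics import mean, stdev

def rolling_alerts(daily_costs: list[float], window: int = 7, k: float = 3.0) -> list[int]:
    """Indices of days whose cost exceeds mean + k * stdev of the previous `window` days."""
    alerts = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]  # trailing window, excluding day i itself
        limit = mean(history) + k * stdev(history)
        if daily_costs[i] > limit:
            alerts.append(i)
    return alerts
```

One design caveat applies: once a spike enters the trailing window, it inflates the standard deviation for the following week, so a second spike soon after the first may go unflagged. Excluding already-flagged days from the baseline is one possible refinement.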

For environments with predictable growth patterns, time series models can be helpful. These models incorporate trend analysis, allowing them to account for gradual changes over time. This makes them more nuanced than simple threshold-based methods.
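To illustrate the trend idea, the sketch below fits an ordinary least-squares line to recent daily costs. It flags today's cost only if it sits well above the value the trend predicts. This is a deliberately simple stand-in for a full time series model because it ignores seasonality, and the three-sigma residual threshold is an assumption.

```python
from statistics import mean, stdev

def fit_trend(costs: list[float]) -> tuple[float, float]:
    """Least-squares slope and intercept of cost against day index 0..n-1."""
    n = len(costs)
    x_bar, y_bar = (n - 1) / 2, mean(costs)
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(costs))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def trend_alert(history: list[float], today: float, k: float = 3.0) -> bool:
    """Flag today's cost if it exceeds the trend forecast by more than k residual std devs."""
    slope, intercept = fit_trend(history)
    residuals = [y - (intercept + slope * x) for x, y in enumerate(history)]
    forecast = intercept + slope * len(history)  # the next day's index is len(history)
    return today - forecast > k * stdev(residuals)
```

With two weeks of history growing by about £2 per day from £100, a £129 day is treated as on-trend, while £160 is flagged.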

The main appeal of statistical methods lies in their transparency: they are easy to implement, and teams can quickly understand why an alert was triggered. However, they can struggle with more complex scenarios, such as seasonal fluctuations, and often produce false positives when predictable patterns - like weekend cost drops - are flagged as anomalies [3][1].

While statistical methods are a solid starting point, more advanced environments may require additional sophistication.

Machine Learning for Anomaly Detection

Machine learning methods bring a more advanced layer to anomaly detection, especially in dynamic cloud environments. These models build on historical data, enabling them to identify subtle patterns that statistical methods might miss, while also reducing false positives [3][4][1].

Supervised learning models are particularly effective when you have labelled historical data. These models learn from past examples of anomalies and normal patterns, which can make them highly accurate at identifying similar issues in new data. They’re best suited to organisations with a well-documented history of cost-related incidents.

On the other hand, unsupervised learning algorithms don’t need pre-labelled data. Instead, they learn what 'normal' looks like from historical usage and can flag previously unknown anomalies. This makes them useful for uncovering new cost-saving opportunities.
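The sketch below uses one simple unsupervised technique, chosen for illustration; commercial tools use their own, often more sophisticated models. It scores each day by its average distance to its k nearest neighbouring days in a space of per-service spend. No labels are needed: days that look unlike any others score highest. The feature choice (compute, storage and network spend in the same currency) is an assumption. Features on different scales would need normalising first.

```python
import math

def knn_scores(points: list[tuple[float, ...]], k: int = 3) -> list[float]:
    """Mean Euclidean distance from each point to its k nearest other points."""
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores

def most_unusual(points: list[tuple[float, ...]], k: int = 3, top_n: int = 1) -> list[int]:
    """Indices of the top_n highest-scoring (least typical) points."""
    scores = knn_scores(points, k)
    return sorted(range(len(points)), key=lambda i: scores[i], reverse=True)[:top_n]
```

Consider a day of £75 compute, £40 storage and £35 network. It has the same £150 total as a typical day, so a total-only threshold would miss it, but its unusual mix gives it the highest score.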

Ensemble methods take this a step further by combining multiple algorithms. For example, you could use statistical baselines for quick detection of cost spikes while leveraging machine learning to analyse more complex patterns. This layered approach broadens coverage across different anomaly types.
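A minimal sketch of combining detectors by majority vote is shown below. The three rules are simple stand-ins with assumed thresholds: a Z-score check, a day-on-day percentage jump and an absolute jump against the weekly mean. A trained model's prediction function with the same signature could be added to the list.

```python
from statistics import mean, stdev
from typing import Callable, Sequence

Detector = Callable[[Sequence[float], float], bool]

def zscore_rule(history: Sequence[float], today: float) -> bool:
    return today > mean(history) + 3 * stdev(history)

def pct_jump_rule(history: Sequence[float], today: float) -> bool:
    return today > history[-1] * 1.5  # more than 50% above yesterday

def abs_jump_rule(history: Sequence[float], today: float) -> bool:
    return today - mean(history) > 1000.0  # more than £1,000 above the weekly mean

def ensemble_flag(history: Sequence[float], today: float,
                  detectors: Sequence[Detector] = (zscore_rule, pct_jump_rule, abs_jump_rule),
                  min_votes: int = 2) -> bool:
    """Raise an alert only when at least `min_votes` detectors agree."""
    votes = sum(1 for detect in detectors if detect(history, today))
    return votes >= min_votes
```

Requiring agreement trades a little sensitivity for fewer false positives. A small blip that trips only the Z-score rule is ignored, while a jump that trips two rules raises an alert.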

The key strength of machine learning lies in its ability to adapt to complex patterns, such as seasonal variations, gradual cost increases, or one-off events [3]. Over time, these systems become more refined as they process more data, learning to ignore expected fluctuations like weekend cost drops and focusing on genuinely unexpected events [3][1].

Algorithm Selection and Customisation

Choosing and customising the right algorithms involves balancing simplicity with complexity. The best approach depends on your data’s characteristics and your operational needs. Factors like data volume, speed, and complexity play a critical role [3][5].

For organisations with limited historical data or straightforward usage patterns, statistical methods are often the best starting point. A simple rule - like flagging costs that exceed the previous week’s daily average plus three times the standard deviation - can catch major issues without being overly complicated [1].

In contrast, high-volume, complex environments benefit from machine learning. If your cloud usage spans multiple services, regions, or seasonal patterns, machine learning can untangle these layers to provide more accurate detection.

Performance requirements also influence your choice. Statistical methods are faster and less resource-intensive, making them ideal for real-time alerts. Machine learning models, while requiring more processing power, offer deeper insights into cost trends [3][5].

Scalability is another consideration. Statistical methods scale predictably with data volume, while machine learning may need more advanced infrastructure but can handle increasingly intricate patterns more effectively.

A hybrid approach often works best. Start with statistical methods for quick, straightforward detection, and layer machine learning on top for advanced pattern recognition and to minimise false positives. This combination ensures thorough coverage while keeping operations manageable.

Finally, consider your team’s expertise. Statistical methods require basic analytical skills, whereas machine learning might need specialised knowledge or external support to implement effectively.

Tools and Platforms for Cloud Cost Anomaly Detection

When it comes to detecting anomalies in cloud costs, choosing the right tools is key. Broadly, these tools fall into two categories: cloud-native solutions offered by major platforms and third-party tools designed for multi-cloud environments. Each has its strengths, depending on your setup and needs. These solutions integrate with your existing data streams and detection models, offering a clear view of your cloud expenses.

Cloud-Native Solutions

AWS Cost Anomaly Detection uses machine learning to analyse historical usage patterns and flag unusual cost spikes. Once a monitor is created, it can begin detecting anomalies within about 24 hours, making it a fast and efficient option for AWS users [6].

With AWS, you can monitor specific services, cost categories, up to 10 linked accounts, or even cost allocation tags [6]. Alerts can be triggered by absolute cost thresholds or percentage changes, such as anomalies with an impact above £1,000 or an increase of more than 50% [6]. Notifications are sent via Amazon Simple Notification Service (SNS) or email, with options for immediate alerts or daily and weekly summaries.
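For teams scripting this setup, the sketch below builds the `AnomalySubscription` request accepted by the Cost Explorer `CreateAnomalySubscription` operation, which boto3 exposes as `create_anomaly_subscription`. The request combines an absolute-impact threshold and a percentage-impact threshold with OR. The subscription name, monitor ARN and SNS topic ARN are placeholders. The threshold amount is interpreted in the currency AWS reports for the anomaly's cost impact, which may not be GBP. Check the current AWS API reference before relying on the exact field names.

```python
def build_subscription(name: str, monitor_arn: str, sns_topic_arn: str,
                       min_impact: float = 1000.0, min_pct: float = 50.0) -> dict:
    """AnomalySubscription payload: alert when impact >= min_impact OR >= min_pct %."""

    def at_least(key: str, value: float) -> dict:
        return {"Dimensions": {"Key": key,
                               "MatchOptions": ["GREATER_THAN_OR_EQUAL"],
                               "Values": [f"{value:g}"]}}

    return {
        "SubscriptionName": name,
        "MonitorArnList": [monitor_arn],
        # Immediate (individual) alerts are delivered through an SNS topic;
        # daily or weekly summaries can go to email subscribers instead.
        "Subscribers": [{"Type": "SNS", "Address": sns_topic_arn}],
        "Frequency": "IMMEDIATE",
        "ThresholdExpression": {"Or": [
            at_least("ANOMALY_TOTAL_IMPACT_ABSOLUTE", min_impact),
            at_least("ANOMALY_TOTAL_IMPACT_PERCENTAGE", min_pct),
        ]},
    }

def create_subscription(payload: dict) -> str:
    """Submit the payload; needs boto3 and credentials allowed to call Cost Explorer."""
    import boto3  # imported lazily so the builder above works without boto3 installed

    ce = boto3.client("ce")
    return ce.create_anomaly_subscription(AnomalySubscription=payload)["SubscriptionArn"]
```

Keeping the payload builder separate from the API call makes the thresholds easy to review and test before anything is created in the account.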

One standout feature is its root cause analysis. When an anomaly occurs, the system identifies up to ten detailed causes, showing their individual contributions. This significantly reduces the time spent diagnosing issues. Additionally, it integrates with AWS Cost Explorer, making it easy to visualise daily cost trends [6].

Other platforms like Azure Cost Management and Google Cloud Monitoring offer similar capabilities tailored to their ecosystems. These tools are particularly effective for single-cloud setups, providing deep integration with platform-specific services and billing structures. However, for multi-cloud environments, third-party tools often provide a more comprehensive solution.

Third-Party Platforms

For businesses seeking more advanced analysis or multi-cloud visibility, third-party platforms offer a robust alternative.

nOps, for example, uses AI to forecast costs, accounting for seasonal trends and workload fluctuations [9]. It detects cost spikes early and traces them back to specific business units, clients, or projects. The platform also provides detailed trend analysis, seasonality detection, and classifies anomalies by severity (Low, Medium, or High) [9]. Beyond anomaly detection, nOps integrates with a range of cloud management tools, offering features like dashboards, budget tracking, cost targets, and optimisation recommendations.

CAST AI takes a more focused approach, specialising in Kubernetes cost optimisation, including clusters running on AWS. It automates workload rightsizing, reduces overprovisioning, and scales clusters using an autonomous system that adjusts to resource demands [8].

Third-party tools shine in multi-cloud setups, combining data from various sources to give a comprehensive view of spending patterns. They often include advanced analytics and customisation options, making them a strong choice for complex cloud environments.

UK-Specific Considerations

UK businesses face unique challenges when managing cloud costs. Tools must handle GBP natively, account for exchange rate fluctuations, and adhere to local compliance standards. For example, organisations in the UK need solutions that comply with regulations like GDPR, PCI DSS for fintech, and NHS data requirements for healthtech [10]. Additionally, granular visibility and cost allocation features are crucial for ensuring accountability, particularly in compliance-heavy industries [11].

Managing international payments to cloud providers can also be a challenge. Using a Wise Business account can simplify this process, helping UK teams reduce currency conversion fees and manage over 40 currencies [7]. Local support is another priority - providers offering assistance during UK business hours can ensure timely issue resolution. For instance, Hyper Talent Solutions offers FinOps Cost Anomaly Detection services tailored to UK government departments, reflecting the demand for localised expertise [11].

Consulting services for FinOps in the UK typically range from £400 to £1,400 per person per day, underscoring the specialised nature of this work [11]. Other challenges include GBP volatility impacting dollar-denominated pricing, GDPR compliance costs, and the need for rapid scaling in a competitive market [10].

For businesses navigating these complexities, expert guidance can make a significant difference. Hokstad Consulting, for example, offers cloud cost engineering services that can reduce expenses by 30–50%, helping UK businesses optimise their cloud spending while staying compliant with regulations.

Implementation and Management Best Practices

To effectively manage cloud cost anomalies, you need well-structured workflows that can quickly detect, investigate, and address issues. This means implementing smart alert systems, defining clear investigation protocols, and knowing when to call in expert help.

Setting Up Alerting and Notification Systems

The first step is to establish thresholds that trigger alerts without overwhelming your team with false alarms. These thresholds should align with your spending patterns and risk tolerance. For example, you might route critical alerts (e.g., those exceeding £5,000) to senior management, while sending routine alerts (e.g., under £500) to operational teams.
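The tiering described above can be sketched as a small routing function. The £5,000 and £500 boundaries come from the example in the text. The text leaves the band in between unassigned, so the `finops-team` destination is a hypothetical placeholder, as are the other destination names.

```python
CRITICAL_GBP = 5000.0  # above this, alert senior management
ROUTINE_GBP = 500.0    # below this, alert operational teams

def route_alert(impact_gbp: float) -> str:
    """Return the (hypothetical) destination for an anomaly with the given cost impact."""
    if impact_gbp > CRITICAL_GBP:
        return "senior-management"
    if impact_gbp < ROUTINE_GBP:
        return "operations"
    return "finops-team"  # assumed middle tier for £500 to £5,000
```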

Each alert should include key details - such as the affected service, percentage cost increase, time period, and a preliminary analysis of the root cause. This ensures your team has the context needed to act quickly.
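The sketch below shows an alert record carrying those details, with a plain-text formatter. The field names are illustrative assumptions. The 'suspected cause' would typically come from a tool's root cause analysis or be filled in during triage.

```python
import math
from dataclasses import dataclass
from datetime import date

@dataclass
class CostAnomaly:
    service: str
    expected_gbp: float
    actual_gbp: float
    start: date
    end: date
    suspected_cause: str = "not yet determined"

    @property
    def pct_increase(self) -> float:
        """Percentage increase over the expected cost (infinite if nothing was expected)."""
        if self.expected_gbp <= 0:
            return math.inf
        return (self.actual_gbp - self.expected_gbp) / self.expected_gbp * 100

def format_alert(a: CostAnomaly) -> str:
    """Render the anomaly as a short plain-text message for chat or email."""
    return (
        f"Cost anomaly: {a.service}\n"
        f"Increase: {a.pct_increase:.0f}% (£{a.actual_gbp:,.2f} vs £{a.expected_gbp:,.2f} expected)\n"
        f"Period: {a.start.isoformat()} to {a.end.isoformat()}\n"
        f"Suspected cause: {a.suspected_cause}"
    )
```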

For real-time communication, integrate platforms like Slack or Microsoft Teams. Use dedicated channels for anomaly notifications and automate incident ticket creation. Features like threaded conversations can help track investigations, creating a searchable record of actions taken and their outcomes.
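On the delivery side, the sketch below posts an alert to a Slack incoming webhook using only the standard library. Incoming webhooks accept a JSON body with a `text` field. The webhook URL is a placeholder, and this sketch sends a single top-level message without handling threads.

```python
import json
import urllib.request

def slack_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST request carrying a Slack incoming-webhook message."""
    body = json.dumps({"text": text}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def post_to_slack(webhook_url: str, text: str, timeout: float = 10.0) -> int:
    """Send the message and return the HTTP status code (urlopen raises on HTTP errors)."""
    with urllib.request.urlopen(slack_request(webhook_url, text), timeout=timeout) as resp:
        return resp.status
```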

To ensure no critical issue is missed, configure escalation rules. For instance, if an anomaly isn’t acknowledged within 30 minutes during business hours, escalate it to the next level. For incidents outside normal hours, tools like PagerDuty or OpsGenie can notify on-call staff via SMS or phone. You can also implement smart filtering to suppress duplicate alerts for a few hours, reducing noise and improving focus.
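The escalation and suppression rules can be sketched as below. Business hours are assumed to be 09:00 to 17:00, Monday to Friday, local time, and the four-hour suppression window stands in for 'a few hours'. Both are assumptions to adjust. Paging itself would be handed off to a tool such as PagerDuty or OpsGenie, which this sketch does not call.

```python
from datetime import datetime, timedelta

ACK_TIMEOUT = timedelta(minutes=30)
SUPPRESS_WINDOW = timedelta(hours=4)  # assumed value for "a few hours"

def in_business_hours(t: datetime) -> bool:
    """Assumed business hours: Monday to Friday, 09:00 to 17:00 local time."""
    return t.weekday() < 5 and 9 <= t.hour < 17

def escalation_action(raised_at: datetime, acknowledged: bool, now: datetime) -> str:
    """Decide what to do with an alert that may not have been acknowledged yet."""
    if acknowledged:
        return "none"
    if not in_business_hours(now):
        return "page-on-call"  # hand off to the on-call tool out of hours
    if now - raised_at >= ACK_TIMEOUT:
        return "escalate"
    return "wait"

class Deduplicator:
    """Suppress repeat alerts with the same key inside a time window."""

    def __init__(self, window: timedelta = SUPPRESS_WINDOW) -> None:
        self.window = window
        self._last_sent: dict[str, datetime] = {}

    def should_send(self, key: str, now: datetime) -> bool:
        last = self._last_sent.get(key)
        if last is not None and now - last < self.window:
            return False
        self._last_sent[key] = now
        return True
```

The deduplication key, for example a service and region pair, is a design choice. A coarser key suppresses more noise but risks hiding a second, unrelated anomaly.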

Once alerts are set up, you can use a structured process to identify and address the root causes of anomalies quickly.

Steps for Root Cause Analysis

When an anomaly is detected, take a systematic approach to uncover its cause. Start with a 15-minute assessment to gather the basics - what services are affected, the time range of the anomaly, and the cost impact. Then, dig deeper by examining resource usage and data movement to identify potential triggers.

Look for obvious causes like recent deployments, configuration changes, or scheduled maintenance. Use tools like AWS CloudTrail or Azure Activity Log to trace API calls and administrative actions over the past 24–48 hours.
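On AWS, the search for recent changes can be scripted with CloudTrail's `LookupEvents` API. The sketch below assumes a boto3 CloudTrail client is passed in, so it can be tested with a stub. It requests non-read-only events over the last 48 hours. `LookupEvents` covers management events for the client's region only, so other regions need their own calls.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

def recent_write_events(cloudtrail, hours: int = 48,
                        now: Optional[datetime] = None) -> list[dict]:
    """Summarise non-read-only CloudTrail management events from the last `hours` hours.

    `cloudtrail` is expected to be a boto3 client, e.g. boto3.client("cloudtrail").
    """
    end = now or datetime.now(timezone.utc)
    start = end - timedelta(hours=hours)
    paginator = cloudtrail.get_paginator("lookup_events")
    pages = paginator.paginate(
        LookupAttributes=[{"AttributeKey": "ReadOnly", "AttributeValue": "false"}],
        StartTime=start,
        EndTime=end,
    )
    summary = []
    for page in pages:
        for event in page.get("Events", []):
            summary.append({
                "time": event.get("EventTime"),
                "name": event.get("EventName"),
                "user": event.get("Username"),
                "source": event.get("EventSource"),
            })
    return summary
```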

Calculate the daily cost increase and project its monthly impact. For instance, a £100 daily rise could translate to £3,000 per month if left unaddressed. Determine whether the anomaly is stabilising or worsening.
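The projection and trend check can be sketched as follows. The sketch assumes a 30-day month, as in the £100 to £3,000 example. It also assumes a 5% tolerance when comparing the latest day's excess spend with the previous day's.

```python
def monthly_impact(daily_increase_gbp: float, days_in_month: int = 30) -> float:
    """Project a daily cost increase over a month if nothing is done."""
    return daily_increase_gbp * days_in_month

def anomaly_trend(daily_excess_gbp: list[float], tolerance: float = 0.05) -> str:
    """Compare the latest day's excess cost with the previous day's."""
    previous, latest = daily_excess_gbp[-2], daily_excess_gbp[-1]
    if latest > previous * (1 + tolerance):
        return "worsening"
    if latest < previous * (1 - tolerance):
        return "easing"
    return "stabilising"
```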

Once you’ve identified the root cause, implement corrective actions and monitor the situation for 24–48 hours to ensure the issue is resolved. Document the cause, the steps taken, and any updates to alert rules. This documentation not only speeds up future resolutions but also helps prevent similar issues down the line.

Leveraging Expert Consulting

Managing complex cloud environments often calls for specialist expertise. An expert can design anomaly detection systems tailored to your organisation’s needs, ensuring even the trickiest issues are handled effectively.

Hokstad Consulting is one such expert, specialising in cloud cost engineering. They begin with a detailed audit of your spending patterns to identify key cost drivers. This baseline analysis ensures their solutions target areas with the greatest potential for savings.

Their approach includes configuring multi-layered alerting systems that cater to different stakeholders. For example, operational teams might need detailed technical alerts, while executives may prefer high-level summaries of cost impacts. They can also integrate anomaly detection with your DevOps workflows, setting up automated responses to scale down resources when anomalies occur, or creating dashboards that combine cost, performance, and availability metrics.

As your cloud usage evolves, detection algorithms and alert rules need regular updates. Hokstad Consulting provides ongoing reviews to keep your systems effective, typically achieving 30–50% cost reductions with well-implemented monitoring.

What sets them apart is their 'no savings, no fee' model. Their fees are capped as a percentage of the savings achieved, making their expertise accessible even to organisations with limited budgets. This ensures their focus remains on genuine cost reductions, not just deploying tools.

To ensure long-term success, Hokstad Consulting also provides hands-on training for your internal teams. They’ll learn how to operate and maintain the detection system independently, with tailored documentation covering custom configurations, escalation protocols, and troubleshooting steps. This knowledge transfer reduces reliance on external support while empowering your team to manage costs effectively.

Conclusion and Key Takeaways

Summary of Key Points

Cloud cost anomaly detection acts as an early warning system, shielding your organisation from unexpected and excessive expenses. The most successful strategies combine strong data foundations, advanced detection methods, and well-coordinated response plans.

Establishing a clear baseline using historical data is essential. It allows sophisticated algorithms to differentiate between genuine anomalies and normal fluctuations in your cloud usage patterns. This accuracy ensures effective detection, even as business needs evolve.

When it comes to choosing between statistical methods and machine learning, the decision hinges on your organisation's complexity and the resources you have available. The best results come from aligning the detection method with your specific requirements.

Alerting systems work best when they balance sensitivity with practicality. Many organisations find success with a tiered alerting approach - escalating critical anomalies to senior decision-makers while handling routine alerts within operational teams. This ensures that attention is directed where it’s needed most.

These principles form the backbone of a well-rounded cloud cost optimisation strategy.

Final Thoughts on Optimising Cloud Costs

Effective anomaly detection is a powerful tool for keeping small cost increases from spiralling into large-scale budget challenges. By catching issues early, you can prevent minor daily overspending from accumulating into significant annual losses. When paired with real-time monitoring, regular cost reviews, automated scaling, and team training focused on cost awareness, it delivers measurable savings and improved efficiency.

Forward-thinking organisations integrate anomaly detection into their broader cost management strategies. Seeking expert guidance can make a big difference. Consultants like those at Hokstad Consulting bring hands-on experience from numerous implementations, helping you avoid common mistakes and tailor systems to your unique needs.

As cloud technologies continue to evolve, regularly reviewing and adapting your anomaly detection setup will not only improve your financial planning but also free up your technical teams to focus on innovation. This proactive approach turns cost management into a strategic asset, supporting sustainable growth and long-term success.

FAQs

How do I set the right alert thresholds for detecting cloud cost anomalies?

When setting up alert thresholds to catch cloud cost anomalies, it’s wise to start small. Begin with a conservative percentage of your estimated monthly expenses - something like 10%. As you collect more data and gain a clearer picture of your usual spending habits, you can fine-tune these thresholds to better suit your needs.

The key is to focus on notable changes rather than minor day-to-day variations. This prevents a flood of unnecessary alerts that could distract from genuine issues. Use your historical spending data to customise these thresholds, keeping in mind factors like seasonal trends or unique business activities that might impact costs. By regularly reviewing and tweaking your settings, you’ll ensure your alerts stay relevant and genuinely helpful.
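The sketch below follows that progression. It starts from 10% of the estimated monthly spend, then derives a threshold from observed day-on-day increases once there is enough history. Using the 95th percentile of those increases is an illustrative assumption rather than a standard. `method="inclusive"` is chosen so the result stays within the observed range.

```python
from statistics import quantiles

def initial_threshold(estimated_monthly_gbp: float, fraction: float = 0.10) -> float:
    """Conservative starting threshold: a fixed fraction of estimated monthly spend."""
    return estimated_monthly_gbp * fraction

def tuned_threshold(daily_costs_gbp: list[float], percentile: int = 95) -> float:
    """Threshold at the given percentile of historical day-on-day cost increases.

    Needs at least three days of history (two or more day-on-day changes).
    """
    increases = [max(0.0, b - a) for a, b in zip(daily_costs_gbp, daily_costs_gbp[1:])]
    cut_points = quantiles(increases, n=100, method="inclusive")
    return cut_points[percentile - 1]
```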

What advantages does machine learning offer over traditional statistical methods for detecting cloud cost anomalies?

Machine learning offers a powerful edge over traditional statistical methods in spotting anomalies in cloud costs. It can handle large, complex datasets with ease, picking up on subtle and unexpected irregularities by analysing historical spending trends. This results in more precise detection and fewer false alarms.

What’s more, machine learning models adjust dynamically to shifts in cost patterns, providing real-time and continuous oversight. This means businesses can manage costs more effectively and act swiftly when issues arise, keeping operations running smoothly.

How do UK-specific factors like currency changes and compliance standards affect cloud cost anomaly detection?

In the UK, certain factors add complexity to detecting anomalies in cloud costs. For instance, fluctuations in the GBP exchange rate can create confusion. A sudden shift in currency value might appear as an unusual billing spike, potentially triggering false alarms in detection systems.
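One way to stop exchange-rate moves from masquerading as spikes is to run detection on costs converted at a fixed reference rate and report the currency effect separately. The sketch below assumes costs are billed in USD. You supply the day's USD-to-GBP rate and a reference rate, such as a budget rate, from your own source.

```python
def split_fx_effect(usd_cost: float, rate_today: float, reference_rate: float) -> tuple[float, float]:
    """Return (GBP cost at the reference rate, GBP difference caused by the exchange rate)."""
    constant_rate_gbp = usd_cost * reference_rate
    actual_gbp = usd_cost * rate_today
    return constant_rate_gbp, actual_gbp - constant_rate_gbp

def constant_rate_series(usd_costs: list[float], reference_rate: float) -> list[float]:
    """Daily costs in GBP at a fixed rate, suitable as input to an anomaly detector."""
    return [cost * reference_rate for cost in usd_costs]
```

Take $1,000 of unchanged usage. A move in the rate from 0.78 to 0.85 adds £70 to the GBP bill, which shows up as a currency effect rather than as a usage anomaly.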

Another layer of complexity comes from regulations like the GDPR. These laws dictate how sensitive billing data must be handled, requiring tools to be configured with privacy and security in mind. This careful setup is crucial but can sometimes limit the tools' ability to spot genuine anomalies. Ensuring detection systems comply with these rules while maintaining accuracy is a key challenge for effective monitoring in the UK.