Integrating private cloud APIs with legacy systems allows businesses to modernise without replacing outdated infrastructure. This approach connects older systems to cloud services securely, enabling real-time data access, improved scalability, and reduced manual processes. However, challenges like compatibility issues, cybersecurity risks, and network complexities require careful planning.

Key Points:

- Private Cloud APIs: Secure interfaces for connecting legacy systems to cloud services within a controlled environment.

- Challenges: Outdated protocols, security vulnerabilities, and scalability limitations.

- Solutions:

  - Use API gateways for secure connections and protocol translation.

  - Implement containerisation to modernise specific functions.

  - Opt for phased migration (e.g., the Strangler Pattern) to minimise risks.

- Tools: Kubernetes for orchestration, service meshes for internal communication, and API gateways for traffic management.

- Security: TLS encryption, OAuth 2.0 for access control, and real-time monitoring for compliance.

By leveraging these strategies and tools, organisations can modernise operations while maintaining the stability of their existing systems.


What to Consider Before Integration

Before diving into integration, take a step back to evaluate your current systems and long-term goals. Skipping this step can result in security vulnerabilities and technical debt that’s hard to untangle later. The Government Digital Service warns: “Legacy systems... can make it hard for you to build a service that brings about real change because: it takes time and effort to move away from them; they do not support real-time queries and changes; they're expensive to license.” [6]

Assessing Your Legacy System

Start with a thorough technical audit of your existing setup. Ask yourself: can your current hardware and software handle real-time API queries, or are they held back by outdated data formats that complicate synchronisation? Also, review network configurations and DHCP settings to avoid IP conflicts during integration.

Legacy systems often fail to meet modern cybersecurity benchmarks, including UK GDPR requirements for data encryption and residency. If your system stores sensitive customer data, you’ll need to implement additional safeguards during the integration process. One effective strategy is the Strangler Pattern, which involves wrapping your legacy system in an API layer. This allows new code to interact with the old system while gradually replacing the backend, reducing risk and ensuring business continuity.

Don’t forget to review existing vendor contracts. Plan the transition well in advance of contract expirations to avoid expensive renewals. At the same time, assess the ongoing maintenance costs of your legacy system to ensure they align with your integration goals.

Once you've clarified the technical and security profile of your legacy system, you can move on to defining your integration objectives.

Defining Your Integration Goals

A clear set of objectives is essential to keep your project on track and avoid unnecessary scope expansion. Decide on your primary goals - whether it’s digital transformation, streamlining workflows, or cutting costs - and ensure they align with your broader organisational strategy. For example, if your goal is to enable AI-driven analytics, your API design should prioritise real-time data access and seamless queries.

Set measurable metrics for both technical and business success. On the technical side, define acceptable latency, API call volumes, and uptime targets. For business outcomes, establish KPIs like cost savings, faster deployment, or enhanced customer experience. With 89% of organisations expecting to increase their API usage in the next year [11], planning for scalability from the start is crucial.

“Strategy development begins with a simple question: what is the organisation trying to achieve with its APIs?” - IBM [8]

Identify specific use cases to guide your integration. Are your APIs primarily for internal data sharing, or are they customer-facing? Each scenario requires different considerations for security, performance, and scalability. Documenting these requirements in detail will help you avoid headaches down the line.

After defining your goals, turn your attention to the financial and staffing needs of the project.

Planning Your Budget and Resources

Integration projects often require running both legacy and cloud systems side by side during the transition, which can temporarily double your infrastructure costs. Plan your budget carefully to account for this hybrid model, and include a 1–2 month buffer in your timeline to handle potential technical challenges [6].

Beyond technical expertise, you’ll need a multidisciplinary team. This team should cover technical, legal, and commercial areas to address compliance with data sovereignty laws, manage vendor relationships, and handle the complexities of integrating old and new systems [6][13]. Many UK businesses find it helpful to bring in DevOps specialists on an hourly or retainer basis for senior-level guidance [12].

Finally, set a firm decommissioning date for your legacy systems in collaboration with all stakeholders. Without a clear deadline, there’s a risk the hybrid setup could become permanent, leading to higher long-term costs and security vulnerabilities [13]. Then perform a cost analysis, such as calculating unit costs per transaction or per user, to decide whether scaling your legacy system via an API or replacing it outright is the smarter choice, and therefore whether a phased migration or a complete overhaul suits you best.
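The following minimal Python sketch makes the unit-cost comparison concrete. Every figure and field name below is a hypothetical placeholder, not data from this article. The one-off migration cost is simply amortised over an assumed number of months so that both options can be compared per transaction.

```python
from dataclasses import dataclass


@dataclass
class CostScenario:
    """Monthly cost inputs for one option (hypothetical figures, in GBP)."""
    name: str
    fixed_monthly_cost: float      # licences, hosting, support contracts
    variable_cost_per_txn: float   # e.g. compute or gateway charges per call
    monthly_transactions: int
    one_off_cost: float = 0.0      # migration or rewrite effort
    amortisation_months: int = 1   # period over which the one-off cost is spread

    def unit_cost(self) -> float:
        """Total monthly cost divided by the monthly transaction volume."""
        amortised = self.one_off_cost / self.amortisation_months
        variable = self.variable_cost_per_txn * self.monthly_transactions
        return (self.fixed_monthly_cost + amortised + variable) / self.monthly_transactions


def cheaper_option(a: CostScenario, b: CostScenario) -> str:
    """Name of the scenario with the lower unit cost."""
    return a.name if a.unit_cost() <= b.unit_cost() else b.name


# Illustrative inputs only; replace with figures from your own audit.
legacy_via_api = CostScenario("scale legacy via API", 12_000, 0.002, 2_000_000)
replacement = CostScenario("full replacement", 8_000, 0.003, 2_000_000,
                           one_off_cost=120_000, amortisation_months=24)

print(f"{legacy_via_api.name}: £{legacy_via_api.unit_cost():.4f} per transaction")
print(f"{replacement.name}: £{replacement.unit_cost():.4f} per transaction")
print("Cheaper:", cheaper_option(legacy_via_api, replacement))
```

With these made-up inputs, scaling the legacy system costs £0.008 per transaction and replacement costs £0.0095. Different volumes, contract costs or amortisation periods can easily reverse that result.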

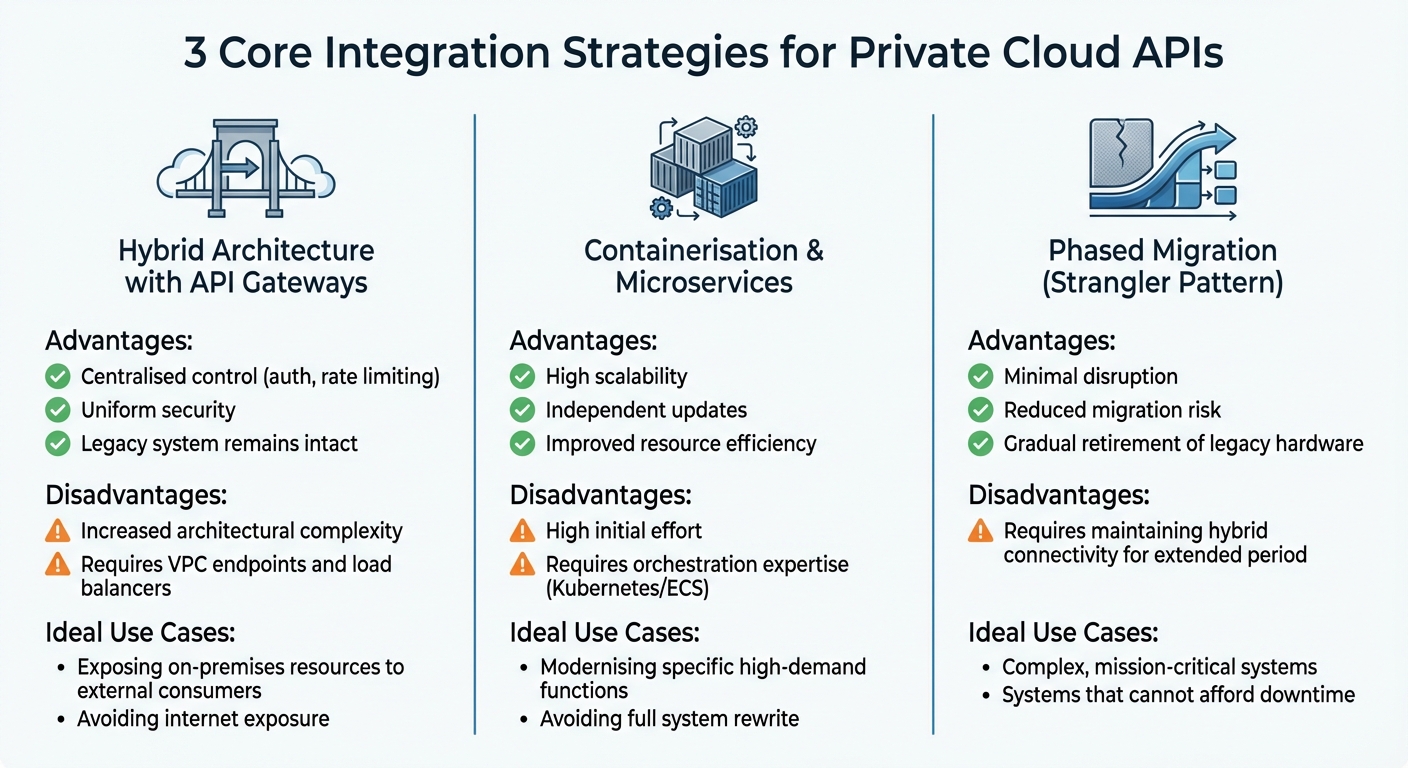

3 Core Integration Strategies

Figure: Comparison of Three Core Private Cloud API Integration Strategies

After evaluating your legacy systems and setting clear goals, the next step is to select the right integration strategy. Each approach comes with its own balance of complexity, risk, and long-term adaptability. The three key strategies to consider are hybrid architecture with API gateways, containerisation and microservices, and phased migration with data synchronisation. These methods build upon the foundational assessments you’ve already completed and cater to a variety of integration needs.

Hybrid Architecture with API Gateways

API gateways serve as a single entry point that connects your cloud services with legacy systems. They simplify backend complexities by acting as a bridge. This lets you make on-premises resources available to API consumers securely, without exposing the backend itself to the public internet [2][14]. This approach extends the functionality of your existing system while avoiding the costs and risks of a full replacement.

“API Gateway provides a layer of abstraction between the API consumers and the backend services, allowing for centralised control.” - AWS Compute Blog [14]

API gateways streamline tasks such as authentication, rate limiting, and monitoring while also handling protocol translation [14][16]. They are built to handle high traffic, managing hundreds of thousands of concurrent API calls, which makes them invaluable for hybrid setups [16]. For private cloud integrations, you can use VPC links and Network Load Balancers to ensure traffic never touches the public internet [2][14]. To reduce latency and secure dedicated bandwidth, consider using direct connections like AWS Direct Connect instead of standard VPNs [5].

However, this approach adds architectural complexity. Additional components like VPC endpoints and load balancers need careful configuration [2]. Despite this, it’s an excellent choice when keeping your legacy system intact is a priority, and a full rewrite isn't practical.
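Rate limiting is one of the gateway duties described above. The sketch below shows the idea using a token bucket, one common algorithm for this, inside a toy gateway that also checks an API key before forwarding to a backend.

This is illustrative Python only, not how Amazon API Gateway or any other product is implemented. The class names, key set and backend callable are all hypothetical, and a real gateway would be configured rather than hand-written.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ToyGateway:
    """Hypothetical gateway: authenticate, rate-limit per key, then forward to the legacy backend."""

    def __init__(self, api_keys: Iterable[str], backend: Callable[[str], str],
                 rate_per_sec: float = 5.0, burst: int = 10,
                 clock: Callable[[], float] = time.monotonic):
        self.api_keys = set(api_keys)
        self.backend = backend
        self.rate, self.burst, self.clock = rate_per_sec, burst, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def handle(self, api_key: str, path: str) -> Tuple[int, str]:
        if api_key not in self.api_keys:
            return 401, "unauthorised"
        bucket = self.buckets.get(api_key)
        if bucket is None:  # one bucket per client key, created on first use
            bucket = self.buckets[api_key] = TokenBucket(self.rate, self.burst, self.clock)
        if not bucket.allow():
            return 429, "too many requests"
        return 200, self.backend(path)
```

The injectable `clock` makes the limiter easy to test deterministically. Passing a fake clock that you advance by hand lets you check that a burst is allowed, that the next call is refused with 429, and that capacity returns as time passes.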

Containerisation and Microservices

If scalability is a challenge with your legacy system, containerisation offers a way to modernise specific functionalities without overhauling the entire system. This involves breaking down monolithic systems into smaller, independent services - each handling a specific function. Tools like Docker and orchestration platforms like Kubernetes are commonly used for this [5][15].

Containerisation allows for flexible scaling and independent updates, making it easier to address high-demand functions. However, decomposing a monolithic system requires significant effort and expertise in managing container orchestration platforms [5]. Dependencies between services must be carefully mapped, and your team will need the skills to handle the complexities of this architecture.

This strategy works best when your legacy system struggles to scale or adapt efficiently, and when you’re ready to invest in the upfront effort required for refactoring [5].
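Mapping dependencies before decomposition can be partly automated. The sketch below uses Python's standard-library `graphlib` for two jobs:

- turning a hypothetical map of module dependencies into an order where each function is extracted only after the modules it depends on;
- flagging circular dependencies that must be broken first.

The module names are invented. Dependencies-first ordering is only one possible sequencing heuristic.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Set

# Hypothetical monolith modules mapped to the modules each one depends on.
DEPENDENCIES: Dict[str, Set[str]] = {
    "reporting": {"billing"},
    "billing": {"customer_records", "pricing"},
    "pricing": {"product_catalogue"},
    "customer_records": set(),
    "product_catalogue": set(),
}


def extraction_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Order modules so that every module comes after all of its dependencies."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as exc:
        # graphlib puts the nodes forming the cycle in exc.args[1].
        raise ValueError(f"circular dependency must be broken first: {exc.args[1]}") from exc


print(extraction_order(DEPENDENCIES))
```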

Phased Migration with Data Synchronisation

Gradual migration is a safer route to modernisation, especially for mission-critical systems. The Strangler Pattern is a method where specific functions of your legacy system are gradually replaced with new cloud-based services while the remaining features continue to operate [5]. This allows traffic to be routed to the appropriate services as migration progresses.

“Gradually replacing specific functions with new applications and services is known as a 'strangler pattern.'” - AWS Architecture Blog [5]

This approach reduces disruption for users and lowers the risk of migration compared to a full system replacement [5]. It’s particularly effective for complex systems where downtime is not an option. However, maintaining hybrid connectivity during the transition can increase short-term costs [5].
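The routing decision at the heart of the Strangler Pattern can be sketched as a small façade. Requests whose path prefix has been cut over go to the new cloud service, and everything else still reaches the legacy system. In practice an API gateway or routing layer would hold this mapping. The class below is a hypothetical in-process illustration with made-up paths and handlers.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


class StranglerRouter:
    """Sends cut-over path prefixes to new services; everything else goes to legacy."""

    def __init__(self, legacy: Handler):
        self.legacy = legacy
        self.migrated: Dict[str, Handler] = {}

    def cut_over(self, prefix: str, handler: Handler) -> None:
        self.migrated[prefix.rstrip("/")] = handler

    def roll_back(self, prefix: str) -> None:
        # Rolling back just removes the route; legacy still handles the path.
        self.migrated.pop(prefix.rstrip("/"), None)

    def route(self, path: str) -> str:
        # Match whole path segments, so "/invoices" does not capture "/invoices-archive".
        matches = [p for p in self.migrated if path == p or path.startswith(p + "/")]
        if matches:
            longest = max(matches, key=len)  # the most specific prefix wins
            return self.migrated[longest](path)
        return self.legacy(path)
```

Because a rollback only deletes one mapping, a misbehaving new service can be taken out of the path without redeploying anything. This is what keeps the risk of each step small.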

To ensure smooth operation, you can automate health checks by registering on-premises server IPs in target groups within your Network Load Balancer. This helps monitor performance and allows for seamless failover as legacy components are retired [14].
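Load balancer health checks typically mark a target unhealthy only after several consecutive failures. They mark it healthy again only after several consecutive successes, so a single dropped probe does not trigger failover. The sketch below models that threshold logic for a hypothetical group of on-premises IPs. The thresholds are illustrative and are not the defaults of any particular load balancer.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class TargetState:
    healthy: bool = True
    streak: int = 0  # consecutive check results that contradict the current state


class TargetGroup:
    def __init__(self, ips: Iterable[str], healthy_threshold: int = 3,
                 unhealthy_threshold: int = 3):
        self.targets: Dict[str, TargetState] = {ip: TargetState() for ip in ips}
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold

    def record(self, ip: str, check_passed: bool) -> None:
        state = self.targets[ip]
        if check_passed == state.healthy:
            state.streak = 0  # result agrees with the current state; nothing to change
            return
        state.streak += 1
        threshold = self.unhealthy_threshold if state.healthy else self.healthy_threshold
        if state.streak >= threshold:
            state.healthy = not state.healthy
            state.streak = 0

    def healthy_targets(self) -> List[str]:
        """Targets that should currently receive traffic."""
        return [ip for ip, s in self.targets.items() if s.healthy]
```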

| Strategy | Advantages | Disadvantages | Ideal Use Cases |
|---|---|---|---|
| Hybrid Architecture (API Gateway) | Centralised control (auth, rate limiting); uniform security; legacy system remains intact [5][14] | Increased architectural complexity; requires VPC endpoints and load balancers [2] | Exposing on-premises resources to external consumers without internet exposure [2][14] |
| Containerisation & Microservices | High scalability; independent updates; improved resource efficiency [5] | High initial effort; requires orchestration expertise (Kubernetes/ECS) [5] | Modernising specific high-demand functions without a full rewrite [5] |
| Phased Migration (Strangler Pattern) | Minimal disruption; reduced migration risk; gradual retirement of legacy hardware [5] | Requires maintaining hybrid connectivity for an extended period [5] | Complex, mission-critical systems that cannot afford downtime [5] |

Tools and Technologies for Integration

When it comes to integrating private cloud environments with legacy systems, choosing the right tools is just as important as having a solid strategy. The tools you select will directly impact how effectively private cloud APIs interact with older systems. The key categories to focus on include orchestration platforms for managing containerised applications, service meshes for secure and efficient internal communication, and API gateways for handling protocol translation and traffic management.

Kubernetes for Orchestration

Kubernetes serves as a powerful abstraction layer that simplifies connectivity between cloud-native applications and legacy systems. In containerised environments, pods are ephemeral, which can make it challenging for older systems to maintain consistent connections. Kubernetes addresses this by using Services, which provide stable IP addresses and DNS names that remain constant even as pods change [21]. This ensures that legacy systems can reliably connect to cloud resources without needing to track the lifecycle of individual containers.

The Kubernetes Gateway API, the successor to the older Ingress API, offers more expressive traffic routing. With resources like HTTPRoute and GRPCRoute, it enables advanced routing scenarios such as header-based matching and weighted traffic splitting [17]. This is especially useful during phased migrations, where traffic needs to be shifted gradually from legacy systems to cloud-based services. Kubernetes has become the go-to choice for orchestration, with over 50% of publicly accessible container platforms relying on it [19].
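Weighted traffic splitting, as used during a phased cut-over, comes down to choosing a backend with probability proportional to its weight. Gateway API expresses this declaratively through the `weight` on each of an HTTPRoute's `backendRefs`. The Python sketch below only simulates the effect, with hypothetical backend names and a 90/10 split.

```python
import random
from collections import Counter
from typing import Sequence, Tuple


def pick_backend(backends: Sequence[Tuple[str, int]], rng: random.Random) -> str:
    """Pick a backend name with probability proportional to its weight."""
    names = [name for name, _ in backends]
    weights = [weight for _, weight in backends]
    return rng.choices(names, weights=weights, k=1)[0]


# Send roughly 10% of requests to the new cloud service and the rest to legacy.
SPLIT = [("legacy-backend", 90), ("cloud-service", 10)]

rng = random.Random(7)  # fixed seed so the simulation is repeatable
tally = Counter(pick_backend(SPLIT, rng) for _ in range(10_000))
print(tally)
```

You can raise the cloud weight in steps while watching error rates, which is the gradual shift described above. A weight of 0 sends no traffic to that backend, which makes it a quick way to pull a new service out of rotation.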

To complement Kubernetes, service meshes add another layer of security and efficiency for internal traffic.

Service Mesh for Interoperability

Service meshes are essential for managing internal communication in complex integrations. While API gateways handle external (“north-south”) traffic, service meshes focus on internal (“east-west”) traffic between microservices [18]. Tools like Istio externalise network logic, such as retries, circuit breaking and load balancing, by offloading these tasks to a separate data plane of sidecar proxies [20]. This approach eliminates the need to embed resiliency patterns directly into application code, which is particularly helpful when working with legacy systems that were not designed with modern networking principles.
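To see what the mesh takes off your hands, here is circuit-breaking logic written out as plain Python. With Istio this behaviour is configured declaratively and enforced by the sidecar proxy rather than coded into each service. The class, thresholds and timeout below are hypothetical and only illustrate the closed, open and half-open states.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised instead of calling a backend that is currently considered down."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            # Open: fail fast rather than piling more load onto a struggling legacy system.
            raise CircuitOpenError("backend unavailable, failing fast")
        # Closed, or half-open once the timeout has passed: let the call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed half-open trial re-opens at once; otherwise open at the threshold.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result
```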

“Service meshes provide visibility, resiliency, traffic, and security control of distributed application services. They deliver policy-based networking for microservices in the constraints of virtual network and continuous topology updates.” - IBM Cloud Architecture [20]

Another critical feature of service meshes is mutual TLS (mTLS), which ensures encrypted and authenticated communication between services. This is crucial for safeguarding data exchanged between private cloud microservices and legacy backends, especially considering that around 66% of security breaches originate internally [4]. By adding this layer of internal security, service meshes play a vital role in protecting sensitive information during integration.
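Inside a mesh, the sidecars negotiate mTLS for you. Where a legacy-facing endpoint sits outside the mesh, the same requirement can be expressed directly. The sketch below uses Python's standard `ssl` module to build contexts that insist on certificates from both sides and on TLS 1.2 or later. The certificate, key and CA file paths are hypothetical, and issuing and rotating those certificates is out of scope here.

```python
import ssl
from typing import Optional


def mtls_server_context(cert_file: Optional[str] = None, key_file: Optional[str] = None,
                        client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side context that rejects clients without a certificate from a trusted CA."""
    # Start from a bare server context so only the CA loaded below is trusted for clients,
    # rather than every public CA in the system store.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse SSL and TLS 1.0/1.1
    ctx.verify_mode = ssl.CERT_REQUIRED            # the "mutual" part of mTLS
    if cert_file and key_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # this service's identity
    if client_ca_file:
        ctx.load_verify_locations(cafile=client_ca_file)  # CA that signs client certificates
    return ctx


def mtls_client_context(cert_file: str, key_file: str, server_ca_file: str) -> ssl.SSLContext:
    """Client-side context that verifies the server and presents its own certificate."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=server_ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```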

While service meshes address internal communication, API gateways take care of external traffic and protocol compatibility.

API Gateways for Protocol Translation

API gateways act as intermediaries, translating external traffic into formats that internal cluster services can understand. They support multi-protocol routing, including HTTP/HTTPS, gRPC, and TCP/UDP, which is invaluable when dealing with legacy systems that rely on outdated protocols [23].

“If your API needs advanced traffic control, authentication, and observability, an API gateway is a better choice than a simple Ingress controller.” - API7.ai [18]

API gateways also centralise critical functions like authentication, authorisation, and rate limiting. This simplifies integration with legacy systems that may lack modern security capabilities [22]. For private cloud environments, using Virtual Private Cloud (VPC) links and Network Load Balancers allows traffic to remain within a secure network, avoiding exposure to the public internet [2]. Popular API gateway solutions include Amazon API Gateway, Kong, and Apache APISIX, each offering a range of features suited to different integration scenarios [18].
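Format translation is usually configured in the gateway rather than hand-coded, but the underlying transformation is simple to illustrate. This minimal Python sketch turns a flat legacy XML record into JSON using only the standard library. The element names are hypothetical. Nested elements and attributes are ignored, and all values stay as strings for a downstream layer to type.

```python
import json
import xml.etree.ElementTree as ET


def legacy_xml_to_json(xml_text: str) -> str:
    """Translate a flat XML record (one level of child elements) into a JSON object."""
    # For untrusted input, a hardened parser such as defusedxml is safer than ElementTree.
    root = ET.fromstring(xml_text)
    record = {child.tag: (child.text or "").strip() for child in root}
    return json.dumps({root.tag: record})


legacy_payload = "<customer><id>42</id><name>Acme Ltd</name><status> active </status></customer>"
print(legacy_xml_to_json(legacy_payload))
```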

| Component | Primary Role | Key Benefit for Legacy Systems |
|---|---|---|
| Kubernetes | Orchestration & Scheduling | Provides stable endpoints [20][21] |
| Service Mesh | Inter-service Communication | Offloads security (mTLS) and resiliency tasks [20] |
| API Gateway | Protocol & Traffic Management | Simplifies protocol translation [21] |
| Gateway API | Advanced Routing | Enables sophisticated traffic splitting [17] |


Step-by-Step Implementation Guide

Integrating private cloud APIs with legacy systems can feel like a daunting task, but breaking it down into clear, structured phases can make the process much more manageable. By balancing technical execution with business priorities, you can ensure a smooth transition with minimal disruption. Here’s a closer look at how to move from planning to action in three key phases.

Phase 1: Assess and Plan

The first step is all about laying a strong foundation. This phase determines whether your integration will thrive or face challenges right from the start. Begin by thoroughly identifying and evaluating your existing legacy systems. How many systems need to interact with the new service? What level of changes will be required? These questions will help you gauge the scale of the task ahead and explore alternative data access options.

It's also crucial to address any technical constraints early. For example, you’ll need to consider network complexities like VPN or Direct Connect requirements and prioritise internal security measures from the outset. Cyber resilience isn’t something to leave for later.

“By understanding your unique priorities and challenges, we create strategies to maximise the value of change.” - Morson Praxis [24]

Adopt a business-focused mindset. Ask yourself: how will this integration drive growth? How will it modernise workflows and serve your target segments? Engaging stakeholders early is key to identifying hidden risks and ensuring your team has the expertise - whether contractual, legal, or technical - to move forward confidently. Also, resist the temptation to simply replicate old processes with new technology. Instead, focus on user needs and build workflows that reflect modern capabilities.

One practical tip: set a firm decommission date for the legacy system. This prevents delays and keeps everyone motivated. Allow a buffer of one to two months before contract expiration to account for any unforeseen challenges while maintaining momentum.

Phase 2: Build and Test

This is where the technical work begins. The goal here is to create API wrappers and middleware that allow legacy systems to communicate with modern interfaces. Using contemporary frameworks, start with the core API functionalities to minimise disruptions during the transition.

API gateways like Apigee or AWS API Gateway play a critical role at this stage. They act as a bridge, handling protocol translations, managing traffic, and enforcing security between legacy systems and private cloud environments [7][10]. In private cloud setups, VPC links are particularly useful for accessing private resources while keeping them shielded from the public internet [3][2].

“To bridge the gap between APIs and legacy systems, consider using middleware or API gateways. Middleware can connect older systems to modern technology, whilst gateways help manage traffic, security, and scaling effectively.” - Jeffrey Zhou, CEO and Founder, Fig Loans [7]

Testing is non-negotiable. This includes functional, performance, and usability testing, often starting with pilot runs to ensure system stability. Conduct load tests in parallel environments to see how the new API integrations impact legacy system response times. Automate data cleansing pipelines to handle inconsistencies, such as missing values or mismatched formats, before data reaches your cloud-native tools.
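An automated cleansing step can be as simple as a function applied to every record before it leaves the legacy boundary. The sketch below is one hypothetical example. It normalises field names, maps common "missing" markers to `None` and converts several legacy date formats to ISO 8601. The field names, markers and date formats are assumptions; substitute the ones your audit actually finds.

```python
from datetime import datetime
from typing import Any, Dict

# Hypothetical conventions observed in a legacy extract (day-first UK dates assumed).
MISSING_MARKERS = {"", "N/A", "NULL", "NONE"}
LEGACY_DATE_FORMATS = ("%d/%m/%Y", "%Y%m%d", "%d-%b-%Y")


def normalise_date(value: str) -> str:
    """Return an ISO 8601 date string, trying each known legacy format in turn."""
    for fmt in LEGACY_DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognised date format: {value!r}")


def clean_record(raw: Dict[str, Any]) -> Dict[str, Any]:
    """Normalise keys, blank out missing markers and standardise date fields."""
    cleaned: Dict[str, Any] = {}
    for key, value in raw.items():
        field = key.strip().lower()
        if isinstance(value, str):
            value = value.strip()
            if value.upper() in MISSING_MARKERS:
                value = None
        if field.endswith("_date") and value is not None:
            value = normalise_date(value)
        cleaned[field] = value
    return cleaned
```

Raising on an unrecognised date, rather than silently passing it through, keeps bad data out of the cloud side. It also gives your pipeline a clear record to route to a review queue.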

Once the build and testing phases are complete, the focus shifts to deployment and ongoing improvements.

Phase 3: Deploy and Optimise

Deployment is all about reducing disruptions while transitioning to the new system. A gradual approach, like the Strangler Fig pattern, can be particularly effective. This method involves wrapping legacy functionality in an API gateway and replacing it incrementally, avoiding the risks of a single, large-scale migration [25]. Industries like finance and manufacturing have used this approach to improve fraud detection and lower maintenance costs [25].

After deployment, monitoring becomes essential. Tools like Prometheus and Grafana can track metrics such as API call volume, latency, and error rates. To reduce latency, consider caching solutions like Redis, which can maintain state in stateless serverless environments [25]. Automated error handling is another game-changer, potentially cutting downtime by up to 75% [25].
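The caching idea here is cache-aside: check the cache, fall back to the slow legacy call on a miss, and store the result with an expiry. With Redis you would typically store entries with an expiry, for example via `SET` with the `EX` option. The in-memory Python sketch below stands in for that so the logic is self-contained. Its hit and miss counters are the kind of figures worth exporting to your monitoring. The class name and TTL are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Cache-aside with a fixed time-to-live; an in-memory stand-in for Redis."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, Any]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get_or_load(self, key: str, loader: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = loader()  # e.g. a slow call to the legacy backend
        self._store[key] = (now + self.ttl, value)
        return value
```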

| Migration Type | Description | Best Use Case |
|---|---|---|
| Rehost (Lift & Shift) | Moving workloads with minimal changes | Ideal for quick migrations when source code cannot be altered |
| Replatform (Lift & Optimise) | Lifting workloads and making minor optimisations for the cloud | Suitable for organisations seeking cloud benefits like elastic computing without a full rewrite |
| Refactor (Move & Improve) | Modifying code to leverage cloud-native features | Best when legacy architecture isn’t compatible with the target environment |
| Rebuild (Rip & Replace) | Decommissioning and rewriting the application from scratch | Necessary when legacy systems are too expensive to maintain or migrate |

Lastly, security should be layered. Apply security groups to elastic network interfaces (ENIs), resource policies to VPC endpoints, and TLS/HTTPS listeners to load balancers [2]. With this defence in depth, if one layer is compromised, the others continue to protect sensitive data during and after the integration.

Security and Compliance Requirements

When integrating private cloud APIs with legacy systems, ensuring security and compliance is a top priority. These integrations, while offering modernisation benefits, can expose unique vulnerabilities, particularly when outdated authentication methods are used in contemporary infrastructures. To protect sensitive data and maintain compliance, it's essential to adopt robust security measures throughout the integration process.

Data Residency and Encryption

In the UK, organisations must have a clear understanding of where their data resides and ensure compliance with all relevant legal frameworks. The Cyber Security and Resilience Bill, introduced in November 2025, proposes to expand the Network and Information Systems Regulations (NISR) to cover Managed Service Providers and data centres. Under its proposals, organisations would have to report incidents within 24 hours. Serious breaches could attract fines of up to £17 million or 4% of global annual turnover [28].

For secure data transmission, use TLS 1.2 or above. Older protocols such as SSL or TLS 1.0 are no longer acceptable [27][29]. In private cloud setups, configure TLS listeners on Network Load Balancers and Application Load Balancers to secure traffic between legacy systems and cloud APIs [2]. When dealing with sensitive information, anonymise or pseudonymise data to align with UK GDPR, particularly if the data is collected for monitoring purposes.
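One common way to pseudonymise identifiers before they reach monitoring or analytics systems is keyed hashing. The same input always maps to the same token, so records can still be joined, but the original value cannot be recovered without the key. The Python sketch below uses HMAC-SHA256 from the standard library.

Three caveats apply:

- The normalisation rule is an assumption.
- The key should come from your secrets manager, not from code.
- Pseudonymised data generally remains personal data under UK GDPR while the key exists.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable, keyed pseudonym (hex-encoded HMAC-SHA256)."""
    normalised = identifier.strip().lower()  # assumption: case and whitespace are not significant
    return hmac.new(secret_key, normalised.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder key for illustration only; load the real one from a secrets manager.
DEMO_KEY = b"replace-with-a-32-byte-random-key"
print(pseudonymise("Alice@Example.com", DEMO_KEY))
```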

To strengthen authentication, adopt OAuth 2.0 for delegated access and OpenID Connect (OIDC) for identity verification [26]. Store API credentials securely in dedicated secrets managers, backed by hardware-based solutions like HSMs or Cloud Key Management Systems (KMS). Avoid embedding credentials in source code and automate their rotation to minimise risks.

Access Controls and Monitoring

Beyond encryption, access control and activity monitoring play a critical role in securing integrations. Implement Role-Based Access Control (RBAC) using OAuth 2.0 to define precise scopes, such as read-only or write access [27]. Adhere to the principle of least privilege by granting users and processes only the access rights they need.
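Least privilege with OAuth 2.0 scopes comes down to a deny-by-default check. Each operation requires a specific scope, and the token's granted scopes must include it. The mapping and scope names below are hypothetical. In a real deployment, the gateway or authorisation server would validate the token first and pass on its `scope` value, which OAuth 2.0 defines as a space-delimited list.

```python
from typing import Dict, Tuple

# Hypothetical operation -> required scope mapping.
REQUIRED_SCOPES: Dict[Tuple[str, str], str] = {
    ("GET", "/customers"): "customers:read",
    ("POST", "/customers"): "customers:write",
    ("GET", "/invoices"): "invoices:read",
}


def is_authorised(method: str, path: str, scope_claim: str) -> bool:
    """Allow the call only if the token's scopes include the one this operation needs."""
    granted = set(scope_claim.split())  # OAuth 2.0 scopes are space-delimited
    required = REQUIRED_SCOPES.get((method.upper(), path))
    # Deny by default: an operation with no mapping is refused rather than allowed.
    return required is not None and required in granted
```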

“Enforce least privileges: grant users or processes the bare minimum access rights necessary for their tasks, thus restricting potential security breaches or malicious activities.” - NCSC [26]

Network isolation is another key measure. Use interface VPC endpoints and security groups to ensure traffic between legacy systems and private cloud APIs remains off the public internet [2]. For further credential security, move beyond simple bearer tokens to more secure options like signed JSON Web Tokens (JWTs) or certificates, which offer replay resistance [26].
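A signature proves that a token was issued by a key holder and has not been altered. On its own, though, it does not stop an intercepted token from being replayed. Short expiry times and single-use token IDs (`jti`) are what add replay resistance.

The standard-library sketch below shows both checks for HMAC-signed (HS256) JWTs. It is illustrative only. In production, prefer a maintained library such as PyJWT and, where possible, asymmetric keys or certificate-bound tokens. Store seen token IDs in shared storage rather than a Python set.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional, Set


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: Dict[str, Any], key: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = f"{_b64url(json.dumps(header).encode())}.{_b64url(json.dumps(claims).encode())}"
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return f"{signing_input}.{_b64url(signature)}"


def verify_jwt(token: str, key: bytes, now: Optional[float] = None,
               seen_ids: Optional[Set[str]] = None) -> Dict[str, Any]:
    """Check signature, expiry and (optionally) single use; raise ValueError on failure."""
    header_b64, payload_b64, sig_b64 = token.split(".")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")  # never let the token choose the algorithm
    expected = hmac.new(key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    now = time.time() if now is None else now
    if claims.get("exp", 0) <= now:  # tokens without an expiry are rejected too
        raise ValueError("token expired")
    if seen_ids is not None:
        jti = claims.get("jti")
        if jti is None or jti in seen_ids:
            raise ValueError("missing or replayed token id")
        seen_ids.add(jti)
    return claims
```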

Real-time monitoring is indispensable. Employ API gateways to log all requests involving personal or sensitive data, supporting UK GDPR compliance and enabling timely responses to data subject access requests [27][29]. Validate inputs rigorously with schema validation and type checking to guard against exploits targeting weak input validation [27]. Additionally, maintain clear API versioning (e.g., /v1/, /v2/) to manage updates without disrupting legacy integrations, and systematically phase out insecure endpoints [27][29].
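Schema validation at the API boundary rejects malformed input before it reaches a legacy backend that may not check it. In practice you would generate validators from your OpenAPI or JSON Schema definitions. The hand-rolled Python sketch below uses a hypothetical versioned schema for a "create customer" request. It shows the checks involved: required fields, types and unexpected fields.

```python
from typing import Any, Dict, List, Tuple

Schema = Dict[str, Tuple[type, bool]]  # field -> (expected type, required?)

# Hypothetical schemas keyed by API version, so /v2/ can change without breaking /v1/ callers.
SCHEMAS: Dict[str, Schema] = {
    "v1": {"name": (str, True), "email": (str, True), "credit_limit": (int, False)},
}


def validate(payload: Dict[str, Any], version: str) -> List[str]:
    """Return a list of validation errors (an empty list means the payload is acceptable)."""
    schema = SCHEMAS[version]
    errors: List[str] = []
    for field, (expected, required) in schema.items():
        if field not in payload:
            if required:
                errors.append(f"missing field: {field}")
            continue
        value = payload[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            errors.append(f"{field}: expected {expected.__name__}")
    for field in sorted(payload.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors
```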

Best Practices for Successful Integration

After implementation, adhering to these best practices can ensure your integration remains efficient, scalable, and prepared for future challenges.

Custom API Development and Automation

Starting with an API-first design is crucial for creating robust integrations that can handle future demands. Abstraction layers protect modern applications from disruptions caused by changes in legacy data sources: even if the underlying database changes, the API contract continues to work unchanged. For consistency and ease of maintenance, standardise on REST conventions and OpenAPI specifications. A machine-readable OpenAPI description simplifies automated testing and keeps documentation in step with the code. Custom APIs act as translators, converting outdated formats such as XML or SOAP payloads into JSON. To minimise risk, test new integrations in sandbox environments populated with synthetic data before they go live [27].
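The abstraction-layer idea can be shown with a small interface. The public API depends only on an abstract data source, and adapters translate each backend's schema into the same contract. Everything below is hypothetical, including the legacy column names. The point is that swapping the legacy adapter for the migrated one leaves the API response unchanged.

```python
from abc import ABC, abstractmethod
from typing import Dict


class CustomerSource(ABC):
    """The contract the public API relies on, whatever sits behind it."""

    @abstractmethod
    def get_customer(self, customer_id: str) -> Dict[str, str]:
        ...


class LegacyMainframeSource(CustomerSource):
    """Hypothetical adapter over a legacy schema with padded, abbreviated columns."""

    def __init__(self, rows: Dict[str, Dict[str, str]]):
        self.rows = rows

    def get_customer(self, customer_id: str) -> Dict[str, str]:
        row = self.rows[customer_id]
        return {"id": customer_id, "name": row["CUSTNM"].strip(), "postcode": row["PSTCD"].strip()}


class MigratedDbSource(CustomerSource):
    """Hypothetical adapter over the new cloud database."""

    def __init__(self, records: Dict[str, Dict[str, str]]):
        self.records = records

    def get_customer(self, customer_id: str) -> Dict[str, str]:
        record = self.records[customer_id]
        return {"id": customer_id, "name": record["full_name"], "postcode": record["postcode"]}


def customer_api(source: CustomerSource, customer_id: str) -> Dict[str, str]:
    # The response shape stays the same whichever backend is plugged in.
    return source.get_customer(customer_id)
```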

Hokstad Consulting offers expertise in custom development and automation. As they explain:

“We understand that off-the-shelf solutions don't work for everyone... we'll ensure your infrastructure is built to support it securely, reliably, and at scale.” [12]

Reducing Costs and Improving Performance

Balancing cost and performance is essential for successful integration. API wrappers and gateways are effective tools for modernising legacy systems while preserving their core functionality [7][8]. Maintaining on-premises systems, however, can incur substantial annual management costs [4]. A phased migration approach, where workloads are shifted gradually, helps reduce potential disruptions [9][10]. Performance can also be improved with techniques like caching and pagination, which prevent unnecessary strain on infrastructure [9][11]. Additionally, consumption-based pricing models mean you only pay for what you use, making expenses more predictable and aligned with actual resource demands [10].
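Pagination keeps a single request from pulling an entire legacy table through the integration. The sketch below shows cursor-based (keyset) pagination over an in-memory list sorted by a hypothetical `id` field. Against a database, the same idea is usually a query that filters on `id` greater than the last cursor, orders by `id` and applies a row limit.

```python
from typing import Any, Dict, List, Optional, Sequence, Tuple

Record = Dict[str, Any]


def paginate(items: Sequence[Record], page_size: int,
             cursor: Optional[int] = None) -> Tuple[List[Record], Optional[int]]:
    """Return one page of records (assumed sorted by 'id') and the cursor for the next page."""
    # Linear scan for the sketch; a database index on id makes the real query cheap.
    remaining = [item for item in items if cursor is None or item["id"] > cursor]
    page = remaining[:page_size]
    # Only hand back a cursor if more records exist beyond this page.
    next_cursor = page[-1]["id"] if len(remaining) > page_size else None
    return page, next_cursor
```

Unlike offset-based paging, a keyset cursor does not skip or repeat rows when records are inserted between requests. This matters when the legacy table is still being written to during migration.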

Hokstad Consulting provides cloud cost engineering services tailored to reduce expenses. Their flexible engagement options, ranging from hourly consulting to retainer-based support or even a “no savings, no fee” model, are designed to deliver measurable cost reductions [12].

Monitoring and Planning for Growth

Sustained success requires continuous monitoring and forward-thinking planning. Centralised observability tools make it easier to diagnose issues and analyse performance [31]. Setting measurable Service Level Objectives (SLOs) and Service Level Indicators (SLIs) - such as latency, error rates, and uptime - ensures that services consistently meet business needs [31]. Using Infrastructure as Code (IaC) enables scalable, consistent deployments while simplifying version control.
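To make SLIs and SLOs concrete, the Python sketch below computes three things: an availability SLI, how much of the error budget remains against an availability SLO, and a nearest-rank latency percentile. The request counts, latency samples and 99.9% target are invented for illustration.

```python
import math
from typing import Sequence


def availability_sli(total_requests: int, failed_requests: int) -> float:
    """Fraction of requests that succeeded."""
    return (total_requests - failed_requests) / total_requests


def error_budget_remaining(slo_target: float, total_requests: int, failed_requests: int) -> float:
    """Share of the error budget still unused (1.0 = untouched, 0 or less = exhausted)."""
    allowed_failures = (1 - slo_target) * total_requests
    return 1 - failed_requests / allowed_failures


def latency_percentile(samples_ms: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of latency samples, in milliseconds."""
    ordered = sorted(samples_ms)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


print(availability_sli(100_000, 50))
print(error_budget_remaining(0.999, 100_000, 50))
print(latency_percentile([120, 80, 95, 300, 110, 90, 100, 85, 105, 250], 95))
```

Here, 50 failures out of 100,000 requests give 99.95% availability. That uses half of the budget of 100 allowed failures under a 99.9% SLO.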

Automated testing plays a key role in identifying potential bottlenecks, especially during rapid development cycles. For instance, rate limit testing can uncover performance constraints before they become critical [30]. IBM highlights this shift in modern IT practices:

“The old 'break-fix' model just doesn't work in modern IT environments with greater customer demands, sprawling multi-cloud solutions, and fewer skilled employees to manage it all.” [31]

Conclusion

Integrating private cloud APIs with legacy systems has become a critical step for organisations aiming to modernise while preserving the value of their existing investments [1]. Success in this endeavour hinges on choosing the right tools and taking a phased approach that focuses on the business functions delivering the greatest impact.

The reported figures are striking. According to [32], organisations that adopt automated CI/CD pipelines and DevOps methodologies can achieve 75% faster deployments and 90% fewer errors. Streamlined infrastructure can cut downtime by up to 95%, and cloud cost engineering can trim infrastructure expenses by 30–50%. Deployments that once took six hours can be reduced to just 20 minutes [32]. These figures highlight the real-world advantages of thoughtful integration.

A hybrid architecture continues to be the go-to solution, enabling private clouds to support new services while legacy systems remain on-premises, linked through APIs or middleware. Breaking down monolithic systems into microservices, leveraging Infrastructure as Code (IaC), and implementing robust monitoring with clear SLOs are key to achieving scalability and resilience. The Strangler Fig pattern offers a proven, low-risk approach to gradually replace legacy functionalities [33][1].

Expert guidance can make all the difference in navigating these complex transitions. Hokstad Consulting, for example, brings expertise in DevOps transformation and cloud cost engineering. It offers flexible engagement options such as hourly consulting, retainers and even a “no savings, no fee” model. As they put it:

“Your cloud. Your terms. Backed by expert support.” [12]

Their vendor-neutral stance ensures that their recommendations are tailored to your unique requirements, not tied to any specific provider.

Moving forward, organisations must balance innovation with practicality. An API-first mindset, rigorous security measures, and partnerships with specialists who understand both legacy systems and modern cloud technologies can pave the way for agility and efficiency. This approach enables businesses to meet the demands of today’s fast-paced environment without compromising the dependability of their existing systems.

FAQs

What challenges can arise when integrating private cloud APIs with legacy systems?

Integrating private cloud APIs with legacy systems is no small feat, largely due to a mix of technical and operational hurdles. One major issue is technical incompatibility. Older systems often depend on outdated protocols and proprietary data formats that don’t mesh easily with modern APIs. Bridging this gap frequently demands custom-built solutions, which can complicate tasks like data mapping and schema translation.

Then there’s the matter of network and security challenges. Private cloud endpoints are usually safeguarded by virtual private networks (VPNs) or other secure configurations. While these measures are essential, they can also slow down the setup process and increase the likelihood of configuration errors. On top of that, performance bottlenecks can emerge when requests have to pass through multiple network layers, leading to delays - especially problematic for time-sensitive operations.

Addressing these challenges calls for meticulous planning, the use of robust middleware to handle data transformation, and the implementation of strict security protocols. Hokstad Consulting supports UK organisations in crafting effective integration strategies, helping to establish smooth and reliable connections between private cloud APIs and legacy systems without sacrificing performance or stability.

How do API gateways improve security when integrating with legacy systems?

API gateways play a crucial role in bolstering security by managing authentication and authorisation. This includes enforcing IAM policies or using custom authorisers. They also implement resource-level policies to regulate access and terminate TLS connections, ensuring incoming traffic is validated and filtered before reaching your legacy systems.

Serving as a centralised control hub, API gateways help reduce risks such as unauthorised access and harmful traffic, making the integration process both secure and efficient.

What is the Strangler Pattern, and how does it help reduce risks when integrating private cloud APIs with legacy systems?

The Strangler Pattern, inspired by the way a strangler fig tree grows around its host, offers a gradual method for modernising legacy systems. Instead of overhauling the entire system in one go, it shifts functionality piece by piece to new, updated services. A façade, like an API gateway, plays a key role by routing requests to either the legacy system or the newer components, ensuring everything runs smoothly during the transition.

This approach reduces risk by enabling incremental updates. Each new service can be tested with live traffic, making it easier to spot and address issues early. If a problem arises, it’s confined to the newly added component, which simplifies rollbacks and minimises disruption. The incremental process also surfaces hidden dependencies, allowing for more effective planning until the legacy system can be phased out completely. This is especially valuable for organisations managing private cloud environments in the UK.