Managing CI/CD pipelines across multiple cloud providers can be complex but essential for businesses aiming for flexibility and growth. Here's the crux:

- Multi-cloud CI/CD involves running pipelines across platforms like AWS, Azure, and Google Cloud, offering flexibility and avoiding vendor lock-in.

- Challenges include tool incompatibility, fragmented security, monitoring gaps, and cost management.

- Key strategies for success:

- Use Infrastructure as Code (IaC) tools like Terraform for consistency.

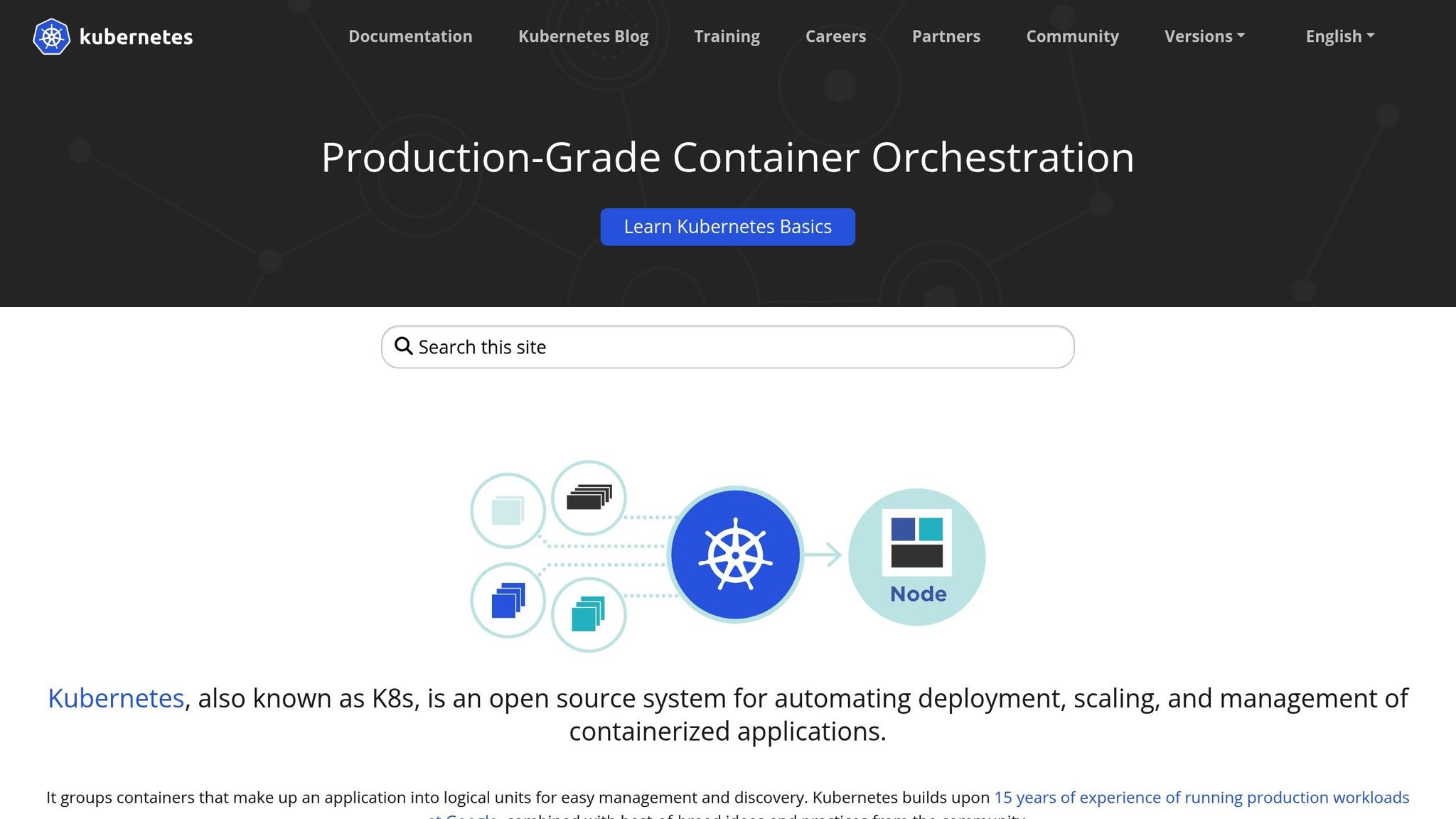

- Leverage Kubernetes for portability and orchestration.

- Apply cost-saving techniques like spot instances, ephemeral agents, and automated resource management.

- Standardise monitoring with tools like OpenTelemetry and enhance security with solutions like HashiCorp Vault.

- Advanced practices: Blue-Green and Canary deployments ensure smooth updates with minimal downtime.

Outcome: Scalable, reliable, and cost-efficient multi-cloud CI/CD pipelines that support business growth while maintaining control over complexity and expenses.

Accelerating Software Delivery: Building a Scalable CI/CD Pipeline

Main Strategies for Optimising Multi-Cloud CI/CD

Tackling the challenges of multi-cloud CI/CD requires methods that are precise, repeatable, and scalable.

Infrastructure as Code for Consistency

Infrastructure as Code (IaC) helps eliminate manual configuration errors when working across multiple cloud environments. Tools like Terraform and Pulumi allow you to define your infrastructure in code, creating a unified workflow for AWS, Azure, and GCP. This means you don’t need to become an expert in each provider’s APIs or consoles [4][2].

Treat your infrastructure like you would application code. By storing your IaC files in version control systems such as GitHub, you can track every change, roll back mistakes, and collaborate effectively [5]. Teams can even review changes via pull requests, with automated speculative plans

showing what will be modified before any changes are applied to production [5].

Policy-as-code adds another layer of control. Tools like OPA Gatekeeper and Terraform Sentinel integrate compliance checks directly into your IaC workflows. They automatically enforce security and tagging policies across all cloud platforms, catching issues before deployment [6][2]. Standardising resource naming and tagging conventions across providers can also simplify monitoring and cost management significantly [6][1].

For consistent secret management and identity handling across clouds, consider a solution like HashiCorp Vault [6][2].

Containerisation and Orchestration with Kubernetes

Kubernetes offers the abstraction layer needed for seamless multi-cloud deployments. Its standardised approach to application deployment ensures portability across providers.

Adopt GitOps workflows with tools like ArgoCD or FluxCD, which rely on Git as the single source of truth. This ensures that application states remain consistent across clusters and eliminates the risks associated with manual deployments [3]. A pull-based

model like this is especially valuable when managing environments across multiple clouds.

For production environments, prioritise regional clusters over zonal ones. Regional clusters distribute control planes across zones, ensuring the Kubernetes API remains available even during maintenance or outages [3]. This architectural choice is one reason 70% of enterprises using Kubernetes report better scalability and operational efficiency [3].

To optimise performance, enable Horizontal Pod Autoscaling to adjust container instances dynamically based on metrics like CPU or memory usage. Additionally, use NodeLocal DNSCache to avoid performance bottlenecks [3]. For security, disable default service account automounting on Pods that don’t need access to the Kubernetes API. This can significantly reduce unnecessary API interactions in large-scale environments [3].

These practices address many of the interoperability and scaling issues common in multi-cloud setups.

Resource Allocation and Load Balancing

Dynamic resource allocation is critical for avoiding both over-provisioning and performance bottlenecks. For example, Google Cloud's VPC-native networks can support up to 15,000 virtual machines in a single network, but only if resource allocation is carefully planned [3]. A good rule of thumb: match CPU capacity to expected IOPS, typically one CPU per 2,000–2,500 IOPS [3].

For scaling, use internal load balancers where appropriate [3]. Container-native load balancing, particularly with External Application Load Balancers, removes node limits entirely, making it ideal for large-scale operations [3].

Keep an eye on maximum egress bandwidth limits in Google Cloud, which range from 1 to 32 Gbps depending on the machine type. Right-sizing your instances is essential to avoid throttling during peak usage while managing costs effectively [3].

Next, we’ll explore the tools that support these strategies in detail.

Tools and Technologies for Multi-Cloud CI/CD

Selecting the right tools is key to building a multi-cloud CI/CD pipeline that's scalable and easy to maintain.

CI/CD Orchestration Tools Comparison

When it comes to managing the challenges of interoperability and varying APIs, Spinnaker is a standout choice for multi-cloud deployments. Designed specifically for orchestrating releases across AWS, Azure, and GCP, it offers built-in features like automated rollbacks and deployment strategies, making it particularly effective [2]. Unlike other tools, Spinnaker treats multi-cloud deployment as a core capability [2].

Choosing tools with native multi-cloud support simplifies the process of managing integrations, especially as cloud providers frequently update their APIs. This choice also lays the foundation for consistent monitoring and strong security across multiple cloud platforms.

Monitoring and Observability Solutions

After selecting your tools, effective monitoring becomes essential to maintain the health of your pipeline. OpenTelemetry has become the go-to standard for multi-cloud observability, offering a unified instrumentation layer that integrates seamlessly with backends like Datadog, Prometheus, or New Relic [9]. This flexibility ensures you're not tied to a single monitoring platform.

If your tooling is dictating your observability strategy, then you need to invert the approach. Tools are meant to enable and empower observability, not to limit your choices.- AWS Observability Guide [9]

For cost management, Kubecost provides real-time tracking of Kubernetes expenses, helping to avoid budget surprises [10]. Meanwhile, Datadog excels at offering a unified view across diverse cloud APIs, thanks to its SaaS-based platform [2][10].

To ensure consistency, standardise Service Level Indicators (SLIs) and Service Level Objectives (SLOs) across all providers. This approach allows you to measure pipeline health uniformly, no matter which cloud is running the workload [1][2]. Additionally, align your dashboards with business metrics - such as conversion rates or user satisfaction - rather than focusing solely on infrastructure data [9].

Security and Compliance in Multi-Cloud Environments

Strong security and compliance measures are just as important as monitoring in multi-cloud setups. HashiCorp Vault simplifies secrets management by offering just-in-time credentials, eliminating the need for static, provider-specific keys [6][8]. This reduces the risks associated with long-lived credentials across different clouds.

To streamline access control, adopt OpenID Connect (OIDC) or federated identity management. These approaches allow you to manage access across AWS, Azure, and GCP without creating separate IAM users for each platform [6][8]. By enabling your CI/CD platform to assume temporary roles dynamically, you can significantly reduce the attack surface.

Policy-as-code tools like Open Policy Agent (OPA) and Terraform Sentinel are invaluable for enforcing security and compliance rules before deployment [6][8]. Sentinel, for instance, operates during the Terraform plan stage, offering detailed, resource-level controls. On the other hand, AWS Service Control Policies (SCPs) enforce broader, account-level restrictions at execution time [8]. Use Sentinel for tasks like enforcing tagging and encryption standards, while SCPs can limit access to specific services or regions [8].

Need help optimizing your cloud costs?

Get expert advice on how to reduce your cloud expenses without sacrificing performance.

Cost Optimisation Techniques for Scalable CI/CD

::: @figure  {Multi-Cloud CI/CD Optimization Results: Before vs After Metrics}

:::

{Multi-Cloud CI/CD Optimization Results: Before vs After Metrics}

:::

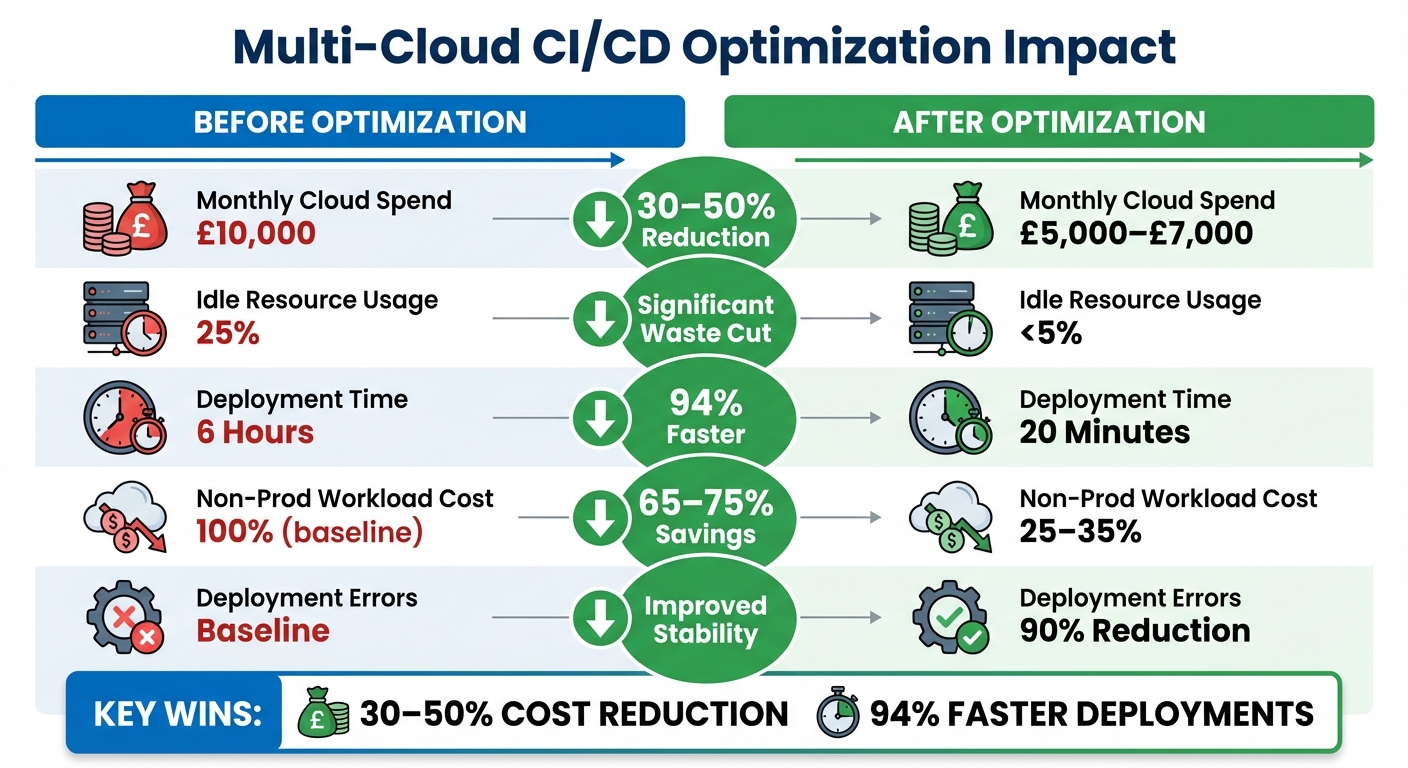

Keep your multi-cloud CI/CD pipelines efficient without letting costs spiral out of control by leveraging automation and closely monitoring cost metrics.

Reducing Cloud Costs Through Automation

Spot instances can save a lot on non-critical workloads. These are ideal for testing and development tasks where interruptions are tolerable. For more predictable production workloads, consider Savings Plans, which offer discounts while avoiding the unpredictability of spot instances [11]. Automation is key to cutting non-production costs significantly.

Ephemeral build agents are a game-changer for avoiding idle costs. Instead of keeping build servers running around the clock, you can spin up ephemeral agents for each task and shut them down immediately after. This approach eliminates waste, especially when combined with resource tagging for details like environment, owner, and project. Tags not only help with automated cleanup but also ensure accurate cost tracking across teams.

Multi-stage builds help streamline container images, ensuring they only include essential binaries and executables [7]. By standardising a ‘golden image’, you can reduce storage costs and minimise maintenance [7]. These automation tactics integrate seamlessly into broader cost-saving strategies.

Measuring Optimisation Results

How do you know if your cost-saving efforts are paying off? Focus on key performance metrics. Track cost per pipeline run to identify inefficiencies and keep an eye on idle time percentage to find resources that are sitting unused. Metrics like build duration trends and Mean Time to Recovery (MTTR) can reveal whether your pipeline is getting faster and more reliable.

| Metric | Before Optimisation | After Optimisation | Impact |

|---|---|---|---|

| Monthly Cloud Spend | £10,000 | £5,000–£7,000 | 30–50% Reduction |

| Idle Resource Usage | 25% | <5% | Significant Waste Cut |

| Deployment Time | 6 Hours | 20 Minutes | 94% Faster |

| Non-Prod Workload Cost | 100% | 25–35% | 65–75% Savings |

| Deployment Errors | Baseline | 90% Reduction | Improved Stability |

Set up alerts for low CPU usage (e.g., below 10% for 30 minutes) and budget thresholds to prevent unexpected cost spikes. Right-size your resources by basing instance sizes on actual CPU and memory usage, rather than peak estimates. Strive for 100% tagging compliance to maintain precise cost tracking across all resources.

Hokstad Consulting's Approach to Cost Engineering

Hokstad Consulting blends technical automation with smart cost management to deliver measurable savings and boost pipeline efficiency. Their tailored strategies can cut cloud costs by 30–50%, addressing waste in your CI/CD pipelines while improving performance.

Their process begins with a detailed cloud cost audit paired with DevOps transformation, identifying inefficiencies and implementing automation to reduce expenses without compromising quality. What sets them apart is their No Savings, No Fee

model - fees are capped at a percentage of the savings they achieve for you. This ensures their success is directly tied to yours.

Whether you need a one-time audit or ongoing support through a retainer, Hokstad Consulting’s expertise in hybrid and multi-cloud environments ensures your CI/CD pipelines run smoothly across AWS, Azure, and GCP.

Advanced Deployment Patterns for Scalability

After tackling cost optimisation, advanced deployment patterns take scalability and performance to the next level.

Blue-Green and Canary Deployments

Blue-Green deployments rely on two separate production environments: one active (Blue) and one staging (Green). The Blue environment manages all user traffic, while the Green environment is used for testing updates. When the new version in Green is verified, traffic is seamlessly switched over using load balancers or DNS. If an issue arises, traffic can be redirected back to Blue instantly, ensuring a quick rollback [12][14].

This approach drastically reduces downtime but comes with higher costs, as it requires maintaining duplicate infrastructure [12]. For multi-cloud setups, ensure database schema changes are backward-compatible. Using feature toggles allows both versions to function simultaneously during transitions, easing the process [12].

Canary deployments take a gradual approach by introducing the new version to a small portion of traffic - typically around 5%. This method allows you to monitor performance and error rates before scaling up. Tools like service meshes or advanced ingress controllers can handle the traffic splitting, particularly in multi-cloud environments [12].

| Feature | Blue-Green Deployment | Canary Release |

|---|---|---|

| Rollback Speed | Immediate (switch back) [12] | Fast (stop rollout) [13] |

| Cost Impact | High (requires 2x resources) [12] | Low (incremental scaling) [13] |

| Complexity | Moderate [14] | High (requires traffic splitting) [12] |

Once these deployment strategies are in place, it’s crucial to evaluate your pipeline’s performance to maintain scalability.

Performance Testing for Scalable Pipelines

After improving deployment practices, performance testing becomes critical to ensure scalability. Using a unified observability stack is key for standardising monitoring. This approach consolidates deployment health metrics into a single dashboard, avoiding fragmented data across multiple cloud providers [2][1][15].

Pay close attention to network latency between cloud providers and variations in service offerings, as these factors can distort performance results [1][15]. Always test under real-world traffic conditions rather than theoretical peak loads. Keep an eye on metrics like deployment time, resource usage, and error rates to pinpoint bottlenecks before they affect users.

Conclusion: Optimising Multi-Cloud CI/CD Pipelines

Summary of Main Strategies

To build scalable and cost-effective multi-cloud CI/CD pipelines, a few key strategies stand out. Start with Infrastructure as Code tools like Terraform to ensure consistent provisioning across environments. Use Kubernetes for container orchestration, offering portability, and adopt unified resource management to tackle the complexity of multi-cloud setups.

Cost optimisation is equally critical. Automate resource lifecycle management with features like scheduled shutdowns, ephemeral build agents, and TTL tags to minimise waste. Spot instances are a smart choice for non-critical builds, cutting costs by up to 90% compared to on-demand pricing. Additionally, AI-driven self-healing systems can significantly reduce downtime and operational expenses. Enforce governance through Policy as Code, which ensures idle resources are terminated and prevents oversized provisioning.

To measure success, track metrics across three key areas: delivery performance (DORA), team productivity (SPACE), and financial efficiency (FinOps). With 92% of enterprises already adopting multi-cloud strategies [16], incorporating regular financial reviews and weekly optimisation discussions can help maintain cost awareness and drive continuous improvement [17]. These practices create a strong foundation for expert-led cost and performance optimisation.

How Hokstad Consulting Can Help

Hokstad Consulting applies these principles to deliver tangible results for businesses. They specialise in DevOps transformation and cloud cost engineering, helping clients achieve savings of 30–50% on cloud expenses while speeding up deployment cycles. Their services include strategic cloud migrations with zero downtime, automated CI/CD pipeline implementation, and ongoing infrastructure monitoring.

Hokstad Consulting offers flexible engagement options, including a No Savings, No Fee

model, where their fees are tied to the savings they achieve for your business. Whether you need cloud cost audits, custom automation solutions, or AI-driven DevOps tools, they tailor their support to fit public, private, hybrid, and managed hosting environments.

Visit Hokstad Consulting to learn how their on-demand expertise can help optimise your multi-cloud CI/CD pipelines for better scalability and cost efficiency.

FAQs

What are the key challenges of managing CI/CD pipelines in a multi-cloud environment?

Managing CI/CD pipelines across several cloud platforms comes with its fair share of challenges. One of the biggest hurdles is maintaining consistent infrastructure and configurations. Each provider has its own set of APIs and tools, making it tricky to ensure everything aligns seamlessly.

Another issue is dealing with security and governance policies. When these policies are fragmented or not properly aligned, they can create vulnerabilities and increase risks.

Cost management is another area that can get out of hand. Without proper monitoring and optimisation, a multi-cloud setup could lead to unexpected expenses. On top of that, latency variations between providers can affect performance, adding another layer of complexity. Ensuring pipelines are both scalable and observable in such an environment demands thoughtful planning and strong solutions.

How does Infrastructure as Code ensure consistent setups across multiple cloud platforms?

Infrastructure as Code (IaC) lets you define and manage resources using reusable scripts, often with tools like Terraform or Pulumi. These scripts are version-controlled, ensuring that you can replicate the same configurations and provisioning across various cloud platforms, including AWS, Azure, and Google Cloud.

By automating processes, IaC removes the risk of manual errors, avoids configuration drift, and ensures consistent setups. This makes managing and scaling multi-cloud environments much more straightforward and efficient.

What are the advantages of using Kubernetes to manage CI/CD pipelines across multiple cloud platforms?

Kubernetes brings a range of advantages to managing CI/CD pipelines, especially in multi-cloud setups. One standout feature is its ability to ensure workload portability across platforms like AWS, Azure, and Google Cloud. This flexibility means organisations can avoid being tied to a single cloud provider, making it easier to adapt to changing needs.

Another strength lies in its automated scaling and self-healing features, which help maintain application availability. These capabilities reduce downtime and improve fault tolerance, ensuring systems run smoothly even under unexpected conditions.

Kubernetes also supports declarative deployments through tools like Argo CD. This approach simplifies rollbacks and streamlines pipeline management, saving time and effort. On top of that, by optimising resource allocation and offering better observability, Kubernetes helps manage cloud costs effectively without compromising performance or scalability.