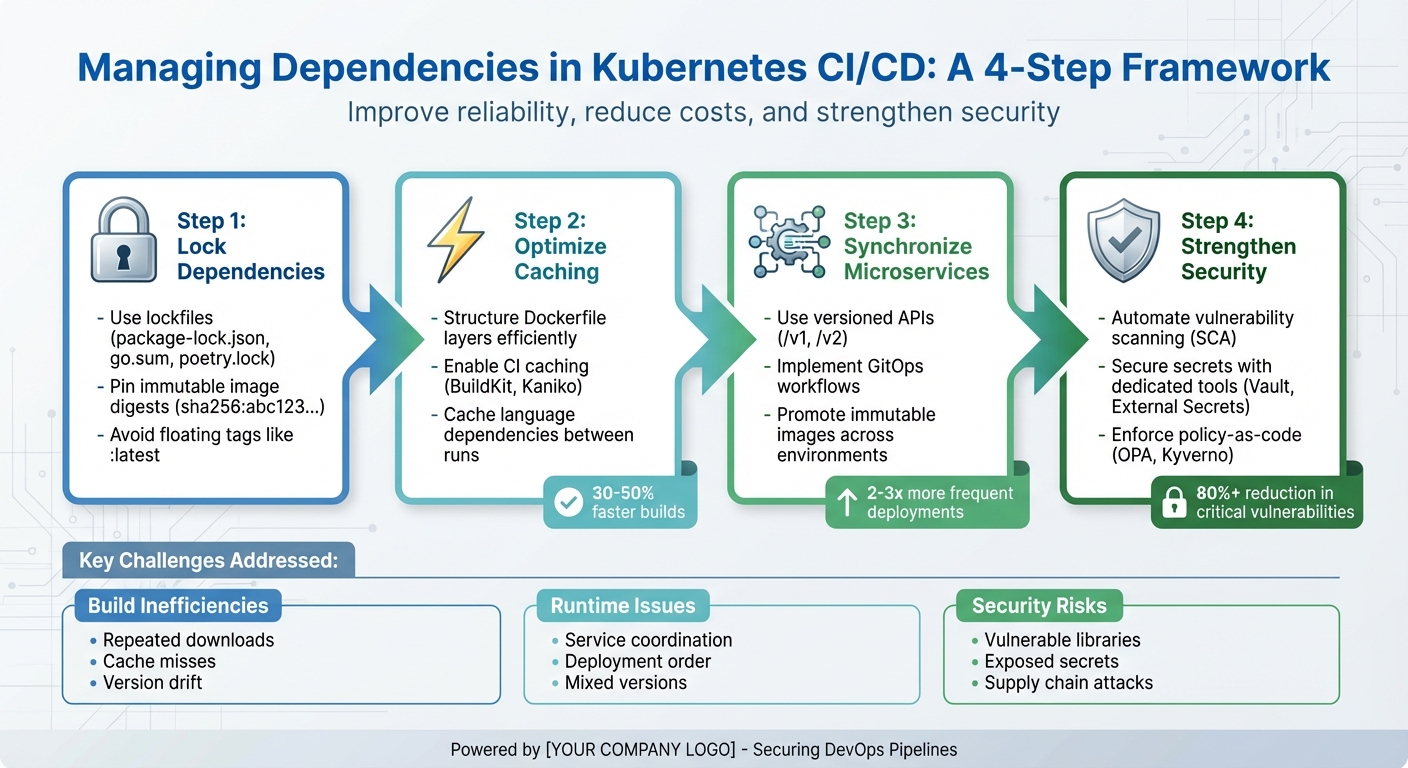

Dependency management in Kubernetes CI/CD pipelines is critical for maintaining stable builds, reducing costs, and ensuring security. Mismanaged dependencies can lead to pipeline failures, environment inconsistencies, and inflated cloud bills. Below are the main challenges and solutions:

Key Challenges:

- Build inefficiencies: Repeated downloads, cache misses, and version mismatches increase costs and build times.

- Runtime issues: Poor coordination of microservices and dependency updates causes crashes and failures.

- Security risks: Vulnerable libraries, outdated images, and mishandled secrets expose pipelines to attacks.

Solutions:

- Lock dependencies: Use lockfiles and immutable image digests to ensure consistency across environments.

- Optimise caching: Structure Dockerfile layers and enable CI caching to speed up builds and reduce costs.

- Synchronise microservices: Use versioned APIs, GitOps workflows, and immutable image promotions for reliable deployments.

- Strengthen security: Automate vulnerability scanning, secure secrets with dedicated tools, and enforce policies for dependency sources.

These practices not only improve reliability but also reduce cloud expenses and security risks. Start by identifying inefficiencies in your pipelines, and focus on implementing lockfiles, caching, and automated security checks. For complex setups, consulting experts like Hokstad Consulting can help streamline your pipelines and ensure compliance with UK regulations.

Figure: 4-Step Framework for Kubernetes CI/CD Dependency Management


Main Challenges in Kubernetes Dependency Management

Building on the earlier discussion of dependency pitfalls, this section examines the specific challenges that arise during the build, runtime, and security phases. In Kubernetes CI/CD pipelines, poor dependency management leads to slower builds, unstable runtimes, and greater security risk across microservices; tackling these issues reduces costs and speeds up deployments.

Build-Time Dependency Issues

One of the biggest frustrations during builds is repeatedly downloading the same dependencies. Without caching, every CI job re-downloads them, often hundreds of megabytes per run. On top of this, poorly structured Dockerfiles can invalidate the layer cache over minor changes, forcing full dependency reinstalls. Another common issue is version drift (differences in dependency versions between local, CI, and production environments), which leads to the infamous "works on my machine" problem.

For organisations in the UK managing multiple pipelines daily across numerous microservices, these inefficiencies can rack up significant costs. Longer-running CI jobs consume more Kubernetes CPU and memory, while repeated downloads inflate network egress charges. Monthly cloud bills can easily rise by hundreds or even thousands of pounds. To make matters worse, a lack of visibility into metrics like cache hit ratios, average build times, and registry traffic makes it tough to connect these technical inefficiencies to their financial impact.
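Connecting cache behaviour to cost does not need sophisticated tooling; a back-of-the-envelope calculation is often enough to start the conversation. The sketch below assumes a fixed dependency download size per build and a flat per-GB network charge. All figures in it (200 builds a day, 512 MB per build, a 25% cache hit ratio, £0.07 per GB) are hypothetical placeholders rather than benchmarks, to be replaced with your own CI metrics and your provider's pricing.

```python
"""Rough monthly cost of dependency downloads caused by cache misses (hypothetical inputs)."""

from dataclasses import dataclass


@dataclass
class PipelineStats:
    builds_per_day: int             # CI jobs across all services
    dependency_mb_per_build: float  # size of a full dependency download
    cache_hit_ratio: float          # 0.0-1.0, share of builds served from cache
    network_gbp_per_gb: float       # HYPOTHETICAL rate; use your provider's pricing


def monthly_redundant_download_gb(stats: PipelineStats, days: int = 30) -> float:
    """GB downloaded per month only because the cache missed."""
    misses = stats.builds_per_day * days * (1 - stats.cache_hit_ratio)
    return misses * stats.dependency_mb_per_build / 1024


def monthly_download_cost_gbp(stats: PipelineStats, days: int = 30) -> float:
    """Network cost of those redundant downloads, in pounds."""
    return monthly_redundant_download_gb(stats, days) * stats.network_gbp_per_gb


if __name__ == "__main__":
    stats = PipelineStats(
        builds_per_day=200,
        dependency_mb_per_build=512,
        cache_hit_ratio=0.25,
        network_gbp_per_gb=0.07,
    )
    gb = monthly_redundant_download_gb(stats)
    cost = monthly_download_cost_gbp(stats)
    print(f"{gb:.0f} GB/month re-downloaded, ~£{cost:.2f}")  # 2250 GB/month re-downloaded, ~£157.50
```

This covers network traffic only; CPU and memory time on CI runners would be estimated separately, for example from average build duration multiplied by runner cost.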

These build inefficiencies often set the stage for more complex runtime challenges.

Runtime and Inter-Service Dependencies

Microservices architectures introduce intricate runtime dependencies that are difficult to manage through CI/CD pipelines. For instance, an API change in one service might unexpectedly break downstream consumers. Deployment order becomes critical - rolling out a service before its dependencies are ready can lead to repeated pod crashes. Deploying in the wrong sequence might leave the system in a mixed-version state, causing inconsistent behaviour.

These coordination issues often escalate into production incidents. Pods may fail readiness checks and get rolled back automatically, error rates can spike on specific routes, and teams may struggle to pinpoint which service version combination caused the failure. In Kubernetes environments, where auto-scaling and frequent rollouts are the norm, keeping track of which service versions depend on others is a moving target. Strategies like blue/green or canary deployments add even more complexity, as pipelines must validate cross-service interactions while multiple versions run simultaneously.
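One way to avoid rolling out a service before its dependencies are ready is to derive the rollout order from a declared dependency graph. The following is a minimal sketch using Python's standard graphlib module (Python 3.9+). The service names and the DEPENDS_ON map are hypothetical; in practice such a graph might be versioned alongside the manifests in Git. Services in the same wave can roll out in parallel, and a dependency cycle raises graphlib.CycleError instead of producing an unsafe order.

```python
"""Derive a rollout order from declared service dependencies."""

from graphlib import TopologicalSorter

# Hypothetical services: each maps to the services it calls, which must be
# rolled out (and healthy) first.
DEPENDS_ON = {
    "checkout": {"payments", "inventory"},
    "payments": {"ledger"},
    "inventory": set(),
    "ledger": set(),
}


def rollout_waves(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group services into waves; every dependency sits in an earlier wave.

    Raises graphlib.CycleError if the graph contains a cycle.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(rollout_waves(DEPENDS_ON), start=1):
        print(f"wave {i}: {', '.join(wave)}")
```

Argo CD can express the same ordering declaratively with sync waves (the argocd.argoproj.io/sync-wave annotation). Ordering reduces, but does not remove, the need for each service to tolerate a dependency that is briefly unavailable.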

Beyond build and runtime problems, dependencies also carry security risks of their own.

Security Risks in Dependency Supply Chains

Third-party libraries, base images, and container images represent a significant attack surface in Kubernetes CI/CD pipelines. Outdated or vulnerable dependencies are prime targets for exploitation, and software supply-chain attacks increasingly exploit trust in upstream package repositories and image registries. Without robust CI/CD security checks, a single compromised dependency can put entire clusters at risk.

Another major concern is the mishandling of secrets. CI/CD jobs often require access to credentials for container registries, databases, message brokers, and external APIs. Common pitfalls include secrets being exposed in build logs, overly privileged service accounts, and unencrypted secrets stored in etcd. Managing and rotating these credentials across multiple clusters and environments - such as development, staging, and production - adds complexity and increases the risk of a breach. For UK organisations in regulated industries like finance, healthcare, and the public sector, such missteps can result in compliance violations and costly incident responses.

Specialist DevOps consultancies, such as Hokstad Consulting, provide tailored solutions for UK businesses. They help design secure pipeline workflows and enforce policies that balance robust security with the need for rapid deployment across public, private, and hybrid cloud environments.

Solutions for Better Dependency Management in Kubernetes CI/CD

With the main challenges identified, the next step is to adopt practical strategies that make dependency management quicker, safer, and more cost-efficient. The following approaches target inefficiencies during builds, streamline runtime coordination, and keep deployments consistent across environments.

Codify and Lock Dependencies

Leverage lockfiles for consistent builds. Most package managers generate a lockfile that records exact dependency versions, such as package-lock.json (npm) or poetry.lock (Poetry), while Go modules achieve the same with go.mod and go.sum. Make your CI/CD pipeline fail if these lockfiles are missing or out of sync with their manifests. This ensures that every environment - local, CI, staging, or production - installs exactly the same dependency versions, avoiding the dreaded "works on my machine" situation.
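A minimal sketch of the "fail if a lockfile is missing" rule, assuming lockfiles are committed next to their manifests. The manifest-to-lockfile mapping is an assumption to adapt: a pyproject.toml might belong to a tool with a different lockfile, and a go.mod with no external requirements has no go.sum. Detecting out-of-date lockfiles is better left to the package managers themselves, for example npm ci, which exits with an error when package.json and package-lock.json disagree, or running go mod tidy and then git diff --exit-code go.mod go.sum.

```python
"""CI guard: find dependency manifests that have no committed lockfile."""

from pathlib import Path

# Manifest -> lockfiles, any one of which satisfies the check.
# This mapping is an assumption; extend it for the tools your services use.
LOCKFILES = {
    "package.json": ("package-lock.json", "yarn.lock", "pnpm-lock.yaml"),
    "pyproject.toml": ("poetry.lock",),
    "go.mod": ("go.sum",),
}


def missing_lockfiles(repo_root: Path) -> list[Path]:
    """Return manifests under repo_root with no lockfile in the same directory."""
    missing = []
    for manifest_name, lock_names in LOCKFILES.items():
        for manifest in sorted(repo_root.rglob(manifest_name)):
            if "node_modules" in manifest.parts:
                continue  # skip installed third-party packages
            if not any((manifest.parent / lock).exists() for lock in lock_names):
                missing.append(manifest)
    return missing
```

A CI step could call missing_lockfiles(Path(".")), print each result, and exit non-zero whenever the list is not empty.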

Use immutable digests for base images instead of floating tags. Rather than relying on tags like python:3.12 or node:20-alpine, reference immutable digests such as python:3.12@sha256:abc123... in your Dockerfiles. Floating tags can unexpectedly pull newer images, leading to inconsistencies. Treat Dockerfiles as detailed dependency manifests, including the base image, system packages, and language runtime. Multi-stage builds can further isolate build-time tools (e.g., compilers) from the final runtime image, reducing both the image size and potential vulnerabilities.
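Digest pinning is easy to enforce mechanically. This sketch scans a Dockerfile's FROM instructions and reports any base image without an @sha256: digest, skipping scratch and references to earlier build stages. It is deliberately simple: it does not expand ARG-based image names or handle line continuations, and the digest in the example is a placeholder.

```python
"""Report Dockerfile base images that use a floating tag instead of a digest."""

import re

# Matches: FROM [--flag=value ...] <image> [AS <stage>]
FROM_RE = re.compile(
    r"^\s*FROM\s+(?:--\S+\s+)*(?P<image>\S+)(?:\s+AS\s+(?P<stage>\S+))?\s*$",
    re.IGNORECASE,
)


def unpinned_base_images(dockerfile_text: str) -> list[str]:
    """Return FROM images that are not pinned with an @sha256: digest."""
    stages = set()
    unpinned = []
    for line in dockerfile_text.splitlines():
        match = FROM_RE.match(line)
        if not match:
            continue
        image = match["image"]
        # "scratch" and earlier build stages are never pulled from a registry.
        is_stage = image.lower() in stages
        if image.lower() != "scratch" and not is_stage and "@sha256:" not in image:
            unpinned.append(image)
        if match["stage"]:
            stages.add(match["stage"].lower())
    return unpinned


EXAMPLE = """\
FROM --platform=$BUILDPLATFORM golang:1.22 AS build
RUN go build -o /app .
FROM gcr.io/distroless/static@sha256:<digest-placeholder>
COPY --from=build /app /app
"""

print(unpinned_base_images(EXAMPLE))  # ['golang:1.22']
```

Run against every Dockerfile in CI, a non-empty result is the signal to replace the tag with the digest the team has tested.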

For organisations managing multiple microservices, centralised base images maintained by a dedicated platform team can simplify consistency. Teams can rely on pre-approved, patched base images with curated dependencies, reducing duplication and ensuring compliance across the board. Companies like Hokstad Consulting specialise in helping UK businesses implement these standards across various cloud environments, whether public, private, or hybrid.

Locking dependencies is just the start. To speed up builds, optimised caching is key.

Optimise Caching for Faster Builds

Smart caching can dramatically reduce build times and cloud expenses. Structuring Dockerfile layers efficiently minimises rebuilds. Stable layers - like base images, OS packages, and dependencies - should come first, while frequently changing application code should be added later. For instance, copying only the manifest and lockfile (such as package.json and package-lock.json, or pom.xml) and then running npm install or mvn dependency:go-offline in its own layer ensures this step is repeated only when those files change. Tools like Docker BuildKit and Kaniko can then reuse these layers, cutting build times by 30–50% in many CI pipelines[2].
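Why ordering matters can be shown with a toy model of the layer cache, in which each layer's cache key is a hash of its parent's key, its instruction, and (for COPY steps) the copied file contents. This is a simplification of BuildKit's real behaviour, which also considers file metadata and build arguments, and the file names are hypothetical. The example uses npm ci, which installs exactly what package-lock.json specifies.

```python
"""Toy model of Docker layer caching: each layer's key depends on its parent."""

import hashlib

# Each step: (instruction text, files the step copies into the image).
GOOD_ORDER = [
    ("FROM node:20-alpine@sha256:<digest>", []),
    ("COPY package.json package-lock.json ./", ["package.json", "package-lock.json"]),
    ("RUN npm ci", []),
    ("COPY . .", ["package.json", "package-lock.json", "server.js"]),
]
BAD_ORDER = [
    ("FROM node:20-alpine@sha256:<digest>", []),
    ("COPY . .", ["package.json", "package-lock.json", "server.js"]),
    ("RUN npm ci", []),
]


def layer_keys(steps, files):
    """Chain a hash through the steps so any change invalidates all later layers."""
    keys, parent = [], ""
    for instruction, inputs in steps:
        h = hashlib.sha256((parent + "\n" + instruction).encode())
        for name in sorted(inputs):  # COPY steps also hash the copied content
            h.update(name.encode() + b"\0" + files[name].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps, before, after):
    """Indices of steps whose cache key differs between two source snapshots."""
    old, new = layer_keys(steps, before), layer_keys(steps, after)
    return [i for i, (a, b) in enumerate(zip(old, new)) if a != b]


before = {"package.json": '{"name": "api"}', "package-lock.json": "{}", "server.js": "v1"}
after = {**before, "server.js": "v2"}  # an application-code-only change

print(rebuilt_steps(GOOD_ORDER, before, after))  # [3]: npm ci stays cached
print(rebuilt_steps(BAD_ORDER, before, after))   # [1, 2]: npm ci reruns
```

With the broad COPY first, a one-line code change forces a full dependency reinstall; with the lockfile copied first, only the final layer is rebuilt.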

Enable Docker layer caching in your CI/CD setup. BuildKit, used through docker buildx build, offers --cache-from and --cache-to options to store and reuse cached layers in your container registry. Kaniko achieves similar results with its --cache and --cache-repo flags, which store cache layers in a registry repository. This avoids re-running unchanged build steps, including dependency downloads, on every build, saving both time and network bandwidth - an important cost-saving measure for teams running hundreds of builds daily.
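The cache flags are easiest to see in the commands themselves. This sketch only assembles argument lists for a CI job to execute. The registry and image names are hypothetical, and it assumes a buildx builder that supports registry cache export (the default docker driver may not) and the Kaniko executor image.

```python
"""Assemble build commands that persist layer cache in a registry (sketch)."""


def buildx_command(image: str, cache_ref: str, context: str = ".") -> list[str]:
    """docker buildx build that imports and exports a registry-backed cache."""
    return [
        "docker", "buildx", "build",
        "--cache-from", f"type=registry,ref={cache_ref}",
        # mode=max exports layers from all stages, not only the final image
        "--cache-to", f"type=registry,ref={cache_ref},mode=max",
        "--tag", image,
        "--push",
        context,
    ]


def kaniko_args(image: str, cache_repo: str, context: str = "dir:///workspace") -> list[str]:
    """Arguments for the Kaniko executor with registry layer caching enabled."""
    return [
        "--context", context,
        "--dockerfile", "Dockerfile",
        "--destination", image,
        "--cache=true",
        "--cache-repo", cache_repo,
    ]
```

A job could then run, for example, subprocess.run(buildx_command("registry.example.com/shop/api:1.4.2", "registry.example.com/shop/api:buildcache"), check=True). Keeping the cache reference stable across builds is what allows later runs to hit it.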

Cache language-specific dependencies between pipeline runs. Configure your CI system to store dependency caches for tools like npm (~/.npm), Maven (~/.m2/repository), pip (~/.cache/pip), and Go modules ($GOPATH/pkg/mod, by default ~/go/pkg/mod). Use a checksum of the lockfile as the cache key: if the lockfile hasn't changed, the CI runner restores the cache instead of downloading dependencies again. Hosting CI agents within the same Kubernetes cluster or VPC as your container registry can further reduce image pull and push latency, speeding up builds while keeping costs down.
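The lockfile-checksum cache key takes only a few lines; it is the same idea as the hashFiles('**/package-lock.json') expression in GitHub Actions. The prefix naming scheme here is an assumption, and paths should be passed relative to the repository root so that keys match across runners.

```python
"""Derive a CI dependency-cache key from lockfile contents."""

import hashlib
from pathlib import Path


def dependency_cache_key(prefix: str, lockfiles: list[Path]) -> str:
    """Unchanged lockfiles give the same key (cache hit); any edit gives a new key."""
    digest = hashlib.sha256()
    for path in sorted(lockfiles):  # sort so argument order does not matter
        digest.update(path.as_posix().encode() + b"\0")
        digest.update(path.read_bytes())
    return f"{prefix}-{digest.hexdigest()[:16]}"
```

For example, dependency_cache_key("npm-linux", [Path("package-lock.json")]) yields a key such as npm-linux- followed by 16 hex characters, which the CI system uses to save and restore ~/.npm.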

Coordinate Microservices and Environments

Runtime challenges often stem from poor service coordination and deployment strategies. Here’s how to bring order to the chaos.

API versioning and clear contracts are essential. To avoid breaking changes, use versioned endpoints like /v1 and /v2, and adopt a contract-first approach using OpenAPI specifications or gRPC Protocol Buffers definitions. Backwards compatibility is key - run old and new API versions side by side for a while, giving consumers enough time to migrate. This ensures smoother transitions and prevents runtime failures caused by premature updates.
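Contract checks can run in the same pipeline as the build. As a much-simplified illustration, the sketch below compares two versions of a response contract, represented here (hypothetically) as a field-to-type map, and reports the changes that would break existing consumers: removed fields and changed types. Adding a field is treated as safe, which assumes consumers ignore unknown fields. For real OpenAPI or Protocol Buffers contracts, dedicated tools such as buf breaking perform this check far more thoroughly.

```python
"""Detect breaking changes between two versions of a response contract (simplified)."""

# Hypothetical contract format: field name -> type name.
Contract = dict[str, str]


def breaking_changes(old: Contract, new: Contract) -> list[str]:
    """Changes that would break consumers written against the old contract."""
    problems = []
    for field, old_type in old.items():
        if field not in new:
            problems.append(f"removed field '{field}'")
        elif new[field] != old_type:
            problems.append(f"'{field}' changed type: {old_type} -> {new[field]}")
    # Fields present only in `new` are additive and treated as compatible.
    return problems


V1 = {"id": "string", "amount": "integer", "currency": "string"}
V2 = {"id": "string", "amount": "string", "currency": "string", "note": "string"}

print(breaking_changes(V1, V2))  # ["'amount' changed type: integer -> string"]
```

A non-empty result is the signal to publish the change under a new version, such as /v2, and keep /v1 serving until consumers have migrated.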

Multi-service pipelines and GitOps simplify complex deployments. Instead of deploying microservices individually, create pipelines that test and deploy related services together. Store Kubernetes manifests, Helm charts, and environment-specific configurations in Git. Tools like Argo CD or Flux can synchronise clusters automatically. This approach makes dependency changes transparent and auditable. For example, promoting a new image tag from staging to production becomes as simple as a Git commit and pull request. Surveys suggest teams using GitOps often deploy two to three times more frequently, with fewer failures[1].

Immutable image promotion ensures consistency across environments. Build and test each container image once, then promote the same immutable digest through all environments. Use ConfigMaps or external secret managers to handle environment-specific configurations. Paired with GitOps, this method simplifies rollbacks - just revert the Git commit and re-sync to return the system to a stable state. This not only improves reliability but also makes managing microservices at scale far more predictable.
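Promotion by digest can be sketched as a small transformation on parsed manifests: take the image reference that was tested in staging and write it, unchanged, into the production manifest while leaving production's own settings alone. The manifests here are hypothetical Python dicts (in practice they would be loaded from YAML in the GitOps repository), and the result would be committed and raised as a pull request. Kustomize's images field, which accepts a digest, is a common declarative alternative to this kind of script.

```python
"""Promote the exact image tested in staging to production, by digest."""

import copy
import re

DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")


def _container(manifest: dict, name: str) -> dict:
    """Find a container by name in a Deployment-shaped manifest."""
    for c in manifest["spec"]["template"]["spec"]["containers"]:
        if c["name"] == name:
            return c
    raise KeyError(f"container {name!r} not found")


def promote_image(staging: dict, production: dict, container: str) -> dict:
    """Return a copy of `production` whose image matches staging's digest."""
    image = _container(staging, container)["image"]
    if not DIGEST_RE.search(image):
        raise ValueError(f"refusing to promote a non-digest reference: {image!r}")
    promoted = copy.deepcopy(production)  # never mutate the input manifest
    _container(promoted, container)["image"] = image
    return promoted
```

Because only the image reference moves between environments, a rollback is the reverse operation: revert the commit that changed it.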


Securing the Dependency Supply Chain

Modern supply chain attacks have evolved, now targeting dependencies, build systems, and CI/CD tooling - not just application code. A compromised third-party library, a vulnerable base image, or an untrusted registry can introduce malicious code or exploitable vulnerabilities well before an application reaches production. For organisations in the UK, these risks can result in GDPR violations, regulatory fines, and expensive incident responses. To mitigate these threats, treat dependencies as part of the attack surface and adopt a "zero trust for dependencies" approach[3][4]. Securing the dependency supply chain is the final step in hardening Kubernetes CI/CD pipelines, complementing the build and runtime improvements covered earlier without sacrificing cost-efficiency.

Automated Scanning and Vulnerability Management

Incorporating Software Composition Analysis (SCA) and container image scanning into your CI pipeline is a must. Run SCA tools early: scan dependency manifests and lockfiles such as package-lock.json, requirements.txt, or pom.xml as soon as dependencies are resolved. Similarly, scan container images immediately after they are built. Set clear severity thresholds: block builds with critical or high vulnerabilities, while logging or issuing warnings for medium or low ones. This "shift-left" approach catches issues early, long before they reach production.
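The severity threshold itself is simple to express. This sketch assumes a report shaped like Trivy's JSON output (a Results list whose entries carry a Vulnerabilities list with VulnerabilityID and Severity fields); check the schema of the scanner you actually use. Trivy can also enforce a threshold directly with --severity CRITICAL,HIGH --exit-code 1, so a script like this is mainly useful when blocking and warning levels need different handling. Findings with other severities, such as UNKNOWN, are ignored here.

```python
"""Fail a CI stage when a scan report contains blocking severities."""

import json

BLOCKING = {"CRITICAL", "HIGH"}  # fail the build
WARN = {"MEDIUM", "LOW"}         # log only


def gate(report: dict) -> tuple[list[str], list[str]]:
    """Split findings into (blocking, warnings); assumes a Trivy-style layout."""
    blocking, warnings = [], []
    for result in report.get("Results", []):
        # "Vulnerabilities" may be absent or null for a clean target
        for vuln in result.get("Vulnerabilities") or []:
            entry = f'{vuln["VulnerabilityID"]} ({vuln["Severity"]}) in {result.get("Target", "?")}'
            if vuln["Severity"] in BLOCKING:
                blocking.append(entry)
            elif vuln["Severity"] in WARN:
                warnings.append(entry)
    return blocking, warnings


def gate_file(path: str) -> int:
    """Print findings from a JSON report file; return 1 if anything blocks."""
    with open(path, encoding="utf-8") as fh:
        blocking, warnings = gate(json.load(fh))
    for w in warnings:
        print("WARN ", w)
    for b in blocking:
        print("BLOCK", b)
    return 1 if blocking else 0
```

The return value of gate_file is intended to become the stage's exit code, so a blocking finding fails the pipeline while warnings remain visible in the log.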

For example, a CI pipeline can include a dedicated security scan stage. A Tekton task might pull a scanner image, securely handle registry credentials, and produce a machine-readable report for audits. A fintech company that implemented mandatory image scanning and registry whitelisting reduced high and critical vulnerabilities by over 80% within three months, saving thousands of pounds every quarter[3][4]. To avoid overwhelming developers, introduce age-based SLAs - for instance, addressing critical CVEs within seven days and high CVEs within 30 days. Integrate findings into collaboration tools with triage rules to group related issues, making them easier to manage.
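The age-based SLA idea translates directly into a check that could run on a schedule against open findings. This sketch hard-codes the example SLAs from the text (seven days for critical, 30 days for high); the finding records and their field names are hypothetical, and severities without an SLA are never flagged.

```python
"""Flag open findings that have exceeded an age-based remediation SLA."""

from datetime import date, timedelta

# Example SLAs from the text: critical within 7 days, high within 30 days.
SLA_DAYS = {"CRITICAL": 7, "HIGH": 30}


def overdue(findings: list[dict], today: date) -> list[dict]:
    """Return findings older than their SLA; severities without an SLA are skipped."""
    late = []
    for f in findings:
        limit = SLA_DAYS.get(f["severity"])
        if limit is not None and today - f["first_seen"] > timedelta(days=limit):
            late.append(f)
    return late


# Hypothetical findings
FINDINGS = [
    {"id": "finding-a", "severity": "CRITICAL", "first_seen": date(2024, 5, 1)},
    {"id": "finding-b", "severity": "HIGH", "first_seen": date(2024, 5, 1)},
    {"id": "finding-c", "severity": "MEDIUM", "first_seen": date(2024, 1, 1)},
]

print([f["id"] for f in overdue(FINDINGS, date(2024, 5, 10))])  # ['finding-a']
```

Posting only overdue items to a team channel, rather than every finding, is one way to keep the signal manageable for developers.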

Secure Secrets and Sensitive Configurations

Secrets should never be stored in code, container images, or CI logs. Instead, use a dedicated secrets manager such as HashiCorp Vault, AWS Secrets Manager, or GCP Secret Manager, optionally synchronised into clusters with the External Secrets Operator. Pipelines can fetch secrets at runtime and expose them through environment variables or mounted volumes. For instance, a GitHub Action or Tekton task can authenticate to a secrets backend using workload identity, retrieve database credentials just-in-time, and inject them into the pod environment without writing them to disk.
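CI platforms usually mask the secrets they manage (GitHub Actions, for instance, masks registered secrets in log output), but custom tooling that handles fetched credentials should take the same care. A minimal sketch using Python's standard logging.Filter: the secret values are supplied by the caller and are hypothetical here, and the filter does not redact exception tracebacks.

```python
"""Redact known secret values from log records before they are written."""

import logging


class RedactSecrets(logging.Filter):
    """Logging filter that replaces each registered secret value with ***."""

    def __init__(self, secrets: list[str]):
        super().__init__()
        # Drop empty values (they would match everywhere); longest first so a
        # secret that contains another secret is masked in full.
        self._secrets = sorted((s for s in secrets if s), key=len, reverse=True)

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merges %-style args into the message
        for secret in self._secrets:
            message = message.replace(secret, "***")
        record.msg, record.args = message, None
        return True  # keep the record; it is only rewritten
```

Attach it to the handler, for example handler.addFilter(RedactSecrets([db_password])) with a hypothetical db_password, rather than to a logger, because logger-level filters do not apply to records propagated from child loggers.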

Short-lived credentials are preferable to static access keys. Use OIDC-based federation or workload identity to issue tokens that automatically expire. Regularly rotate secrets and enforce stricter IAM policies across environments. For example, one organisation adopted Vault-backed secrets and removed sensitive information from Git repositories. When a source-code repository exposure occurred, audit logs confirmed that no production credentials were compromised, avoiding a costly incident response. Keep configuration data (non-sensitive information stored in Git) separate from secrets, and use tools like Sealed Secrets or SOPS to encrypt any sensitive data that must remain alongside manifests.
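Short-lived credentials work best when clients refresh them just before expiry rather than holding them indefinitely. In this minimal sketch, fetch stands in for whatever token exchange your platform provides (for example an OIDC or workload-identity flow) and is hypothetical, as is the 60-second refresh margin; the injectable clock exists to make the behaviour easy to test.

```python
"""Cache a short-lived credential and refresh it shortly before expiry."""

import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Credential:
    token: str
    expires_at: float  # Unix timestamp, seconds


class ShortLivedCredential:
    """Hands out a cached token, fetching a new one when expiry is near.

    `fetch` is a hypothetical callable standing in for your platform's token
    exchange (for example, OIDC federation or workload identity).
    """

    def __init__(
        self,
        fetch: Callable[[], Credential],
        refresh_margin_s: float = 60.0,
        clock: Callable[[], float] = time.time,
    ):
        self._fetch = fetch
        self._margin = refresh_margin_s
        self._clock = clock
        self._current: Optional[Credential] = None

    def get(self) -> str:
        now = self._clock()
        if self._current is None or now >= self._current.expires_at - self._margin:
            self._current = self._fetch()  # the old token is simply dropped
        return self._current.token
```

Because nothing is persisted, a leaked token from this cache is useful to an attacker only until its expiry, which is the main advantage over static access keys.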

These practices establish a strong foundation for enforcing strict policies on dependency sources.

Enforcing Policies for Dependency Sources

Policy-as-code tools can ensure that only approved libraries, container images, and registries are used. Tools like Open Policy Agent (OPA) with Gatekeeper or Kyverno allow you to enforce rules at admission time by evaluating pod specifications against predefined, version-controlled policies. Common rules include rejecting pods that use images from unapproved registries, requiring hardened base images, and blocking images tagged as latest.

Running the same policy engine during CI to validate Kubernetes manifests and Helm charts provides a two-tier defence - pre-deployment checks followed by in-cluster enforcement.
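The admission rules described above can be expressed as plain checks over a pod spec, which also shows what the CI tier of this two-tier defence evaluates. This is a sketch of the rule logic only, not OPA, Gatekeeper, or Kyverno syntax; those policies would be written in Rego or Kyverno's YAML format and run in CI with tools such as conftest or the Kyverno CLI. The approved registry prefixes are hypothetical. An image with neither tag nor digest is treated as an implicit latest, matching how container runtimes resolve it.

```python
"""Rule logic resembling common admission policies (plain Python, not Rego)."""

# Hypothetical allow-list of registry prefixes.
APPROVED_REGISTRIES = ("registry.example.co.uk/", "ghcr.io/example-org/")


def image_tag(image: str):
    """Return the tag of an image reference, or None if it has no tag."""
    ref = image.split("@", 1)[0]   # drop any digest
    last = ref.rsplit("/", 1)[-1]  # a ':' before the last '/' is a registry port
    return last.split(":", 1)[1] if ":" in last else None


def violations(pod_spec: dict) -> list[str]:
    """Check every container and init container in a pod spec."""
    problems = []
    for c in pod_spec.get("containers", []) + pod_spec.get("initContainers", []):
        name, image = c.get("name", "?"), c["image"]
        if not image.startswith(APPROVED_REGISTRIES):
            problems.append(f"{name}: registry not approved ({image})")
        # Without a digest, a missing tag resolves to 'latest' at pull time.
        if "@sha256:" not in image and image_tag(image) in (None, "latest"):
            problems.append(f"{name}: uses 'latest' (explicit or implicit)")
    return problems
```

In CI, the same function could be run over every pod template rendered from Helm charts or Kustomize overlays, failing the job on any violation before the admission controller ever sees it.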

Expert teams often treat policy bundles like internal security products, versioning and testing them as rigorously as application code. Firms like Hokstad Consulting, known for their DevOps and cloud expertise, have observed that combining supply-chain security controls with pipeline optimisation not only reduces security incidents but also leads to faster, more predictable deployments and measurable savings in cloud costs.

Conclusion: Simplify and Optimise Dependency Management for Kubernetes CI/CD Pipelines

Managing dependencies effectively in Kubernetes CI/CD pipelines is key to achieving fast, secure, and cost-efficient deployments. By codifying and locking dependencies with GitOps workflows, lockfiles, and image digests rather than floating tags, teams can ensure builds are reproducible and rollbacks are straightforward. These practices lay the groundwork for dependable and secure CI/CD operations.

On top of these essential strategies, improving build performance through caching plays a vital role. Proper caching not only cuts build times but also reduces operational costs. Coordinating microservices with versioned APIs and implementing progressive delivery strategies ensures stable inter-service dependencies during deployments. Meanwhile, integrating automated security checks and enforcing policies helps mitigate supply-chain risks and meet regulatory standards.

These techniques collectively enhance metrics that matter to UK leadership: higher deployment frequency, reduced change failure rates, shorter lead times, and quicker recovery times. They also provide the traceable and auditable records required for compliance. The result? Faster deployments, fewer errors, and lower cloud costs - delivering both technical reliability and business value.

Adopting these dependency management practices consolidates the benefits discussed throughout this article. UK organisations, especially those managing hybrid or multi-cloud setups or dealing with Kubernetes skills shortages, can benefit from automated, opinionated practices that reduce the need for deep platform expertise across all teams. Start by evaluating your current pipelines for issues like dependency sprawl, slow builds, and security gaps. Focus on high-impact changes first - locking dependencies, enabling caching, and adding automated security checks - before moving to more advanced techniques. Using an incremental, GitOps-driven approach allows teams to improve one service or environment at a time, keeping changes version-controlled and auditable.

If in-house resources or expertise are limited, partnering with specialists can speed up the process. Hokstad Consulting offers extensive experience in DevOps transformation, Kubernetes CI/CD design, and cloud cost optimisation. They assist UK organisations in implementing dependency-aware pipelines, fine-tuning build caching, and enforcing robust security practices, all while demonstrating financial savings in £ terms. Their services cover everything from strategic cloud migrations to custom automation and AI-driven DevOps tools, tailored to public, private, hybrid, and managed hosting environments. For organisations with complex microservices or strict compliance needs, experienced partners ensure that dependency management aligns with UK regulatory standards while delivering measurable improvements in cost and reliability.

FAQs

What role do lockfiles play in ensuring consistency in Kubernetes CI/CD pipelines?

Lockfiles are key to ensuring consistency in Kubernetes CI/CD pipelines by locking in specific dependency versions. This guarantees that every environment uses the exact same versions, avoiding discrepancies that might cause deployment issues or unexpected behaviour.

Because lockfiles record the exact resolved version of every dependency, including transitive ones, builds become predictable and repeatable, which cuts down on errors and streamlines the deployment process.

How does optimising caching benefit Kubernetes CI/CD pipelines?

Optimising caching in Kubernetes CI/CD pipelines shortens builds by reusing unchanged image layers and previously downloaded dependencies instead of rebuilding or re-fetching them on every run. Shorter builds mean quicker feedback, faster deployments, and a more responsive pipeline.

Better caching also reduces infrastructure costs: CI jobs spend fewer CPU-minutes on Kubernetes runners, and less data is pulled from package repositories and container registries on each run.

How does automation improve security in the supply chain?

Automated security checks scan every build for known vulnerabilities in dependencies and container images, so coverage does not depend on someone remembering to run a manual review. This minimises the chance of human error and surfaces issues in dependencies early.

When these checks are integrated into CI/CD pipelines with severity thresholds that block risky builds, threats are addressed before they reach production, strengthening your organisation's overall security posture.