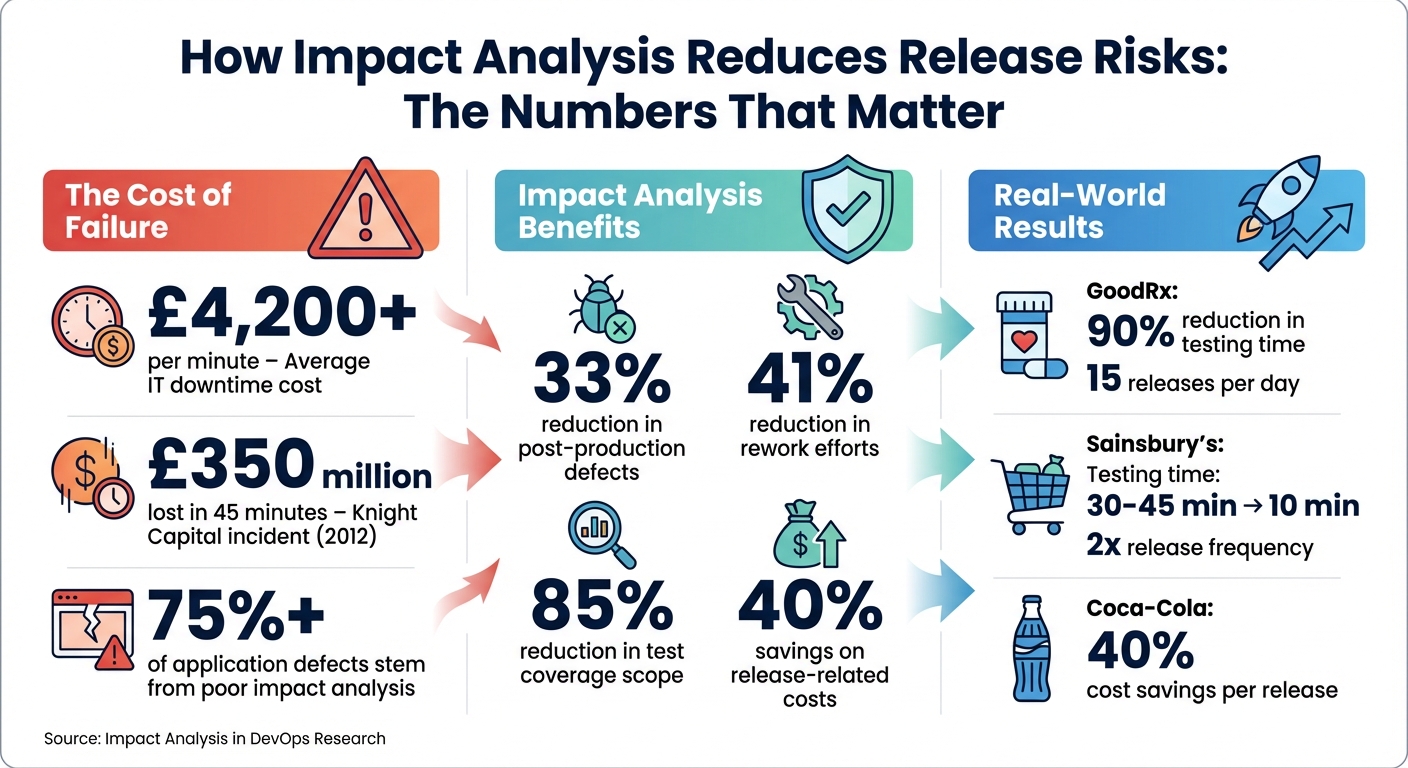

Every software release carries risks, from unexpected bugs to system failures. Impact analysis helps teams understand the potential consequences of changes before deployment, reducing these risks significantly. By identifying affected components, dependencies, and possible issues, teams can focus their efforts on testing and preparation, saving time and money. Here’s why it matters:

- Costs of failure: IT downtime costs over £4,200 per minute, with errors like the 2012 Knight Capital incident resulting in losses of £350 million in just 45 minutes.

- Defect prevention: Over 75% of application defects stem from poor impact analysis.

- Efficiency gains: Proper analysis can reduce post-production defects by 33% and rework by 41%.

Tools like Test Impact Analysis (TIA) and automated pipelines make it easier to identify risks, prioritise testing, and implement rollback strategies. Companies like GoodRx and Sainsbury's have used these methods to speed up releases and cut costs. By integrating impact analysis into DevOps workflows, organisations can ensure safer, faster, and more reliable software updates.

::: @figure  {Impact Analysis Benefits: Key Statistics for DevOps Release Risk Reduction}

:::

The Problem: High Release Risks in DevOps

The fast pace of DevOps often comes with a hidden cost - elevated risks that can disrupt even the most meticulously planned releases. When teams deploy code without fully grasping its broader impact, they’re essentially working in the dark. The fallout from such missteps can be significant, highlighting the importance of addressing these challenges head-on.

Common Causes of Release Risks

At the heart of many release failures are unexamined code changes. Teams often lack a clear picture of how their systems are interconnected. A minor adjustment - like modifying an API endpoint or a database field - can trigger a chain reaction, affecting integrations, third-party services, and dashboards. These "ripple effects" stem from hidden dependencies that only come to light when something breaks.

Manual processes further amplify these risks. Configuration errors, environment drift, and overlooked details frequently slip past traditional security measures. Third-party vulnerabilities, such as unpatched libraries or insecure container images, add another layer of risk. The fleeting nature of containers and the complexity of Kubernetes configurations make systems vulnerable unless actively secured by administrators.

Cultural factors compound these technical issues. Testing, at times, is viewed as a roadblock rather than a necessity, leading to the accumulation of security debt.

Tight deadlines often force teams to cut corners. Bill Dickenson, a seasoned consultant and former VP at IBM, emphasises the importance of maintaining code quality:

One goal of DevOps is to continue to improve the code base and not allow defective code to creep in and destroy the value of more frequent releases [5].

Consequences of Poor Release Management

The financial toll of downtime is staggering, costing over £4,200 per minute. This often results in expensive rework, with teams spending half of their maintenance time verifying fixes [5]. However, organisations that adopt proper impact analysis can significantly reduce these issues - cutting post-production defects by 33% and rework efforts by 41% [2].

Operationally, poor release management can throw teams into chaos. "Integration hell" becomes a reality when code merges at the end of a cycle create bottlenecks. Without proper impact analysis, teams either test everything - wasting time - or rely on users to report bugs in production. Both approaches lead to urgent fixes, which sap morale, cause burnout, and shift focus away from innovation.

The ripple effects extend beyond internal operations. For instance, GoodRx, a digital healthcare platform, faced these challenges before implementing automated impact analysis. By reducing its testing time by 90%, the company was able to release updates 15 times per day [3]. Similarly, Sainsbury's replaced manual testing scripts that took 30–45 minutes with automated ones, cutting execution time to just 10 minutes and doubling its release frequency [3].

Customer trust is another casualty of poor release practices. Unplanned outages erode user confidence, while repeated issues shake executive faith in DevOps teams. Tejas Ghadge, a senior engineering leader, captures the stakes perfectly:

It is a 'sin' if customers tell us about a problem in our system and we don't know about it [6].

What is Impact Analysis?

Impact analysis is a systematic way to evaluate the potential consequences of a change before it’s implemented. Think of it as a technical pre-flight checklist - it ensures teams have a clear understanding of what will happen when they modify code, update configurations, or roll out new features [1]. By identifying affected components, estimating the effort needed for testing, and highlighting downstream risks, this process helps teams effectively manage release risks within DevOps workflows.

At its heart, impact analysis seeks to answer three key questions: What will this change affect? How severe could the consequences be? And who needs to be informed? The process involves mapping out all elements related to the change - such as data models, APIs, third-party integrations, and workflows - and estimating the "blast radius" by assessing both the scope (who or what is affected) and the severity (potential for issues like revenue loss or compliance breaches).

For organisations, the benefits are clear. A thorough impact analysis can lead to a 33% reduction in post-production defects and a 41% decrease in rework [2]. Considering that IT downtime costs can exceed £4,200 per minute [1], understanding a change’s impact before deployment becomes not just useful but essential.

Core Components of Impact Analysis

Impact analysis typically relies on three interrelated techniques:

- Traceability analysis: Tracks connections between requirements, design elements, specifications, and tests to determine the full scope of a change [7].

- Dependency analysis: Examines relationships between code, variables, modules, and APIs to uncover detailed linkages [7].

- Experiential analysis: Leverages expert knowledge, team discussions, and engineering judgement to identify risks that automated tools might overlook [7].
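Dependency analysis in particular lends itself to automation. The following is a minimal sketch, assuming a hand-written dependency map (the component names and the `DEPENDS_ON` structure are hypothetical, not taken from any specific tool): it inverts the map and walks it breadth-first to list everything that directly or transitively depends on a changed component.

```python
from collections import deque

# Hypothetical dependency map: each key depends on the components listed.
DEPENDS_ON = {
    "checkout-api": ["orders-db", "payments-client"],
    "payments-client": ["payments-gateway"],
    "sales-dashboard": ["orders-db"],
    "email-service": ["checkout-api"],
}


def affected_by(changed, depends_on):
    """Return every component that directly or transitively depends on `changed`."""
    # Invert the map so we can walk from a component to its dependents.
    dependents = {}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, set()).add(component)

    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)

    # Guard against cycles reporting the changed component as its own dependent.
    seen.discard(changed)
    return seen


print(sorted(affected_by("orders-db", DEPENDS_ON)))
# ['checkout-api', 'email-service', 'sales-dashboard']
```

In practice the map would be generated from build files, import graphs, or service catalogues rather than written by hand; the traversal stays the same.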

To prioritise testing efforts, teams often use a risk matrix. This matrix assigns risk levels - high (red), medium (yellow), or low (green) - to different system components based on their importance [8]. This visual approach helps stakeholders quickly grasp the risks and ensures testing resources are focused where they’re needed most.
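A risk matrix of this kind can be sketched in a few lines. The example below assumes each component is scored from 1 to 3 for likelihood of failure and for business impact; the scoring scale, the thresholds, and the component names are illustrative assumptions rather than a standard.

```python
def risk_level(likelihood, impact):
    """Map 1-3 likelihood and impact scores to a colour band (thresholds are assumed)."""
    if not (1 <= likelihood <= 3 and 1 <= impact <= 3):
        raise ValueError("scores must be between 1 and 3")
    score = likelihood * impact
    if score >= 6:
        return "red"      # high risk
    if score >= 3:
        return "yellow"   # medium risk
    return "green"        # low risk


# Hypothetical components with (likelihood, impact) scores.
components = {
    "payments-gateway": (2, 3),
    "sales-dashboard": (2, 2),
    "help-page": (1, 1),
}

matrix = {name: risk_level(*scores) for name, scores in components.items()}

# List red components first so they are tested first.
order = {"red": 0, "yellow": 1, "green": 2}
for name in sorted(matrix, key=lambda n: order[matrix[n]]):
    print(f"{name}: {matrix[name]}")
```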

Another crucial element is defining a rollback strategy. Before deploying any change, teams should establish a "parachute" - a plan for reverting to a safe state if things go wrong. This could involve feature flags, version control reversions, or database restore points, ensuring any unexpected issues can be swiftly mitigated [1].
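As one illustration of the feature-flag option, the sketch below keeps the old code path in place and routes to the new one only while a flag is on, so rolling back is a flag change rather than a redeploy. The `FeatureFlags` class, the flag name, and the checkout functions are hypothetical; a production system would typically read flags from a flag service or configuration store.

```python
class FeatureFlags:
    """In-memory flag store, standing in for a real flag service."""

    def __init__(self):
        self._flags = {}

    def enable(self, name):
        self._flags[name] = True

    def disable(self, name):
        self._flags[name] = False

    def is_enabled(self, name):
        # Unknown flags default to off, so new code stays dark until enabled.
        return self._flags.get(name, False)


def legacy_checkout(total):
    return f"legacy:{total:.2f}"


def new_checkout(total):
    return f"new:{total:.2f}"


def checkout(total, flags):
    """Route to the new path only while its flag is on."""
    if flags.is_enabled("new-checkout"):
        return new_checkout(total)
    return legacy_checkout(total)


flags = FeatureFlags()
flags.enable("new-checkout")    # activate the change after deployment
flags.disable("new-checkout")   # the "parachute": revert without redeploying
print(checkout(10, flags))      # legacy:10.00
```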

How Impact Analysis Fits into DevOps

In DevOps environments, impact analysis is often integrated directly into CI/CD pipelines, helping teams catch risks early in the release process. By automating tasks like dependency discovery and risk assessment [3][4], teams can generate impact reports for every code submission. This shifts impact analysis from being a time-consuming manual task to a quick safety check that supports faster, more reliable releases.

A standout feature is Test Impact Analysis (TIA), which identifies only the parts of the system affected by a specific change. Instead of running exhaustive regression tests, teams can focus solely on the impacted components [3]. This targeted approach can reduce testing scope by up to 85%, significantly accelerating the release cycle. For example, The Coca-Cola Company’s IT team used automated impact analysis for their SAP transports, cutting business risks and saving about 40% on release-related costs [3].
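The core idea behind TIA can be shown with a small sketch. It assumes a coverage map recorded from an earlier full test run (the `COVERAGE` data, file paths, and test names are hypothetical) and selects only the tests that touched a changed file. Commercial TIA tools build and maintain this mapping automatically; this is only an illustration of the selection step.

```python
# Hypothetical coverage map: test name -> source files it exercised in an earlier run.
COVERAGE = {
    "test_checkout_total": {"checkout/cart.py", "checkout/pricing.py"},
    "test_refund": {"payments/refund.py"},
    "test_price_rounding": {"checkout/pricing.py"},
    "test_login": {"auth/session.py"},
}


def select_tests(changed_files, coverage):
    """Return the tests whose recorded coverage overlaps the changed files."""
    changed = set(changed_files)
    return sorted(test for test, files in coverage.items() if files & changed)


def unmapped_changes(changed_files, coverage):
    """Changed files that no test is known to cover."""
    covered = set().union(*coverage.values())
    return sorted(set(changed_files) - covered)


changed = ["checkout/pricing.py", "docs/README.md"]
print(select_tests(changed, COVERAGE))      # ['test_checkout_total', 'test_price_rounding']
print(unmapped_changes(changed, COVERAGE))  # ['docs/README.md']
```

A changed source file with no known coverage is a reasonable signal to fall back to the full suite, since the map cannot say what that change affects.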

Key Benefits of Impact Analysis

Impact analysis offers a range of practical benefits that can transform how teams manage changes in software development.

Faster Releases with Focused Testing

One of the standout advantages of impact analysis is its ability to steer teams away from the inefficient "test everything" approach. Instead of running lengthy and exhaustive regression tests, teams can zero in on the specific components affected by a change. Modern AI tools help identify which areas are at risk, allowing quality assurance (QA) efforts to focus where they're needed most [3].

By running only the tests relevant to a change, impact analysis tools can reduce testing scope by as much as 85% [3]. This streamlined process not only saves time but also speeds up release cycles. Many organisations have reported significant reductions in testing time and faster software launches [3].

The process prioritises test scenarios based on their importance and potential impact, ensuring that critical areas receive attention first. By clearly defining testing needs from the start, teams can create more predictable timelines for both testing and releases [2].

While quicker releases are a clear win, another major advantage of impact analysis is its role in safeguarding against production issues.

Minimising Production Failures

Impact analysis serves as an essential safety net to prevent disruptions in production. By mapping out how code, data models, APIs, and third-party integrations are interconnected, teams can identify and address potential risks before a minor change causes major downstream problems [1]. It categorises changes based on their scope and severity, helping teams anticipate and mitigate potential issues [1].

Skipping this step can lead to cascading failures, which can be both costly and damaging. The average cost of IT downtime exceeds £4,200 per minute [1], making it clear how vital it is to understand the potential consequences of a change.

According to a 2020 IBM report, over 75% of application defects stem from insufficient impact analysis [2]. Organisations that implement robust impact analysis processes have seen a 33% reduction in post-production defects and a 41% drop in rework efforts [2]. Additionally, impact analysis supports strategies like canary releases, where a small fraction of users (1%–5%) experience the update first. These approaches limit the fallout from bugs and allow for automated rollbacks if performance issues arise [6].
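One common way to pick the canary group is to hash a stable user identifier into a bucket, so the same user consistently sees the same version. The sketch below assumes string user IDs and a SHA-256 hash; the function name and the bucket granularity are illustrative choices, not a prescribed method.

```python
import hashlib


def in_canary(user_id, percent):
    """Deterministically place roughly `percent`% of users in the canary group."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the hash onto 10,000 buckets, giving 0.01% granularity.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100


users = [f"user-{i}" for i in range(10_000)]
canary_share = sum(in_canary(u, 5) for u in users) / len(users)
print(f"canary share at 5%: {canary_share:.1%}")
```

Because the bucket depends only on the user ID, raising the percentage from 1% to 5% keeps the original 1% in the canary and adds new users to it, which makes a gradual rollout easier to reason about.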

Saving Costs and Optimising Resources

The financial benefits of impact analysis extend far beyond preventing downtime. By pinpointing which components are affected by an upgrade, teams can optimise their testing budgets and make better use of available resources [3]. This precision allows for more accurate staffing and budgeting [2].

Automating impact analysis significantly reduces manual effort, cutting release-related costs by up to 40% [3].

The cumulative savings are impressive. Organisations leveraging impact analysis have reported doubling their release frequency [3]. At the same time, they’ve reduced tester wait times by up to 50% by using cloud-based testing tools integrated with impact analysis [3]. Considering that catching bugs early in the development cycle is far less expensive than fixing them later, the financial return on investment becomes undeniable.

Implementing Impact Analysis with Hokstad Consulting

Hokstad Consulting takes the concept of impact analysis and weaves it into DevOps workflows, helping businesses lower the risks associated with software releases.

Adding impact analysis to your DevOps workflow isn't just about using new tools - it’s about aligning technical processes with broader business goals. Hokstad Consulting specialises in embedding impact analysis into every stage of the software delivery lifecycle, from automating pipelines to optimising costs.

Custom CI/CD Pipeline Integration

Hokstad Consulting designs CI/CD pipelines that incorporate impact analysis at key decision points, reducing the risks tied to software releases. By applying "Observability as Code" (OaC) and automated rollback mechanisms, teams can respond quickly to issues with minimal manual input. These pipelines pinpoint which components are affected by code changes, allowing teams to focus testing on high-risk areas instead of running exhaustive tests across the entire application.

Key features of this approach include setting precise triggers, such as latency spikes or HTTP errors, to automatically initiate rollbacks. For example, JPMorgan Chase implemented a similar strategy and saw a 37% drop in incidents, avoiding losses of around £115 million in a single year thanks to automated rollbacks [citation needed]. Additionally, feature flags play a crucial role by separating deployment from activation, enabling teams to gradually roll out updates to small user groups and minimise the chance of large-scale disruptions.
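A rollback trigger of this kind can be expressed as a simple comparison between the new release and a baseline. The sketch below is illustrative only: the `Metrics` fields, the 1.5x latency ratio, and the 2% error-rate limit are assumed values, and real pipelines would read these figures from their monitoring system.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    p95_latency_ms: float
    error_rate: float  # share of requests returning HTTP 5xx, from 0.0 to 1.0


def rollback_reasons(baseline, current, max_latency_ratio=1.5, max_error_rate=0.02):
    """Return the triggers that fired; an empty list means the release can stay."""
    reasons = []
    if current.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        reasons.append("latency spike")
    if current.error_rate > max_error_rate:
        reasons.append("HTTP error rate")
    return reasons


baseline = Metrics(p95_latency_ms=200.0, error_rate=0.004)
after_deploy = Metrics(p95_latency_ms=340.0, error_rate=0.011)

reasons = rollback_reasons(baseline, after_deploy)
if reasons:
    print("rolling back:", ", ".join(reasons))  # rolling back: latency spike
else:
    print("release healthy")
```

Returning the reasons rather than a bare boolean keeps the decision auditable, which helps when reviewing why a release was reverted.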

This pipeline-centric strategy not only reduces risks but also creates a solid foundation for managing cloud costs and driving DevOps transformation.

Cloud Cost Engineering and Optimisation

Because impact analysis can increase computational demands, Hokstad Consulting addresses this challenge with solutions like right-sizing resources, automated scaling, and strategic purchasing models. By ensuring that cloud resources match actual workload needs, businesses avoid overpaying for unused capacity while maintaining the performance required for effective impact analysis.

Hokstad Consulting’s "No Savings, No Fee" model has proven effective in identifying inefficiencies and cutting monthly cloud costs significantly. Early implementation of tagging governance - categorising resources by project, environment, cost centre, and application owner - provides detailed insights into spending. Coca-Cola applied a similar AI-driven impact analysis approach during SAP upgrades, saving up to 40% per release by testing only the components that needed attention [3].
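Tagging governance is straightforward to check automatically. The sketch below assumes the four categories named above are stored as tag keys (the exact key spellings and the resource inventory are hypothetical) and reports which resources are missing any of them.

```python
# Assumed tag keys for the four categories; real key names vary by organisation.
REQUIRED_TAGS = ("project", "environment", "cost-centre", "application-owner")


def missing_tags(tags):
    """Return required tag keys that are absent or blank on a resource."""
    return [key for key in REQUIRED_TAGS if not tags.get(key, "").strip()]


# Hypothetical inventory: resource name -> tags.
resources = {
    "vm-checkout-01": {
        "project": "checkout",
        "environment": "production",
        "cost-centre": "CC-1042",
        "application-owner": "payments-team",
    },
    "bucket-test-artifacts": {"project": "checkout", "environment": ""},
}

for name, tags in resources.items():
    gaps = missing_tags(tags)
    if gaps:
        print(f"{name} is missing: {', '.join(gaps)}")
```

A check like this could run in the pipeline before provisioning, so untagged resources are flagged before they start generating unattributed spend.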

Tailored Solutions for DevOps Transformation

Hokstad Consulting goes beyond standard practices, crafting tailored strategies that integrate impact analysis with zero-downtime migrations and automation. By breaking large projects into smaller, manageable units and using iterative releases, businesses can deliver value faster while addressing potential issues early in the process. This approach has enabled teams to release updates more frequently and with less risk. For example, GoodRx implemented a customised strategy that reduced testing time by 90%, allowing for up to 15 releases per day [3].

Security and compliance are also key aspects of Hokstad Consulting’s approach. Their solutions integrate DevSecOps practices to ensure that all releases meet UK GDPR requirements. By monitoring DORA metrics - Deployment Frequency, Lead Time for Changes, Change Failure Rate, and Mean Time to Recovery (MTTR) - teams can continuously measure and improve pipeline performance. This comprehensive approach ensures that impact analysis becomes a seamless part of the release process, rather than an extra layer of complexity.
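The four DORA metrics can be derived from basic deployment records. The sketch below assumes each record holds a commit time, a deploy time, a failure flag, and an optional restore time; the `Deployment` structure and sample data are hypothetical, and it uses simple means where some teams prefer medians.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime
    deployed_at: datetime
    failed: bool = False
    restored_at: Optional[datetime] = None


def dora_metrics(deployments, period_days):
    """Compute the four DORA metrics over a reporting period."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    failures = [d for d in deployments if d.failed]
    recoveries = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        "deployment_frequency_per_day": len(deployments) / period_days,
        "mean_lead_time_hours": sum(lead_times, timedelta()).total_seconds() / 3600 / len(lead_times),
        "change_failure_rate": len(failures) / len(deployments),
        # None when nothing failed (or nothing has been restored yet).
        "mttr_minutes": (
            sum(recoveries, timedelta()).total_seconds() / 60 / len(recoveries)
            if recoveries else None
        ),
    }


day = datetime(2024, 1, 8, 9, 0)
sample = [
    Deployment(day, day + timedelta(hours=2)),
    Deployment(day, day + timedelta(hours=4)),
    Deployment(day, day + timedelta(hours=6), failed=True,
               restored_at=day + timedelta(hours=6, minutes=30)),
    Deployment(day + timedelta(days=1), day + timedelta(days=1, hours=4)),
]
print(dora_metrics(sample, period_days=2))
```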

Conclusion

Impact analysis plays a crucial role in achieving success within DevOps. It transforms release management from a process filled with uncertainty into one that's systematic and reliable. By pinpointing dependencies, assessing the potential blast radius, and testing rollback plans in advance, teams can steer clear of the chaos that often comes with unforeseen failures. As highlighted earlier, thorough impact analysis significantly reduces defects and the need for rework.

Focusing testing efforts on components directly affected by changes - rather than testing everything - delivers measurable benefits. This targeted approach speeds up release cycles while maintaining high-quality standards. Take GoodRx as an example: they’ve managed to release updates 15 times per day, all while reducing testing time by an impressive 90% [3].

The financial advantages are equally compelling. With downtime costs soaring and over 75% of application defects linked to poor impact analysis practices [2], investing in proper analysis quickly proves its worth. Coca-Cola’s adoption of these practices resulted in savings of up to 40% per release [3], showing that impact analysis not only mitigates risks but also drives cost efficiency.

For organisations looking to integrate impact analysis into their DevOps workflows, the way forward includes automation, collaboration across teams, and ongoing performance tracking. By adopting automated pipelines and focusing on targeted testing, businesses can achieve faster and safer releases. These practices not only strengthen stakeholder confidence but also help align DevOps strategies with broader business objectives, ensuring more streamlined and cost-effective operations.

FAQs

How does impact analysis help minimise the risk of software release issues?

Impact analysis plays a key role in minimising the risks associated with software releases. By carefully examining dependencies and forecasting the potential ripple effects of proposed changes, teams can spot risks early and tackle them before they escalate. This foresight helps ensure a more seamless release process.

Moreover, impact analysis aids in developing rollback plans and effective communication strategies. These elements are essential for swiftly addressing any unexpected problems. By taking this proactive approach, teams can reduce defects, minimise downtime, and enhance overall system reliability and recovery speed.

How does Test Impact Analysis (TIA) enhance release efficiency?

Test Impact Analysis (TIA) is a smart way to refine the testing process. It works by pinpointing the exact tests that are affected by specific code changes. Instead of running the entire test suite, TIA allows you to focus only on the tests that matter, cutting down testing time significantly and delivering quicker feedback.

By adopting this targeted approach, teams can speed up their release cycles while reducing the chances of missing critical issues. The result? More efficient testing and smoother, more dependable deployments.

How can impact analysis be incorporated into DevOps workflows to reduce release risks?

Integrating impact analysis into your DevOps workflows is a smart way to spot potential risks early, helping to ensure smoother releases while keeping disruptions to a minimum. By incorporating this step into your CI/CD pipeline, you can test changes in a controlled setting before deployment. Comparing these changes against baseline metrics allows you to catch issues before they escalate.

This approach often involves generating detailed impact reports, setting up testing environments, and automating rollbacks or fixes for high-risk problems. For organisations in the UK, Hokstad Consulting provides tailored expertise to refine these processes. Their solutions are designed to align with your release schedules and compliance requirements, making impact analysis more efficient and improving the overall deployment process.