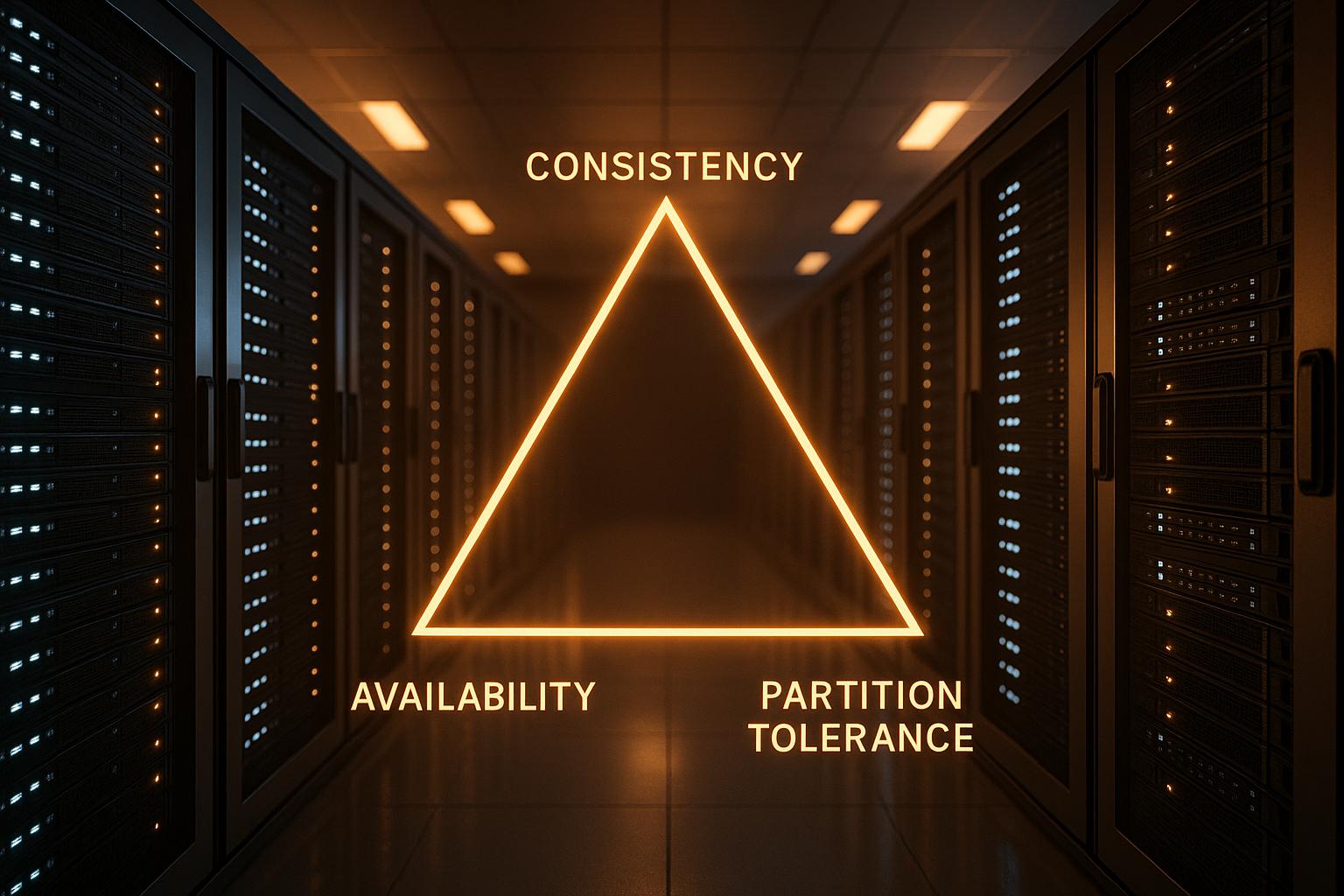

The CAP theorem states a hard truth about distributed systems: no system can guarantee all three of Consistency, Availability, and Partition Tolerance at the same time. Because network failures are unavoidable in cloud storage, partition tolerance is a given, so when a partition occurs you must choose between consistency and availability. The three properties are:

- Consistency: Every read returns the most recent write (or an error), so all nodes present the same data, but some requests might fail during disruptions.

- Availability: The system stays responsive, though it might show outdated data during failures.

- Partition Tolerance: Keeps working even when parts of the network can’t communicate - this is non-negotiable in distributed systems.

For example, a banking system prioritises consistency to ensure accurate balances, while a social media platform values availability so users can keep posting during outages. Modern cloud systems often balance these trade-offs using techniques like replication, sharding, and caching.

The choice between CP (Consistency + Partition Tolerance) and AP (Availability + Partition Tolerance) systems depends on your goals. Financial services lean towards CP, while e-commerce platforms often favour AP for uninterrupted service. AI-driven tooling and DevOps practices help fine-tune these decisions, ensuring cost-effective, reliable systems tailored to specific needs.


The 3 CAP Components Explained

The CAP theorem plays a crucial role in guiding cloud storage design, as each of its components - Consistency, Availability, and Partition Tolerance - offers distinct benefits and challenges. These properties influence how systems handle data during both normal operations and unexpected network disruptions. Let’s break down each component to understand their practical effects.

Consistency in Cloud Storage

Consistency ensures that any update to data is immediately visible across all nodes in the system. Simply put, when information is updated in one location, every subsequent read operation - no matter where it happens - must reflect that change instantly.

In cloud storage, this means synchronising updates across all regions without delay. Take financial systems, for example: they rely on strong consistency to ensure accurate transactions. If you're transferring money between accounts, the system must ensure all nodes agree on the updated balances before completing the process. Without this, errors like overdrafts or duplicate payments could occur.

But achieving strict consistency comes at a cost. During network partitions, systems that prioritise consistency might block operations entirely to avoid serving outdated data. While this approach protects data integrity, it can frustrate users who are unable to access their information during disruptions.

Systems like MongoDB, Redis, and HBase are commonly classified as CP (Consistency and Partition Tolerance), although their actual behaviour depends on how they are configured. They prioritise data accuracy and will temporarily become unavailable rather than risk providing incorrect information during network failures[2].
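The CP behaviour described above can be sketched with a majority quorum. This is a minimal, in-memory illustration, not how MongoDB, Redis or HBase are implemented: the `Replica` and `CPStore` classes are hypothetical, and a single coordinator handing out version numbers is a simplifying assumption. Reads and writes both need a majority of replicas; when fewer are reachable, the store refuses the request rather than risk serving or creating divergent data.

```python
from dataclasses import dataclass, field


class Unavailable(Exception):
    """Raised when an operation cannot reach a majority of replicas."""


@dataclass
class Replica:
    name: str
    reachable: bool = True
    data: dict = field(default_factory=dict)  # key -> (version, value)


@dataclass
class CPStore:
    replicas: list
    version: int = 0  # single coordinator issues versions (simplifying assumption)

    def _quorum(self):
        reachable = [r for r in self.replicas if r.reachable]
        majority = len(self.replicas) // 2 + 1
        if len(reachable) < majority:
            # The CP choice: refuse the request instead of risking divergent data.
            raise Unavailable(f"{len(reachable)}/{len(self.replicas)} replicas reachable")
        return reachable

    def write(self, key, value):
        reachable = self._quorum()
        self.version += 1
        for r in reachable:
            r.data[key] = (self.version, value)

    def read(self, key):
        reachable = self._quorum()
        # Any two majorities overlap, so the highest version seen is the latest write.
        copies = [r.data[key] for r in reachable if key in r.data]
        if not copies:
            raise KeyError(key)
        return max(copies, key=lambda c: c[0])[1]
```

With three replicas, losing one still leaves a majority, so requests succeed; losing two makes both reads and writes fail, which is the temporary unavailability CP systems accept.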

Availability in Distributed Systems

Availability focuses on ensuring that every request to a functioning node gets a response, even during network issues or partial system failures. This property is all about keeping the system operational and responsive, though it may sacrifice the most up-to-date data to do so.

In practice, availability means users can continue interacting with the system, even if the data they see isn’t fully up to date. For instance, during network disruptions, available systems respond to requests using locally stored data, even if that data is slightly outdated.

Social media platforms and content delivery networks often prioritise availability. Users expect to browse, post, and interact without interruptions, even if it means seeing an older version of a friend's status. Temporary inconsistencies are usually more acceptable than a complete service outage.

Examples of AP (Availability and Partition Tolerance) systems include Cassandra, CouchDB, and DynamoDB[2]. These platforms ensure uptime and access, making them ideal for scenarios where uninterrupted service is critical. E-commerce sites, for instance, might let customers browse and add items to their baskets during a network issue, updating inventory levels only after connectivity is restored.
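By contrast, an AP replica answers from whatever it holds locally and reconciles later. The sketch below assumes last-writer-wins (LWW) merging by timestamp, one common but lossy reconciliation rule; `APReplica` and the explicit `ts` values are hypothetical, chosen so the example is deterministic.

```python
import time
from dataclasses import dataclass, field


@dataclass
class APReplica:
    name: str
    data: dict = field(default_factory=dict)  # key -> (timestamp, value)

    def write(self, key, value, ts=None):
        # Accept the write locally even if peers are unreachable.
        self.data[key] = (time.time() if ts is None else ts, value)

    def read(self, key):
        # Always answer from the local copy, which may be stale during a partition.
        entry = self.data.get(key)
        return None if entry is None else entry[1]

    def merge(self, other):
        # Anti-entropy with last-writer-wins: keep the copy with the newer timestamp.
        for key, (ts, value) in other.data.items():
            if key not in self.data or ts > self.data[key][0]:
                self.data[key] = (ts, value)


london, dublin = APReplica("london"), APReplica("dublin")
london.write("stock:sku-1", 5, ts=1)
dublin.merge(london)
london.write("stock:sku-1", 4, ts=2)   # written during a partition
print(dublin.read("stock:sku-1"))      # stale, but still answered
```

LWW silently discards the older of two concurrent writes, so data where every update matters (such as a basket) usually needs a merge rule that combines changes instead.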

With availability covered, let’s explore how systems handle network disruptions.

Partition Tolerance and Network Failures

Partition tolerance allows systems to continue functioning even when network failures disrupt communication between nodes. This is essential in distributed environments where network disruptions are an unavoidable reality.

Network partitions happen more often than we realise - undersea cables might break, data centre connections can fail, or routing issues could isolate entire regions. Without partition tolerance, these disruptions could render distributed systems inoperable.

When a partition occurs, nodes on either side of the split can’t communicate. The system must decide how to handle this, balancing service continuity with data accuracy. Even advanced cloud providers, using tools like private fibre networks and GPS clock synchronisation, can only reduce the frequency of partitions - they can’t eliminate them entirely[3].

Systems handle partitions in different ways:

| System Response | Approach | Trade-off |
|---|---|---|
| Deny requests | Refuse operations that can’t be synced | Users lose access to data during partitions |
| Provide local copies | Respond with locally stored data | Users might receive outdated information |
| Queue operations | Store requests for later processing | Delays in updates and potential conflicts |
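The third row of the table, queuing operations, can be sketched as a buffer that replays writes once connectivity returns. `QueuedWriter` is hypothetical and uses a plain dict for the remote store; it replays writes in order and makes no attempt at conflict detection, which is exactly where the table's potential conflicts come from.

```python
from collections import deque


class QueuedWriter:
    """Buffers writes while the remote side is unreachable and replays them on recovery."""

    def __init__(self, remote: dict):
        self.remote = remote
        self.connected = True
        self.pending = deque()

    def write(self, key, value):
        if self.connected:
            self.remote[key] = value
            return "applied"
        # Partitioned: keep the operation for later instead of failing it.
        self.pending.append((key, value))
        return "queued"

    def reconnect(self):
        self.connected = True
        replayed = 0
        while self.pending:
            key, value = self.pending.popleft()
            self.remote[key] = value  # blind overwrite: no conflict checks here
            replayed += 1
        return replayed
```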

One effective strategy for managing partitions is geographic data sharding, where data is stored closer to users based on their location. For example, a UK-based business might store European customer data locally, while maintaining separate systems for other regions. This reduces reliance on intercontinental connections and minimises the impact of network disruptions.
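Geographic sharding boils down to a routing rule that maps each customer to a home region. The region names and country mapping below are hypothetical placeholders, not any provider's region identifiers, and each shard is an in-memory dict.

```python
from collections import defaultdict

# Hypothetical routing table: ISO country code -> home storage region.
REGION_BY_COUNTRY = {
    "GB": "europe",
    "IE": "europe",
    "FR": "europe",
    "DE": "europe",
    "US": "north-america",
    "CA": "north-america",
}
DEFAULT_REGION = "rest-of-world"


def home_region(country_code: str) -> str:
    return REGION_BY_COUNTRY.get(country_code.upper(), DEFAULT_REGION)


class GeoShardedStore:
    """Keeps each customer's record in the region that serves them."""

    def __init__(self):
        self.shards = defaultdict(dict)  # region -> {customer_id: record}

    def put(self, customer_id, country_code, record):
        region = home_region(country_code)
        self.shards[region][customer_id] = record
        return region

    def get(self, customer_id, country_code):
        # Reads stay inside the home region, so an intercontinental outage does not block them.
        return self.shards[home_region(country_code)].get(customer_id)
```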

Ultimately, the choice between consistency and availability during network partitions shapes the entire system design. From database selection to user experience, these trade-offs must align with the organisation's goals and the expectations of its users.

Cloud Storage Design Trade-offs

When designing cloud storage systems, you’re often faced with tough decisions. The CAP theorem reminds us that you can’t optimise for consistency, availability, and partition tolerance all at once. In distributed systems, where network partitions are inevitable, you must prioritise either data consistency or service availability. This choice influences the entire architecture of your system.

The CAP Trade-off During Network Partitions

At the core of the CAP theorem is a critical constraint: a distributed system cannot guarantee consistency, availability, and partition tolerance all at the same time [1][2][3]. Partition tolerance is non-negotiable in cloud environments because network failures are a given. So when a partition occurs, you face a choice: consistency or availability?

Here’s how it plays out. If you prioritise consistency, the system ensures data accuracy by delaying or rejecting requests that can’t be synchronised across all nodes. This means users might experience temporary interruptions. On the other hand, prioritising availability means the system remains operational, even if it risks serving slightly outdated or inconsistent data.

Take a UK-based e-commerce platform as an example. If consistency is the priority, customers might be unable to complete purchases during a network issue until the system fully synchronises inventory levels. If availability is prioritised, shopping can continue uninterrupted, but inventory data might not always be up-to-date. This trade-off is at the heart of CAP and shapes how systems are categorised into CP, AP, and CA types.

For added complexity, different parts of an application might have different needs. A user authentication system might require strong consistency to ensure security, while a content delivery network can afford eventual consistency to keep performance high. This has led many organisations to adopt hybrid approaches, tailoring CAP strategies to specific components.

CP, AP, and CA System Types

The CAP theorem defines three system types, each with its own advantages and limitations. Here’s how they stack up in cloud storage design:

CP systems (Consistency + Partition Tolerance) focus on data accuracy. If a network partition occurs, these systems will prioritise consistency, even if it means becoming temporarily unavailable. They’re ideal for applications where data integrity is critical, such as banking or inventory management, where serving outdated data could lead to costly errors.

AP systems (Availability + Partition Tolerance) ensure the system remains operational during network partitions. These systems prioritise uptime, even if it means occasional inconsistencies. They’re well-suited to scenarios like social networks, content management systems, or e-commerce platforms, where user experience often outweighs the need for absolute accuracy.

CA systems (Consistency + Availability) are more of a theoretical concept in distributed environments. They can maintain both consistency and availability, but only in the absence of network partitions. Traditional relational databases in single-site setups sometimes fit this model, but they’re impractical for distributed cloud storage due to their inability to handle network failures [2][3].

| System Type | Guarantees | Strengths | Weaknesses |
|---|---|---|---|
| CP | Consistency, Partition Tolerance | Ensures strong data integrity; reliable under partitions | May become unavailable during network failures |
| AP | Availability, Partition Tolerance | High uptime; always responsive | Can serve outdated or inconsistent data |
| CA | Consistency, Availability | Maintains data accuracy and responsiveness | Fails to handle network partitions effectively |

The choice between these system types depends on your organisation’s priorities and risk tolerance. Some modern systems even allow for tunable consistency, letting you adjust the balance between consistency and availability based on specific operations [2].
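Tunable consistency is often expressed with quorum arithmetic in Dynamo-style stores: with N replicas, a read waits for R responses and a write for W acknowledgements. If R + W > N, every read quorum overlaps the latest write quorum; lowering R or W keeps more operations available during failures but gives up that overlap. Apache Cassandra, for instance, exposes this per query through consistency levels such as ONE and QUORUM. The helpers below are a minimal sketch of the arithmetic only; overlapping quorums alone do not guarantee strong consistency in every implementation.

```python
def quorums_overlap(n: int, r: int, w: int) -> bool:
    """True when every read quorum shares at least one replica with every write quorum."""
    if not (1 <= r <= n and 1 <= w <= n):
        raise ValueError("R and W must each be between 1 and N")
    return r + w > n


def failures_tolerated(n: int, r: int, w: int) -> dict:
    """How many replicas can be down before reads or writes start failing."""
    return {"reads": n - r, "writes": n - w}
```

With N = 3, choosing R = W = 2 keeps quorums overlapping while tolerating one failed replica; R = W = 1 tolerates two failures but allows a read to miss the latest write.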

For UK businesses, the decision is further influenced by factors like GDPR compliance, customer expectations for high availability, and cost considerations. Consulting with experts, such as Hokstad Consulting (https://hokstadconsulting.com), can help tailor your approach to meet both regulatory and business needs.


Performance and Data Access Pattern Optimisation

The principles of the CAP theorem play a central role in determining the performance of cloud storage systems. Choosing between consistency and availability isn't just a technical decision - it shapes how data is accessed, impacts response times, and defines the user experience. By understanding these performance trade-offs, you can design systems that align with your technical needs and business goals. This understanding lays the groundwork for effective optimisation strategies.

Strong Consistency vs Eventual Consistency

Your choice of consistency model has far-reaching effects on system architecture. With strong consistency, every user sees the latest data immediately after a write operation, no matter which node they access. While this ensures data accuracy, it requires synchronising updates across all nodes before responding to requests, which can significantly increase latency.

On the other hand, eventual consistency focuses on speed and availability. Updates propagate asynchronously, allowing nodes to respond quickly without waiting for global synchronisation. Although users may temporarily encounter slightly outdated data, the system remains fast and highly responsive.

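In geo-distributed systems, eventual consistency can cut write latency substantially compared with strong consistency, because a write can be acknowledged after a local commit instead of waiting for round trips to distant replicas. This trade-off often guides architects in deciding between prioritising data accuracy or system responsiveness.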
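The latency difference comes from how many remote acknowledgements a write waits for. The function below is a rough model, assuming replication requests are sent in parallel and that each replica's acknowledgement arrives after one round trip; the round-trip times are hypothetical, not measurements.

```python
def write_latency_ms(local_commit_ms, remote_rtts_ms, acks_required):
    """Time until a write is acknowledged, given how many remote replicas must confirm it."""
    if acks_required == 0:
        # Eventual consistency: reply after the local commit, replicate in the background.
        return local_commit_ms
    if acks_required > len(remote_rtts_ms):
        raise ValueError("more acknowledgements required than remote replicas")
    # Replication requests go out in parallel, so we wait for the k-th fastest replica.
    return local_commit_ms + sorted(remote_rtts_ms)[acks_required - 1]


# Hypothetical round-trip times (ms) from a London primary to three remote replicas.
rtts = [15, 75, 250]
for label, acks in [("eventual", 0), ("one remote ack", 1),
                    ("majority of 4 nodes", 2), ("all replicas", 3)]:
    print(f"{label}: {write_latency_ms(2, rtts, acks)} ms")
```

With these assumed figures, acknowledgement time grows from 2 ms with purely asynchronous replication to 252 ms when every replica must confirm, which is the latency penalty strong consistency pays across regions.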

Take real-world scenarios: a banking system demands strong consistency - delays in updating account balances could lead to overdrafts or compliance issues. Meanwhile, social media platforms can tolerate slight delays in updates without detracting from the user experience.

In June 2022, MongoDB Atlas introduced configurable consistency levels in their managed cloud database. This allowed fintech clients to enforce strict consistency for transaction data while using eventual consistency for analytics, cutting overall query latency by 30%.

However, strong consistency can also limit scalability. The need to synchronise across nodes creates coordination overhead, which can become a bottleneck. In contrast, eventual consistency allows nodes to operate independently, enabling greater scalability and higher throughput.

Performance Optimisation Under CAP Constraints

Optimising performance while navigating CAP constraints requires strategic planning. Several techniques can help you strike the right balance between consistency, availability, and partition tolerance.

Caching: By storing frequently accessed data closer to users, caching reduces read latency and improves overall performance. To maintain data accuracy, implement smart cache invalidation policies that balance speed with freshness.

Sharding: Distributing data across multiple nodes prevents single-node bottlenecks. This approach improves scalability and fault tolerance by spreading the workload, though careful planning is needed to ensure related data remains accessible and queries stay efficient.

Replication: Creating multiple data copies across nodes enhances availability and speeds up local access. However, synchronising these replicas without sacrificing performance remains a challenge.

Failover mechanisms: These detect node failures and redirect traffic to healthy nodes, ensuring uninterrupted service. Depending on your replication setup, failover may temporarily introduce inconsistencies.
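Caching and failover often meet on the read path. The sketch below is a hypothetical, in-memory illustration: `ReadPath` checks a TTL-based read-through cache first, then tries the primary, then fails over to replicas in order. The injectable `clock` exists only to make the example testable. Failing over to a lagging replica can return stale data, the inconsistency mentioned above.

```python
import time


class NodeDown(Exception):
    pass


class Node:
    def __init__(self, name, data, healthy=True):
        self.name, self.data, self.healthy = name, data, healthy

    def get(self, key):
        if not self.healthy:
            raise NodeDown(self.name)
        return self.data.get(key)


class ReadPath:
    """Read-through TTL cache in front of a primary with ordered failover replicas."""

    def __init__(self, nodes, ttl_seconds=30.0, clock=time.monotonic):
        self.nodes = nodes  # nodes[0] is the primary, the rest are replicas
        self.ttl = ttl_seconds
        self.clock = clock
        self.cache = {}  # key -> (expires_at, value)

    def get(self, key):
        now = self.clock()
        hit = self.cache.get(key)
        if hit and hit[0] > now:
            return hit[1], "cache"
        for node in self.nodes:
            try:
                value = node.get(key)
            except NodeDown:
                continue  # fail over to the next node in order
            self.cache[key] = (now + self.ttl, value)
            return value, node.name
        raise NodeDown("no healthy nodes")

    def invalidate(self, key):
        # Call on writes so the next read fetches fresh data.
        self.cache.pop(key, None)
```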

A notable example is Amazon DynamoDB. In 2021, they improved global read latency by 40% through adaptive caching and multi-region replication. Led by Principal Engineer Mark Brooker, this initiative achieved a 99.999% availability rate and reduced user-reported stale reads by 25% [3].

| Optimisation Strategy | Primary Benefits | CAP Impact | Best Use Cases |
|---|---|---|---|
| Caching | Faster reads, better user experience | May delay consistency updates | Frequently accessed, relatively static data |
| Sharding | Enhanced scalability, balanced load | Improves partition tolerance | Large datasets, high-throughput systems |
| Replication | Better availability, faster access | Balances availability and consistency | Mission-critical or globally distributed systems |
| Failover | Maintains uptime during outages | Focuses on availability | High-uptime systems |

Often, the best approach combines multiple strategies. For instance, you might use aggressive caching for applications that prioritise low latency with eventual consistency, while employing synchronous replication for critical data requiring strong consistency.

Modern cloud storage systems now offer tunable consistency levels, letting you adjust the balance between consistency and performance for specific operations or datasets. This flexibility allows you to tailor solutions to your application's unique needs instead of relying on a one-size-fits-all method.

For UK organisations navigating these complex decisions, expert guidance can make a significant difference. Hokstad Consulting (https://hokstadconsulting.com) specialises in evaluating application requirements and designing cloud storage architectures that balance CAP properties. Their services include implementing strategies like sharding, replication, and caching, as well as expertise in DevOps automation, cost optimisation, and AI-driven performance tuning. With their help, businesses can achieve efficient, high-performing cloud storage solutions that comply with local regulations and operational demands.

Balancing CAP Properties with Cost and DevOps

The CAP theorem plays a critical role in shaping the performance, cost, and operational efficiency of cloud systems. For UK businesses, particularly those juggling tight budgets and strict service-level agreements, finding the right balance between consistency, availability, and partition tolerance demands careful planning and strategic decision-making.

Cost-efficient Cloud Storage Design

When designing cloud storage systems, CAP trade-offs force architects to decide whether consistency or availability takes priority during network failures, since partition tolerance cannot be traded away. These decisions have direct financial implications. For instance, prioritising consistency often requires complex synchronisation mechanisms and redundant infrastructure, which can drive up costs. On the other hand, focusing on availability may reduce downtime but could lead to added reconciliation efforts later on[1][2][6].

One way to manage these costs is by leveraging caching techniques to optimise resource use. Tools like Redis and Memcached, which act as distributed in-memory caches, help reduce the need for direct database reads. This approach not only improves performance and availability but also cuts down on cloud resource consumption. Techniques such as read-through and write-back caching enable systems to handle network partitions effectively by tolerating eventual consistency[2].
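Write-back caching trades consistency for speed: writes are acknowledged from the cache and persisted later. In this sketch, plain Python dicts stand in for Redis or Memcached and for the database; `WriteBackCache` is hypothetical and ignores concerns such as eviction and crash recovery. Until `flush()` runs, the backing store lags the cache, which is the eventual consistency the paragraph describes; unflushed writes are also lost if the cache node fails.

```python
class WriteBackCache:
    """Acknowledges writes from memory and flushes dirty keys to the backing store later."""

    def __init__(self, backing_store: dict):
        self.store = backing_store
        self.cache = {}
        self.dirty = set()

    def read(self, key):
        if key not in self.cache:  # read-through on a miss
            self.cache[key] = self.store.get(key)
        return self.cache[key]

    def write(self, key, value):
        self.cache[key] = value  # fast acknowledgement from memory
        self.dirty.add(key)      # persisted later, so the store lags behind

    def flush(self):
        for key in self.dirty:
            self.store[key] = self.cache[key]
        flushed = len(self.dirty)
        self.dirty.clear()
        return flushed
```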

The potential savings are significant. Recent reports suggest that UK businesses can lower cloud infrastructure expenses by up to 30% through advanced caching and automation strategies. Automated cache invalidation and tiered storage further enhance resource efficiency, enabling organisations to manage costs while maintaining acceptable levels of consistency.

Automation is another key driver of cost optimisation. Tools like infrastructure-as-code (IaC), auto-scaling, and automated failover allow businesses to allocate resources dynamically based on demand and network conditions. This reduces manual intervention, minimises over-provisioning, and ensures systems can adapt to changing conditions by shifting between consistency and availability as needed.
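Auto-scaling rules are usually proportional. Kubernetes documents the HorizontalPodAutoscaler calculation as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], skipping changes when the ratio is within a tolerance (0.1 by default). The function below is a simplified re-implementation of that rule, not the controller itself; the replica limits and metric values are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule modelled on the Kubernetes HorizontalPodAutoscaler formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds to cap cost and keep a minimum footprint.
    return max(min_replicas, min(max_replicas, desired))
```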

Cost modelling also plays an important role. By simulating different CAP configurations, organisations can estimate their impact on infrastructure usage, downtime, and reconciliation efforts. Using historical data and predictive analytics, businesses can forecast costs under various scenarios while accounting for local currency requirements, typical transaction volumes, and compliance obligations[1][6]. These strategies enable smarter, more dynamic approaches to cloud cost management.
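A cost model for CAP configurations can start as simple arithmetic: infrastructure spend, plus the cost of refused requests, plus the cost of reconciling conflicting writes. Every figure below is a hypothetical placeholder; real inputs would come from historical billing, incident and transaction data.

```python
from dataclasses import dataclass


@dataclass
class CapScenario:
    name: str
    infra_gbp: float                  # monthly infrastructure spend
    downtime_minutes: float           # minutes of refused requests per month
    downtime_cost_per_min_gbp: float
    conflicts: int                    # writes needing reconciliation per month
    cost_per_conflict_gbp: float

    def monthly_cost_gbp(self) -> float:
        downtime = self.downtime_minutes * self.downtime_cost_per_min_gbp
        reconciliation = self.conflicts * self.cost_per_conflict_gbp
        return self.infra_gbp + downtime + reconciliation


# Hypothetical inputs for 20 minutes of partitions per month; replace with your own data.
cp = CapScenario("CP", infra_gbp=12_000, downtime_minutes=20, downtime_cost_per_min_gbp=500,
                 conflicts=0, cost_per_conflict_gbp=5)
ap = CapScenario("AP", infra_gbp=9_000, downtime_minutes=0, downtime_cost_per_min_gbp=500,
                 conflicts=600, cost_per_conflict_gbp=5)
for s in (cp, ap):
    print(s.name, f"£{s.monthly_cost_gbp():,.0f}")
```

Under these invented inputs the AP design is cheaper; with a higher cost per conflict (for example, manual correction of financial records) the comparison can reverse.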

AI Agents in Cloud Optimisation

AI-driven solutions are transforming how CAP trade-offs are managed. These systems analyse real-time data access patterns and system health to dynamically adjust consistency and availability settings based on business priorities. This allows cloud storage systems to adapt intelligently to changing conditions, ensuring both performance and cost-efficiency[6].

Hokstad Consulting's AI-driven solutions are one example. Their AI agents can prioritise consistency for financial transactions during peak hours while relaxing it for less critical operations. By predicting potential partition events and reconfiguring storage policies proactively, these tools help UK businesses maintain optimal trade-offs and reduce costs.

The benefits are practical, not just theoretical. AI agents can identify frequently accessed data and implement caching strategies automatically, ensuring critical information remains consistent while less critical data can tolerate slight delays. This kind of adaptability means businesses no longer have to rely on rigid consistency models - they can adjust in real time to meet operational demands.

For instance, in the UK financial sector, AI agents might enforce strict consistency for transaction data during business hours but relax it for analytical workloads during quieter periods. Retailers, on the other hand, might prioritise availability during peak shopping times while focusing on inventory consistency during off-peak hours. This sector-specific flexibility makes AI-driven solutions particularly valuable for organisations with diverse operational needs.
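The kind of policy such an agent applies can be made concrete with a small rule function. This is a static, hand-written stand-in for what an AI agent would learn or adjust; the sector names, workload labels, business hours (weekdays 09:00 to 17:00) and retail peak window (18:00 to 22:00) are all hypothetical. As a conservative choice, it keeps financial transactions on strong consistency at all hours.

```python
from datetime import datetime

BUSINESS_HOURS = range(9, 17)      # hypothetical: 09:00 to 16:59
RETAIL_PEAK_HOURS = range(18, 22)  # hypothetical evening peak


def choose_consistency(sector: str, workload: str, when: datetime) -> str:
    """Return 'strong' or 'eventual' for a request, using simple hypothetical rules."""
    business_hours = when.weekday() < 5 and when.hour in BUSINESS_HOURS
    if sector == "finance":
        # Transactions always take the strong path; analytics relax outside business hours.
        if workload == "transactions" or business_hours:
            return "strong"
        return "eventual"
    if sector == "retail":
        if when.hour in RETAIL_PEAK_HOURS:
            return "eventual"  # favour availability while shoppers are most active
        return "strong" if workload == "inventory" else "eventual"
    return "strong"  # unknown sectors default to the safer setting
```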

DevOps Role in Managing CAP Properties

While AI offers dynamic optimisation, robust DevOps practices provide the operational backbone for managing CAP trade-offs effectively. Continuous integration and continuous deployment (CI/CD) pipelines allow for rapid configuration changes, enabling systems to adapt to evolving conditions like network partitions or shifting business requirements[2].

Real-time infrastructure monitoring tools, such as Prometheus and Grafana, provide visibility into network partitions and performance metrics. These tools enable quick responses and policy adjustments, reducing manual errors and improving system resilience. For UK businesses, this means maintaining an optimal balance between CAP properties while keeping operational costs under control. In fact, DevOps transformations have been shown to lead to up to 75% faster deployments and 90% fewer errors[5].

The integration of DevOps with CAP management delivers measurable results. Automated CI/CD pipelines, IaC, and robust monitoring eliminate manual bottlenecks, ensuring swift and accurate responses during partition events. This automation is especially critical for maintaining service availability and minimising costly downtime. Together, AI and DevOps enable real-time adjustments that align cost, performance, and reliability with business needs.

Key metrics for monitoring CAP property balancing include response times, data consistency lags, system availability percentages, and the frequency of partition events. By establishing thresholds aligned with service-level objectives and employing automated alerts, businesses can ensure timely adjustments when anomalies occur[2][6].
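Threshold checks like these are straightforward to express in code. The metric names and threshold values below are hypothetical; in practice the metrics would come from a monitoring system such as Prometheus and breaches would feed its alerting, but this sketch just returns the names of breached checks.

```python
from dataclasses import dataclass


@dataclass
class SloThresholds:
    max_p99_latency_ms: float = 250.0
    max_replication_lag_s: float = 5.0
    min_availability_pct: float = 99.9
    max_partitions_per_day: int = 2


def breached(metrics: dict, t: SloThresholds) -> list:
    """Return the names of metrics outside their thresholds, ready to feed an alerting hook."""
    alerts = []
    if metrics["p99_latency_ms"] > t.max_p99_latency_ms:
        alerts.append("latency")
    if metrics["replication_lag_s"] > t.max_replication_lag_s:
        alerts.append("consistency_lag")
    if metrics["availability_pct"] < t.min_availability_pct:
        alerts.append("availability")
    if metrics["partitions_today"] > t.max_partitions_per_day:
        alerts.append("partition_frequency")
    return alerts
```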

Hokstad Consulting provides a strong example of how expert DevOps practices can drive improvements. Their clients have reported up to 95% reductions in infrastructure-related downtime and 10x faster deployment cycles through tailored automation solutions[5]. By implementing CI/CD pipelines, IaC, and comprehensive monitoring, they help UK businesses optimise CAP trade-offs while meeting specific operational goals.

Conclusion

The CAP theorem plays a pivotal role in shaping the design of cloud storage systems, forcing architects to weigh trade-offs that impact scalability, cost, and overall performance. It highlights a fundamental limitation: during a network partition, a system cannot guarantee both consistency and availability[1][3].

These trade-offs carry real-world business consequences. For example, downtime during cloud storage outages can cost UK businesses over £4,000 per minute - a stark reminder of the importance of balancing consistency and availability[4]. Different industries prioritise these factors based on their unique operational needs and risk profiles.

The path to effective cloud storage management lies in continuous refinement and well-informed decision-making. Many modern systems lean towards the AP (Availability and Partition Tolerance) model, embracing eventual consistency, while CP systems are critical for scenarios where data accuracy cannot be compromised[2][3].

Organisations that navigate these challenges successfully often rely on AI-powered tools and strong DevOps practices to dynamically adapt CAP decisions as business needs evolve. By employing advanced strategies and seeking expert advice, businesses can fine-tune these trade-offs, ensuring their cloud storage solutions align closely with their strategic objectives.

FAQs

What role does the CAP theorem play in designing cloud storage systems?

The CAP theorem explains the delicate balance between Consistency, Availability, and Partition Tolerance in distributed systems. When building cloud storage solutions, finding the right balance among these elements is essential, depending on the system's goals and use cases.

Take a global content delivery network as an example. Such a system prioritises high availability, ensuring users can always access data, even if that means compromising on strict consistency. On the other hand, financial systems lean heavily towards consistency, as data accuracy is crucial - even if it results in occasional delays. By understanding these trade-offs, system architects can design solutions that align with specific business needs.

How does the CAP theorem affect decisions on consistency and availability in distributed systems during network issues?

The CAP theorem underscores an essential compromise in distributed systems: when a network partition occurs, you have to decide between consistency - making sure all nodes reflect the same data - and availability, which ensures the system remains functional. This dilemma plays a key role in shaping how cloud storage systems are designed, as favouring one often means sacrificing the other.

Take consistency-focused systems as an example. These might hold off on responding to requests until data is fully synchronised across all nodes, which can lead to delays and affect user experience during network issues. On the flip side, systems that prioritise availability will keep running, but users could encounter outdated or mismatched data. The decision ultimately hinges on what your application values more: precise, up-to-date information or uninterrupted service.

How do AI and DevOps practices help optimise cloud storage systems while addressing CAP theorem principles?

AI and DevOps are transforming the way cloud storage systems are designed, particularly when it comes to managing the CAP theorem principles: consistency, availability, and partition tolerance. With AI-powered analytics, organisations can keep a close eye on storage demands, predict future needs, and allocate resources more efficiently. Plus, AI can automate fault-tolerant designs, ensuring systems remain available and perform well, even under pressure.

Meanwhile, DevOps practices like continuous integration and deployment (CI/CD) bring agility and resilience to the table. These methods allow teams to iterate rapidly and build cloud storage systems that can handle network partitions without compromising too much on consistency or availability, customised to fit specific business priorities. Together, AI and DevOps create cloud storage solutions that are not only efficient but also dependable, even in the most challenging distributed setups.