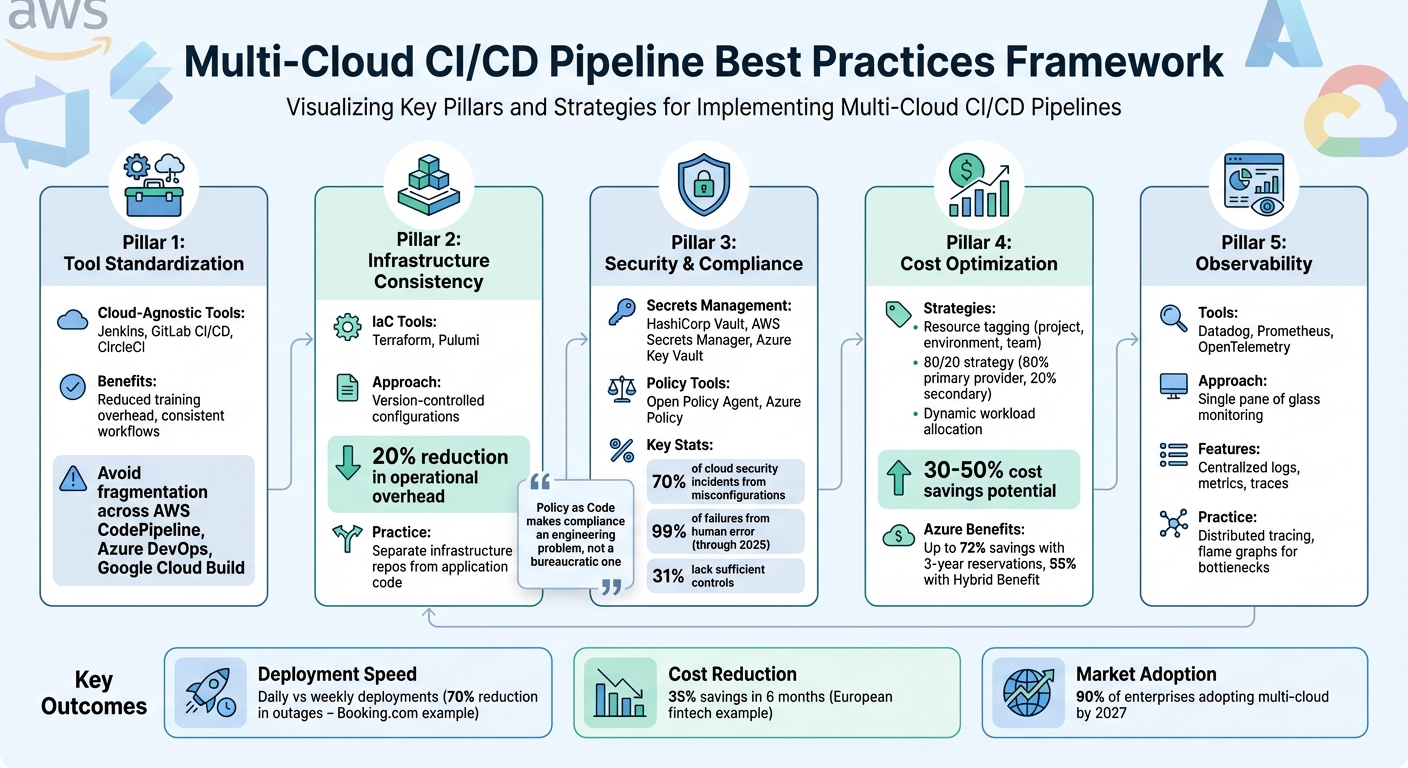

Multi-cloud CI/CD pipelines allow organisations to automate building, testing, and deploying applications across multiple cloud providers like AWS, Azure, and Google Cloud. This approach reduces reliance on a single vendor, improves resilience during outages, and enables access to specialised services. However, it also introduces challenges like tool fragmentation, security complexities, and cost management issues.

Key Takeaways:

- Tool Standardisation: Use cloud-agnostic tools like Jenkins, GitLab, or CircleCI to operate across platforms and reduce training overhead.

- Infrastructure Consistency: Adopt Infrastructure as Code (IaC) tools like Terraform for uniform resource management.

- Security Measures: Centralise secrets management with tools like HashiCorp Vault and enforce consistent policies using Open Policy Agent.

- Cost Optimisation: Tag resources for tracking, manage data transfer fees, and balance workloads across providers.

- Observability: Use tools like Datadog and OpenTelemetry for centralised monitoring and diagnostics.

By addressing these challenges with standardised tools, efficient workflows, and robust security practices, organisations can optimise multi-cloud pipelines for performance and cost-efficiency.

Figure: Multi-Cloud CI/CD Pipeline Best Practices Framework

Common Challenges in Multi-Cloud CI/CD

Multi-cloud CI/CD introduces an extra layer of complexity compared to single-provider setups. Key issues include tool fragmentation, security and compliance hurdles, and cost management difficulties. These challenges call for efficient strategies and reliable tools to ensure smooth operations [1][2][4].

Tool Fragmentation and Integration Problems

Cloud providers like AWS, Azure, and Google Cloud each offer their own native CI/CD tools - AWS CodePipeline, Azure DevOps, and Google Cloud Build - that work seamlessly within their ecosystems. However, these tools often operate in silos, with varying APIs, workflows, and configurations. When teams need to integrate services across multiple clouds, such as deploying to AWS, processing data in Google Cloud, and monitoring via Azure, the lack of standardisation demands additional effort and resources [1].

This fragmentation also impacts team efficiency. Training staff to master multiple native tools can be costly and time-intensive. To simplify operations, many organisations turn to cloud-agnostic solutions like Jenkins, GitLab, or CircleCI, which offer more consistency across environments [1].

Security and Compliance Across Multiple Clouds

Security and compliance become more challenging when dealing with multiple cloud providers. Each platform uses its own identity and access management system, making it difficult to enforce uniform permissions and monitor access effectively [2][4]. Hardcoding credentials across various environments can expose vulnerabilities, so centralised secrets management tools like HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault are crucial for safeguarding sensitive data such as tokens, certificates, and API keys [1][3].

"One of the most common mistakes is treating security as an afterthought. When organizations retrofit security into pipelines, they often leave gaps." – Deepakraj A L, DevOps Engineer, CloudThat [3]

Compliance adds another layer of complexity, particularly when adhering to regulations like GDPR, HIPAA, or PCI DSS across AWS, Azure, and Google Cloud. Implementing consistent policies across these platforms can significantly reduce operational headaches. For example, tools like Open Policy Agent or Azure Policy enable organisations to enforce security standards automatically across different cloud environments [3][4]. Research indicates that unifying IT processes in hybrid and multi-cloud setups can cut operational overhead by 20% [4]. Once security is streamlined, the focus can shift to managing costs effectively.

Managing Costs in Multi-Cloud Deployments

Cost management is a critical concern in multi-cloud environments, especially when dealing with hidden or unexpected expenses. Data egress fees - charges for transferring data between clouds - can quickly erode any cost savings achieved through cheaper compute resources. Additionally, unmonitored development or test environments can lead to unnecessary spending across multiple providers.
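To make the trade-off concrete, the following minimal sketch nets egress charges against compute savings. The function name and every figure are illustrative assumptions, not provider prices.

```python
def net_monthly_saving(compute_saving: float, egress_gb: float, rate_per_gb: float) -> float:
    """Compute savings minus the cost of moving data between clouds."""
    return compute_saving - egress_gb * rate_per_gb


# Hypothetical month: 1,200 saved on cheaper compute, 20,000 GB moved at 0.09 per GB.
result = net_monthly_saving(1200.0, 20_000, 0.09)
print(f"Net monthly saving: {result:.2f}")  # a negative value means egress outweighed the saving
```

With these assumed numbers the result is negative: the data transfer bill is larger than the compute saving it was meant to unlock.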

To control costs, resource tagging is essential. Tags for attributes like project name, environment, and team help track expenses and pinpoint which workloads are driving costs [1]. However, ensuring consistent tagging across AWS, Azure, and Google Cloud requires both discipline and automation.
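One way to automate tag discipline is a small audit that runs in the pipeline. The sketch below assumes resources from every provider have already been exported into a common, hypothetical inventory format (a list of dictionaries with `id` and `tags`); the required tag names mirror the attributes mentioned above. Google Cloud calls these key-value pairs labels, so an export step would need to map them into the same field.

```python
REQUIRED_TAGS = {"project", "environment", "team"}


def missing_tags(resource: dict) -> set[str]:
    """Return required tag keys that are absent or empty (keys compared case-insensitively)."""
    tags = {key.lower(): value for key, value in resource.get("tags", {}).items()}
    return {tag for tag in REQUIRED_TAGS if not tags.get(tag)}


def audit(resources: list[dict]) -> dict[str, list[str]]:
    """Map resource id to its sorted missing tags, listing only non-compliant resources."""
    report = {}
    for resource in resources:
        gaps = missing_tags(resource)
        if gaps:
            report[resource["id"]] = sorted(gaps)
    return report


# Hypothetical inventory entries; the ids are made up for illustration.
inventory = [
    {"id": "aws:i-1", "tags": {"Project": "shop", "Environment": "prod", "Team": "web"}},
    {"id": "gcp:vm-2", "tags": {"project": "shop"}},
]
print(audit(inventory))
```

Failing the build when the report is non-empty turns tagging from a convention into an enforced rule.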

Another challenge lies in balancing vendor flexibility with cost efficiency. While spreading workloads across providers avoids vendor lock-in, it also splits spend, making it harder to justify commitment-based discounts such as AWS Reserved Instances or to reach volume pricing tiers. Many organisations address this by adopting an 80/20 strategy: keeping 80% of workloads with a primary provider while using secondary clouds for specific tasks. This approach helps minimise complexity and data transfer fees while still leveraging the benefits of a multi-cloud setup.

Designing Multi-Cloud CI/CD Pipelines

Building multi-cloud CI/CD pipelines requires a balance between standardising tools and workflows and allowing for specific adjustments tailored to individual cloud environments. Without this balance, organisations face fragmented systems that are hard to maintain and scale. A cohesive strategy ensures better tool integration, consistent infrastructure management, and smoother Kubernetes operations.

Standardise CI/CD Tools and Workflows

To streamline deployments across multiple providers, rely on cloud-agnostic platforms like Jenkins, GitLab CI/CD, or CircleCI. These tools remove the need to train teams on various native solutions, simplifying the deployment process. As Robert Krohn, Head of Engineering at Atlassian, points out:

"If you don't have a toolchain that ties all these processes together, you have a messy, uncorrelated, chaotic environment. If you have a well-integrated toolchain, you can get better context into what is going on." [8]

For Jenkins pipelines, combining sequential steps into a single external script reduces overhead. Running all processing tasks on agents prevents controller bottlenecks, and using declarative syntax instead of scripted approaches unlocks advanced features like matrix builds while boosting controller performance [6].

Once pipelines are unified, the next step is ensuring consistent, auditable infrastructure provisioning.

Use Infrastructure as Code (IaC)

Tools like Terraform and Pulumi make it possible to manage infrastructure consistently across different cloud APIs. By defining resources in version-controlled files, you can maintain uniform configurations across AWS, Azure, and Google Cloud. This approach eliminates manual errors and ensures all infrastructure changes are auditable and repeatable - addressing the fragmentation challenges of multi-cloud environments.

Keep infrastructure repositories separate from application code. This separation allows infrastructure teams to manage deployments independently, maintaining clear version control and avoiding conflicts with development cycles [5][7]. Additionally, using automated drift detection ensures that live cloud resources remain aligned with the configurations in version control, preventing accidental or unauthorised changes from persisting [7].
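Conceptually, drift detection is a comparison between the attributes declared in version control and those reported by the provider. The sketch below illustrates that comparison on plain dictionaries; the attribute names and values are hypothetical, and in practice an IaC tool performs this check against real provider APIs.

```python
def detect_drift(desired: dict, live: dict) -> dict:
    """Return {attribute: (desired_value, live_value)} for every attribute that differs."""
    drift = {}
    for key in desired.keys() | live.keys():
        want, have = desired.get(key), live.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Hypothetical declared state versus what the cloud currently reports.
declared = {"instance_type": "m5.large", "encrypted": True}
observed = {"instance_type": "m5.xlarge", "encrypted": True, "public_ip": "1.2.3.4"}

for attribute, (want, have) in sorted(detect_drift(declared, observed).items()):
    print(f"{attribute}: declared={want!r} live={have!r}")
```

An attribute that exists only on the live side (here `public_ip`) is reported with a declared value of `None`, which is often the signature of a manual change made outside the pipeline.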

Adopt GitOps for Kubernetes Management

GitOps introduces familiar Git workflows to infrastructure management, enabling continuous reconciliation of live environments with desired configurations. Tools like Argo CD and Flux monitor Kubernetes clusters and automatically correct any drift from the defined Git state. This automation is especially useful in multi-cloud setups, where manual synchronisation across providers is impractical.
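The reconciliation idea can be sketched in a few lines. This is an in-memory illustration of the pattern, not how Argo CD or Flux are implemented: `desired` stands in for manifests read from Git, `live` for the cluster state, and both structures are assumptions made for the example.

```python
def reconcile(desired: dict[str, dict], live: dict[str, dict]) -> list[tuple[str, str]]:
    """Mutate `live` until it matches `desired`; return the (action, name) steps taken."""
    actions = []
    for name, spec in desired.items():
        if name not in live:
            live[name] = dict(spec)
            actions.append(("create", name))
        elif live[name] != spec:
            live[name] = dict(spec)
            actions.append(("update", name))
    # Anything running that Git no longer declares is removed.
    for name in list(live):
        if name not in desired:
            del live[name]
            actions.append(("prune", name))
    return actions


git_state = {"web": {"replicas": 3}, "api": {"replicas": 2}}
cluster = {"web": {"replicas": 5}, "old-job": {"replicas": 1}}

print(reconcile(git_state, cluster))  # first pass corrects the drift
print(reconcile(git_state, cluster))  # second pass finds nothing left to do
```

The second call returning no actions shows the property that makes the loop safe to run continuously: reconciliation is idempotent once the live state matches Git.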

"GitOps isn't a product or platform that you use on top of existing deployment workflows; it's an operational framework composed of best practices that brings familiar developer workflows to infrastructure management." – Datadog [5]

By versioning infrastructure changes in Git, teams can quickly roll back to a stable state by reverting to a previous commit. For more complex deployments, tools like Crossplane and Spinnaker provide unified control planes, enabling resource coordination across multiple providers through a single interface [5].

This approach simplifies operations, ensuring consistency and reliability across diverse cloud environments.

Security and Observability in Multi-Cloud CI/CD

Once you've established standardised CI/CD pipelines and Infrastructure as Code (IaC) practices, the next step in a multi-cloud strategy is ensuring robust security and observability. These elements are essential for safeguarding sensitive data and maintaining operational visibility across different cloud providers. Without centralised controls, teams risk dealing with scattered secrets, inconsistent monitoring, and fragmented compliance efforts. By integrating these measures into the pipeline framework, organisations can enhance both security and operational insights.

Centralised Identity and Secrets Management

Managing secrets across multiple clouds often leads to credentials being scattered across various systems, making oversight challenging. Tools like HashiCorp Vault address this by offering a unified, cloud-neutral platform for secrets management. Applications can authenticate using trusted identities, such as AWS IAM, Azure AD (now Microsoft Entra ID), or Kubernetes service accounts, before accessing secrets [10].

Vault's dynamic credentials feature is particularly useful. It generates short-lived secrets with automatic time-to-live (TTL), limiting the risk window if credentials are compromised [9][10].
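The value of a TTL is easiest to see in a toy model. The sketch below is an in-memory simulation of short-lived credentials and does not use Vault's API; the `Lease` class, `issue_lease` function, and role name are hypothetical names introduced for illustration.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Lease:
    username: str
    password: str
    expires_at: float  # deadline on the monotonic clock, in seconds

    def is_valid(self, now: Optional[float] = None) -> bool:
        """A lease is usable only strictly before its deadline."""
        current = time.monotonic() if now is None else now
        return current < self.expires_at


def issue_lease(role: str, ttl_seconds: float, now: Optional[float] = None) -> Lease:
    """Mint a unique random credential for `role` that expires after `ttl_seconds`."""
    start = time.monotonic() if now is None else now
    username = f"{role}-{secrets.token_hex(4)}"
    return Lease(username, secrets.token_urlsafe(24), start + ttl_seconds)


lease = issue_lease("ci-deploy", ttl_seconds=300)
print(lease.username, "valid now:", lease.is_valid())
```

Because every pipeline run receives a fresh credential that stops working after five minutes in this example, a leaked value is only useful to an attacker for the remainder of that window.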

For CI/CD pipelines, Workload Identity Federation with OpenID Connect (OIDC) allows runners like GitHub Actions or GitLab to authenticate directly with Vault, eliminating the need for hardcoded secrets [11][12]. To ensure fault tolerance, deploy Vault clusters in pairs across regions within each cloud provider. You can also use path filters to limit secret replication to only the regions where they are needed [13].

"The move to a cloud operating model involves a shift in thinking... instead of focusing on a secure network perimeter with the assumption of trust, the focus is to acknowledge that the network in the cloud is inherently 'low trust'." – HashiCorp [10]

Monitoring and Observability Across Clouds

To gain a clear view of pipeline performance and infrastructure health, it's critical to consolidate telemetry data from all cloud providers into a single pane of glass. Solutions like Datadog and Prometheus help centralise logs, metrics, and traces while maintaining consistent access controls and retention policies [14][16].

Using OpenTelemetry (OTel) simplifies this process. OTel provides a vendor-neutral way to collect logs, metrics, and traces through a unified protocol. This flexibility allows teams to switch observability platforms without needing to modify application code [17]. Regular diagnostic tests can help establish baseline health metrics. If these tests fail or show delays, the issue likely lies with the infrastructure rather than the application itself [15].

Distributed tracing and flame graphs are invaluable for pinpointing performance bottlenecks. Monitoring metrics like median and p95 durations can help identify regressions [15]. To protect sensitive information, configure agents like Fluent Bit to redact data before logs are sent to the central system. For long-term compliance, export data to platforms like BigQuery [14].
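Median and p95 checks are simple to compute from raw pipeline durations. The sketch below uses the nearest-rank percentile definition and flags a regression when either statistic exceeds the baseline by more than a tolerance; the 10% tolerance and the sample durations are assumptions chosen for the example.

```python
import math
import statistics


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def regressed(baseline: list[float], current: list[float], tolerance: float = 0.10) -> bool:
    """True if the current median or p95 exceeds the baseline value by more than `tolerance`."""
    for stat in (statistics.median, lambda v: percentile(v, 95)):
        if stat(current) > stat(baseline) * (1 + tolerance):
            return True
    return False


# Hypothetical build durations in seconds; only the slowest run differs.
last_week = [100, 105, 110, 98, 102, 101, 99, 103, 104, 120]
this_week = [100, 105, 110, 98, 102, 101, 99, 103, 104, 200]
print("regression detected:", regressed(last_week, this_week))
```

In this example the median is unchanged, so only the p95 comparison catches the slow tail, which is why tracking both statistics is worthwhile.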

While observability focuses on real-time insights, automating compliance through Policy as Code ensures standards are consistently upheld.

Policy as Code for Compliance

Policy as Code (PaC) helps automate compliance by embedding business, security, and regulatory requirements into testable rules that run during CI/CD processes [12][18]. This proactive approach catches violations early, before resources are provisioned.

Tools like Open Policy Agent (OPA) and HashiCorp Sentinel enforce consistent guardrails across cloud APIs, ensuring policies like encryption or firewall rules are applied uniformly, regardless of the provider [12][19]. Storing policies in version control enables audit trails and code reviews, and policies can be grouped into reusable sets for global or project-specific application [12][18].

Up to 70% of cloud security incidents stem from resource misconfigurations, and more than 31% of organisations feel they lack sufficient controls to prevent such errors [12]. Gartner predicts that through 2025, 99% of cloud security failures will be the customer's fault, largely through misconfiguration and other human error [12]. PaC mitigates these risks by blocking non-compliant resources, such as unencrypted S3 buckets, before deployment, and by continuously monitoring live infrastructure to detect unauthorised changes [12][19].
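The shape of such a rule can be shown without a policy engine. OPA policies are written in Rego and Sentinel has its own language, so the Python below is only a stand-in for the logic: it scans a simplified, hypothetical plan format and reports storage resources without encryption. The resource type strings follow Terraform provider naming, while the `encrypted` field is an assumed simplification of the real attributes.

```python
STORAGE_TYPES = {"aws_s3_bucket", "azurerm_storage_account", "google_storage_bucket"}


def check_encryption(resources: list[dict]) -> list[str]:
    """Return one violation message per storage resource lacking encryption at rest."""
    violations = []
    for resource in resources:
        if resource.get("type") in STORAGE_TYPES and not resource.get("encrypted", False):
            violations.append(
                f"{resource['name']}: {resource['type']} must enable encryption at rest"
            )
    return violations


# Hypothetical planned resources spanning two providers.
plan = [
    {"type": "aws_s3_bucket", "name": "logs", "encrypted": False},
    {"type": "google_storage_bucket", "name": "assets", "encrypted": True},
    {"type": "aws_instance", "name": "web"},
]

problems = check_encryption(plan)
for message in problems:
    print("DENY", message)
```

A pipeline step would exit with a non-zero status when `problems` is non-empty, so the non-compliant bucket never reaches the provisioning stage.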

Performance and Cost Optimisation in Multi-Cloud CI/CD

Once security and observability are in place, the next step is ensuring that pipelines operate efficiently without breaking the bank. This section builds on earlier strategies, focusing on fine-tuning performance and managing costs through smarter resource allocation and automation.

Workload Balancing and Resource Rightsizing

Dynamic workload allocation, which runs each task on the provider offering the best price-to-performance ratio at that moment, can lead to 30–50% savings while improving availability [20]. Deployment tools like Spinnaker and Argo CD can help automate the resulting rollouts across providers.

Another critical area is container optimisation. By using multi-stage builds, you can ensure container images only include the essentials, which not only speeds up builds but also lowers storage expenses [20]. Removing development dependencies ensures builds remain lean and reproducible.

Dynamic scaling with Kubernetes’ Horizontal Pod Autoscaler (HPA) helps avoid over-provisioning by adjusting resources based on demand. Regular audits of resource usage can identify underutilised VMs or containers that should be resized or decommissioned [22][26].
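The Kubernetes documentation describes the HPA's core calculation as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`. The sketch below applies that formula with a tolerance band and replica bounds; the default values for `min_replicas`, `max_replicas`, and `tolerance` are assumptions for the example, and the real controller also handles stabilisation windows and missing metrics, which are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Scale replicas in proportion to metric/target, clamped to the configured bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid needless churn
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical CPU utilisation readings against a 60% target.
for observed in (90, 63, 15):
    print(f"{observed}% CPU -> {desired_replicas(4, observed, 60)} replicas")
```

Scaling down when utilisation is low is what prevents paying for idle capacity, while the upper bound caps the bill during a traffic spike.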

Cost Tracking and Simulation

To manage costs effectively, centralise tracking with a unified schema. This approach combines usage data from multiple providers, making it easier to tag resources by team, project, or business unit [21]. Such tagging enables cost simulations and lets you trace expenses back to specific activities [23][26].

Going beyond raw spending figures, unit cost metrics provide clarity. For instance, linking cloud costs to metrics like cost per customer or transaction can reveal the true value of CI/CD operations [22][24]. As Microsoft’s Azure Well-Architected Framework explains:

"A cost-optimised workload isn't necessarily a low-cost workload." [25]

The key is to align spending with business outcomes.
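A short worked example shows why total spend alone can mislead. The figures below are invented for illustration: the monthly bill rises, yet the cost of serving each transaction falls.

```python
def unit_cost(total_cost: float, units: int) -> float:
    """Cost per business unit (for example per transaction or per customer)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_cost / units


# Hypothetical months: spend grows by 20% while transaction volume doubles.
before = unit_cost(10_000, 200_000)
after = unit_cost(12_000, 400_000)
print(f"cost per transaction: {before:.3f} -> {after:.3f}")
```

Judged by the bill, the second month looks worse; judged by unit cost, the workload has become more efficient.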

Before rolling out changes, use cost simulation to assess the financial impact of running workloads on different providers [2]. Scenario modelling can guide decisions like switching from IaaS to PaaS or scaling up for a major launch [22]. When setting budgets, allow for a small buffer to cover unexpected expenses [22]. Configure budget alerts at 90% (ideal), 100% (target), and 110% (over-budget) thresholds to stay ahead of potential overruns [21].
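The three thresholds translate directly into a small classification function. This sketch is provider-neutral and the function name is hypothetical; cloud billing services expose their own alerting configuration for the same purpose.

```python
from typing import Optional

# Highest threshold first so the most severe matching label wins.
THRESHOLDS = [(1.10, "over-budget"), (1.00, "target"), (0.90, "ideal")]


def alert_level(spend: float, budget: float) -> Optional[str]:
    """Return the label of the highest threshold that spend has reached, or None."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    used = spend / budget
    for fraction, label in THRESHOLDS:
        if used >= fraction:
            return label
    return None


# Hypothetical month-to-date spend against a budget of 1,000.
for spend in (850, 900, 1000, 1150):
    print(spend, alert_level(spend, 1000))
```

Returning `None` below 90% keeps the alert channel quiet until spend is actually approaching the budget.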

AI-based anomaly detection can spot unusual spending patterns by comparing them to historical data [21][24]. To prevent costs spiralling out of control, link budgets to automated action groups that can scale resources or shut them down when limits are exceeded [26]. Azure Reservations, for example, can cut compute costs by up to 72% with a three-year commitment, while Azure Hybrid Benefit offers savings of up to 55% on SQL Database options by using existing licences [26].
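Commercial anomaly detection uses more sophisticated models, but the underlying idea of comparing current spend with historical data can be sketched with a z-score. The threshold of three standard deviations and the daily spend figures are assumptions chosen for the example.

```python
import statistics


def is_anomalous(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag `today` if it lies more than `z_threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two historical data points")
    mean = statistics.fmean(history)
    spread = statistics.stdev(history)
    if spread == 0:
        return today != mean  # perfectly flat history: any change is unusual
    return abs(today - mean) / spread > z_threshold


# Hypothetical daily spend for the past week.
past_week = [100, 102, 98, 101, 99, 100, 103]
print("140 anomalous:", is_anomalous(past_week, 140))
print("101 anomalous:", is_anomalous(past_week, 101))
```

A flagged day could trigger the same automated action groups described above, so an unexpected spike is investigated before it becomes a month-end surprise.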

Scaling Pipelines with Custom Automation

Once costs are under control, tailored automation can take pipeline scalability to the next level. These enhancements build on the standardised CI/CD pipelines discussed earlier, reinforcing the broader multi-cloud approach.

Consolidating sequential shell steps into external scripts and moving complex logic into shared libraries reduces overhead on the Jenkins controller [6]. For heavy tasks like data parsing or compilation, offload the work to build agents to avoid bottlenecks [6]. When handling large files, steer clear of memory-heavy tools like Groovy’s JsonSlurper; instead, use shell commands with utilities like jq to process data on the agent side [6]. Wrapping input statements in timeouts ensures that executors and workspaces aren’t tied up indefinitely waiting for manual approvals [6].

Hokstad Consulting offers tailored automation solutions designed to lower cloud costs and streamline deployment cycles. Their services focus on creating automated CI/CD pipelines optimised for multi-cloud setups, often achieving expense reductions of 30–50% through strategic resource management and cost engineering. For organisations dealing with fragmented tools or inefficient workflows, customised automation can deliver the scalability needed to support growth while keeping costs manageable.

Conclusion

Multi-cloud CI/CD pipelines go beyond solving technical problems - they provide a strategic edge. By 2027, it's projected that 90% of enterprises will adopt multi-cloud environments, making it crucial to embrace the practices discussed here to remain competitive [28]. The trick lies in balancing flexibility and control by standardising workflows, managing costs effectively, and prioritising strong security measures.

To keep feedback loops under 10 minutes, focus on optimising build times through aggressive caching and parallelising independent tasks. Additionally, design for portability by steering clear of provider-specific dependencies, leveraging containers and standard APIs [1][27][29].

"Treat your test suite as a first-class citizen. It's not an afterthought; it's the core mechanism that enables speed, reliability, and developer confidence." – Group107 [29]

Real-world examples highlight the importance of expert-driven strategies. For instance, in June 2023, Booking.com implemented blue-green deployments and canary releases across AWS and Azure under DevOps expert Maria Ivanova. This shift reduced deployment-related outages by 70% and enabled daily deployments instead of weekly ones [29]. Similarly, a European fintech company, led by CTO James O'Connor, cut cloud costs by 35% within six months by using Kubernetes and Crossplane for unified resource management [29].

Building on such successes, Hokstad Consulting offers tailored automation solutions that bridge theory and practice. Their expertise lies in optimising DevOps processes, cloud infrastructure, and hosting costs. By focusing on strategic resource management and cost engineering, they help businesses reduce cloud expenses by 30–50% while streamlining deployment cycles across public, private, and hybrid infrastructures.

This blend of strategy, practical tools, and specialised expertise defines the multi-cloud CI/CD journey. Success in this space hinges on viewing compliance as an engineering challenge.

"Policy as Code makes compliance an engineering problem, not a bureaucratic one." – Group107 [29]

With the right tools and processes in place, organisations can turn the complexities of multi-cloud environments into clear, measurable advantages.

FAQs

What are the best strategies for managing fragmented tools in multi-cloud CI/CD pipelines?

Managing fragmented tools in multi-cloud CI/CD pipelines can be challenging, but there are ways to simplify and unify processes across different environments. One effective method is to use Infrastructure as Code (IaC) tools like Terraform or Pulumi. These tools help standardise how resources are provisioned, reducing the chances of manual errors and ensuring consistency.

Another key strategy is leveraging container orchestration platforms such as Kubernetes. These platforms make it easier to maintain portability and streamline deployments, no matter which cloud provider you're working with.

Automation also plays a crucial role. Incorporating GitOps practices, automated testing, and deployment techniques like blue-green or canary releases can help minimise discrepancies and boost reliability. On top of that, monitoring tools like Prometheus and Grafana provide valuable visibility, enabling teams to detect and address issues more efficiently.

By combining these approaches, organisations can simplify their operations, achieve consistent deployments, and better manage costs in multi-cloud environments.

What are the best practices for maintaining security and compliance in a multi-cloud environment?

To keep security and compliance in check within a multi-cloud setup, you need a well-thought-out and proactive plan. A great starting point is using infrastructure as code (IaC) tools. These tools allow you to automate how infrastructure is set up while embedding security policies directly into the process. The result? Consistent configurations, early detection of any drift, and adherence to compliance standards across all your cloud platforms.

Another smart move is adopting a multi-account strategy. By separating workloads into different accounts, you create clear security boundaries and make compliance management more straightforward. On top of that, building a unified security framework for your multi-cloud environment is critical. This framework should address key areas like data protection, threat detection, and network security, ensuring it aligns with the specific needs of each cloud platform.

Don't overlook the importance of regular audits, continuous monitoring, and automated policy enforcement. These steps help you stay on top of the unique security challenges posed by different providers. By blending automation, clear resource separation, and ongoing oversight, businesses can protect their multi-cloud environments while staying compliant.

What are the best ways to reduce costs when setting up CI/CD pipelines in a multi-cloud environment?

To manage costs effectively in multi-cloud CI/CD pipelines, businesses should prioritise resource efficiency and automation. Start by implementing dynamic scaling and tagging resources. This approach helps prevent over-provisioning and ensures every resource is used purposefully. Automating tasks, such as shutting down non-production environments during off-hours or enabling auto-scaling, can also significantly reduce idle costs.

Using Infrastructure as Code (IaC) tools is another smart move. These tools ensure consistent resource provisioning across different cloud providers, minimising the risk of costly misconfigurations. Regularly reviewing and analysing cloud usage can help pinpoint inefficiencies, while cost forecasting enables proactive adjustments. By adopting these strategies, you can build a cost-conscious, adaptable, and reliable multi-cloud CI/CD pipeline.