Managing cloud costs across AWS, Azure, and Google Cloud is complex. Each provider uses different billing systems and cost structures, making it hard to track spending. Traditional forecasting methods often fail due to their inability to handle sudden changes or large datasets. AI offers a solution by analysing live data, improving cost predictions, and detecting anomalies.

Key Takeaways:

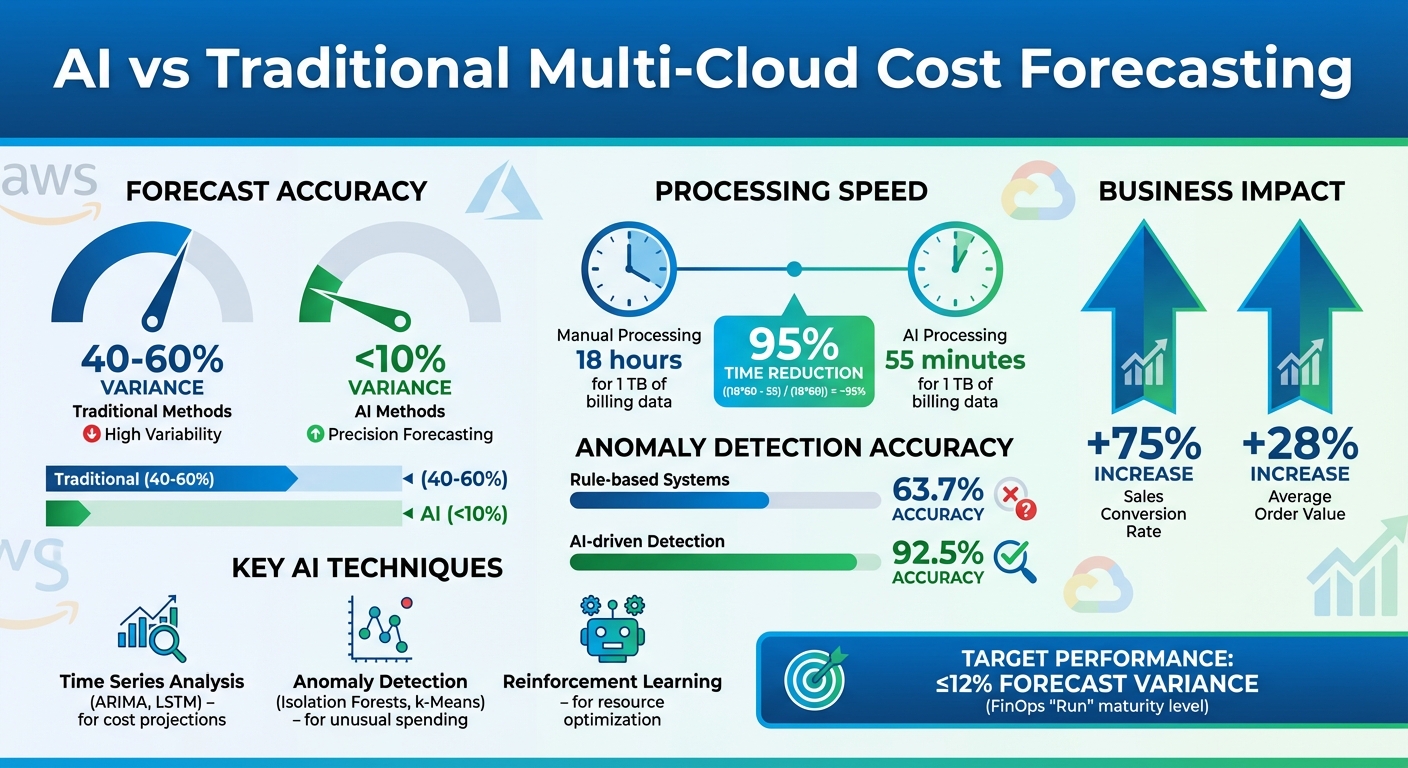

- AI Accuracy: Reduces forecast variance from 40–60% to within 10%.

- Time Savings: Processes 1 TB of billing data in 55 minutes vs. 18 hours manually.

- Techniques: Time series analysis (ARIMA, LSTM), anomaly detection, and reinforcement learning optimise resource allocation and cost control.

- Automation: Services such as those offered by Hokstad Consulting automate AI deployment, helping keep forecasting efficient and accurate.

AI bridges gaps between engineering and finance teams, provides real-time insights, and simplifies cost management for UK businesses dealing with multi-cloud environments.

Figure: AI vs Traditional Multi-Cloud Cost Forecasting: Performance Comparison

AI Techniques for Multi-Cloud Cost Forecasting

Delivering accurate cost predictions in multi-cloud environments requires advanced techniques. Below, we explore key AI-driven methods that provide practical solutions for UK businesses looking to refine their forecasting strategies and address the challenges of traditional approaches.

Time Series Analysis for Cost Projections

Time series models such as ARIMA and LSTM identify spending trends and project future costs. ARIMA, a classical statistical model, suits stable workloads with linear patterns, while LSTM, a recurrent neural network, is better suited to complex, non-linear trends [4]. Breaking forecasts down to a granular level - by account, project, or subscription - lets businesses align costs with specific KPIs, such as cost per transaction or cost per 100,000 words for AI services [2][4]. For annual forecasts, include a buffer for minor overruns. These projections also inform long-term commitments, such as Reserved Instances or Savings Plans, that secure cost savings [6].
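As a rough illustration of per-account projection with a buffer, the sketch below uses Holt's linear trend method. This is a deliberately simpler stand-in, not ARIMA or LSTM, chosen so the example runs with the standard library alone. The account names, spend figures, smoothing parameters, and the 5% buffer are all assumptions made for illustration.

```python
def holt_forecast(series, horizon, alpha=0.5, beta=0.3):
    """Forecast `horizon` future points with Holt's linear trend method."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level = series[0]
    trend = series[1] - series[0]
    for value in series[1:]:
        previous_level = level
        # Smooth the level towards the new observation.
        level = alpha * value + (1 - alpha) * (level + trend)
        # Smooth the trend towards the latest change in level.
        trend = beta * (level - previous_level) + (1 - beta) * trend
    return [level + step * trend for step in range(1, horizon + 1)]


def with_buffer(forecast, buffer_pct=5.0):
    """Add a percentage buffer for minor overruns (5% is an assumed value)."""
    return [round(value * (1 + buffer_pct / 100), 2) for value in forecast]


# Hypothetical monthly spend in GBP, broken down by account.
monthly_spend_gbp = {
    "aws-prod": [1000, 1100, 1200, 1300, 1400, 1500],
    "azure-analytics": [800, 790, 820, 810, 830, 825],
}

for account, history in monthly_spend_gbp.items():
    projection = with_buffer(holt_forecast(history, horizon=3))
    print(account, projection)
```

Forecasting each account separately keeps the projection tied to the team or KPI that owns the spend; a production system would replace `holt_forecast` with whichever model validates best.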

Anomaly Detection for Unusual Spending Patterns

Anomaly detection is where AI truly shines. Methods like Isolation Forests, k-Means clustering, and autoencoders identify unusual cost patterns without relying on manual thresholds [7][1]. The results are impressive: AI-driven anomaly detection achieves an accuracy rate of 92.5%, compared to just 63.7% for manual or rule-based systems [4]. These tools can highlight specific issues like misconfigurations, unauthorised usage, or runaway processes [7][1]. They’re also invaluable for spotting early signs of security breaches, such as unauthorised access or crypto-mining activity [7][4]. By applying context-aware filtering, these systems can separate legitimate expenses from wasteful spending [4].
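To make the idea of threshold-free detection concrete, here is a minimal sketch using a modified z-score based on the median and the median absolute deviation. It is not an Isolation Forest, k-Means, or autoencoder; it is a much simpler robust-statistics technique that shares the property of deriving its baseline from the data rather than from a hand-set cost limit. The daily cost figures are invented, and the 3.5 cut-off is a commonly used convention rather than anything from the sources cited here.

```python
from statistics import median


def flag_anomalies(daily_costs, threshold=3.5):
    """Return indices of days whose cost is an outlier by modified z-score."""
    centre = median(daily_costs)
    deviations = [abs(cost - centre) for cost in daily_costs]
    mad = median(deviations)  # median absolute deviation
    if mad == 0:
        return []  # no spread in the data, so nothing can be scored
    return [
        index
        for index, cost in enumerate(daily_costs)
        if 0.6745 * abs(cost - centre) / mad > threshold
    ]


# Hypothetical daily spend in GBP with one runaway day.
daily_costs = [100, 102, 98, 101, 99, 103, 100, 450, 97, 101]
print(flag_anomalies(daily_costs))
```

Because the median and MAD are barely moved by a single spike, the spike itself does not distort the baseline it is compared against, which is the main weakness of mean-based thresholds.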

Reinforcement Learning for Resource Allocation

Reinforcement Learning (RL) offers a dynamic approach to resource optimisation. By continuously evaluating trade-offs between performance, instance types (Spot vs. On-Demand), and scaling policies, an RL agent learns which allocations are both efficient and cost-effective [4]. RL agents can adjust resources in real time based on demand forecasts, reducing over-provisioning and avoiding bottlenecks [3]. For AI-intensive tasks, targeting near 100% GPU utilisation or employing automated scaling to shut down idle resources can significantly cut costs [2]. Separately, tools like the AWS Billing MCP Server, paired with conversational AI (e.g., Amazon Q), streamline cost investigations, reducing analysis time from hours to minutes [5]. Combined with time series analysis and anomaly detection, RL completes a comprehensive toolkit for multi-cloud cost management.
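The simplest form of this trade-off learning is a multi-armed bandit, sketched below with an epsilon-greedy agent choosing between Spot and On-Demand capacity. This is an illustrative toy, not a production RL system: the hourly costs are invented, the environment is deterministic, and a real agent would also account for state such as current demand and interruption risk.

```python
import random

# Invented expected hourly costs in GBP; the spot figure is assumed to already
# include an allowance for interruptions. A real environment would return
# noisy, observed costs instead.
EXPECTED_HOURLY_COST = {"on_demand": 1.00, "spot": 0.40}


class EpsilonGreedyAllocator:
    """Picks the action with the best average reward, exploring occasionally."""

    def __init__(self, actions, epsilon=0.1, seed=0):
        self.actions = list(actions)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {action: 0 for action in self.actions}
        self.values = {action: 0.0 for action in self.actions}

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore
        return max(self.actions, key=lambda a: self.values[a])  # exploit

    def update(self, action, reward):
        self.counts[action] += 1
        # Incremental running mean of the rewards seen for this action.
        self.values[action] += (reward - self.values[action]) / self.counts[action]


def run(rounds=500, seed=0):
    agent = EpsilonGreedyAllocator(EXPECTED_HOURLY_COST, seed=seed)
    for _ in range(rounds):
        action = agent.choose()
        agent.update(action, reward=-EXPECTED_HOURLY_COST[action])  # lower cost = higher reward
    return agent


agent = run()
print(agent.counts, agent.values)
```

The reward here is simply negative cost; in practice it would also penalise missed performance targets so the agent does not learn to starve workloads.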

Used together, these techniques cover projection, detection, and optimisation, and each can be introduced into a multi-cloud strategy incrementally.

Implementing AI in Your Multi-Cloud Strategy

Integrating AI into your multi-cloud strategy requires a structured approach. It starts with gathering accurate data, building effective models, and automating the deployment process to ensure your forecasting system operates seamlessly across platforms.

Collecting Data from Cloud Provider APIs

Cloud platforms like AWS, Azure, and Google Cloud provide APIs to access billing and usage data, but each has its own format. For instance, AWS offers the Cost Explorer API and Cost and Usage Reports, Azure provides the Consumption API, and Google Cloud uses the Cloud Billing API. The challenge lies in extracting and standardising this data, as the structures vary widely. You'll need to normalise fields such as resource types, pricing models, and time zones to ensure consistency for your AI models. To improve forecasting accuracy, track detailed metrics and set up automated pipelines that pull data at a frequency matching how quickly your spending changes and how precise your forecasts need to be. Once you have clean, standardised data, you can move on to training and validating your models.
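The normalisation step might look something like the sketch below, which maps provider-specific records into one common shape with UTC timestamps. The raw field names in `FIELD_MAPS` are simplified placeholders invented for this example, not the providers' real export schemas, and the record layout is an assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CostRecord:
    provider: str
    service: str
    usage_start_utc: datetime
    cost: float
    currency: str


# Placeholder field names per provider (hypothetical, for illustration only).
FIELD_MAPS = {
    "aws": {"service": "service_name", "start": "start_time", "cost": "cost", "currency": "currency"},
    "azure": {"service": "category", "start": "date", "cost": "charge", "currency": "billing_currency"},
    "gcp": {"service": "service", "start": "usage_start", "cost": "cost", "currency": "currency"},
}


def to_utc(timestamp: str) -> datetime:
    """Parse an ISO 8601 timestamp with a numeric offset and convert it to UTC."""
    parsed = datetime.fromisoformat(timestamp)
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
    return parsed.astimezone(timezone.utc)


def normalise(provider: str, raw: dict) -> CostRecord:
    """Convert one provider-specific record into the common CostRecord shape."""
    fields = FIELD_MAPS[provider]
    return CostRecord(
        provider=provider,
        service=raw[fields["service"]],
        usage_start_utc=to_utc(raw[fields["start"]]),
        cost=float(raw[fields["cost"]]),
        currency=raw[fields["currency"]].upper(),
    )


example = normalise(
    "azure",
    {"category": "Virtual Machines", "date": "2024-03-01T01:00:00+01:00",
     "charge": "12.50", "billing_currency": "gbp"},
)
print(example)
```

Keeping the per-provider differences in a single mapping table means a schema change on one platform touches one dictionary entry rather than the whole pipeline.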

Training and Validating AI Models

With at least six months of historical data, you can start training your AI models. Because billing data is a time series, split it chronologically rather than at random, so that models are always evaluated on periods later than those they were trained on: training (70–80%), validation (10–15%), and testing (10–15%). Begin with simpler models like ARIMA for a baseline and advance to more complex methods, such as LSTM networks, if your spending patterns are intricate. Focus on identifying key cost drivers, including time of day, seasonal trends, deployment events, and business metrics like transaction volumes or user activity. This ensures your predictions align with the operational demands of a multi-cloud setup. Validation is essential - test your models against unseen data to confirm they predict accurately without overfitting. Use metrics like Mean Absolute Percentage Error (MAPE) and Root Mean Square Error (RMSE) to gauge performance. To counter model drift, retrain continuously with updated data so accuracy holds as your infrastructure evolves.
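A minimal sketch of the split and the two error metrics follows. It assumes the series is already ordered by date and splits it in time order, since shuffling time series data would let future information leak into training; the 70/15/15 proportions are one choice within the ranges given above.

```python
import math


def chronological_split(series, train_pct=70, validation_pct=15):
    """Split an ordered series into train, validation, and test segments."""
    n = len(series)
    train_end = n * train_pct // 100
    validation_end = n * (train_pct + validation_pct) // 100
    return series[:train_end], series[train_end:validation_end], series[validation_end:]


def mape(actual, predicted):
    """Mean Absolute Percentage Error, in percent."""
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Square Error, in the same units as the data."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


train, validation, test = chronological_split(list(range(20)))
print(len(train), len(validation), len(test))
print(mape([100, 200], [110, 180]), rmse([1, 2, 3], [1, 2, 5]))
```

MAPE is easy to communicate to finance teams because it is a percentage, while RMSE penalises large misses more heavily; reporting both gives a fuller picture.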

Automating Deployment with Hokstad Consulting

Once your models perform reliably, automating their deployment is the next step to keep the system efficient and accurate. Manual deployment is time-consuming and prone to errors. This is where Hokstad Consulting can make a difference. They specialise in automating the entire process, from setting up data pipelines to deploying and monitoring models. Their solutions integrate directly with your existing DevOps workflows, using CI/CD pipelines to automatically update models as new data flows in. Real-time monitoring dashboards track model performance, alerting you to any deviations in predictions or drops in accuracy.

Hokstad Consulting operates on a No Savings, No Fee model, meaning you only pay if their solutions reduce costs. Beyond forecasting, their expertise in cloud cost management helps identify additional optimisation opportunities, such as rightsizing resources, implementing automated scaling, and negotiating better deals with cloud providers. By automating the entire cycle - from generating forecasts to implementing cost-saving actions - you ensure your AI system stays accurate, up-to-date, and actionable without requiring constant manual effort. This holistic approach makes managing multi-cloud expenses more efficient and effective.

Advanced Techniques for Better Forecasting Accuracy

Once you've mastered the basics of AI-driven forecasting, it's time to delve into advanced techniques that can fine-tune accuracy even further. Refining AI models after deployment and managing multi-cloud complexities are critical steps. Two key strategies here include dealing with cross-platform data variability and leveraging ensemble methods to improve predictions.

Handling Data Variability Across Platforms

One of the biggest challenges in a multi-cloud setup is the inconsistency in how different providers structure and present their data. AWS, Azure, and Google Cloud each have their own reporting formats, billing cycles, pricing models, and regional pricing structures. Left unreconciled, these differences can skew your forecasts.

To overcome these hurdles, you’ll need to normalise your data at a granular level. This means addressing factors like time zone differences, currency conversions, and variations in pricing models. Additionally, mapping equivalent services across providers (e.g., AWS EC2, Azure Virtual Machines, and Google Compute Engine) into unified categories ensures your comparisons are meaningful. Feature engineering can also play a critical role here - identifying trends like total compute spend across platforms can help reduce data noise and reveal actionable insights. These steps, when combined, create a solid foundation for more accurate forecasting. Once your data is consistent and clean, you can take things a step further by integrating multiple models for even better results.
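The service mapping and currency conversion could be sketched as below, producing a cross-platform "total compute spend" feature. The exchange rates are invented placeholders, the category table covers only the three services named above, and the tuple layout of each record is an assumption for this example.

```python
from collections import defaultdict

# Equivalent services mapped to one unified category.
SERVICE_CATEGORIES = {
    ("aws", "EC2"): "compute",
    ("azure", "Virtual Machines"): "compute",
    ("gcp", "Compute Engine"): "compute",
}

# Hypothetical exchange rates to GBP; a real pipeline would load dated rates.
GBP_PER_UNIT = {"GBP": 1.0, "USD": 0.80, "EUR": 0.85}


def spend_by_category_gbp(records):
    """Sum spend in GBP per unified category.

    Each record is a (provider, service, cost, currency) tuple.
    Services without a mapping are grouped under "other".
    """
    totals = defaultdict(float)
    for provider, service, cost, currency in records:
        category = SERVICE_CATEGORIES.get((provider, service), "other")
        totals[category] += cost * GBP_PER_UNIT[currency]
    return dict(totals)


records = [
    ("aws", "EC2", 100.0, "USD"),
    ("azure", "Virtual Machines", 50.0, "GBP"),
    ("gcp", "Compute Engine", 100.0, "EUR"),
    ("aws", "S3", 10.0, "GBP"),
]
print(spend_by_category_gbp(records))
```

Grouping unmapped services under "other" rather than dropping them keeps totals reconcilable with the providers' invoices while the mapping table is still being filled in.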

Using Ensemble Methods for Better Predictions

Ensemble learning is another powerful tool for improving forecasting accuracy. By combining the strengths of multiple AI models, this approach delivers predictions that are often more reliable than what any single model could achieve on its own. As IBM explains:

"Ensemble learning rests on the principle that a collectivity of learners yields greater overall accuracy than an individual learner" [8].

This method is particularly effective in multi-cloud environments, where the complexity of data often requires sophisticated solutions. Techniques such as bagging, stacking, and boosting are especially useful here.

- Bagging reduces variance by training multiple models on different subsets of data, ensuring the final prediction is less prone to overfitting.

- Stacking takes a different approach by combining models that specialise in specific areas. For example, one model might focus on AWS spot instance trends, while another handles Azure reserved capacity. A meta-model then blends their outputs for a unified prediction.

- Boosting, with algorithms like XGBoost, trains models sequentially, allowing each new model to correct the errors of its predecessor. This makes boosting particularly effective for identifying sudden spending spikes or anomalies.
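As a simplified illustration of blending model outputs, the sketch below weights each model's forecast by the inverse of its validation error. This is a lightweight relative of stacking, not full stacking: a true stacked ensemble would train a meta-model on the base models' predictions. The model names, error values, and forecasts are invented.

```python
def inverse_error_weights(validation_errors):
    """Give each model a weight proportional to 1 / its validation error."""
    if any(error <= 0 for error in validation_errors.values()):
        raise ValueError("validation errors must be positive")
    inverse = {name: 1.0 / error for name, error in validation_errors.items()}
    total = sum(inverse.values())
    return {name: value / total for name, value in inverse.items()}


def blend(forecasts, weights):
    """Combine per-model forecasts into one weighted forecast."""
    horizon = len(next(iter(forecasts.values())))
    return [
        sum(weights[name] * forecasts[name][step] for name in forecasts)
        for step in range(horizon)
    ]


# Hypothetical validation MAPE (%) and three-month forecasts in GBP.
validation_errors = {"arima": 8.0, "lstm": 4.0}
forecasts = {"arima": [1000.0, 1050.0, 1100.0], "lstm": [1030.0, 1090.0, 1160.0]}

weights = inverse_error_weights(validation_errors)
print(weights)
print(blend(forecasts, weights))
```

Even this simple weighting lets a model that validates better dominate the result without discarding the other model's signal entirely.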

Measuring ROI with AI-Driven Forecasting

When it comes to AI-driven multi-cloud cost forecasting, the real value lies in measurable outcomes. The challenge is knowing which metrics to prioritise and ensuring consistent tracking across your organisation. Let’s explore the key metrics and strategies that validate these returns.

Key Metrics for Forecasting Success

AI takes cost management to the next level by shifting the focus from analysing past spending to predicting and optimising future expenses. While this shift is a vital indicator of progress, digging deeper into specific metrics is essential for gauging performance accurately.

Forecast accuracy is a great place to start. Organisations operating at the "Run" maturity level in FinOps typically achieve a forecast variance of 12% or less between predicted and actual spend [9]. For AI workloads, tracking Cost Per Unit of Work - like cost per 100,000 tokens processed or cost-per-GPU-hour - offers a precise view of efficiency. These detailed metrics can uncover inefficiencies that broader measures might miss [2].
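Both metrics reduce to simple arithmetic, sketched below. The figures are invented, and measuring variance relative to the forecast (rather than to actual spend) is an assumption; use whichever convention your organisation has agreed on.

```python
def forecast_variance_pct(forecast, actual):
    """Absolute gap between actual and forecast spend, as a % of the forecast."""
    return abs(actual - forecast) / forecast * 100


def cost_per_unit(total_cost, units, per=100_000):
    """Cost per `per` units of work, e.g. per 100,000 tokens."""
    return total_cost / units * per


# Hypothetical month: GBP 50,000 forecast, GBP 54,000 actual.
print(forecast_variance_pct(50_000, 54_000))

# Hypothetical AI workload: GBP 1,200 spent processing 40 million tokens.
print(cost_per_unit(1_200, 40_000_000))
```

Tracking the unit cost over time shows whether spend is growing because usage grew or because each unit of work became more expensive.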

Another critical metric is anomaly detection accuracy, which allows for quicker identification of spending issues and minimises waste. AI also significantly reduces the time spent on cost analysis - by up to 68% compared to manual methods [4]. This time saved enables teams to focus on strategic initiatives instead of routine data crunching.

Beyond costs, it’s important to assess the business impact. For example, AI-driven forecasting has been reported to boost Sales Conversion Rates by 75% and increase Average Order Value by 28%, an effect attributed to better alignment of resources with demand [4]. Downstream effects like these often reveal the true return on investment, illustrating how improved forecasting supports broader business goals.

To see how these principles translate into measurable returns, consider the following model.

Hokstad Consulting's No Savings, No Fee Model

One of the biggest barriers to adopting AI in cost forecasting is the uncertainty surrounding upfront investments. Hokstad Consulting addresses this with its No Savings, No Fee model. Here’s how it works: you only pay when measurable savings are realised, and fees are capped as a percentage of the actual cost reductions. Because the fee is a share of realised savings, the service always costs a fraction of the money saved.

This model is particularly appealing for organisations still building confidence in AI-driven solutions. To get the most out of it, consider adopting weekly or monthly rolling forecast reviews rather than relying solely on quarterly cycles [2]. With the inherent variability of AI and multi-cloud costs, frequent reviews allow for early detection of potential overspending, keeping budgets under control.

Conclusion: The Future of AI in Multi-Cloud Cost Management

AI-powered cost forecasting is reshaping how organisations manage multi-cloud expenses. However, achieving accurate forecasts requires tackling challenges like data consistency and model drift. Without reliable metadata, even the most advanced AI models struggle to deliver useful insights. Similarly, as cloud pricing and usage trends shift, continuous monitoring and retraining of models become essential to maintain accuracy [1][4]. By integrating MLOps practices, businesses can effectively track financial drift and adapt to changes in cloud architectures [1].

For those ready to embrace AI-driven forecasting, focusing on unit economics is critical. This approach uncovers inefficiencies that broader metrics often overlook, which is especially important given the massive cost differences - ranging from 30× to 200× - between unoptimised and fully engineered cloud platforms [2].

Combining these strategies with automated deployment tools can further streamline cost management. Setting up real-time budget alerts helps organisations stay on top of their spending, while adopting a rolling forecast schedule - whether weekly or monthly - can quickly identify and address unexpected costs. Additionally, ensuring proper IAM permissions can prevent costly mistakes, such as unauthorised experiments in sandbox environments [2].

For expert guidance, Hokstad Consulting offers tailored solutions in cloud cost engineering and AI strategy. Their No Savings, No Fee model provides a risk-free way to implement these advanced capabilities, making it easier for organisations to optimise their cloud investments.

FAQs

How does AI enhance the accuracy of multi-cloud cost forecasting compared to traditional methods?

AI has transformed the way businesses forecast multi-cloud costs, delivering a level of precision that traditional methods simply can't match. By combining real-time telemetry data with machine learning, AI-driven models significantly outperform conventional approaches that rely solely on historical data. Where older methods often result in forecast errors of 40–60%, AI reduces this margin to about 10%. This leap in accuracy gives businesses much better control over their cloud spending.

AI's ability to spot real-time patterns and trends doesn't just improve cost predictions - it also empowers companies to manage their budgets proactively. This means businesses can fine-tune their resource allocation and make smarter financial decisions across multi-cloud environments.

What AI techniques are commonly used to detect unusual cloud spending patterns?

In cloud environments, businesses often rely on machine learning models - both supervised and unsupervised algorithms - alongside time-series forecasting and pattern recognition techniques like clustering to detect unusual spending patterns. These methods are key for identifying irregularities in cloud usage and costs.

By using these advanced tools, organisations can fine-tune their multi-cloud strategies, minimise unnecessary expenses, and ensure that their spending stays in line with their operational objectives.

How can businesses maintain consistent data across multiple cloud platforms for accurate AI cost forecasting?

To achieve reliable AI-driven cost forecasting, businesses must focus on maintaining data consistency across platforms like AWS, Azure, and Google Cloud. A great starting point is to implement automated, standardised tagging when creating resources. These tags should include essential business details such as project name, owner, environment, and cost centre. Leveraging AI-powered tagging tools can help avoid manual mistakes and ensure uniformity across all cloud environments.
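A tag check along these lines can run at resource creation or in a nightly audit. The sketch below is a minimal example: the required tag keys follow the four details listed above, while the key normalisation rules and the sample resources are assumptions.

```python
REQUIRED_TAGS = ("project", "owner", "environment", "cost_centre")


def missing_tags(resource_tags):
    """Return the required tags that are absent or empty on a resource.

    Keys are compared case-insensitively, treating spaces and hyphens
    as underscores, so "Cost Centre" and "cost-centre" both count.
    """
    normalised = {
        key.strip().lower().replace("-", "_").replace(" ", "_"): value
        for key, value in resource_tags.items()
    }
    return [tag for tag in REQUIRED_TAGS if not str(normalised.get(tag, "")).strip()]


# Hypothetical resources from two different clouds.
resources = {
    "aws-vm-01": {"Project": "checkout", "Owner": "team-a", "environment": "prod"},
    "gcp-vm-07": {"project": "search", "owner": "team-b", "environment": "dev", "Cost Centre": "CC-104"},
}

for name, tags in resources.items():
    print(name, missing_tags(tags))
```

Normalising keys before checking matters in multi-cloud setups, because each platform has its own restrictions on tag and label naming.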

Another crucial step is consolidating data into a centralised repository, such as a UK-hosted data lake or warehouse. This approach allows raw usage logs to be collected via APIs, standardised into consistent units like GB-hours or vCPU-hours, and converted into GBP for seamless reporting. Store timestamps in a single, consistent time zone, present them in UK format in reports (e.g., 25 Oct 2023, 14:30), and use metric measurements throughout.

Lastly, set up regular synchronisation between the central repository and the cost management tools provided by each cloud platform. Automated scripts can be used to identify and correct any discrepancies, ensuring that AI models are always trained with accurate and current data. By following these steps, businesses can rely on their forecasts to drive effective budgeting and FinOps strategies.