AI is reshaping Identity and Access Management (IAM) by automating policy creation, improving security, and reducing operational risks. UK businesses, especially those managing cloud environments and adhering to GDPR, benefit significantly from these advancements. Here's how AI transforms IAM:

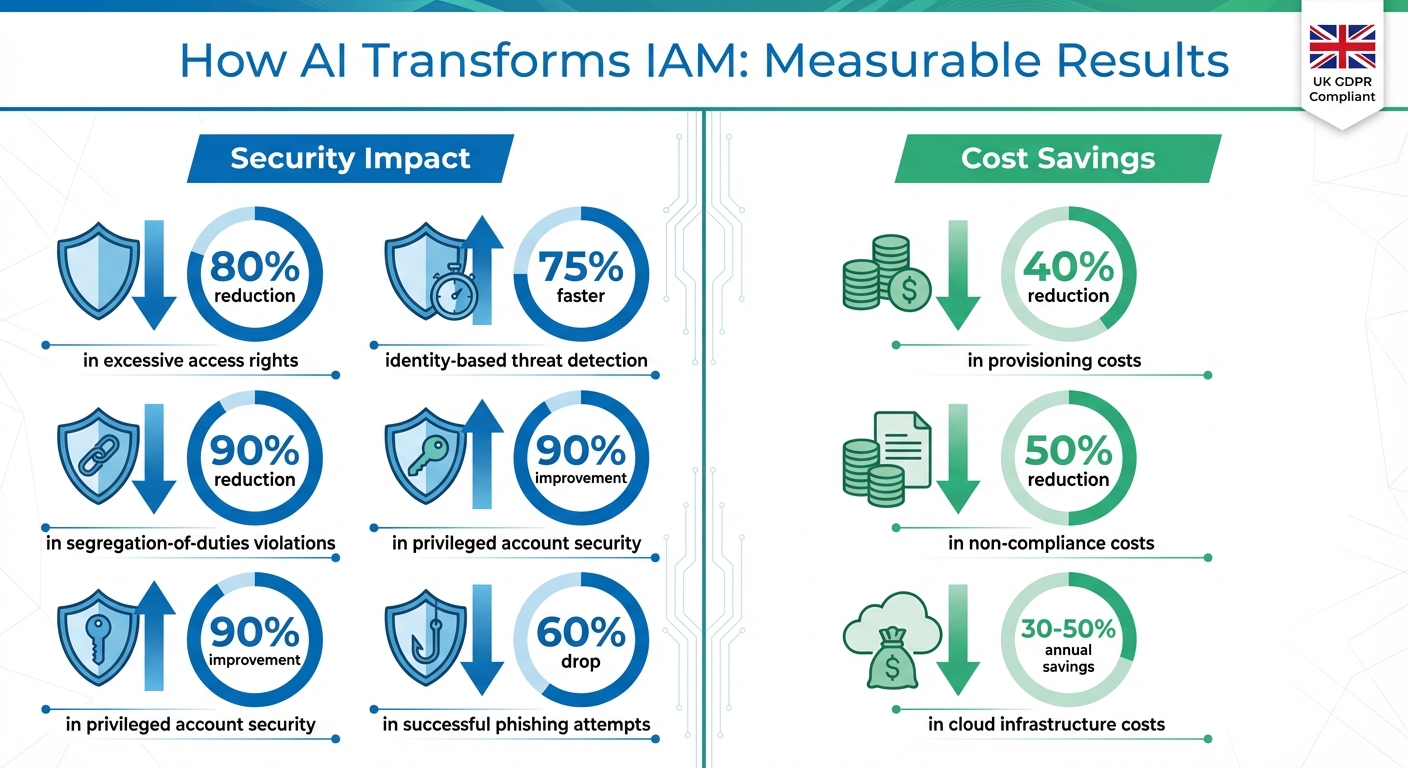

- Automated Least-Privilege Policies: AI analyses access logs to generate precise permissions. Organisations report up to 80% fewer excessive access rights [3].

- Role Optimisation: AI simplifies role-based access control (RBAC) by clustering users according to their actual behaviour. Adopters report a 90% reduction in segregation-of-duties violations [3].

- Anomaly Detection: AI flags unusual activity, such as logins from geographically implausible locations. Organisations report a 60% drop in successful phishing attempts [3].

- Cost Savings: Automation can lower provisioning costs by up to 40%. For large organisations, this can add up to significant annual savings.

AI-driven IAM integrates with existing workflows, such as CI/CD pipelines, and supports compliance with UK regulations. Challenges such as model bias and model drift remain. Starting small and keeping humans in the loop helps to mitigate these risks. Tools like AWS IAM Policy Autopilot, and partnerships with specialists such as Hokstad Consulting, can help organisations modernise IAM processes while optimising security and costs.

[Figure: AI-Driven IAM Impact: Key Statistics on Security and Cost Savings]


Key Applications of AI in IAM Policy Automation

AI is changing how organisations across the UK automate IAM policy for cloud access. Three areas stand out.

Automated Least-Privilege Policy Generation

AI takes the guesswork out of permissions. It analyses historical logs, API calls, and resource activity, then builds policies around what users and services actually need. Rather than starting from overly broad permissions, machine learning models examine months of real-world activity to pinpoint the exact access required. Code-analysis tools offer a complementary route. AWS IAM Policy Autopilot, for instance, scans application code, maps SDK calls, and generates policies that contain only the minimum necessary permissions. It also handles cross-service dependencies, so developers do not have to write JSON policy documents by hand [2].
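The following minimal Python sketch makes the log-driven approach concrete. It derives an allow-list policy from observed activity. The event format (`principal`, `eventSource`, `eventName`, `resource`) is a hypothetical, heavily simplified stand-in for CloudTrail records. Mapping an event to an IAM action as "service prefix plus event name" is an assumption: it holds for many AWS services, but not all. This is an illustration of the log-based idea, not how IAM Policy Autopilot works, since that tool analyses code rather than logs.

```python
import json
from collections import defaultdict

# Hypothetical, simplified audit events (real CloudTrail records have many more fields).
EVENTS = [
    {"principal": "svc-reports", "eventSource": "s3.amazonaws.com",
     "eventName": "GetObject", "resource": "arn:aws:s3:::reports-bucket/*"},
    {"principal": "svc-reports", "eventSource": "s3.amazonaws.com",
     "eventName": "GetObject", "resource": "arn:aws:s3:::reports-bucket/*"},
    {"principal": "svc-reports", "eventSource": "dynamodb.amazonaws.com",
     "eventName": "Query",
     "resource": "arn:aws:dynamodb:eu-west-2:123456789012:table/Orders"},
    {"principal": "svc-billing", "eventSource": "s3.amazonaws.com",
     "eventName": "PutObject", "resource": "arn:aws:s3:::billing-bucket/*"},
]


def to_iam_action(event: dict) -> str:
    """Approximate the IAM action for an event.

    Assumption: service prefix = first label of eventSource, action = eventName.
    This mapping holds for many AWS services but is not universal.
    """
    service = event["eventSource"].split(".")[0]
    return f"{service}:{event['eventName']}"


def generate_policy(events: list, principal: str) -> dict:
    """Allow only the actions the principal was observed using, grouped per resource."""
    actions_by_resource = defaultdict(set)
    for event in events:
        if event["principal"] == principal:
            actions_by_resource[event["resource"]].add(to_iam_action(event))
    statements = [
        {"Effect": "Allow", "Action": sorted(actions), "Resource": resource}
        for resource, actions in sorted(actions_by_resource.items())
    ]
    return {"Version": "2012-10-17", "Statement": statements}


if __name__ == "__main__":
    print(json.dumps(generate_policy(EVENTS, "svc-reports"), indent=2))
```

The observation window matters. An action used only by a quarterly job will be missing from a one-month sample. Generated policies should therefore still be reviewed before they replace existing ones.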

The reported results are striking. Organisations using AI to automate least-privilege policies have seen an 80% drop in excessive access rights and a 75% faster response to identity-based threats [3]. UK businesses must also satisfy the data minimisation principle of UK GDPR. For them, precise policies like these can mean fewer audit findings, lower regulatory risk, and a smaller attack surface. They also lay the groundwork for further AI-driven improvements in role management.

Role Mining and RBAC Optimisation

Traditional role-based access control (RBAC) often relies on job titles or organisational charts, which can lead to outdated or overly complex roles. AI-driven role mining changes this by using clustering algorithms to group users based on their actual access behaviours. This approach simplifies roles, identifies redundancies, and flags outliers whose access patterns don’t align with their peers. The outcome? Streamlined governance with fewer violations and tighter security for privileged accounts. In fact, organisations adopting this method have reported a 90% reduction in segregation-of-duties violations and a 90% improvement in privileged account security [3].
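Here is a minimal sketch of behaviour-based role mining. It groups users by the similarity of their entitlement sets (Jaccard similarity), takes the entitlements shared by each group as a candidate role, and flags members who hold extras. Production role-mining tools typically use more robust clustering (hierarchical clustering or k-means over entitlement vectors, for example). The greedy single pass below, the 0.6 threshold, and the sample data are simplifying assumptions chosen for illustration.

```python
# Hypothetical user -> entitlement data for illustration.
USERS = {
    "alice": {"crm:read", "crm:write", "reports:read"},
    "bob": {"crm:read", "crm:write", "reports:read"},
    "carol": {"crm:read", "crm:write", "reports:read", "payments:approve"},
    "dave": {"ledger:read", "ledger:write"},
    "erin": {"ledger:read", "ledger:write"},
}


def jaccard(a: set, b: set) -> float:
    """Similarity of two entitlement sets: size of intersection / size of union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def mine_roles(user_entitlements: dict, threshold: float = 0.6) -> list:
    """Greedy single-pass clustering of users by entitlement similarity.

    Each user joins the first cluster whose seed user is at least `threshold`
    similar; otherwise the user seeds a new cluster.
    """
    clusters = []  # list of (seed entitlements, member user ids)
    for user, ents in sorted(user_entitlements.items()):
        for seed, members in clusters:
            if jaccard(seed, ents) >= threshold:
                members.append(user)
                break
        else:
            clusters.append((ents, [user]))

    roles = []
    for _, members in clusters:
        # Candidate role: entitlements shared by every member of the cluster.
        core = set.intersection(*(user_entitlements[u] for u in members))
        # Outliers: members holding entitlements beyond the shared core.
        outliers = {
            u: sorted(user_entitlements[u] - core)
            for u in members
            if user_entitlements[u] - core
        }
        roles.append({"members": members, "role": sorted(core), "outliers": outliers})
    return roles


if __name__ == "__main__":
    for role in mine_roles(USERS):
        print(role)
```

With this data, alice, bob and carol form one candidate role. Carol is flagged for holding `payments:approve` on top of it, which is the kind of outlier that might warrant a segregation-of-duties review.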

Risk-Based Access and Anomaly Detection

AI doesn’t stop at creating policies and refining roles - it also enhances access control by continuously assessing risks. By analysing factors like geolocation, time of day, device security, and deviations from typical behaviour, AI enables context-aware decisions in real time. For example, it can flag unusual access attempts from non-corporate devices or impossible travel scenarios, such as logging in from London and another distant location within a short timeframe. This kind of behavioural analysis can also detect subtle threats, like gradual privilege abuse or incremental access escalations, that static rules often overlook.
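The context signals above can be combined in a very small rule-based sketch. It detects impossible travel from the great-circle distance and the time between two logins, then adds device and time-of-day signals into a score that maps to allow, step-up MFA, or deny. The `Login` record, the 900 km/h speed cap, the 100 km geolocation tolerance, and all weights and thresholds are illustrative assumptions. A real system would learn or calibrate these rather than hard-code them.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


@dataclass
class Login:
    user: str
    time: datetime
    lat: float
    lon: float
    corporate_device: bool


def haversine_km(a: Login, b: Login) -> float:
    """Great-circle distance between two login locations, in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (a.lat, a.lon, b.lat, b.lon))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))


def impossible_travel(prev: Login, curr: Login,
                      max_speed_kmh: float = 900.0, noise_km: float = 100.0) -> bool:
    """Flag consecutive logins whose implied speed exceeds a plausible flight."""
    distance = haversine_km(prev, curr)
    if distance <= noise_km:  # IP geolocation is imprecise; ignore short hops
        return False
    hours = (curr.time - prev.time).total_seconds() / 3600
    return hours <= 0 or distance / hours > max_speed_kmh


def risk_decision(prev: Login, curr: Login) -> str:
    """Combine signals into a score and map it to an access decision.

    The weights and thresholds are illustrative, not calibrated values.
    """
    score = 0
    if impossible_travel(prev, curr):
        score += 60
    if not curr.corporate_device:
        score += 25
    if curr.time.hour < 6 or curr.time.hour >= 22:  # outside assumed working hours
        score += 15
    if score >= 60:
        return "deny"
    if score >= 25:
        return "step_up_mfa"
    return "allow"


if __name__ == "__main__":
    london = Login("alice", datetime(2024, 5, 1, 9, 0), 51.5074, -0.1278, True)
    sydney = Login("alice", datetime(2024, 5, 1, 10, 0), -33.8688, 151.2093, True)
    print(risk_decision(london, sydney))
```

A login from Sydney one hour after a login from London implies a speed far above any flight, so the sketch denies it. A London login from a personal device would instead trigger step-up MFA.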

The impact is substantial. Organisations using AI for anomaly detection have seen a 60% drop in successful phishing attempts, as AI correlates identity data, access patterns, and risk signals to block compromised credentials before they cause harm [3]. For UK businesses managing complex DevOps pipelines or cloud infrastructure, partners like Hokstad Consulting can help integrate these AI tools into existing CI/CD workflows and governance frameworks, ensuring adaptive access controls meet both security and operational needs.

Building AI-Driven IAM Policy Solutions

Creating an AI-driven Identity and Access Management (IAM) architecture for UK businesses involves layering five critical components, from raw data at the bottom to oversight at the top:

- Data and telemetry: consolidates identity stores, access logs, HR systems, ticketing systems, and cloud audit logs into a centralised security data lake or SIEM.

- Features and models: uses AI for role mining, least-privilege recommendations, risk scoring, and anomaly detection, all based on historical access patterns.

- Policy engine and decisions: converts AI outputs into actionable policies and conditional access rules, integrating with AWS IAM, Microsoft Entra ID (formerly Azure AD), GCP IAM, or on-premises identity systems.

- Orchestration and workflow: manages access requests, approval processes, and joiner-mover-leaver workflows.

- Governance and monitoring: supports compliance with UK GDPR through dashboards, KPI tracking, audit reporting, and human oversight of high-impact changes.

Most UK enterprises implement this as a hybrid model. Cloud-native services connect to on-premises directories and HR systems, and data residency controls are tailored to UK and EU requirements. Together, these layers turn raw data into precise, compliant IAM policies. The sections below look more closely at data foundations, workflow integration, and governance.

Data and Telemetry Requirements

AI-driven IAM relies heavily on comprehensive and accurate telemetry. Organisations need to gather identity and HR data - such as user identifiers, roles, departments, employment status, manager relationships, and location - to support role mining and joiner-mover-leaver workflows. Access logs should capture authentication events, authorisation decisions, resource usage, and privilege escalations. Application and API activity from cloud audit logs (e.g., AWS CloudTrail, Azure Activity Log, Google Cloud Audit Logs) provides insights into actual permission usage versus granted permissions. Security signals from SIEM alerts, endpoint telemetry, and threat intelligence help identify anomalous access patterns and potential risks.

Under UK GDPR, much of this data is classified as personal data, requiring organisations to define clear legal bases for its collection, often under legitimate interests or legal obligations for security and compliance. Data minimisation is critical - only collect attributes necessary for security analytics. Use pseudonymisation for analytic datasets and enforce strict access controls on raw logs. Organisations should also respect data subject rights and adhere to retention limits, keeping detailed logs only as long as necessary for audits or compliance. A practical setup involves maintaining a secure raw log store alongside an anonymised or aggregated analytics store for training AI models.
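A keyed hash is one common way to pseudonymise identifiers before they reach an analytics store. The sketch below uses HMAC-SHA256 from Python's standard library and keeps only the fields a model is assumed to need. The field names, the retained-field list, and the key handling are hypothetical. Pseudonymised data remains personal data under UK GDPR, because anyone holding the key can re-link tokens to individuals. The key should therefore be stored and access-controlled separately from the analytics data.

```python
import hashlib
import hmac


def pseudonymise(user_id: str, key: bytes) -> str:
    """Replace a user identifier with a keyed HMAC-SHA256 token.

    The same user always maps to the same token, so behaviour can still be
    linked across events, but the mapping cannot be rebuilt without the key.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_analytics_record(event: dict, key: bytes,
                        keep: tuple = ("action", "resource", "timestamp")) -> dict:
    """Data minimisation: keep only the listed fields and pseudonymise the user."""
    record = {field: event[field] for field in keep if field in event}
    record["user_token"] = pseudonymise(event["user_id"], key)
    return record


if __name__ == "__main__":
    key = b"example-key-load-from-a-secrets-manager"  # hypothetical; never hard-code real keys
    event = {
        "user_id": "alice@example.co.uk",
        "email": "alice@example.co.uk",
        "source_ip": "203.0.113.7",
        "action": "s3:GetObject",
        "resource": "arn:aws:s3:::hr-bucket/*",
        "timestamp": "2024-05-01T09:00:00Z",
    }
    print(to_analytics_record(event, key))
```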

Integrating AI with Existing IAM Workflows

For seamless adoption, AI should enhance existing IAM workflows rather than operate as a standalone system. In DevOps and Infrastructure as Code (IaC), tools like AWS IAM Policy Autopilot demonstrate how AI can analyse code to automatically generate least-privilege IAM policies, integrating directly into developer workflows and CI/CD pipelines. Similar methods can be applied to tools like Terraform, ARM/Bicep, and Deployment Manager, where AI suggests or validates IAM configurations before changes are merged.
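A validation step in a pipeline can start as a simple guardrail that runs before an AI-suggested or hand-written policy is merged. The sketch below assumes the policy document has already been rendered to JSON, for example from an IaC template. It flags `Allow` statements with wildcard actions or an unrestricted `*` resource. It is deliberately narrow: it ignores `NotAction`, conditions, and partially wildcarded ARNs. The function names are hypothetical.

```python
import json


def _as_list(value):
    """IAM policy fields may be a single string or a list of strings."""
    return [value] if isinstance(value, str) else list(value)


def find_wildcards(policy: dict) -> list:
    """Report Allow statements that grant broad actions or unrestricted resources."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action", [])):
            if action == "*" or action.endswith(":*"):
                findings.append(f"Statement {i}: broad action '{action}'")
        if "*" in _as_list(stmt.get("Resource", [])):
            findings.append(f"Statement {i}: unrestricted resource '*'")
    return findings


def main(path: str) -> int:
    """Pipeline entry point: print findings and return a non-zero exit code if any."""
    with open(path, encoding="utf-8") as f:
        findings = find_wildcards(json.load(f))
    for finding in findings:
        print(finding)
    return 1 if findings else 0
```

A pipeline step could call `sys.exit(main("policy.json"))`, so that a non-zero status blocks the merge until a reviewer narrows the policy or records an explicit exception.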

In change management, AI-generated policy updates can be presented as pull requests or tickets for review by security engineers, ensuring approvals follow established processes. For access request workflows, AI can recommend entitlements based on peer groups and historical approval patterns, providing explainable suggestions rather than raw policies.
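One simple, explainable form of peer-based recommendation suggests the entitlements that most people in the same department already hold, and says so in the suggestion. The sketch below assumes a hypothetical directory of `(department, entitlements)` pairs and a 70% peer-share threshold. It does not model historical approval patterns, which a real recommender would also weigh.

```python
from collections import Counter

# Hypothetical directory: user -> (department, entitlements).
DIRECTORY = {
    "alice": ("Finance", {"ledger:read", "ledger:write", "reports:read"}),
    "bob": ("Finance", {"ledger:read", "reports:read"}),
    "carol": ("Finance", {"ledger:read", "reports:read", "payments:approve"}),
    "dave": ("Sales", {"crm:read", "crm:write"}),
}


def recommend_entitlements(department: str, directory: dict, min_share: float = 0.7) -> list:
    """Suggest entitlements held by at least `min_share` of department peers.

    Each suggestion carries a plain-English reason, so reviewers see why it was made.
    """
    peers = [ents for dept, ents in directory.values() if dept == department]
    if not peers:
        return []
    counts = Counter(ent for ents in peers for ent in ents)
    suggestions = []
    for ent, n in sorted(counts.items()):
        share = n / len(peers)
        if share >= min_share:
            reason = f"held by {n} of {len(peers)} peers in {department} ({share:.0%})"
            suggestions.append((ent, reason))
    return suggestions


if __name__ == "__main__":
    for entitlement, reason in recommend_entitlements("Finance", DIRECTORY):
        print(f"{entitlement}: {reason}")
```

Because each suggestion states its evidence, a reviewer can see at once why `ledger:write`, held by only one Finance peer, was not proposed.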

UK organisations often start with AI in an advisory role, using it to measure error reductions and time savings before moving to full automation. Hokstad Consulting, for example, helps integrate AI tools into existing CI/CD workflows and governance frameworks, enabling adaptive access controls while optimising security and operational efficiency.

Governance and Oversight in Policy Automation

Once AI becomes part of IAM workflows, strong governance ensures compliance with regulatory and security standards. For UK businesses, this includes establishing clear accountability: assigning ownership of AI models (e.g., to the CISO or IAM lead), data (to the DPO or data owner), and operations (to SecOps or DevSecOps), in line with UK GDPR principles. Implementing risk-based approval tiers is crucial - low-risk changes, like removing unused entitlements, can be auto-approved, while higher-risk modifications (e.g., those involving privileged roles or cross-border access) require explicit human approval, often with multi-person sign-off. A documented policy for AI use in security should outline objectives, data processing methods, model validation protocols, and the right to override AI decisions, supporting transparency under GDPR and emerging AI governance guidelines.
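The risk-based approval tiers described above can be expressed as a small routing function. The following is a minimal sketch, assuming a hypothetical `PolicyChange` record. The 90-day "unused" threshold and the tier names are illustrative, not drawn from any regulation. Privileged or cross-border changes always go to multi-person sign-off. Removal of long-unused entitlements is auto-approved. Everything else gets a single human reviewer.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    AUTO_APPROVE = "auto-approve"
    SINGLE_APPROVER = "single approver"
    MULTI_PERSON = "multi-person sign-off"


@dataclass
class PolicyChange:
    kind: str             # "grant" or "remove"
    entitlement: str
    unused_for_days: int  # days since the entitlement was last exercised
    privileged: bool      # touches an admin or otherwise privileged role
    cross_border: bool    # grants or moves access across jurisdictions


def approval_tier(change: PolicyChange, unused_threshold_days: int = 90) -> Tier:
    """Route an AI-proposed change to an approval tier by risk."""
    # Higher-risk changes always need explicit, multi-person human approval.
    if change.privileged or change.cross_border:
        return Tier.MULTI_PERSON
    # Removing an entitlement nobody has used for a long time is low risk.
    if change.kind == "remove" and change.unused_for_days >= unused_threshold_days:
        return Tier.AUTO_APPROVE
    # Everything else gets a single human reviewer.
    return Tier.SINGLE_APPROVER
```

Checking the privileged and cross-border conditions first means a high-risk change can never slip into the auto-approve path, even when it is a removal.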

Organisations should monitor KPIs related to security, operational efficiency, and compliance. Metrics might include reductions in excessive privileges, faster detection of identity-based threats, and improved audit outcomes. For example, some organisations have reported an 80% drop in excessive access rights and a 75% improvement in identity threat detection times [3]. Comprehensive logging of AI recommendations, human decisions, and resulting policy states is essential for regulatory and internal audits. UK-regulated sectors should also align governance practices with guidance from bodies like the FCA, PRA, or ICO.


Challenges and Best Practices

Risks and Limitations of AI in IAM

AI-powered Identity and Access Management (IAM) comes with its own set of challenges, including model bias, explainability issues, over-reliance on automation, and model drift.

Model bias can lead to skewed access controls. For instance, if the training data disproportionately represents specific roles, departments, or regions, the AI might consistently grant too much or too little access to certain user groups. This can create systemic imbalances in access permissions.[7] Another pressing issue is the explainability gap. Many machine learning models operate as "black boxes", making it difficult for security teams to understand why a particular policy was suggested or why a user was flagged. This lack of transparency can erode trust and complicate compliance, especially under UK GDPR.[1][7]

Over-reliance on automation is another risk. Without proper human oversight, automated systems could inadvertently lock users out of critical services or grant inappropriate access levels.[4][6] The quality of data is also key - poor telemetry or incomplete logs can result in unreliable models, leading to missed threats or an increase in false positives.[7][5] Lastly, there’s the issue of model drift. As applications, cloud services, and user behaviours evolve, AI models trained on older data may become misaligned with current risks. If these models aren't regularly updated and validated, they can fail to maintain effective security policies.[3][7] To tackle these challenges, a structured and cautious approach to AI deployment is essential.
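One practical way to watch for drift is to compare the distribution of a model input between the training window and recent traffic. A good candidate is which API actions appear in access logs. The sketch below uses the Population Stability Index (PSI), a metric borrowed from credit-risk modelling. The 0.1 and 0.25 bands are a widely used rule of thumb rather than a standard. The choice of feature to monitor and the `eps` floor for unseen categories are assumptions.

```python
import math
from collections import Counter


def psi(baseline: list, current: list, eps: float = 1e-4) -> float:
    """Population Stability Index between two non-empty categorical samples.

    PSI = sum over categories of (p_cur - p_base) * ln(p_cur / p_base).
    `eps` stands in for zero frequencies so the logarithm stays defined.
    """
    base_counts, cur_counts = Counter(baseline), Counter(current)
    total = 0.0
    for category in set(base_counts) | set(cur_counts):
        p_base = max(base_counts[category] / len(baseline), eps)
        p_cur = max(cur_counts[category] / len(current), eps)
        total += (p_cur - p_base) * math.log(p_cur / p_base)
    return total


def drift_level(value: float) -> str:
    """Map a PSI value to a conventional (heuristic) band."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate"
    return "significant"


if __name__ == "__main__":
    training = ["s3:GetObject"] * 80 + ["dynamodb:Query"] * 20
    recent = ["s3:GetObject"] * 20 + ["dynamodb:Query"] * 20 + ["lambda:InvokeFunction"] * 60
    value = psi(training, recent)
    print(f"PSI={value:.2f} ({drift_level(value)})")
```

A "significant" result does not prove the model is wrong. It signals that the model was trained on a world that no longer matches current usage, which is a cue to review and possibly retrain it.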

Best Practices for AI-Driven IAM Automation

To navigate the risks associated with AI in IAM, it’s wise to start small and proceed cautiously. Begin with low-risk, report-only deployments. Use AI to identify excessive permissions, dormant accounts, and unusual access patterns, but keep humans in charge of making policy changes until the AI's accuracy and reliability have been proven in real-world conditions.[4][5] Once confidence is built, incorporate AI recommendations into established change management processes, ensuring peer review and thorough testing.[2][4]

Focus on specific, well-defined use cases rather than aiming for fully autonomous IAM solutions from the outset. For example, AI can suggest least-privilege policies for particular cloud services or flag risky access combinations for review. Embedding AI-driven IAM into familiar workflows - such as access review campaigns, joiner-mover-leaver processes, or security incident responses - helps teams adopt these tools without disrupting their existing practices.[4][6]

Additionally, invest in training and change management. Equip security, DevOps, and compliance teams with the knowledge to interpret AI-generated risk scores, understand how the models work, and override decisions when necessary. This empowers teams to use AI effectively while maintaining control over critical IAM decisions.[1][7] These practices not only reduce risks but also improve efficiency and resource allocation.

Cost and Scalability Considerations

Adopting these best practices doesn’t just enhance security - it can also lead to substantial cost savings. For example, automated audit trails and policy-violation detection can reduce non-compliance costs by up to 50%.[5]

That said, organisations must account for the costs associated with AI model training, data storage, and logging infrastructure. Smart resource management can help offset these expenses. By optimising compute resources and automating intelligently, businesses can reduce cloud costs by 30–50% annually.[9] Hokstad Consulting, for instance, supports UK organisations in designing scalable IAM architectures that balance security needs with budget constraints. Their approach integrates AI tools into existing CI/CD workflows and governance structures, while also optimising cloud infrastructure costs. Regularly retraining models is critical to address model drift and ensure that IAM systems scale effectively as cloud environments expand.[2][8][5] These strategies not only keep costs manageable but also ensure that AI-driven IAM solutions remain effective and compliant as organisations grow.

Conclusion

AI-powered IAM automation is transforming how organisations manage access, detect threats, and reduce breach-related costs. As mentioned earlier, businesses implementing these solutions report up to 80% fewer excessive access rights and a 75% improvement in threat detection times[3]. These advancements not only minimise security incidents but also help organisations meet UK GDPR requirements while reducing the financial impact of breaches. By automating tasks like access reviews, provisioning, and audit evidence collection, IT and security teams can shift their focus from repetitive chores to more strategic priorities[4].

Looking ahead, emerging AI technologies promise to push IAM capabilities even further. Generative AI is already being applied to IAM policy creation. AWS IAM Policy Autopilot, for example, combines precise code analysis with AI-driven validation to craft least-privilege policies directly from application code. This approach guards against AI "hallucinating" incorrect permissions by incorporating schema checks, test harnesses, and human oversight. In the near future, engineers may simply describe access needs in natural language, with AI generating and validating secure policies.
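Schema checks of this kind can be simple. The minimal sketch below parses a model's output and checks basic policy structure. It then rejects any action missing from a catalogue of known actions, which catches invented action names. The tiny catalogue and the function name are hypothetical; a real check would load the cloud provider's full published action list. This is not a description of how IAM Policy Autopilot validates its output.

```python
import json

# Hypothetical excerpt of a service action catalogue. A real check would load the
# full list of actions published by the cloud provider.
KNOWN_ACTIONS = {
    "s3": {"GetObject", "PutObject", "ListBucket", "DeleteObject"},
    "dynamodb": {"GetItem", "PutItem", "Query", "Scan"},
}


def validate_generated_policy(text: str) -> list:
    """Return a list of problems found in a model-generated policy (empty = passed)."""
    try:
        policy = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(policy, dict):
        return ["policy must be a JSON object"]

    errors = []
    if policy.get("Version") != "2012-10-17":
        errors.append("missing or unexpected Version")
    statements = policy.get("Statement")
    if isinstance(statements, dict):  # a single statement may be an object
        statements = [statements]
    if not isinstance(statements, list) or not statements:
        return errors + ["Statement must be a non-empty list"]

    for i, stmt in enumerate(statements):
        if not isinstance(stmt, dict):
            errors.append(f"Statement {i}: must be an object")
            continue
        if stmt.get("Effect") not in ("Allow", "Deny"):
            errors.append(f"Statement {i}: Effect must be Allow or Deny")
        if "Resource" not in stmt:
            errors.append(f"Statement {i}: missing Resource")
        actions = stmt.get("Action", [])
        for action in [actions] if isinstance(actions, str) else actions:
            # Catalogue check: catches invented ("hallucinated") action names.
            # Wildcards such as "s3:Get*" are also rejected, deliberately.
            service, _, name = action.partition(":")
            if name not in KNOWN_ACTIONS.get(service, set()):
                errors.append(f"Statement {i}: unknown action '{action}'")
    return errors
```

Rejecting wildcards here is a conservative choice. It forces a generated policy to spell out each action, and those explicit actions are easier for the human reviewer to check.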

IAM is also becoming more integrated with broader security and governance platforms. Identity context is no longer siloed; it now works alongside SIEM, XDR, zero trust, and data security tools to provide unified risk scoring and coordinated responses. For instance, AI can analyse telemetry across identity, device, network, and data layers. A high-risk signal from endpoint monitoring could automatically adjust IAM policies or trigger additional authentication measures[3]. This interconnected approach paves the way for more actionable and cohesive security strategies.

For UK organisations aiming to adopt these advancements, a practical starting point involves strengthening data foundations, modernising IAM workflows, and running small-scale pilot projects. Steps like centralising identity telemetry, cleaning up outdated accounts, and standardising joiner–mover–leaver processes ensure AI models are fed reliable data. Pilot projects - focused on areas such as anomaly detection or automated audit reporting - allow teams to test the waters, demonstrating measurable improvements in managing excessive permissions before scaling up. Aligning these efforts with existing security and DevOps practices ensures a smooth integration of AI-driven IAM solutions. Hokstad Consulting offers support for UK enterprises on this journey, helping them incorporate AI into their DevOps and cloud workflows, optimise infrastructure costs, and maintain compliance with governance and security goals.

FAQs

How does AI enhance security in IAM policy automation?

AI strengthens security in IAM policy automation by detecting threats in real time, spotting unusual activity, and updating policies automatically. This reduces the chance of human error and helps keep access controls aligned with current risks and actual usage.

Through constant monitoring and analysis of behaviour, AI adjusts to emerging risks, enabling organisations to maintain strong access controls while streamlining the often complicated process of managing IAM policies.

What challenges arise when using AI for IAM policy automation?

Using AI to streamline Identity and Access Management (IAM) policies comes with its own set of hurdles. Data privacy and security sit at the top of the list. Since AI systems often depend on sensitive data to operate effectively, safeguarding this information while staying compliant with regulations is absolutely critical.

Then there’s the issue of accuracy and fairness. AI models aren’t immune to biases or mistakes, and these can result in granting or denying access inappropriately. Getting AI to work smoothly alongside existing IAM systems adds another layer of complexity, often demanding advanced technical skills and significant resources.

Finally, transparency and interpretability are non-negotiable. Organisations must understand how AI-driven decisions are made to ensure they meet compliance standards and foster trust in the system. Tackling these challenges calls for thorough planning, strong governance frameworks, and a commitment to ethical AI practices.

How can UK businesses use AI-driven IAM while staying GDPR compliant?

To ensure compliance with GDPR while using AI-driven IAM, UK businesses need to focus on protecting data through robust safeguards and frequent risk evaluations. Being transparent is essential - clearly communicate to users how their personal data is being used and processed.

It's equally important to enforce strict access controls so that only authorised individuals can manage sensitive information.

Moreover, AI systems should be carefully designed to reduce bias and safeguard personal data at every stage of their use. By adhering to GDPR principles, organisations can keep their IAM processes secure, responsible, and aligned with UK regulations.