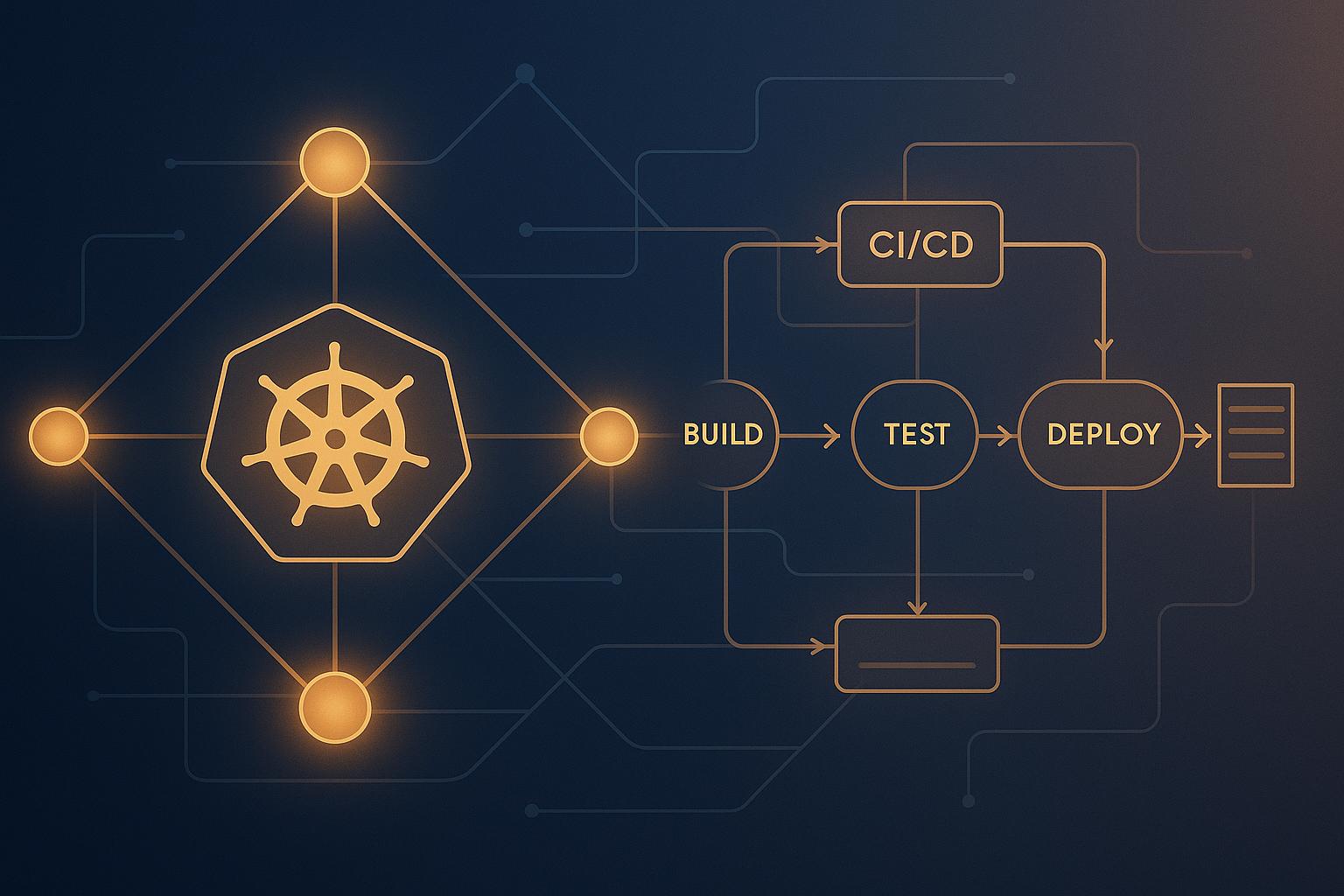

Want to keep your Kubernetes CI/CD pipelines running smoothly, no matter what? High availability ensures your deployments stay online, even during failures or maintenance. With downtime costing UK businesses upwards of £80,000 per incident, it's critical to build resilient pipelines.

Here’s how to achieve it:

- Replication and Redundancy: Use ReplicaSets and multi-zone deployments to eliminate single points of failure.

- Control Plane Resilience: Strengthen cluster management with multi-master setups and distributed nodes.

- Automated Failover: Configure health checks, backups, and disaster recovery drills for quick recovery.

- Monitoring: Use tools like Prometheus, Grafana, and AI-driven alerts to detect and fix issues early.

- Load Balancing and Multi-Region Deployments: Distribute workloads globally for fault tolerance and better performance.

These strategies reduce downtime, improve deployment speed, and keep your systems resilient under pressure. Whether you're a small startup or a large enterprise, these steps are vital for maintaining a reliable CI/CD pipeline.

Step 1: Set Up Replication and Redundancy

In Kubernetes CI/CD pipelines, redundancy plays a key role in keeping critical services running smoothly, even when components fail. By using ReplicaSets and deploying across multiple zones, you can create service copies and distribute them across infrastructure zones, forming a solid backbone for reliable CI/CD operations.

Using ReplicaSets to Ensure Service Redundancy

ReplicaSets in Kubernetes are designed to maintain a specific number of identical pod replicas at all times; in practice you usually create them through a Deployment, which manages the ReplicaSet for you. For instance, a ReplicaSet configured with three replicas ensures that if one pod goes down, Kubernetes automatically starts a replacement while the remaining replicas continue handling requests [5][3].
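As a minimal sketch of the three-replica example, the manifest can be written as a Python dictionary and serialised to JSON, which `kubectl apply -f` accepts alongside YAML. It uses a Deployment, which creates and manages the ReplicaSet. The `build-agent` name, the `ci` namespace, and the image are hypothetical placeholders.

```python
import json


def build_agent_deployment(replicas: int = 3) -> dict:
    """Return a Deployment manifest that keeps `replicas` identical pods running."""
    labels = {"app": "build-agent"}  # hypothetical label
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "build-agent", "namespace": "ci"},  # hypothetical names
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "agent",
                            # Hypothetical image reference.
                            "image": "registry.example.com/ci/build-agent:1.0",
                        }
                    ]
                },
            },
        },
    }


manifest = build_agent_deployment()
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and applying it would ask Kubernetes to keep three pods running and replace any that fail.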

This approach is particularly useful for keeping essential CI/CD components - like build servers, artifact repositories, and deployment tools - up and running. Load balancers and ingress controllers then distribute traffic evenly across the replicas, so no single instance is overwhelmed.

Running multiple replicas does require additional resources, but this is a small price to pay for uninterrupted availability. Defining replicas through Infrastructure as Code (IaC) tooling helps maintain consistency across them, minimising the risk of configuration drift.

Multi-Zone Deployments for Greater Fault Tolerance

While ReplicaSets handle pod-level redundancy, multi-zone deployments tackle infrastructure-level failures. By spreading workloads across multiple availability zones, you eliminate single points of failure. For example, if an entire zone experiences issues like power outages or network disruptions, Kubernetes can reschedule workloads to healthy nodes in other zones [4].
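One way to ask the scheduler for zone spreading is a topology spread constraint on the pod spec. The sketch below builds that fragment in Python; it assumes nodes carry the standard `topology.kubernetes.io/zone` label, and the `build-agent` label and image are hypothetical.

```python
def spread_across_zones(pod_labels: dict, max_skew: int = 1) -> dict:
    """Build a constraint that keeps matching pods balanced across zones."""
    return {
        # Largest allowed difference in pod count between any two zones.
        "maxSkew": max_skew,
        "topologyKey": "topology.kubernetes.io/zone",
        # Prefer spreading, but still schedule if a zone is unavailable.
        "whenUnsatisfiable": "ScheduleAnyway",
        "labelSelector": {"matchLabels": pod_labels},
    }


labels = {"app": "build-agent"}  # hypothetical label
pod_spec = {
    "topologySpreadConstraints": [spread_across_zones(labels)],
    "containers": [
        {"name": "agent", "image": "registry.example.com/ci/build-agent:1.0"}
    ],
}
print(pod_spec["topologySpreadConstraints"][0])
```

`ScheduleAnyway` favours availability over strict balance; `DoNotSchedule` is the stricter alternative when an even spread matters more than getting every pod placed.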

This strategy ensures your CI/CD pipeline keeps running, even during large-scale disruptions. Combining multi-zone deployments with Kubernetes Services, which provide a stable access point regardless of pod location, further bolsters fault tolerance. Although this setup might introduce minor latency, the added resilience is well worth it - especially for CI/CD workloads where uptime is critical.

Comparing Replication Strategies

Different CI/CD components require tailored replication strategies. Here’s a breakdown:

| Strategy | Best For | Fault Tolerance | Complexity | Performance Impact |
|---|---|---|---|---|
| ReplicaSets (Single-Zone) | Stateless build agents, API gateways | Handles pod-level failures | Low | Minimal |
| ReplicaSets (Multi-Zone) | Web services, load balancers | Mitigates both zone and pod failures | Medium | Slight latency increase |
| StatefulSets (Single-Zone) | Databases, message queues | Preserves data consistency during pod failures | High | Moderate |
| StatefulSets (Multi-Zone) | Distributed databases, persistent storage | Maintains data consistency even during zone failures | Very High | Higher latency |

For stateless applications, ReplicaSets are ideal as they allow any replica to handle incoming requests equally. On the other hand, StatefulSets are essential for stateful applications requiring persistent storage and data consistency, offering ordered deployment and scaling.
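For contrast with a stateless Deployment, here is a rough sketch of a StatefulSet manifest as a Python dictionary. The key differences are the `serviceName` (stable network identity) and `volumeClaimTemplates` (one persistent volume per pod). The `artifact-db` name, the storage size, and the choice of a PostgreSQL image are illustrative assumptions.

```python
import json

labels = {"app": "artifact-db"}  # hypothetical label

statefulset = {
    "apiVersion": "apps/v1",
    "kind": "StatefulSet",
    "metadata": {"name": "artifact-db", "namespace": "ci"},  # hypothetical names
    "spec": {
        # Headless Service that gives each pod a stable DNS name.
        "serviceName": "artifact-db",
        "replicas": 3,
        "selector": {"matchLabels": labels},
        "template": {
            "metadata": {"labels": labels},
            "spec": {
                "containers": [
                    {
                        "name": "db",
                        "image": "postgres:16",  # illustrative image choice
                        "volumeMounts": [
                            {"name": "data", "mountPath": "/var/lib/postgresql/data"}
                        ],
                    }
                ]
            },
        },
        # Each replica gets its own PersistentVolumeClaim from this template.
        "volumeClaimTemplates": [
            {
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": "10Gi"}},
                },
            }
        ],
    },
}

print(json.dumps(statefulset, indent=2))
```

Note that a StatefulSet only provides stable identity and storage; replicating data between the three database pods is still the database's job.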

Single-zone deployments are simpler to manage but leave systems vulnerable to zone-wide outages. Multi-zone setups, while more complex, provide robust fault tolerance by enabling automatic failover to unaffected zones. However, this comes with challenges like more intricate network configurations and data synchronisation requirements.

For UK businesses, Hokstad Consulting can assist in crafting tailored replication strategies that balance availability with cost efficiency. Ultimately, the right approach depends on your application’s state requirements and how much complexity you’re willing to manage. Many CI/CD pipelines benefit from a hybrid model - using ReplicaSets for stateless components and StatefulSets for stateful ones.

Step 2: Strengthen Control Plane and Node Resilience

To keep your Kubernetes cluster running smoothly and avoid system-wide failures, it's crucial to reinforce both the control plane and worker nodes. This section outlines practical strategies to make your cluster more resilient.

Control Plane Redundancy

Think of the control plane as the command centre of your Kubernetes cluster - it handles everything from processing API requests to making scheduling decisions. If the control plane fails, pods that are already running generally keep running, but nothing new can be scheduled, failed pods are not replaced, and deployments cannot be applied. For a CI/CD pipeline that means builds and releases stall, which is why redundancy here is non-negotiable for high availability.

The best practice? Run at least three control plane nodes, each with an API server, and three etcd members, spreading them across different availability zones. etcd needs a majority of its members to agree on every write, so three members can lose one and still keep quorum. This minimises the risk of data loss and keeps your cluster operational even if one zone encounters issues. Managed services such as AWS EKS follow the same principle by running the control plane across multiple availability zones [6].
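The reason for three members, rather than two or four, comes from majority-quorum arithmetic. A short sketch of the calculation (function names are illustrative):

```python
def quorum(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """How many members can be lost while a majority is still available."""
    return members - quorum(members)


for n in (1, 2, 3, 4, 5):
    print(f"members={n} quorum={quorum(n)} tolerated={failures_tolerated(n)}")
```

Three members tolerate one failure and five tolerate two, while an even count such as four tolerates no more than three does. That is why odd cluster sizes are the usual recommendation.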

When it comes to deployment, you have two main options:

- Integrated Deployment: Here, etcd runs on the same nodes as other control plane components. It’s simpler to manage and less costly, making it a good fit for smaller clusters or budget-conscious setups.

- Dedicated etcd Deployment: In this approach, etcd is hosted on its own cluster, separate from the control plane. While this increases complexity, it offers better fault isolation and scalability, which is ideal for large, mission-critical environments [7].

For UK organisations, particularly those in highly regulated industries like finance or healthcare, the dedicated etcd setup can be a smart choice. By isolating etcd, which stores the cluster’s state data, you add an extra layer of resilience, ensuring the control plane remains functional even if individual nodes fail.

Worker Node Distribution

While redundancy in the control plane safeguards cluster management, distributing worker nodes ensures your applications and workloads stay online during infrastructure hiccups. Spread worker nodes across multiple zones (or even regions) so that workloads can automatically shift to healthy nodes if one zone goes down. For example, if a zone outage occurs, CI/CD jobs can seamlessly continue on nodes in other zones.

To maintain consistency across your nodes, use DaemonSets. These ensure critical services - like monitoring, logging, and security - are deployed uniformly across every node, giving you both visibility and security across your entire CI/CD setup.

Load Balancers and Multi-Master Setups

Load balancers play a key role in directing traffic to only the healthy control plane nodes. A robust configuration typically includes both internal and external load balancers: external ones manage traffic from outside the cluster, while internal ones handle communication between cluster components. Incorporating health checks ensures that unresponsive API servers are detected quickly, and traffic is rerouted to functioning nodes.
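To illustrate the health-check idea, the sketch below filters a list of API server endpoints down to those that answer the `/readyz` endpoint with HTTP 200. It is a simplified stand-in for what a load balancer does, not a replacement for one. The addresses are hypothetical, and the example run uses a stubbed check so it does not need a live cluster.

```python
import ssl
import urllib.request
from typing import Callable, Iterable, List, Optional


def readyz_ok(base_url: str, context: Optional[ssl.SSLContext] = None,
              timeout: float = 2.0) -> bool:
    """Return True if GET <base_url>/readyz answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/readyz", timeout=timeout,
                                    context=context) as resp:
            return resp.status == 200
    except OSError:
        # Covers connection errors, timeouts, TLS failures and non-2xx replies.
        return False


def healthy_backends(endpoints: Iterable[str],
                     check: Callable[[str], bool] = readyz_ok) -> List[str]:
    """Keep only the control plane endpoints that pass the health check."""
    return [ep for ep in endpoints if check(ep)]


# Hypothetical API server addresses, one per zone.
endpoints = [
    "https://10.0.1.10:6443",
    "https://10.0.2.10:6443",
    "https://10.0.3.10:6443",
]
down = {"https://10.0.2.10:6443"}  # simulate one unresponsive API server
print(healthy_backends(endpoints, check=lambda ep: ep not in down))
```

Against a real cluster you would pass an `ssl.SSLContext` that trusts the cluster CA; the stub is used here only to keep the example self-contained.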

For added resilience, consider a multi-master setup. This allows you to perform maintenance on one master node without disrupting the entire cluster. Run an odd number of etcd members (typically three or five) so that a majority can always be formed; this avoids split-brain scenarios, where the cluster becomes fragmented due to communication breakdowns.

Here’s a quick comparison of different setups:

| Setup Type | Fault Tolerance | Complexity | Best For |
|---|---|---|---|
| Single Master + Load Balancer | Limited to worker node failures | Low | Development environments |
| Multi-Master (Stacked) | Full control plane redundancy | Medium | Production clusters, cost-conscious setups |
| Multi-Master (External etcd) | Maximum fault isolation | High | Large-scale, mission-critical deployments |

Investing in a multi-master configuration pays off during critical moments. It ensures your CI/CD pipelines keep running even during control plane maintenance or unexpected failures, allowing development teams to deploy without interruptions.

For UK businesses aiming to fine-tune their Kubernetes resilience strategies, Hokstad Consulting offers tailored solutions. Their expertise in high-availability architectures ensures a balance between fault tolerance and cost efficiency, backed by deep experience in DevOps and cloud infrastructure.

Step 3: Automate Failover and Disaster Recovery

Automating failover and disaster recovery is essential for minimising downtime and ensuring your CI/CD pipelines remain unaffected by unexpected failures.

Health Checks and Pod Recovery

Kubernetes offers two powerful tools for automated pod recovery: liveness probes and readiness probes. These health checks work together to keep applications running smoothly by ensuring only healthy pods handle traffic.

- Liveness probes: Tell the kubelet to restart a container that has become unresponsive.

- Readiness probes: Remove a pod from Service endpoints until it is ready to handle traffic.

By configuring these probes, you can ensure your applications recover quickly from minor issues without manual intervention.
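The two probes could be configured along these lines. This is a sketch of a container spec as a Python dictionary; the `/healthz` and `/ready` paths, the port, and the timing values are assumptions that depend on what your application exposes.

```python
def http_probe(path: str, port: int, period: int, failures: int,
               initial_delay: int = 0) -> dict:
    """Build an HTTP GET probe definition for a container spec."""
    return {
        "httpGet": {"path": path, "port": port},
        "initialDelaySeconds": initial_delay,
        "periodSeconds": period,
        # Consecutive failures before Kubernetes acts on the probe.
        "failureThreshold": failures,
    }


container = {
    "name": "agent",
    "image": "registry.example.com/ci/build-agent:1.0",  # hypothetical image
    "ports": [{"containerPort": 8080}],
    # Restart the container after three failed checks, ten seconds apart.
    "livenessProbe": http_probe("/healthz", 8080, period=10, failures=3,
                                initial_delay=15),
    # Stop routing traffic to the pod as soon as two checks fail.
    "readinessProbe": http_probe("/ready", 8080, period=5, failures=2),
}

print(container["livenessProbe"])
print(container["readinessProbe"])
```

Keeping the liveness probe more tolerant than the readiness probe is a common choice: a briefly overloaded pod is taken out of rotation rather than restarted.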

Backup and Restore Strategies

Protecting your data is just as crucial. Tools like Velero streamline cluster-wide backups and enable fast recovery using volume snapshots. Velero doesn’t just back up data - it captures the entire application state, including configurations, secrets, and custom resources, making full restoration straightforward.

To maximise reliability:

- Schedule backups during periods of low activity.

- Regularly test restoration processes in a non-production environment to ensure everything works as expected.

Routine testing is key to maintaining confidence in your disaster recovery plans.
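The scheduled backups described above could be declared with a Velero `Schedule` resource. The sketch below builds one as a Python dictionary; the schedule name, the `ci` namespace, the 02:00 cron time, and the seven-day retention are assumptions to adapt, and the cron expression is evaluated in the Velero server's time zone (typically UTC).

```python
import json

velero_schedule = {
    "apiVersion": "velero.io/v1",
    "kind": "Schedule",
    "metadata": {"name": "ci-nightly", "namespace": "velero"},  # hypothetical name
    "spec": {
        # Standard cron syntax: every day at 02:00, a low-activity period.
        "schedule": "0 2 * * *",
        "template": {
            "includedNamespaces": ["ci"],  # hypothetical namespace to protect
            "snapshotVolumes": True,       # include persistent volume snapshots
            "ttl": "168h0m0s",             # keep each backup for seven days
        },
    },
}

print(json.dumps(velero_schedule, indent=2))
```

A backup is only proven once it has been restored, so pair a schedule like this with a regular restore into a non-production cluster.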

Disaster Recovery Drills and Rollbacks

Simulating failure scenarios is a proactive way to prepare for real-world issues. Conduct regular disaster recovery drills to evaluate your team’s readiness and the effectiveness of your recovery strategies. Incorporate Helm's rollback feature into your CI/CD workflows to quickly revert to a stable deployment in case of failure.
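As a sketch of how a pipeline step might invoke a rollback, the helper below assembles a `helm rollback` command line using flags from Helm 3. The release and namespace names are hypothetical, and the function only builds the argument list; running it is left to the pipeline.

```python
from typing import List, Optional


def helm_rollback_command(release: str, namespace: str,
                          revision: Optional[int] = None,
                          timeout: str = "5m") -> List[str]:
    """Build the argv for rolling a Helm release back.

    With no revision given, Helm rolls back to the previous release.
    """
    cmd = ["helm", "rollback", release]
    if revision is not None:
        cmd.append(str(revision))
    # --wait blocks until resources are ready or the timeout expires.
    cmd += ["--namespace", namespace, "--wait", "--timeout", timeout]
    return cmd


# Hypothetical release and namespace.
print(" ".join(helm_rollback_command("ci-tools", "ci")))
print(" ".join(helm_rollback_command("ci-tools", "ci", revision=7)))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` in a failure handler, so a failed deployment triggers the rollback automatically.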

When designing drills, consider scenarios like:

- Node failures

- Zone outages

- Data corruption events

Document recovery times, challenges faced, and improvements identified during these exercises. Post-drill reviews are invaluable for refining your disaster recovery approach.
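Recording drill outcomes in a structured form makes trends easier to spot. A minimal sketch, assuming each scenario has an agreed recovery time target; the scenarios, timestamps, and targets below are made-up illustration data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DrillResult:
    scenario: str
    failure_injected: datetime
    service_restored: datetime
    target: timedelta  # agreed recovery time objective for this scenario

    @property
    def recovery_time(self) -> timedelta:
        return self.service_restored - self.failure_injected

    @property
    def met_target(self) -> bool:
        return self.recovery_time <= self.target


# Illustrative data only.
drills = [
    DrillResult("node failure", datetime(2024, 3, 5, 10, 0),
                datetime(2024, 3, 5, 10, 4), timedelta(minutes=5)),
    DrillResult("zone outage", datetime(2024, 3, 5, 14, 0),
                datetime(2024, 3, 5, 14, 25), timedelta(minutes=15)),
]

for d in drills:
    status = "met" if d.met_target else "missed"
    print(f"{d.scenario}: recovered in {d.recovery_time}, target {status}")
```

Scenarios that miss their target are the natural agenda for the post-drill review.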

For businesses in the UK, partnering with experts like Hokstad Consulting can simplify the process. Hokstad Consulting specialises in creating resilient Kubernetes architectures tailored to UK regulations. Their expertise ensures automated failover processes are both efficient and compliant, offering a practical path to high availability without overspending.

Investing in automated disaster recovery solutions can dramatically reduce downtime. In fact, companies that embrace comprehensive DevOps practices, including disaster recovery automation, often see up to a 95% reduction in infrastructure-related outages [1]. By removing manual bottlenecks and limiting human error, these systems ensure smoother recovery when it matters most.

Step 4: Monitor and Observe Your CI/CD Pipelines

After setting up replication and failover strategies, the next step is keeping a close eye on your CI/CD pipelines. Monitoring isn’t just about reacting to problems - it’s about spotting potential issues early and preventing them from becoming major headaches.

Monitoring with Prometheus and Grafana

Prometheus plays a key role in gathering metrics from Kubernetes clusters and CI/CD tools. This open-source monitoring system collects data from sources like kubelet, API servers, and platforms such as Jenkins or ArgoCD.

To get started, deploy Prometheus and, if you run the Prometheus Operator, configure ServiceMonitors to track critical endpoints. Focus on gathering meaningful metrics, such as pod health, deployment success rates, build times, and resource usage trends. Prometheus stores timestamps in UTC, so for UK-based operations set dashboards and reports to the Europe/London time zone and label metrics with relevant business context so that the insights align with local working hours [2].
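A ServiceMonitor is a custom resource provided by the Prometheus Operator. The sketch below shows one for a Jenkins Service, written as a Python dictionary. The namespaces, the `app: jenkins` label, the `http` port name, the `release: prometheus` label, and the `/prometheus` metrics path are assumptions that depend on how Jenkins and the operator are installed.

```python
import json

service_monitor = {
    "apiVersion": "monitoring.coreos.com/v1",
    "kind": "ServiceMonitor",
    "metadata": {
        "name": "jenkins",
        "namespace": "monitoring",
        # Many operator installs only pick up monitors with a matching label.
        "labels": {"release": "prometheus"},
    },
    "spec": {
        # Look for the target Service in the CI namespace.
        "namespaceSelector": {"matchNames": ["ci"]},
        "selector": {"matchLabels": {"app": "jenkins"}},
        "endpoints": [
            # `port` refers to a named port on the Service.
            {"port": "http", "path": "/prometheus", "interval": "30s"}
        ],
    },
}

print(json.dumps(service_monitor, indent=2))
```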

Grafana complements Prometheus by transforming raw metrics into user-friendly dashboards. These dashboards provide a quick overview of cluster health, highlighting key indicators like node status, pod availability, and CI/CD job success rates. You can also customise dashboards to reflect business-specific needs, such as deployment patterns during UK business hours.

Together, Prometheus and Grafana help teams identify patterns like build failures, resource constraints, or latency spikes - allowing you to address issues before they escalate.

AI-Driven Anomaly Detection

While traditional monitoring works well, it can sometimes miss subtle warning signs. This is where AI-driven anomaly detection steps in, offering a smarter way to spot issues. By analysing historical data, machine learning models can detect unusual patterns that might otherwise go unnoticed.

For example, AI tools can monitor deployment times, resource usage, and failure rates in Kubernetes. If they detect trends like a gradual increase in build times or unexpected resource spikes, they can trigger automated alerts or responses before users feel the impact.

To implement this, you can use AI plugins for Prometheus or dedicated AIOps platforms that integrate with Kubernetes. These solutions are particularly effective at identifying anomalies like irregular build durations or resource consumption, helping predict and prevent deployment failures [2].
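To make the idea concrete without any particular product, here is a deliberately simple statistical baseline rather than a machine learning model: it flags a build whose duration sits more than a chosen number of standard deviations from recent history. The threshold and sample data are illustrative assumptions; dedicated tools use more sophisticated models.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], latest: float,
                 threshold: float = 3.0) -> bool:
    """Flag `latest` if its z-score against `history` exceeds `threshold`."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Recent build durations in seconds (illustrative data).
recent_builds = [300, 310, 295, 305, 290, 300]

print(is_anomalous(recent_builds, 308))  # within normal variation
print(is_anomalous(recent_builds, 420))  # far slower than usual
```

A z-score catches sudden jumps; gradual drift, such as build times creeping up over weeks, needs a trend-aware method on top.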

The real benefit? A faster mean time to resolution (MTTR). Instead of waiting for thresholds to be breached, teams can act on early alerts, resolving issues before they snowball.

Best Practices for Alerts and UK-Specific Metrics

Too many alerts can overwhelm teams, so it’s essential to configure them thoughtfully. For non-critical issues, schedule alerts to trigger during UK business hours (09:00–17:30 GMT/BST) and use local date/time formats to streamline responses. At the same time, ensure critical alerts are active 24/7 to safeguard system reliability. Prioritise alerts based on their business impact, not just their technical severity.
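The routing rule above can be sketched as a small function: critical alerts always notify, while everything else waits for weekday business hours. It assumes the timestamp passed in is already in UK local time (for example from `datetime.now(ZoneInfo("Europe/London"))`), and treating Monday to Friday as working days is an assumption the text does not spell out.

```python
from datetime import datetime, time

BUSINESS_START = time(9, 0)
BUSINESS_END = time(17, 30)


def should_notify(severity: str, local_now: datetime) -> bool:
    """Decide whether to send an alert now, given UK local time."""
    if severity == "critical":
        return True  # critical alerts page around the clock
    is_weekday = local_now.weekday() < 5  # Monday=0 ... Friday=4
    in_hours = BUSINESS_START <= local_now.time() <= BUSINESS_END
    return is_weekday and in_hours


tuesday_morning = datetime(2024, 3, 5, 10, 0)
tuesday_night = datetime(2024, 3, 5, 22, 0)

print(should_notify("warning", tuesday_morning))
print(should_notify("warning", tuesday_night))
print(should_notify("critical", tuesday_night))
```

In production the same policy is usually expressed in the alerting tool's own routing configuration rather than in application code.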

For UK-specific operations, monitoring certain metrics is especially important. Keep an eye on transaction volumes in £, measure latency and uptime during peak trading hours, and maintain audit logs that comply with local data protection laws and industry standards [2].

Additionally, consider unique workload patterns. For instance, e-commerce platforms might see spikes in traffic during UK bank holidays, which differ from typical European schedules. These insights play a key role in ensuring your CI/CD pipelines remain robust and responsive.

For organisations that want to simplify monitoring without building in-house expertise, Hokstad Consulting offers tailored solutions. They specialise in integrating Prometheus, Grafana, and AI-driven anomaly detection, helping businesses cut cloud costs, boost deployment cycles, and meet UK-specific operational needs.

By adopting these monitoring strategies, you strengthen the reliability of your Kubernetes CI/CD pipeline. Automation can take this a step further by linking monitoring systems to incident response tools like PagerDuty or Opsgenie. When critical alerts are triggered, automated processes can kick off failover actions, rollbacks, or resource scaling - ensuring that monitoring leads directly to swift, protective measures.

This monitoring framework lays the groundwork for the load balancing and multi-region strategies covered in Step 5.

Step 5: Configure Load Balancing and Multi-Region Deployments

Step 5 focuses on automating traffic distribution and ensuring your CI/CD pipelines remain operational, even during regional outages.

Global and Local Load Balancers

In Kubernetes environments, load balancing operates on two levels. Global load balancers handle traffic distribution across regions, directing it to the nearest or healthiest endpoint. They also automatically reroute traffic during regional outages. Local load balancers, on the other hand, manage traffic within individual clusters.

For UK and European operations, global load balancers like Cloud Load Balancing (GCP), AWS Global Accelerator, or Azure Front Door are excellent options. These services rely on health checks, proximity-based routing, and failover mechanisms to efficiently manage traffic. Within Kubernetes, you can use an Ingress controller (such as NGINX or Traefik) to expose services and integrate them with DNS-based or IP-based global load balancers.

Within each cluster, use ClusterIP Services for traffic between components, Services of type LoadBalancer for external access, and Ingress controllers to handle HTTP/HTTPS routing. This layered approach ensures a robust traffic management system.
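A sketch of the in-cluster layer: an internal Service in front of the pods and an Ingress rule that routes a hostname to it. It assumes an NGINX ingress controller registered under the class name `nginx`; the hostname, Service name, and port are hypothetical.

```python
import json

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "jenkins", "namespace": "ci"},
    "spec": {
        "type": "ClusterIP",  # reachable inside the cluster only
        "selector": {"app": "jenkins"},
        "ports": [{"name": "http", "port": 8080, "targetPort": 8080}],
    },
}

ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "jenkins", "namespace": "ci"},
    "spec": {
        "ingressClassName": "nginx",  # assumes an NGINX ingress controller
        "rules": [
            {
                "host": "ci.example.co.uk",  # hypothetical hostname
                "http": {
                    "paths": [
                        {
                            "path": "/",
                            "pathType": "Prefix",
                            "backend": {
                                "service": {
                                    "name": service["metadata"]["name"],
                                    "port": {"number": 8080},
                                }
                            },
                        }
                    ]
                },
            }
        ],
    },
}

print(json.dumps([service, ingress], indent=2))
```

The ingress controller itself is what typically sits behind a Service of type LoadBalancer, giving a single external entry point for many internal Services.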

Multi-Region Deployment Strategies

Building on effective load balancing, multi-region deployments add another layer of resilience by running Kubernetes clusters in multiple regions. These clusters are kept in sync through GitOps workflows or CI/CD pipelines. Users are routed to the nearest healthy region using DNS-based failover mechanisms. To enhance cross-region communication, service mesh solutions like Istio or Linkerd can be used. These tools enable secure communication, traffic splitting, and failover policies.

For instance, deploying clusters in London and Frankfurt with DNS failover ensures service continuity during regional outages. However, multi-region setups come with challenges like data consistency, configuration drift, and increased complexity. These can be addressed by:

- Using declarative infrastructure tools like Terraform.

- Adopting centralised GitOps workflows to synchronise deployments.

- Employing replicated databases with strong consistency models to minimise data conflicts.
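The DNS failover decision for the London and Frankfurt example reduces to "send users to the first healthy region in preference order". A minimal sketch of that logic follows; the region names and health values are illustrative, and a managed DNS or global load balancing service would normally make this decision for you.

```python
from typing import Dict, Optional, Sequence


def pick_region(preferred_order: Sequence[str],
                healthy: Dict[str, bool]) -> Optional[str]:
    """Return the first healthy region, or None if every region is down."""
    for region in preferred_order:
        # Regions with no health report are treated as unhealthy.
        if healthy.get(region, False):
            return region
    return None


order = ["london", "frankfurt"]

print(pick_region(order, {"london": True, "frankfurt": True}))
print(pick_region(order, {"london": False, "frankfurt": True}))
print(pick_region(order, {"london": False, "frankfurt": False}))
```

Treating an unreported region as unhealthy is a conservative choice: traffic is never sent somewhere whose state is unknown.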

Single-Region vs. Multi-Region Setup Comparison

When deciding between single-region and multi-region deployments, it’s essential to weigh the costs, fault tolerance, and performance implications. Here’s a quick comparison:

| Deployment Type | Cost (GBP, monthly) | Fault Tolerance | Performance for UK Users |
|---|---|---|---|
| Single-Region | £500–£1,000 | Low (regional outage can cause downtime) | Low latency if services are hosted within the UK |
| Multi-Region (2 EU) | £1,200–£2,500 | High (automatic failover to another region) | Slightly higher latency, better global reach |
| Multi-Region (Global) | £2,500+ | Very high (multiple failover options available) | Ideal for global users, but UK latency may increase |

Multi-region setups can significantly reduce downtime - by as much as 80% compared to single-region deployments. Additionally, global load balancers improve latency for international users by 30–50% [2].

For UK businesses, compliance with UK GDPR is critical. It restricts transfers of personal data outside the UK unless appropriate safeguards are in place, so many organisations keep sensitive data in UK or EU regions. Watch out for cross-region data transfer fees, as these can add up. For example, AWS charges roughly £0.01–£0.02 per GB for inter-region transfers, and global load balancers typically cost between £15 and £30 per month for each managed instance.
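Using the per-GB and per-load-balancer figures quoted above, a rough range for the extra monthly overhead can be worked out as follows. The 2,000 GB transfer volume is a made-up example, and real pricing varies by provider and region.

```python
from typing import Tuple


def monthly_overhead_gbp(transfer_gb: float,
                         load_balancers: int = 1,
                         per_gb: Tuple[float, float] = (0.01, 0.02),
                         lb_fee: Tuple[float, float] = (15.0, 30.0)
                         ) -> Tuple[float, float]:
    """Return (low, high) estimates of transfer plus load balancer cost."""
    low = transfer_gb * per_gb[0] + load_balancers * lb_fee[0]
    high = transfer_gb * per_gb[1] + load_balancers * lb_fee[1]
    return (round(low, 2), round(high, 2))


# Example: 2,000 GB replicated between regions, one global load balancer.
print(monthly_overhead_gbp(2000))  # (35.0, 70.0)
```

These amounts are small next to the cost of running a second cluster, but transfer fees scale with data volume, so they are worth estimating before replicating large artifact stores.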

If you're transitioning from a single-region to a multi-region setup, start by auditing your current infrastructure to identify critical workloads. Next, deploy a second Kubernetes cluster in a geographically distinct region, for example Dublin alongside an existing London cluster. Set up global DNS routing and a service mesh for cross-region communication. Update your CI/CD pipelines to support multi-cluster deployments, establish centralised monitoring tools, and regularly test failover processes to ensure disaster recovery readiness.

For organisations in the UK, Hokstad Consulting offers expert services to simplify multi-region deployments. Their tailored solutions help reduce operational costs while improving deployment efficiency and resilience.

Conclusion

Creating a resilient CI/CD pipeline is achievable with the right strategies in place. For UK enterprises, ensuring high availability in Kubernetes CI/CD pipelines is not just a technical goal - it’s a necessity in today’s competitive market. By following a clear plan, you can build a system that keeps your applications running smoothly, no matter the circumstances.

Key Takeaways

Achieving high availability requires a combination of key practices: replication, control plane resilience, automated failover, proactive monitoring, and load balancing.

- Replication and redundancy: Running multiple instances across availability zones eliminates single points of failure, forming the backbone of a resilient system.

- Control plane and node resilience: Strengthen your cluster’s core infrastructure with distributed resources and multi-master setups, ensuring stability.

- Automated failover and disaster recovery: Regular backups using tools like Velero and Kubernetes Volume Snapshots ensure rapid recovery when incidents occur.

- Monitoring and observation: AI-driven anomaly detection can identify and address issues before they impact users.

- Load balancing and multi-region deployments: Distributing workloads across regions improves fault tolerance and performance, making your system more robust globally.

For UK businesses, adopting multi-region deployments not only reduces downtime but also ensures faster recovery during disruptions. These measures directly translate into increased customer trust, minimised revenue loss, and a stronger reputation for reliability.

Next Steps for UK Enterprises

Start by evaluating your current infrastructure. Are you running multiple replicas of critical services? If not, this is a simple yet effective starting point to achieve redundancy. Audit your cluster components to ensure they are up to date - this is essential for maintaining security, stability, and performance.

Next, review your disaster recovery plan. Confirm that regular backups are in place and schedule routine recovery drills to test your readiness. Examine your monitoring tools to ensure they provide sufficient observability, ideally with AI-driven anomaly detection to pre-empt issues. Finally, assess whether your deployment strategy includes multi-zone or multi-region distribution to eliminate single points of failure.

Many UK businesses focus heavily on application redundancy but may overlook the importance of control plane resilience and proper resource distribution. If in-house expertise is lacking, consider working with specialists to speed up the assessment and implementation process.

For example, Hokstad Consulting has helped organisations significantly reduce downtime and achieve cost savings [1].

Investing in high availability isn’t just about avoiding outages - it’s about ensuring faster deployments, improving customer satisfaction, and staying ahead in a competitive market. Don’t wait for vulnerabilities to surface. Take proactive steps now to build a resilient infrastructure that your business can rely on.

FAQs

What is the difference between ReplicaSets and StatefulSets in ensuring high availability for Kubernetes CI/CD pipelines?

ReplicaSets and StatefulSets are two Kubernetes resources designed to manage pods, but they cater to different needs, especially when it comes to ensuring high availability.

ReplicaSets are perfect for managing stateless pods. Their primary role is to maintain a specified number of identical pods, ensuring that if one fails, it gets replaced without disrupting the system. This makes them a great choice for workloads like web servers or other stateless applications, where each pod operates independently.

StatefulSets, however, are tailored for stateful applications that require unique identities and stable storage. They manage pods in a specific order - whether that's during creation, deletion, or replacement. This makes them ideal for use cases like databases or applications that need persistent storage and consistent network identities.

When integrating these into a Kubernetes CI/CD pipeline, the choice between ReplicaSets and StatefulSets comes down to the workload. Stateless tasks, such as build runners, are well-suited for ReplicaSets. Meanwhile, stateful components, like databases or caching systems, will benefit from the structured and stable approach provided by StatefulSets, ensuring both high availability and data consistency.

What should I consider when implementing a multi-region deployment strategy to improve fault tolerance in Kubernetes CI/CD?

To build fault tolerance into a Kubernetes CI/CD pipeline using a multi-region deployment approach, focus on three key areas: redundancy, failover mechanisms, and data consistency. Start by replicating critical components across multiple regions to eliminate single points of failure. This ensures that if one region experiences issues, your system remains operational.

Automated failover processes are equally crucial. These processes should seamlessly redirect traffic to other regions during outages, minimising disruptions.

Another important consideration is managing latency and data synchronisation between regions. Multi-cluster management platforms, such as Kubernetes Federation, can help keep configuration consistent and workloads evenly distributed across regions, while replicated databases handle data consistency. Finally, make it a priority to regularly test your failover and recovery systems. Simulating real-world scenarios will help confirm their reliability and effectiveness in maintaining smooth operations.

How can AI-driven anomaly detection enhance monitoring in Kubernetes CI/CD pipelines?

AI-powered anomaly detection can transform the way Kubernetes CI/CD pipelines are monitored by spotting unusual patterns or behaviours as they happen. This real-time capability allows teams to address potential failures or inefficiencies before they disrupt the pipeline's performance.

By examining metrics like resource usage, deployment durations, and error rates, AI delivers early alerts and practical insights. This means teams can react swiftly, minimising downtime and ensuring deployments run more smoothly and reliably.