API gateways and service meshes are tools for managing traffic in modern cloud systems, but they serve different purposes:

- API Gateways handle external (north-south) traffic, like requests from users or external apps to backend services. They focus on tasks like authentication, rate limiting, and routing.

- Service Meshes manage internal (east-west) traffic between microservices. They ensure secure and reliable communication, often using sidecar proxies deployed alongside each service.

::: @figure  {API Gateway vs Service Mesh Cost Comparison: Infrastructure, Scaling, and Management}

:::


API Gateways vs Service Mesh: Which One Do You Need?

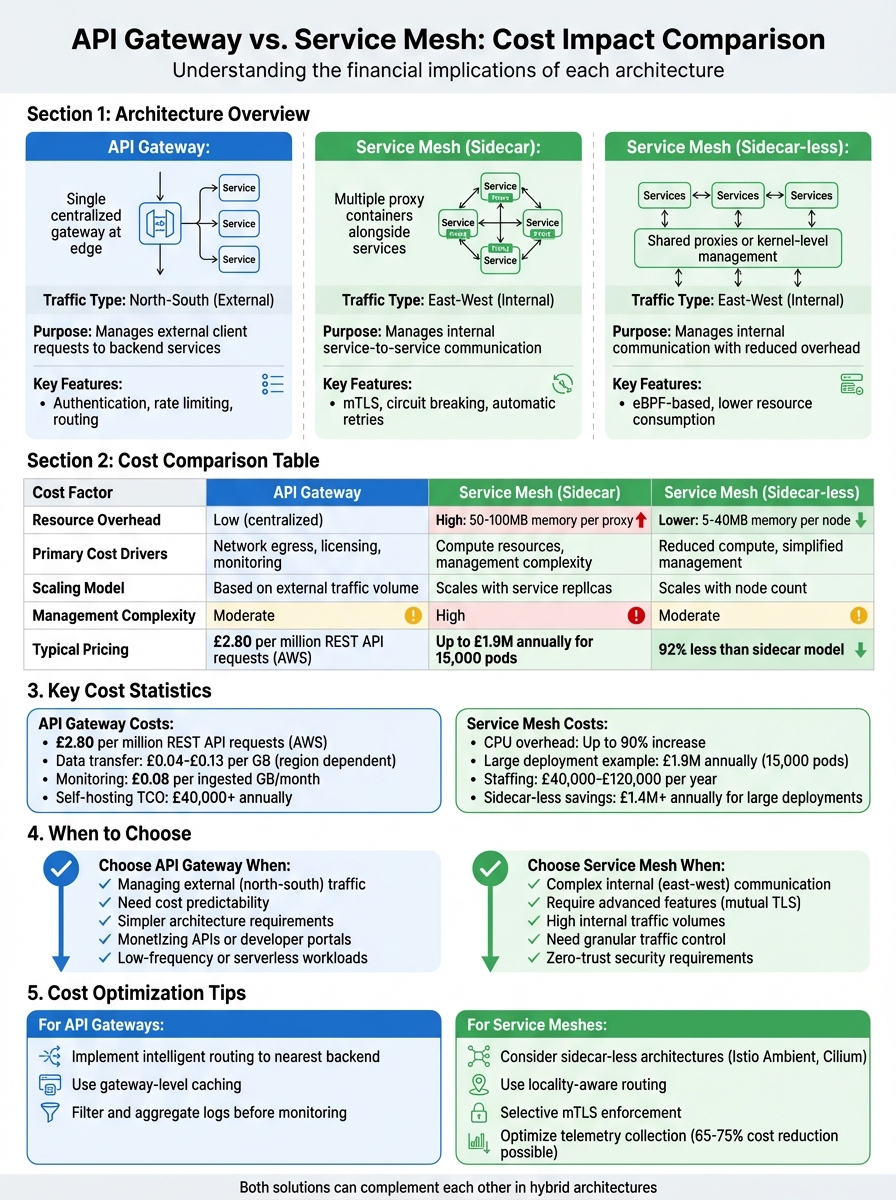

Key Cost Factors

Both technologies come with unique cost considerations:

- API Gateways: Costs are driven by network egress fees, licensing, and monitoring. For instance, AWS API Gateway charges around £2.80 per million REST API requests, and data transfer fees vary by region (e.g., £0.04–£0.13 per GB).

- Service Meshes: Resource overhead is significant due to sidecar proxies, potentially increasing CPU usage by up to 90%. Large deployments (e.g., 15,000 pods) can cost £1.9 million annually in compute resources.

Quick Comparison

| Feature | API Gateway | Service Mesh (Sidecar) | Service Mesh (Sidecar-less) |
|---|---|---|---|
| Primary Use | External (north-south) traffic | Internal (east-west) traffic | Internal (east-west) traffic |
| Resource Overhead | Low (centralised) | High (50–100MB memory per proxy) | Lower (5–40MB memory per node) |
| Cost Drivers | Network egress, licensing, monitoring | Compute resources, management complexity | Reduced compute, simplified management |
| Scaling Costs | Based on external traffic | Scales with service replicas | Scales with node count |
| Management Complexity | Moderate | High | Moderate |

Takeaway

- Choose API gateways for managing external traffic, especially in simpler setups or when cost predictability is important.

- Opt for service meshes when internal communication between microservices is complex or requires advanced features like mutual TLS.

- Consider sidecar-less service mesh options to reduce resource usage and costs in large deployments.

Both solutions can complement each other, with API gateways handling external traffic and service meshes focusing on internal communication.

How Service Mesh and API Gateway Differ

What is a Service Mesh?

A service mesh is designed to manage communication between microservices within your system. It uses a decentralised sidecar pattern, where a lightweight proxy runs alongside each service instance. This proxy helps coordinate communication at both Layer 4 (TCP) and Layer 7 (HTTP/gRPC), addressing network-level tasks like mutual TLS encryption, automatic retries, and circuit breaking. However, implementing a service mesh can be more involved compared to an API gateway. It requires deploying a data plane proxy next to each service instance and updating CI/CD pipelines to inject these sidecars.
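To make the circuit-breaking behaviour concrete, here is a minimal Python sketch of the kind of logic a mesh proxy applies on a service's behalf. The class name, thresholds, and explicit `now` argument are illustrative assumptions, not the API of Envoy or any particular mesh, where this behaviour is normally configured declaratively rather than coded by hand.

```python
from typing import Optional


class CircuitBreaker:
    """Count-based breaker: opens after N consecutive failures, retries after a cool-down."""

    def __init__(self, failure_threshold: int, reset_after_sec: float) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after_sec = reset_after_sec
        self.consecutive_failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def allow_request(self, now: float) -> bool:
        if self.opened_at is None:
            return True
        # Once the cool-down has elapsed, let trial requests through (half-open).
        return now - self.opened_at >= self.reset_after_sec

    def record_success(self) -> None:
        # A success closes the circuit and clears the failure count.
        self.consecutive_failures = 0
        self.opened_at = None

    def record_failure(self, now: float) -> None:
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.opened_at = now  # open (or re-open) the circuit
```

A caller would check `allow_request` before each upstream call and report the outcome afterwards; passing the clock in explicitly keeps the sketch easy to test.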

What is an API Gateway?

An API gateway acts as the front door to your system, managing traffic from external clients to your internal services. It follows a centralised architecture, typically operating as a single entry point. The gateway handles tasks like authentication, rate limiting, protocol translation, and routing. Operating at Layer 7, it also manages policies such as monetisation, developer portals, and API documentation. Since the gateway interacts with external clients - where you have no control over their configurations - it must support various formats, CORS, and redirects.
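Rate limiting is one of the gateway tasks that is easy to picture in code. The following is a minimal token-bucket sketch in Python; the class and parameter names are hypothetical, and a production gateway would typically keep one bucket per API key or client and share state across gateway instances.

```python
class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int, now: float = 0.0) -> None:
        self.rate_per_sec = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)  # start with a full bucket
        self.updated_at = now

    def allow(self, now: float) -> bool:
        # Refill according to the time elapsed since the last check.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(float(self.capacity), self.tokens + elapsed * self.rate_per_sec)
        self.updated_at = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0  # spend one token for this request
            return True
        return False  # the caller would typically respond with HTTP 429
```

In real use, `now` would come from a monotonic clock such as `time.monotonic()`; it is passed in here so the behaviour is deterministic.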

Main Differences in How They Work

The key difference between the two lies in how they handle traffic. API gateways focus on North-South traffic, which refers to communication between external clients and internal services. On the other hand, service meshes are designed for East-West traffic, managing communication between internal services. However, Marco Palladino, CTO and Co-Founder of Kong, offers a more nuanced view:

API gateways are for north-south traffic and service meshes are for east-west traffic. This is not accurate, and if anything, it underlines a fundamental misunderstanding of both patterns.[3]

Deployment models also set them apart. API gateways operate in a separate architectural layer, making them non-invasive, while service meshes rely on sidecar proxies injected into every service instance. In multi-cloud environments, the distinction becomes even more apparent. For instance, if two clouds cannot share a Certificate Authority, they cannot form a unified service mesh. In such cases, an API gateway is needed to bridge these isolated environments.

Interestingly, both technologies often share the same foundational technology, such as the Envoy Proxy. However, their control planes differ, tailored to their specific traffic management roles. As the Ambassador Team explains:

The edge use case is sufficiently different that API Gateways and service meshes will both be needed.[2]

API Gateway Cost Breakdown

Infrastructure Costs

When it comes to API gateways, the bulk of expenses typically stems from network egress fees, rather than computing power. Cloud providers charge for data leaving their systems, and these fees can vary significantly depending on the region. For example, transferring data in South America costs around £0.13 per GB, whereas in North America, it’s just £0.04 per GB [4].

Things get even more complicated in multi-cloud environments. If your gateway routes traffic across multiple cloud platforms, you’ll face multiple charges at each stage: Transit Gateway processing fees (around £0.016 per GB), inter-region transfer fees, and finally, the egress fee to the destination cloud [4]. This means a single API request could trigger three or four separate charges before it reaches its endpoint.

AWS adds another layer of cost with its bi-directional billing for cross-AZ transfers. As Kaiwalya Koparkar, Platform Advocate at Gravitee, explains:

A single gigabyte (GB) transferred between two instances in different AZ will be accounted as 2 GB on the bill. This doubles the cost and increases the inflation factor by 100%[4].
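The stacked charges and the cross-AZ doubling described above are simple to model. The sketch below is a rough estimate under stated assumptions: the Transit Gateway and egress rates are the figures quoted in this section, while the inter-region rate is a placeholder chosen purely for illustration.

```python
from typing import Dict


def stacked_transfer_cost(gb: float, per_gb_charges: Dict[str, float]) -> float:
    """Total cost when every charge on the path applies to the same payload."""
    return gb * sum(per_gb_charges.values())


def cross_az_billed_gb(gb_transferred: float) -> float:
    """Cross-AZ traffic is metered in both directions, so 1 GB is billed as 2 GB."""
    return 2 * gb_transferred


path_charges = {
    "transit_gateway": 0.016,  # GBP per GB, figure quoted above
    "inter_region": 0.017,     # ASSUMPTION: placeholder rate for illustration
    "egress": 0.04,            # GBP per GB, North America figure quoted above
}

# 1,000 GB pushed through all three stages comes to roughly GBP 73.
monthly_cost = stacked_transfer_cost(1_000, path_charges)
```

Swapping in the actual rates from your providers' price lists shows how quickly per-GB charges compound once a request crosses several billing boundaries.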

Monitoring and observability can also rack up costs. Keeping tabs on distributed API traffic generates large volumes of logs, and platforms like Datadog charge roughly £0.08 per ingested GB per month [4]. For high-traffic deployments, these monitoring fees can grow to rival the cost of the API gateway itself.

Licensing Fees and Commercial Models

Network charges are just one piece of the puzzle. Licensing models and operational costs also play a big role in API gateway expenses. Providers typically use one of two pricing models: pay-as-you-go or tiered subscriptions.

Pay-as-you-go models, like those offered by AWS and Google Cloud, charge based on usage. AWS, for instance, bills £2.80 per million REST API requests, dropping to £1.21 per million at higher volumes. Google Cloud provides the first 2 million calls for free and then charges £2.40 per million thereafter [8][9][10]. While these models are affordable for smaller projects, they can become costly as usage scales unless carefully managed.
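As a rough illustration of how usage-based pricing adds up, the sketch below computes a monthly bill from a list of price bands. The function name and band structure are assumptions; the rates are the ones quoted above. The volume at which AWS's lower rate applies is not stated here, so the AWS example uses the flat headline rate only.

```python
from typing import List, Optional, Tuple

# Each band is (upper bound in requests, or None for "no limit", price per million).
Band = Tuple[Optional[int], float]


def monthly_request_cost(requests: int, bands: List[Band]) -> float:
    cost = 0.0
    lower = 0
    for upper, price_per_million in bands:
        if requests <= lower:
            break  # no requests fall into this band
        band_end = requests if upper is None else min(requests, upper)
        cost += (band_end - lower) / 1_000_000 * price_per_million
        if upper is None:
            break
        lower = upper
    return cost


google_style = [(2_000_000, 0.0), (None, 2.40)]  # first 2M free, then GBP 2.40 per million
aws_flat = [(None, 2.80)]                        # headline REST rate, no volume discount modelled

google_bill = monthly_request_cost(10_000_000, google_style)  # 8M billable requests
aws_bill = monthly_request_cost(10_000_000, aws_flat)
```

Adding a further band with the discounted rate is a one-line change once you know the threshold that applies to your account.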

On the other hand, subscription models, like those from Azure and Apigee, involve higher fixed monthly fees. Azure’s Premium v2 tier, which is necessary for features like self-hosted gateways in hybrid or multi-cloud setups, starts at £2,240 per month, with an additional £200 for each extra self-hosted gateway unit [4]. Apigee’s hybrid environments can cost up to £2,745 per month, plus hourly proxy charges [4].

Enterprise tiers often bundle critical features - like private network integrations or self-hosted gateways - into their most expensive packages. This means you may need to pay for a premium subscription, even if your traffic volume doesn’t justify it [4].

If you’re considering self-hosting an open-source gateway, it might appear economical at first glance. However, the total cost of ownership often tells a different story. Between infrastructure, DevOps labour for patching and upgrades, and ongoing maintenance, costs can exceed £40,000 annually [7]. By contrast, managed SaaS solutions like Gravitee start at around £2,000 per month (£24,000 annually) and include maintenance and automatic scaling [7].

Scaling and Operations Costs

Once you’ve accounted for infrastructure and licensing, it’s time to factor in the dynamic costs of scaling and running your gateway. High-traffic environments introduce additional challenges, especially in multi-cloud setups where requests cross regional boundaries. This can significantly increase data transfer fees [4].

Staffing also contributes to operational costs, with salaries for relevant roles ranging from £40,000 to £120,000 per year [4].

To manage these costs, intelligent routing can help. By directing traffic to the nearest backend service instance, gateways can reduce inter-region and cross-continental data transfer fees [4]. Advanced caching at the gateway level also cuts down on redundant backend calls, lowering data egress expenses [4]. Additionally, filtering and aggregating logs before sending them to monitoring platforms can reduce the volume of billed data ingestion [4].
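The log filtering and aggregation step can be sketched in a few lines. This is a simplified illustration: the record shape (`path`, `status`) and the `/healthz` path are hypothetical, and real gateways usually expose this through logging plugins or pipeline configuration rather than custom code.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List, Tuple

LogRecord = Dict[str, Any]


def reduce_access_logs(
    records: Iterable[LogRecord],
    noisy_paths: Tuple[str, ...] = ("/healthz",),
) -> Tuple[List[LogRecord], List[LogRecord]]:
    """Drop noisy records, keep server errors in full, and count everything else."""
    kept_errors: List[LogRecord] = []
    counts: Counter = Counter()
    for record in records:
        if record["path"] in noisy_paths:
            continue  # health checks are dropped entirely
        if record["status"] >= 500:
            kept_errors.append(record)  # keep full detail for server errors
        else:
            counts[(record["path"], record["status"])] += 1
    summaries = [
        {"path": path, "status": status, "count": n}
        for (path, status), n in sorted(counts.items())
    ]
    return kept_errors, summaries
```

Only the error records and the compact summaries would then be shipped to the monitoring platform, so the billed ingestion volume grows with the number of distinct routes rather than with raw request count.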

In October 2025, INVERS, a German shared mobility company, restructured its API platform by adopting Gravitee’s event-native API gateway. By eliminating the need for heavy infrastructure management and manual scaling, they reduced their overall costs while gaining better control and visibility over their API operations [7].

Service Mesh Cost Breakdown

Compute and Resource Overhead

Service meshes typically rely on a sidecar architecture, where a proxy container (often Envoy) runs alongside every service instance in your cluster. While this design enhances functionality, it effectively doubles the number of containers, driving up cloud costs. In fact, service meshes can demand up to 90% more CPU cores due to the combined load of sidecar proxies and the control plane [5].

For large-scale deployments, this overhead can lead to multi-million-pound compute expenses [4]. Each sidecar proxy consumes between 50–100MB of memory and increases CPU usage depending on traffic patterns [5].

To mitigate these costs, some organisations are exploring sidecar-less architectures. For example, Istio's Ambient Mesh model uses shared proxies, while Cilium leverages eBPF technology at the kernel level. These approaches can reduce resource consumption to as little as 5–15MB per node [5]. For large deployments, adopting such architectures could save over £1.4 million annually [4]. However, these savings must also be weighed against the operational complexities discussed below.
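To see why the scaling unit matters, the sketch below compares proxy memory for a per-pod model against a per-node model using the per-proxy and per-node ranges quoted above. The 15,000-pod figure matches the large deployment discussed in this article; the 500-node cluster size is an assumption made purely for illustration.

```python
from typing import Tuple


def proxy_memory_mb(units: int, mb_low: int, mb_high: int) -> Tuple[int, int]:
    """Return the (low, high) total proxy memory in MB for `units` pods or nodes."""
    return units * mb_low, units * mb_high


# Sidecar model: one proxy per pod at 50-100MB each.
sidecar_total = proxy_memory_mb(15_000, 50, 100)

# Sidecar-less model: overhead per node at 5-15MB each.
# ASSUMPTION: the same workload runs on 500 nodes.
per_node_total = proxy_memory_mb(500, 5, 15)
```

Under these assumptions the sidecar fleet needs between 750,000MB and 1,500,000MB of memory for proxies alone, against a few thousand MB for the per-node model.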

Management Complexity Costs

The operational side of managing a service mesh introduces its own set of expenses. Service meshes increase management complexity, which often translates to higher staffing costs. Organisations typically require dedicated platform engineers to oversee control planes, handle mutual TLS (mTLS) certificate rotations, coordinate version upgrades for sidecars, and troubleshoot intricate YAML configurations [5][6].

These operational demands can cost teams between £40,000 and £120,000 annually [4]. And that figure doesn’t account for the hidden costs of downtime or misconfigurations, which can be particularly difficult to diagnose due to the layered nature of proxy infrastructure.

Observability is another area where costs can escalate. Each sidecar proxy generates significant telemetry and log data, which must be stored and analysed. Managed observability tools like Datadog charge around £0.08 per ingested GB per month [4]. For organisations monitoring thousands of proxies, these fees can add up quickly, further straining budgets.

Open Source vs. Paid Solutions

Given the resource and management challenges, deciding between open-source and managed service mesh solutions becomes a key financial consideration. Open-source options like Istio and Linkerd may appeal with their £0 licence fees, but this initial saving often hides substantial long-term costs. Self-hosting a service mesh can exceed £40,000 per year once infrastructure, DevOps labour, and ongoing maintenance are factored in [7].

As one analysis highlights:

Over three years, Istio's and Envoy's £0 licences transform into six-figure operational costs. - Zuplo [6]

Managed solutions, on the other hand, generally start at around £2,000 per month (roughly £24,000 annually). These services include automatic scaling, security updates, and expert support [7]. While the upfront cost is higher, the total cost of ownership can be lower, as managed platforms often eliminate the need for dedicated engineering resources. They also claim to cut observability and governance expenses by 65–75% through optimised telemetry collection [5].

Ultimately, the choice between open-source and managed solutions depends on your organisation’s size and expertise. Smaller teams or those without dedicated platform engineers may find managed solutions more cost-effective. Larger enterprises with well-established DevOps capabilities might lean towards self-hosted options, provided they’ve accounted for the operational overhead in their budgets.

Cost Comparison Table

The table below summarises the main cost factors for API gateways and service meshes, highlighting their distinct roles in handling traffic. While API gateways focus on managing north–south traffic (external client requests), service meshes are designed for east–west traffic (internal service-to-service communication). These differences are reflected in their cost structures.

| Cost Component | API Gateway | Service Mesh (Sidecar) | Service Mesh (Ambient/eBPF) |
|---|---|---|---|
| Infrastructure Model | Centralised or edge deployment; scales with external traffic | A proxy runs alongside each pod; scales linearly with the number of microservices | Shared proxies or kernel-level; scales with node count |
| Resource Overhead | Low (concentrated at entry points) | High (50–100MB memory per proxy) | Low to medium (approximately 5–40MB per node) |
| Licensing Costs | Usage-based (e.g. around £2.60 per million calls) or tiered subscriptions (£2,000–£2,600 per month) | Free in open-source form but may lead to high operational costs | Similar to the sidecar model; managed options start from around £2,000 per month |
| Scaling Expenses | Increases with request volume and gateway instances | Costs rise with each additional service replica; large deployments can reach nearly £1.8 million annually | Much lower scaling costs - up to 92% less than sidecar deployments |
| Management Complexity | Moderate; centralised policies and edge security simplify management | High; requires significant platform engineering effort | Moderate; benefits from simplified lifecycle management |
| Multi-Cloud Premium | Premium tiers required - for example, Azure API Management Premium v2 costs around £2,600 per month plus per-unit charges | High compute overhead across clusters increases costs | Lower resource footprint overall, though cross-cloud expertise remains critical |
| Observability Costs | Moderate; edge logging reduces data volumes ingested | High; telemetry from many proxies raises monitoring expenses | Lower, due to consolidated telemetry management |

The differences in cost become more pronounced at scale. Gravitee provides a striking example:

A large-scale deployment of 15,000 pods, for instance, could incur nearly £2.4 million per year in cloud compute resources... simply for running the proxy sidecars[4].

API gateways are more predictable in terms of costs since they concentrate resources at fewer points, making them ideal for managing external-facing traffic. On the other hand, service meshes, particularly those using sidecar proxies, can see costs skyrocket due to their per-pod resource requirements. These factors play a crucial role in shaping multi-cloud strategies, which are covered later in this article.

Performance and Latency Cost Effects

Latency differences largely stem from the way these systems are architected. An API gateway typically introduces a single centralised network hop at the edge, while a service mesh using the sidecar pattern adds two extra hops for every internal service call - one through the source sidecar and another through the destination sidecar[3].
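The hop arithmetic above can be written down directly. This small sketch counts the extra proxy hops a single external request picks up; the function name and the idea of counting a request's internal calls are illustrative assumptions.

```python
def added_proxy_hops(internal_calls: int, via_gateway: bool = True, sidecar_mesh: bool = True) -> int:
    """Extra proxy hops for one external request that triggers `internal_calls` service calls."""
    hops = 1 if via_gateway else 0  # one centralised hop at the edge
    if sidecar_mesh:
        hops += 2 * internal_calls  # source sidecar plus destination sidecar per call
    return hops
```

For example, a request that enters through the gateway and fans out into four internal calls passes through nine extra proxy hops with a sidecar mesh, compared with one when only the gateway is in the path.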

Enforcing mutual TLS (mTLS) for zero-trust security also brings cryptographic overhead, increasing CPU usage due to constant encryption and decryption. For instance, in high-throughput scenarios, a sidecar proxy in Istio 1.22 can consume about 0.5 vCPU for every 1,000 requests per second[12]. When scaled across thousands of pods, these resource demands can become a significant cost factor. If proxies are under-provisioned, they may create bottlenecks during peak traffic periods. This architectural reality is an important consideration, as highlighted by industry professionals.
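A back-of-the-envelope estimate of sidecar CPU demand follows from the 0.5 vCPU per 1,000 requests per second figure cited above. The sketch assumes CPU scales linearly with request rate, which is a simplification; the pod count and per-pod traffic in the example are made up for illustration.

```python
def sidecar_vcpu(requests_per_second: float, vcpu_per_1000_rps: float = 0.5) -> float:
    """Estimated vCPU used by one sidecar at the given request rate."""
    return requests_per_second / 1000 * vcpu_per_1000_rps


def fleet_vcpu(pods: int, rps_per_pod: float) -> float:
    """Estimated vCPU used by sidecars across the whole fleet."""
    return pods * sidecar_vcpu(rps_per_pod)


# ASSUMPTION: 2,000 pods each handling 200 requests per second.
estimated_vcpu = fleet_vcpu(2_000, 200)  # roughly 200 vCPU for proxies alone
```

Comparing this estimate with the CPU requests actually provisioned for the proxies is a quick way to spot the under-provisioning risk mentioned above.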

Marco Palladino, CTO and Co-Founder at Kong, underscores the trade-offs involved:

The API gateway pattern describes an additional hop in the network that every request will have to go through... In a service mesh pattern, we must deploy a proxy data plane alongside every replica of every service[3].

In distributed systems, latency builds up with each internal hop, especially when traffic crosses availability zones. This is further compounded by bi-directional billing, which effectively doubles transfer costs.

To mitigate these latency and cost issues, several strategies can be employed. Locality-aware routing is one approach, ensuring traffic stays within the same availability zone to avoid cross-zone fees, which can double network costs on platforms like AWS[4]. Another tactic is to selectively enforce mTLS only for sensitive services, reducing the overall CPU load. For large-scale deployments, moving to sidecar-less architectures like Istio Ambient or leveraging eBPF-based solutions such as Cilium can significantly reduce overhead. For example, Istio's ztunnel proxies add just 0.17ms to P90 latency and use 20–40MB of memory per node, compared to the 50–100MB required by sidecar proxies[12].
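Locality-aware routing boils down to preferring healthy endpoints in the caller's own zone and falling back only when none exist. The sketch below shows that selection rule in Python; the `Endpoint` type and zone names are hypothetical, and real meshes implement this through load-balancing configuration rather than application code.

```python
from typing import List, NamedTuple, Optional


class Endpoint(NamedTuple):
    address: str
    zone: str
    healthy: bool = True


def pick_endpoint(endpoints: List[Endpoint], caller_zone: str) -> Optional[Endpoint]:
    """Prefer a healthy endpoint in the caller's zone; otherwise use any healthy one."""
    healthy = [e for e in endpoints if e.healthy]
    same_zone = [e for e in healthy if e.zone == caller_zone]
    candidates = same_zone or healthy  # cross-zone only as a fallback
    return candidates[0] if candidates else None
```

A fuller implementation would balance load across the candidates instead of always taking the first, but the cost effect is the same: traffic crosses zone boundaries only when it has to.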

API gateway caching is another effective method. By caching frequently requested responses at the edge, backend calls can be minimised, reducing both latency and data transfer costs. For instance, AWS API Gateway offers caching options starting at approximately £0.03 per hour for a 1.6GB cache[8]. This modest expense can help avoid repeated high-cost service calls and lower overall monthly cloud bills.
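The effect of edge caching on backend calls can be illustrated with a small time-to-live cache. This is a generic sketch, not how AWS API Gateway implements its cache; the class name, the `fetch` callback, and the explicit `now` argument are assumptions made to keep the example self-contained.

```python
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Serve cached responses until they are older than `ttl_sec`."""

    def __init__(self, ttl_sec: float) -> None:
        self.ttl_sec = ttl_sec
        self._entries: Dict[str, Tuple[float, Any]] = {}
        self.backend_calls = 0  # how many requests actually reached the backend

    def get(self, key: str, fetch: Callable[[], Any], now: float) -> Any:
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self.ttl_sec:
            return entry[1]  # cache hit: no backend call, no extra data transfer
        self.backend_calls += 1
        value = fetch()
        self._entries[key] = (now, value)
        return value
```

Tracking `backend_calls` against total requests gives the hit ratio, which is the number to weigh against the hourly cache fee.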

Management and Operational Costs

When it comes to managing resources and ensuring smooth operations, the costs go far beyond the basics of compute power and network latency. In fact, the day-to-day operational expenses often dwarf the raw costs of running infrastructure. One key difference lies in the complexity of service meshes compared to API gateways. Service meshes demand significantly more effort to deploy and maintain. As Marco Palladino, CTO and Co-Founder of Kong, puts it:

The service mesh pattern... is more invasive than the API gateway pattern because it requires us to deploy a data plane proxy next to each instance of every service, requiring us to update our CI/CD jobs in a substantial way[3].

This added complexity translates into higher staffing costs, which can range from £40,000 to £120,000 per team. It also requires constant attention to tasks like control-plane upgrades, managing the lifecycle of sidecars, rotating certificates, and troubleshooting configurations [4][6]. On the other hand, managed API gateways, particularly SaaS-based ones, often provide a zero-ops experience. This means the provider takes care of scaling, patching, and certificate rotation, reducing the operational burden [6].

Another consideration is the sheer volume of telemetry data generated by service meshes. Every sidecar in a service mesh produces logs and metrics, which can drive up storage and monitoring costs. For instance, platforms like Datadog charge around £0.08 per ingested GB per month [4], making high-volume service mesh logs a costly affair. In fact, observability and telemetry costs for service meshes can be 65% to 75% higher than those for API gateway-based setups [5]. API gateways help control this by filtering and aggregating logs before they are sent to expensive monitoring tools [4].

Yet, addressing these operational challenges can lead to measurable business benefits. A great example comes from Sky Italia, which in March 2025 adopted Kong's API management platform with an APIOps approach, blending DevOps and GitOps workflows. Under the leadership of Claudio Spadaccini, Head of Integration, they achieved impressive results: an 80% reduction in deployment time, a 30% cut in infrastructure costs, and a 20% saving in development costs - all while maintaining 99.99% availability [14]. Spadaccini highlighted:

Through this new process, we have been able to reduce development time to enable fast usage and rapid testing as soon as the interface agreement is confirmed. This lets the team work much faster and more efficiently[14].

In multi-cloud environments, operational expertise becomes even more critical. Automation and expert guidance can significantly reduce overheads. Hokstad Consulting, for example, specialises in DevOps transformation and cloud cost optimisation. They help businesses automate CI/CD pipelines, fine-tune infrastructure, and cut cloud expenses by 30–50%. Their services include strategic cloud migration, tailored development and automation, and ongoing performance improvements. These efforts can prevent open-source management costs from ballooning into six figures over a three-year period [6].

Multi-Cloud Deployment Cost Strategies

Avoiding Vendor Lock-In

Planning your architecture carefully from the start can save you from pricey licensing tiers later. Many traditional vendors limit essential multi-cloud features to their higher subscription levels. For instance, Azure API Management's Premium v2 tier - necessary for self-hosted gateways - starts at around £2,350 per month[4]. Similarly, Apigee's hybrid deployment environments can cost approximately £2,880 monthly per environment, with additional hourly proxy deployment charges[4].

Opting for open-source standards can sidestep these limitations. Tools like Istio, Linkerd, or Kong provide flexibility across cloud providers, unlike proprietary, cloud-native solutions[11][3]. Marco Palladino, CTO and Co-Founder of Kong, highlights the value of such tools:

The API gateway pattern describes an additional hop in the network that every request will have to go through... it is an abstraction layer that allows us to change the underlying APIs over time without having to necessarily update the clients[3].

This abstraction layer becomes particularly useful when different teams rely on varying service meshes or Certificate Authorities. Using an intermediate API gateway ensures secure interoperability without needing to overhaul the entire architecture[3].

While avoiding vendor lock-in is essential, keeping resource waste in check is equally important for managing multi-cloud costs.

Reducing Resource Waste

Hidden network charges can quickly inflate multi-cloud budgets. For example, data egress fees can be unpredictable. In Europe, Azure charges around £0.073 per GB for internet egress, with inter-region transfers costing about £0.017 per GB[4]. AWS adds another layer of complexity with bi-directional pricing for cross-Availability Zone transfers - effectively charging for 2 GB when only 1 GB is transferred[4].

To mitigate these costs, intelligent routing and caching can make a big difference. Locality-aware routing ensures API calls are directed to the closest backend, reducing expensive cross-region transfers. Gateway caching, on the other hand, lowers execution fees and redundant egress charges[4].

Observability costs can also spiral out of control. For example, managed platforms like Datadog charge about £0.084 per ingested GB of logs per month. By filtering and aggregating gateway logs, organisations can cut ingestion volumes by 65–75%, significantly reducing these expenses[5].
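The saving from log filtering is straightforward to estimate. The sketch below uses the per-GB price and the 65–75% reduction range quoted above; the 10,000 GB monthly volume is an assumption chosen only to make the arithmetic visible.

```python
def monthly_ingestion_cost(ingested_gb: float, price_per_gb: float = 0.084) -> float:
    """Monthly log ingestion cost in GBP at a flat per-GB price."""
    return ingested_gb * price_per_gb


def cost_after_filtering(ingested_gb: float, reduction: float, price_per_gb: float = 0.084) -> float:
    """Cost once filtering removes `reduction` (a fraction from 0 to 1) of the volume."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return monthly_ingestion_cost(ingested_gb * (1.0 - reduction), price_per_gb)


# ASSUMPTION: 10,000 GB of gateway logs per month before filtering.
baseline = monthly_ingestion_cost(10_000)          # about GBP 840
low_saving = cost_after_filtering(10_000, 0.65)    # about GBP 294
high_saving = cost_after_filtering(10_000, 0.75)   # about GBP 210
```

The same two functions can be reused for service mesh telemetry by substituting the measured log volume per proxy.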

For companies struggling with complex billing across multiple vendors, expert help can be transformative. Hokstad Consulting, for instance, specialises in cloud cost engineering and DevOps transformation. Their strategies - ranging from automation to intelligent routing - have helped businesses cut cloud expenses by 30–50%. By avoiding inefficiencies, they can prevent a £100,000 infrastructure budget from ballooning into a £240,000 annual cost.

When to Choose Each Solution

Opt for an API gateway when your primary focus is managing incoming (north-south) traffic. A service mesh, on the other hand, becomes relevant as your microservices architecture grows more intricate. Deciding between the two is crucial for managing multi-cloud deployment costs effectively. In the UK, most organisations typically start with an API gateway, as every application needs an ingress solution. A service mesh only becomes essential when the complexity of your microservices architecture demands it[2].

Most organizations will start with an API Gateway over a service mesh, because everyone needs an ingress solution, while not everyone needs a service mesh.[2]

When API Gateways Cost Less

Building on earlier cost discussions, API gateways are often the more economical choice when dealing with north-south traffic - requests originating from external clients into your infrastructure. If you're running a SaaS platform, exposing public APIs, or monetising APIs through developer portals and billing, an API gateway is the logical solution[3].

Because an API gateway concentrates proxying in shared infrastructure at the edge, it avoids the additional CPU overhead that service meshes introduce via per-service proxies[5].

For workloads with intermittent traffic, API gateways with serverless integrations (like AWS API Gateway paired with Lambda) are especially cost-efficient. You pay only for the actual requests, avoiding the expense of maintaining always-on proxy infrastructure. Additionally, intelligent caching at the gateway level can significantly cut backend calls and data egress fees. This is particularly valuable given that Azure charges approximately £0.068 per GB for internet egress in Europe[4].

When Service Meshes Cost Less

For environments with intricate internal communication needs, service meshes can offer clear cost advantages. Service meshes excel in handling east-west traffic - service-to-service communication within your cluster. By enabling direct point-to-point connectivity, they reduce latency and unnecessary data transfers[13].

Service meshes also enhance developer productivity by automating network tasks like mutual TLS (mTLS) and circuit breaking, saving both time and operational costs[1][3]. For UK financial services firms that require strict zero-trust security, automating mTLS across all services through a service mesh is far more efficient than implementing it individually within each application[3].

Another cost-saving opportunity comes from moving away from traditional sidecar models to sidecar-less approaches. This transition can result in annual savings exceeding £1.4 million in large-scale deployments[4]. Optimised Agent Mesh configurations have demonstrated reductions in observability and governance costs by 65–75% compared to standard implementations[5]. If compute overhead is a concern, Hokstad Consulting offers expertise in cloud cost engineering, potentially cutting your infrastructure expenses by up to 50%.

| Choose API Gateway When | Choose Service Mesh When |
|---|---|
| Managing external (north-south) traffic | Handling complex internal (east-west) communication |
| Monetising APIs or providing developer portals | Automating zero-trust security across microservices |
| Running low-frequency or serverless workloads | Operating at scale with high internal traffic volumes |
| Reducing operational complexity | Enabling granular traffic control (e.g., canary or A/B testing) |

Conclusion

When deciding between a service mesh and an API gateway, it’s all about understanding what each brings to your architecture. API gateways are ideal for managing external traffic with predictable costs, while service meshes handle the complexities of internal communication - though they come with added infrastructure demands. Interestingly, these aren’t either-or choices. Many organisations in the UK combine both: API gateways for north-south traffic and service meshes for east-west communication. These decisions play a crucial role in shaping operational expenses and resource allocation.

It’s worth noting that service meshes can demand up to 90% more CPU cores due to sidecar proxies [5]. On the other hand, API gateway costs can rise unexpectedly because of hidden data egress charges or premium tier requirements [4]. However, newer sidecar-less architectures are significantly reducing the resource strain, making it essential to align your architecture with clear financial goals.

Budgeting for a hybrid API ecosystem is no longer about accepting vendor costs. It is more about driving architectural decisions by financial data.

– The Gravitee Team [5]

For UK businesses operating in multi-cloud environments, challenges such as network volatility and observability overhead can inflate costs. For example, cross-region data transfers can cost up to three times more than local transfers [4]. Similarly, telemetry from every service mesh sidecar can lead to unplanned monitoring expenses [4]. Solutions like intelligent routing, caching at the gateway level, and filtering observability data can help control these costs.

Keeping these expenses in check is essential for maintaining a successful multi-cloud strategy. If you're struggling with high cloud costs, Hokstad Consulting offers expertise in cloud cost engineering. They can help identify areas for optimisation, potentially cutting your spending by 30–50% through targeted architectural improvements and smarter resource allocation.

FAQs

What are the key cost differences between a service mesh and an API gateway?

The key cost distinction between API gateways and service meshes lies in where the expenses stack up. For API gateways, costs are typically tied to licensing, usage fees, and data transfer. For instance, you might be charged per request, per gigabyte of data leaving the system, or for premium add-ons like advanced routing or caching features. In multi-cloud or hybrid setups, these fees can surge dramatically due to cross-provider traffic and hidden egress charges.

In contrast, service meshes drive costs primarily through compute demands and operational overhead. Every microservice requires a sidecar proxy, effectively doubling CPU and memory usage. Additionally, the control plane introduces infrastructure costs for managing policies and telemetry. For larger organisations, this can lead to cloud bills that surpass £2.4 million annually. Beyond infrastructure, the complexity of service meshes means more engineering time is needed for tasks like deployment, monitoring, and ongoing maintenance, which further inflates expenses.

To put it simply, API gateways tend to cost more due to licensing and usage-based fees, while service meshes ramp up expenses through compute requirements and operational intricacies. Deciding between the two depends on whether your budget is more impacted by usage charges or infrastructure and labour costs.

How do sidecar-less service meshes reduce costs?

Sidecar-less, or ambient, service meshes offer a practical way to cut costs by eliminating the need for a dedicated proxy for every pod. This approach reduces the demand for CPU and memory, which means you can use smaller virtual machines or fewer nodes and save on cloud compute expenses. Plus, with fewer proxy hops per call, the latency overhead drops as well, which can be especially beneficial in multi-cloud setups.

This architecture also simplifies operations by relying on a shared per-node Layer 4 proxy (ztunnel, in Istio's ambient mode) instead of managing countless sidecars. That means no more deploying, updating, or monitoring thousands of sidecars - saving valuable engineering time and reducing operational complexity. On top of that, Layer 7 features can be activated selectively, for example through optional waypoint proxies in Istio, so you're not paying the price of full Layer 7 processing for all traffic.

When is it more cost-effective to use an API gateway instead of a service mesh?

An API gateway is often a more budget-friendly option when your main concern is managing edge traffic (north-south), and you don’t need the advanced service-to-service (east-west) communication features that a service mesh provides. A managed API gateway can efficiently handle tasks such as authentication, rate-limiting, caching, and routing at the network edge. Plus, it does so without the added compute and memory demands of sidecar proxies, which can help cut down on infrastructure costs - especially in multi-cloud environments where resource usage directly influences expenses.

If your application involves limited internal service communication, has modest scaling requirements, or can depend on a SaaS-based API gateway, skipping the complexity and overhead of a service mesh makes practical and financial sense. Service meshes come with ongoing CPU and memory costs for each pod and add layers of management complexity. This can outweigh their advantages when your needs are focused solely on edge-level functionality.

For applications with edge-focused needs, managed gateway solutions, and a priority on cutting both infrastructure and operational costs, an API gateway stands out as the more economical choice.