In multi-tenant CI/CD environments, where resources like servers and storage are shared across teams or projects, allocating costs accurately is a major challenge. Without proper tracking, expenses can spiral, leading to inefficiencies and disputes over resource usage. Here's what you need to know:

Key Challenges:

- Tracking ephemeral workloads (e.g., short-lived containers) accurately.

- Splitting shared infrastructure costs (e.g., NAT gateways, control planes).

- Enforcing consistent tagging of resources for cost attribution.

Solutions:

- Use Kubernetes-native tools like Kubecost or OpenCost for tracking usage by namespace or labels.

- Enforce strict tagging policies using tools like Open Policy Agent (OPA) or AWS Service Control Policies.

- Implement chargeback or showback dashboards to link costs to specific teams or projects.

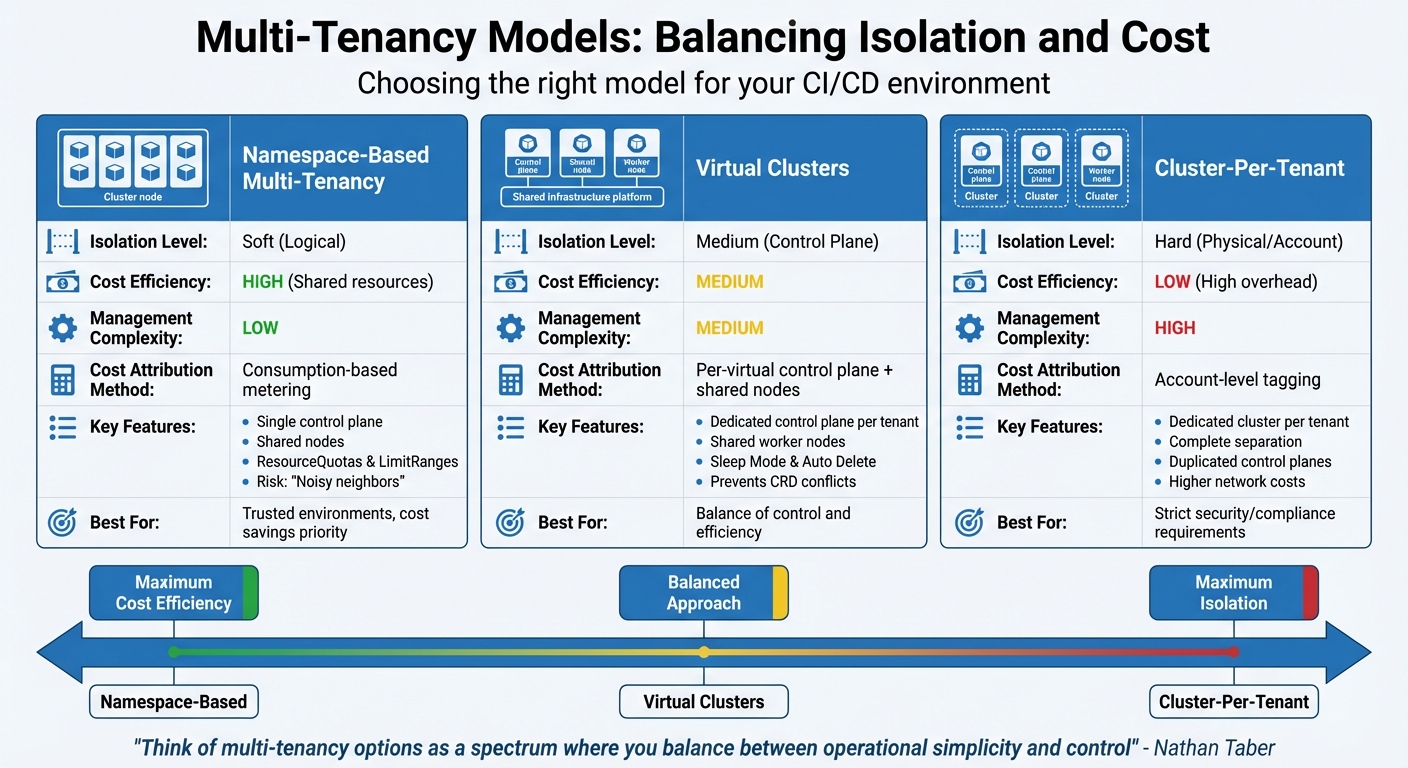

Multi-Tenancy Models:

- Namespace-Based: Cost-efficient but requires careful monitoring to avoid resource conflicts.

- Virtual Clusters: Balances isolation and cost by sharing nodes but separating control planes.

- Cluster-Per-Tenant: Offers full isolation but at a higher expense.

Accurate cost allocation ensures financial accountability and helps organisations manage shared infrastructure effectively. Whether you choose namespace-based setups or dedicated clusters, the right tools and practices can simplify this complex process.


Main Challenges in Multi-Tenant CI/CD Cost Allocation

Allocating costs effectively in multi-tenant CI/CD systems is no small feat. While these systems offer scalability and efficiency, accurately attributing expenses to individual tenants comes with its own set of hurdles.

Difficulty Tracking Resource Usage at Tenant Level

One of the biggest challenges is tracking resource usage at the tenant level. CI/CD workloads are often ephemeral - runners and containers spin up and disappear within minutes - making it tough to aggregate CPU, memory, and disk usage accurately [1]. Add to this the dynamic nature of pipelines that scale automatically based on demand, and pinpointing which tenant used specific resources becomes even trickier.

To make matters worse, standard monitoring tools rely on data sampling, which often misses the fine details needed for precise billing [4]. Organisations face a tough choice: opt for coarse-grained methods, such as estimating usage based on API calls or active users, which are easier to implement but less accurate, or go for fine-grained tracking with detailed metering instrumentation. While the latter provides better accuracy, it demands significant engineering effort. For instance, an optimised Prometheus server tailored for cost tracking can reduce stored metrics by 70–90% while still maintaining visibility [1].
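As a rough illustration of the fine-grained approach, the sketch below sums usage from individual job records instead of relying on sampled metrics. The `JobRecord` type, its fields, and the idea of a runner hook that emits one record per finished job are all assumptions made for the example, not part of any particular tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class JobRecord:
    """One finished CI job, as emitted by a hypothetical runner hook."""
    tenant: str
    cpu_cores: float   # average cores used while the job ran
    memory_gib: float  # average memory used while the job ran
    duration_s: float  # wall-clock lifetime of the runner or container


def aggregate_usage(records):
    """Sum CPU core-seconds and memory GiB-seconds per tenant."""
    totals = defaultdict(lambda: {"cpu_core_s": 0.0, "mem_gib_s": 0.0})
    for record in records:
        totals[record.tenant]["cpu_core_s"] += record.cpu_cores * record.duration_s
        totals[record.tenant]["mem_gib_s"] += record.memory_gib * record.duration_s
    return dict(totals)


# Illustrative records only; real values would come from the metering pipeline.
records = [
    JobRecord("team-a", cpu_cores=2.0, memory_gib=4.0, duration_s=90),
    JobRecord("team-a", cpu_cores=1.0, memory_gib=2.0, duration_s=30),
    JobRecord("team-b", cpu_cores=4.0, memory_gib=8.0, duration_s=45),
]
usage = aggregate_usage(records)
print(usage)
```

Because every job contributes its full lifetime, a container that lives for 30 seconds is counted even if it would fall between two scrape intervals of a sampling monitor.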

This challenge of tracking resource usage directly impacts the ability to fairly assign costs for shared infrastructure, which we’ll explore next.

Attributing Costs for Shared Infrastructure

Shared infrastructure adds another layer of complexity. Resources like NAT gateways, load balancers, and Kubernetes control planes are often used by multiple tenants simultaneously, making it difficult to break down costs by individual usage [7]. Compounding the issue, cloud providers typically limit the number of tags you can apply to a resource, making it impractical to tag every tenant for shared components [4].

In Kubernetes environments, the situation becomes even more complex due to additional layers like pods and namespaces [7]. As Anthony McClure from AWS puts it:

The real challenge lies in the pooled model, where tenants share resources. To address this challenge... involves making your shared resources tenant-aware [8].

Certain costs, such as those tied to commitment-based discounts (e.g., Reserved Instances or Savings Plans) or shared storage IOPS, are almost impossible to assign to specific tenants at the time they are incurred [5][6]. Without tenant-aware telemetry built into microservices, organisations are left with aggregate spending data that lacks the granularity needed to calculate meaningful unit economics.

Poor Tagging and Metadata Enforcement

Tagging is another critical issue. Tags are not retroactive - they only apply to billing data from the moment they are activated [5][2]. For example, if a resource is tagged on the last day of the month, it will appear as "untagged" for the previous 29 days, creating significant gaps in historical cost data.
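The arithmetic behind that example can be written out as a small helper. This is only a sketch: it assumes a 30-day month, equal daily cost, and a tag that applies from the day it is activated through to month end.

```python
def untagged_days(tag_active_from_day: int) -> int:
    """Days before activation, which stay untagged in billing data."""
    return tag_active_from_day - 1


def tagged_cost_fraction(days_in_month: int, tag_active_from_day: int) -> float:
    """Share of the month's cost that carries the tag, assuming equal daily cost."""
    if not 1 <= tag_active_from_day <= days_in_month:
        raise ValueError("tag_active_from_day must fall within the month")
    tagged_days = days_in_month - untagged_days(tag_active_from_day)
    return tagged_days / days_in_month


# Tag activated on day 30 of a 30-day month.
gap = untagged_days(30)
fraction = tagged_cost_fraction(30, 30)
print(gap, round(fraction * 100, 1))
```

In this case only one day in thirty, roughly 3% of the month's spend on that resource, can be attributed through the tag.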

Inconsistent tagging practices lead to incomplete datasets, which can erode trust between Finance, Engineering, and Executive teams [2]. The FinOps Foundation highlights this problem:

Having a tagging strategy that is only partially implemented or enforced will lead to incomplete and incorrect data which will lead to mistrust of the costs [2].

Organisations often measure their FinOps maturity in cost allocation by the percentage of taggable costs that are properly tagged. Those tagging less than 30% of their costs are considered at "Level 0" maturity, while high-maturity organisations can achieve tagging accuracy exceeding 90% [2].

When metadata enforcement is weak, it becomes harder to identify "noisy neighbour" tenants - those that consume a disproportionate share of resources. In some SaaS setups, the "Basic" tier might unexpectedly drive the largest share of infrastructure costs if usage isn’t properly monitored and controlled [3]. Addressing these tagging gaps is a necessary first step to building accurate cost allocation dashboards.

Solutions for Accurate Cost Allocation

Tackling the complexities of cost allocation in multi-tenant CI/CD environments can feel overwhelming, but with the right tools and practices, it becomes manageable. Kubernetes-native tools, strict enforcement policies, and clear reporting can help you navigate these challenges effectively.

Leverage Kubernetes‑Native Usage Metrics

Kubernetes offers built-in tools to track resource usage at the tenant level. By assigning each tenant to its own namespace, you can monitor CPU, memory, and storage consumption more effectively. Tools like ResourceQuotas and LimitRanges help set capacity limits for tenants, establishing clear cost baselines.
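A minimal sketch of what a per-tenant quota might look like is shown below, built as a Python dictionary and printed as JSON (which `kubectl apply -f` accepts alongside YAML). The namespace name and the capacity figures are placeholders, and setting limits equal to requests is a simplification chosen for the example.

```python
import json


def resource_quota(namespace: str, cpu: str, memory: str) -> dict:
    """Build a ResourceQuota manifest capping a tenant namespace's CPU and memory."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                # Limits mirror requests here purely to keep the sketch short.
                "limits.cpu": cpu,
                "limits.memory": memory,
            }
        },
    }


# "tenant-acme" and the 8 CPU / 16Gi figures are hypothetical.
quota = resource_quota("tenant-acme", cpu="8", memory="16Gi")
print(json.dumps(quota, indent=2))
```

The quota gives each tenant a known ceiling, which is what makes it usable as a cost baseline.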

As noted in the OpenCost specification:

Workload Costs should be understood as max(request, usage) when Assets have Resource Allocation Costs, e.g. CPU or GPU [11].

This means costs account for reserved resources - even if they’re not actively used - ensuring fair allocation. For stricter resource separation, you can dedicate node pools to specific tenants using taints and tolerations, enabling high-priority tenants to be billed directly.
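The `max(request, usage)` rule from the quotation can be illustrated with a few lines of Python. The hourly rate and the workload figures are made-up inputs, and the function is a simplified reading of the rule for a single resource, not the OpenCost implementation.

```python
def workload_cost(request: float, usage: float, hourly_rate: float, hours: float) -> float:
    """Bill the larger of what was reserved and what was actually consumed."""
    billable_units = max(request, usage)
    return billable_units * hourly_rate * hours


# Hypothetical rate of 0.04 per core-hour over a 10-hour window.
idle_reserver = workload_cost(request=2.0, usage=0.5, hourly_rate=0.04, hours=10)
burst_user = workload_cost(request=1.0, usage=3.0, hourly_rate=0.04, hours=10)
print(idle_reserver, burst_user)
```

The first workload is charged for its two reserved cores even though it used half a core; the second is charged for the three cores it consumed above its request.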

Solutions like Kubecost and OpenCost integrate with Prometheus to provide detailed cost insights by namespace, deployment, or custom labels [1]. Kubecost optimises Prometheus usage by retaining only cost-relevant metrics, reducing data retention by 70–90% compared to standard setups [1]. Yet, the challenge remains: 40% of teams admit their Kubernetes cost monitoring relies on estimates, and 38% lack monitoring entirely [12].

Enforce Strict Tagging Policies

Cost allocation efforts often fail without proper tagging enforcement. The key is to block untagged resources from being deployed in the first place. Open Policy Agent (OPA) Gatekeeper can act as a Kubernetes admission controller, rejecting any resource - like namespaces, pods, or deployments - without the required tenant labels.
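The check an admission controller performs can be sketched in plain Python. This is not Gatekeeper's Rego policy language or its API; it only illustrates the decision logic, and the required label names (`tenant`, `cost-centre`) are assumptions for the example.

```python
REQUIRED_LABELS = ("tenant", "cost-centre")  # hypothetical policy


def missing_labels(manifest: dict, required=REQUIRED_LABELS) -> list:
    """Return required label keys that are absent or empty on the manifest."""
    labels = (manifest.get("metadata") or {}).get("labels") or {}
    return [key for key in required if not labels.get(key)]


def admit(manifest: dict) -> tuple:
    """Mimic an admission decision: (allowed, message)."""
    missing = missing_labels(manifest)
    if missing:
        return False, f"denied: missing required labels {missing}"
    return True, "allowed"


unlabelled = {"kind": "Namespace", "metadata": {"name": "ci-builds"}}
labelled = {
    "kind": "Namespace",
    "metadata": {
        "name": "ci-builds",
        "labels": {"tenant": "acme-corp", "cost-centre": "finance"},
    },
}
print(admit(unlabelled))
print(admit(labelled))
```

Rejecting at admission time means the resource never runs untagged, so no cost is incurred that later has to be attributed by hand.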

In AWS environments, Service Control Policies (SCPs) can enforce tagging rules, ensuring resources are tagged at creation. Be mindful of AWS tag limits - keys are capped at 128 characters, values at 256 characters, and only the first 50 Kubernetes labels per pod are imported into Amazon EKS cost allocation tags [10].
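Those limits are easy to check before resources are created. The sketch below validates a tag dictionary against the three figures quoted above; it counts Python characters, which is an approximation of how the provider measures length, and the function name is hypothetical.

```python
MAX_KEY_LEN = 128          # tag key limit quoted above
MAX_VALUE_LEN = 256        # tag value limit quoted above
MAX_IMPORTED_LABELS = 50   # labels per pod imported for cost allocation


def check_tags(tags: dict) -> list:
    """Return human-readable problems; an empty list means the tags fit."""
    problems = []
    for key, value in tags.items():
        if len(key) > MAX_KEY_LEN:
            problems.append(f"key too long ({len(key)} chars): {key[:20]}...")
        if len(value) > MAX_VALUE_LEN:
            problems.append(f"value too long for key {key!r} ({len(value)} chars)")
    if len(tags) > MAX_IMPORTED_LABELS:
        problems.append(f"{len(tags)} labels set; only the first {MAX_IMPORTED_LABELS} are imported")
    return problems


ok = check_tags({"TenantID": "acme-corp", "Environment": "ci"})
too_long = check_tags({"k" * 129: "v"})
too_many = check_tags({f"label-{i}": "x" for i in range(51)})
print(ok, too_long, too_many)
```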

To standardise tagging, categorise labels into three groups:

- Business: Cost Centre, Owner, TenantID

- Technical: Environment, ApplicationID

- Automation: Schedule, OptOut

Keep in mind that new tags may take up to 24 hours to appear in billing consoles and another 24 hours to activate [10]. High-performing FinOps teams aim to tag and allocate over 90% of their costs [2]. A dashboard highlighting "untagged" or "unallocated" costs can encourage teams to address non-compliant resources.

Implement Chargeback with Per‑Tenant Dashboards

Chargeback systems directly deduct costs from specific teams' budgets, while showback tools raise awareness by presenting detailed expenditure reports [9]. Dashboards should allow users to explore costs by namespace, service, deployment, label, pod, container, team, or product [13].

For stakeholders without kubectl access, dashboards can be made accessible through an NGINX Ingress controller with basic authentication or SSO/SAML [1]. A comprehensive dashboard should also account for "un-allocatable" costs - such as shared services or the Kubernetes control plane - by distributing them proportionally, uniformly, or using custom metrics [11]. Additionally, out-of-cluster costs (e.g., RDS instances, S3 buckets, or CloudFront distributions) can be attributed to tenants using external labels [22][24].
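The proportional and uniform strategies for spreading shared costs can be sketched as two small functions. The tenant names and figures are illustrative, and a "custom metric" split is assumed here to be the proportional function fed with a different weight (for example, request counts instead of direct spend).

```python
def split_uniformly(shared_cost: float, tenants: list) -> dict:
    """Every tenant pays an equal share of the shared cost."""
    share = shared_cost / len(tenants)
    return {tenant: share for tenant in tenants}


def split_proportionally(shared_cost: float, weights: dict) -> dict:
    """Each tenant pays in proportion to its weight (direct cost or a custom metric)."""
    total = sum(weights.values())
    if total == 0:
        # No usage signal at all: fall back to an even split.
        return split_uniformly(shared_cost, list(weights))
    return {tenant: shared_cost * weight / total for tenant, weight in weights.items()}


# Hypothetical month: 100 of control-plane cost, direct spend of 300 and 100.
direct_costs = {"team-a": 300.0, "team-b": 100.0}
proportional = split_proportionally(100.0, direct_costs)
uniform = split_uniformly(100.0, list(direct_costs))
print(proportional, uniform)
```

The proportional split charges the heavier tenant more, while the uniform split treats the shared component as a flat platform fee; which is fairer depends on whether the shared cost actually scales with usage.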

Dashboards should also focus on unit economics, like "Cost per Customer" or "Cost per Feature", to align engineering decisions with business goals [14]. For precise billing, consider building a custom pipeline using Azure Event Hubs or AWS Cost and Usage Reports (CUR) to process raw data, rather than relying on tools that sample data [25][8]. Only 21% of teams have successfully implemented accurate chargeback or showback models for their clusters [12].
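A unit-economics figure such as cost per customer is a simple division once costs are allocated. The sketch below uses invented tier names, costs, and customer counts purely to show the shape of the calculation.

```python
def cost_per_customer(tier_costs: dict, tier_customers: dict) -> dict:
    """Divide each tier's allocated cost by its customer count (None if no customers)."""
    result = {}
    for tier, cost in tier_costs.items():
        customers = tier_customers.get(tier, 0)
        result[tier] = cost / customers if customers else None
    return result


# Hypothetical monthly figures.
tier_costs = {"basic": 1200.0, "premium": 900.0}
tier_customers = {"basic": 400, "premium": 30}
unit_costs = cost_per_customer(tier_costs, tier_customers)
print(unit_costs)
```

With these inputs the basic tier accounts for the larger total bill while the premium tier costs more per customer, which is the kind of contrast a unit-economics view is meant to surface.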

These strategies lay the groundwork for more advanced cost allocation practices, helping teams achieve better financial clarity and operational efficiency.


Comparing Multi-Tenancy Models for Cost Allocation

[Figure: Multi-Tenancy Models Comparison: Isolation vs Cost Efficiency]

Selecting the right multi-tenancy model is more than just a technical decision - it directly influences your infrastructure expenses and how accurately you can track spending. Nathan Taber puts it succinctly:

Customers should think of multi-tenancy options as a spectrum where you balance between operational simplicity and control [1].

Here’s a closer look at three common models, each sitting at a different point on this spectrum, balancing isolation and cost efficiency.

Namespace-Based Multi-Tenancy

This model is all about maximising resource efficiency. By sharing nodes and running a single control plane, namespace-based isolation keeps costs low [1]. However, tracking expenses can get tricky. Containers often have short lifespans and fluctuating usage, making tools like Kubecost essential for aggregating CPU, memory, and storage consumption over time [1][17].

The downside? The risk of "noisy neighbours". Heavy workloads from one tenant can affect others, leading to hidden costs tied to troubleshooting and performance fixes [15][16]. To mitigate this, Kubernetes features like ResourceQuotas and LimitRanges can help ensure tenants don’t exceed their allocated resources [17]. While cost-effective, this model demands careful management to maintain performance and fairness.

Cluster-Per-Tenant Model

If isolation is your top priority, the cluster-per-tenant model is the go-to option. Each tenant gets their own cluster, simplifying cost tracking since expenses are naturally separated by AWS account or cluster [1]. But this comes at a price.

This approach significantly increases spending due to duplicated control planes and shared services like logging and monitoring in every cluster. Network costs also rise when services in separate accounts communicate across VPC boundaries. Additionally, unused capacity in dedicated node groups can hurt efficiency [1]. Despite the higher costs, this model is ideal for environments with strict security or regulatory requirements, where complete separation is non-negotiable.

Virtual Clusters

Virtual clusters strike a balance between the two extremes. Tenants get a dedicated control plane - covering components like the API server and etcd - while sharing the underlying host cluster’s worker nodes [18][19]. This setup solves issues like "CRD conflicts" seen in namespace-based tenancy, allowing tenants to manage their own cluster-wide resources without impacting others [18][20].

Cost allocation is also more precise. By mapping virtual clusters to specific namespaces or labels, you can accurately measure compute, storage, and network usage for each tenant [18][12]. Features like "Sleep Mode" and "Auto Delete" further reduce costs by shutting down idle environments [18]. To prevent resource monopolisation, apply quotas at the namespace level within the host cluster [19][21]. This model offers a practical middle ground, balancing control with cost efficiency.

Below is a summary of the key attributes of each model:

| Model | Isolation Level | Cost Efficiency | Management Complexity | Cost Attribution Method |
|---|---|---|---|---|
| Namespace-Based | Soft (Logical) | High (Shared resources) | Low | Consumption-based metering [1] |
| Virtual Clusters | Medium (Control Plane) | Medium | Medium | Per-virtual control plane + shared nodes [19] |
| Cluster-Per-Tenant | Hard (Physical/Account) | Low (High overhead) | High | Account-level tagging [1] |

For teams operating in a trusted environment, namespace-based isolation offers excellent cost savings. If tenants need flexibility for different CRD versions or webhooks, virtual clusters provide the necessary isolation without the hefty price tag of dedicated clusters [19]. Meanwhile, the cluster-per-tenant model is best reserved for scenarios where strict compliance or security demands full separation.

Implementation Best Practices and Hokstad Consulting Expertise

Getting cost allocation right in multi-tenant CI/CD environments can feel like a daunting task. However, with the right strategies and expert guidance, what seems overwhelming can be transformed into a smooth, automated process. Below are some effective practices that ensure precision in cost allocation.

Infrastructure as Code for Cost Allocation

The backbone of accurate cost allocation lies in Infrastructure as Code (IaC). Tools like Terraform allow you to embed essential tagging structures - such as tenant_id and environment - directly into your infrastructure. Combined with Policy as Code tools like Open Policy Agent (OPA), you can automatically block non-compliant deployments, reducing human error and ensuring financial accountability [2][23]. This approach builds on tagging policies, ensuring consistency and accuracy from the start.

IaC doesn’t just stop at tagging. By integrating Policy as Code tools into your CI/CD pipeline, you can reject any resource that lacks the required cost-allocation metadata [2]. For example, tools like Open Policy Agent or Conftest can enforce compliance during development. Additionally, cost estimation tools like Infracost can be incorporated into pull requests, giving developers immediate visibility into the financial impact of their changes [23][14]. It’s worth noting that tags only take effect from the moment they are applied [2].
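One way a pipeline step could reject untagged infrastructure is to inspect the JSON form of a Terraform plan (the output of `terraform show -json`). The sketch below is an assumption-laden illustration rather than a replacement for OPA or Conftest: it assumes taggable resources expose a `tags` attribute under `change.after`, uses the `tenant_id` and `environment` keys mentioned earlier as the required set, and runs against a tiny inline plan with made-up resource addresses.

```python
import json

REQUIRED_TAGS = {"tenant_id", "environment"}


def untagged_resources(plan: dict, required=REQUIRED_TAGS) -> dict:
    """Map resource address -> sorted list of missing required tag keys."""
    findings = {}
    for resource_change in plan.get("resource_changes", []):
        after = (resource_change.get("change") or {}).get("after")
        if after is None or "tags" not in after:
            # Resource is being destroyed, or has no tags attribute in the plan.
            continue
        tags = after.get("tags") or {}
        missing = sorted(required - set(tags))
        if missing:
            findings[resource_change["address"]] = missing
    return findings


# Inline stand-in for a plan file; addresses and values are hypothetical.
plan = json.loads("""
{
  "resource_changes": [
    {"address": "aws_s3_bucket.artifacts",
     "change": {"actions": ["create"],
                "after": {"tags": {"tenant_id": "acme", "environment": "ci"}}}},
    {"address": "aws_instance.runner",
     "change": {"actions": ["create"], "after": {"tags": null}}},
    {"address": "aws_instance.old",
     "change": {"actions": ["delete"], "after": null}}
  ]
}
""")
findings = untagged_resources(plan)
print(findings)
```

In a real pipeline the step would exit with a non-zero status when `findings` is non-empty, so the merge is blocked before any untagged resource is provisioned.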

Automated Audits and Cost Reporting

Manual reviews simply can’t keep up with the speed of modern deployments. Automated audits step in to quickly flag untagged or non-compliant resources, while also calculating their financial impact [2]. High-performing organisations aim to reduce the time between incurring a cost and displaying it to the responsible team to under 24 hours. They often allocate over 90% of costs to specific owners and maintain tag compliance above 80% [2].

These results are achieved through a combination of resource hierarchies, mandatory tagging policies enforced at the provisioning stage, and automated workflows that catch any manual changes made outside of IaC [2][23]. This ensures that cost accountability becomes an integral part of the process, not an afterthought.
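An automated audit of this kind can be sketched as a function over billing line items. The item structure, the `tenant_id` tag key, and the figures are assumptions for the example; a real audit would read from the provider's billing export.

```python
def audit(line_items: list, required_tag: str = "tenant_id") -> dict:
    """Summarise how much cost is allocated and list untagged items by cost."""
    total = sum(item["cost"] for item in line_items)
    untagged = [item for item in line_items if not item.get("tags", {}).get(required_tag)]
    untagged_cost = sum(item["cost"] for item in untagged)
    allocated_pct = 100 * (total - untagged_cost) / total if total else 100.0
    return {
        "allocated_pct": allocated_pct,
        "untagged_cost": untagged_cost,
        # Largest offenders first, so the most expensive gaps get fixed first.
        "offenders": sorted(untagged, key=lambda item: item["cost"], reverse=True),
    }


# Hypothetical line items for one billing period.
line_items = [
    {"resource": "runner-pool-a", "cost": 650.0, "tags": {"tenant_id": "team-a"}},
    {"resource": "runner-pool-b", "cost": 300.0, "tags": {"tenant_id": "team-b"}},
    {"resource": "orphan-volume", "cost": 50.0, "tags": {}},
]
report = audit(line_items)
print(report["allocated_pct"], [item["resource"] for item in report["offenders"]])
```

Run on a schedule, a report like this shows whether the 90% allocation target is being met and puts a cost figure on each untagged resource.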

Hokstad Consulting's No-Savings-No-Fee Model

Hokstad Consulting offers a wealth of expertise in DevOps transformation and cloud cost engineering, helping businesses optimise their multi-tenant CI/CD environments. Their approach focuses on achieving up to 50% cost savings by refining infrastructure and deployment practices [22]. What makes their service stand out is their No-Savings-No-Fee model: you only pay based on the savings they deliver, with fees capped as a percentage of the actual reductions.

Their services cover everything from implementing production-ready IaC with automated validation and policy enforcement to setting up detailed cost attribution for containerised workloads, such as ECS and EKS clusters. Whether you’re looking for a one-time cloud cost audit, ongoing infrastructure monitoring, or a comprehensive DevOps transformation, Hokstad Consulting tailors their solutions to fit your specific multi-tenancy setup and business needs.

With expertise across both public and private cloud environments, they tackle the unique challenges posed by multi-tenant architectures. Their strategies address everything from namespace-based isolation to cluster-per-tenant models, seamlessly integrating cost allocation practices into your system. By embedding these methods, Hokstad Consulting ensures that your multi-tenant CI/CD environment operates efficiently, with clear and precise cost tracking.

Conclusion

Getting multi-tenant CI/CD cost allocation right isn’t just a nice-to-have - it’s a must. By 2025, cloud cost allocation is expected to rank as the second highest priority for FinOps practitioners [24]. This means businesses can no longer rely on vague estimates or outdated methods.

Using Kubernetes-native metrics, strict tagging practices, and per-tenant dashboards can provide the accuracy needed for effective cost allocation. Whether you choose namespace-based multi-tenancy, cluster-per-tenant models, or virtual clusters, the trick is finding the right balance between isolation and efficiency.

These methods aren’t standalone - they fit into a broader strategy for managing and optimising costs. Starting with a showback model and evolving to chargeback ensures transparency and accountability. Pair this with tools like Infrastructure as Code, automated audits, and consumption-based tracking, and cost allocation shifts from being a frustrating chore to a strategic advantage.

Hokstad Consulting offers expert support to fast-track this transformation. With their No-Savings-No-Fee model, you only pay based on the actual savings they help you achieve, and fees are capped to match those savings. Their tailored solutions cover everything from implementing production-ready IaC with automated validation to setting up detailed cost attribution for containerised workloads. By focusing on the unique needs of multi-tenant architectures across public, private, and hybrid cloud setups, they help turn infrastructure spending into a true competitive edge.

FAQs

How do Kubernetes-native tools simplify cost allocation in multi-tenant environments?

Kubernetes-native tools make cost allocation simpler by leveraging metadata like labels or annotations (e.g., cost-centre=finance or tenant=acme-corp). These labels help track resource usage - such as CPU, memory, storage, and network - across shared clusters with precision. This ensures a clear and accurate distribution of costs. Tools like Kubecost and OpenCost turn this data into real-time dashboards, providing insights into daily spending in £, projected costs, and alerts for any excessive resource consumption.

These tools can also integrate directly into CI/CD pipelines, automatically tagging workloads with tenant-specific labels to maintain accurate cost tracking from the moment of deployment. Additionally, reports can be exported to analytics platforms, allowing finance teams to reconcile costs against budgets by date (dd/mm/yyyy). Hokstad Consulting supports businesses in adopting these solutions, helping to fine-tune Kubernetes environments for efficient and predictable cost management.

What are the key differences between namespace-based and cluster-per-tenant models in multi-tenant CI/CD environments?

In a namespace-based model, all tenants operate within a single Kubernetes cluster, with their workloads organised into separate namespaces. The cluster's resources, including the control plane (API server, scheduler, controller-manager) and worker nodes (CPU, memory, storage), are shared among all tenants. Isolation between tenants is achieved using Kubernetes tools like RBAC (Role-Based Access Control), network policies, and resource quotas. This setup is both cost-efficient and quick to deploy, but it can bring challenges like "noisy neighbour" issues and weaker security boundaries.

The cluster-per-tenant model takes a different approach, assigning each tenant their own Kubernetes cluster. This ensures full isolation of the control plane, node pools, and networking stack, delivering stronger security and more consistent performance. However, it also increases costs and operational complexity, as each cluster must be individually provisioned, upgraded, and monitored.

The namespace model works well for smaller teams or workloads that are less critical. In contrast, the cluster-per-tenant approach is ideal for use cases where strict isolation, regulatory compliance, or reliable performance are essential - despite the extra expense and effort required to manage it.

Why is proper tagging crucial for managing costs in multi-tenant CI/CD environments?

Proper tagging plays a crucial role in associating CI/CD resources with the right projects, teams, or environments. This not only ensures accurate cost allocation but also promotes transparency and accountability across your organisation.

By adopting clear and consistent tagging practices, businesses can gain better insight into finances, minimise wasteful spending, and manage budgets more efficiently - particularly in complex multi-tenant systems. It also enables smarter decisions when it comes to optimising resource usage and keeping cloud costs under control.