Federated key management simplifies encryption key handling in multi-cluster Kubernetes setups by centralising policy control while ensuring local enforcement. This approach resolves challenges like key sprawl and inconsistent encryption policies, which increase security risks and complicate compliance with regulations such as UK GDPR.

Key highlights:

- Centralised Policies: Policies for encryption, key rotation, and access control are defined in a host cluster and applied across member clusters.

- Local Enforcement: Member clusters enforce these policies locally, reducing latency and avoiding single points of failure.

- Compliance and Security: Federated management ensures consistent encryption practices, meeting regulatory standards and minimising vulnerabilities.

Key components include Kubernetes encryption at rest, integration with external Key Management Services (KMS), workload identity federation to eliminate static credentials, and GitOps workflows for automated policy application.

This approach not only strengthens security but also reduces administrative overhead by automating tasks like key rotation and policy updates. Organisations can start with a small proof-of-concept and scale securely with expert guidance.


Designing a Federated Key Management Architecture

Creating a secure key management architecture involves balancing centralised policy management with decentralised operations. For example, a UK-based organisation might use a host cluster in a London region to define and manage key policies, while member clusters across the UK and EU enforce these policies locally through their own KMS providers or HSMs. This setup minimises latency and keeps cryptographic operations close to the data, reducing risks and avoiding single points of failure[2][6]. The next step is establishing trust boundaries to ensure smooth propagation of policies.

The architecture should focus on three main goals: minimising the impact of compromises, consistent enforcement of policies, and low-latency key operations. By separating encryption domains for each cluster or tenant, you limit the damage if a key is compromised. Central policy controllers and admission webhooks ensure all clusters comply with approved encryption configurations, while the actual encryption and decryption processes occur locally. This aligns with ICO guidance on data protection, allowing keys and operations to remain region-specific when necessary for UK data residency.

Setting Up Trust and Key Domains

Defining trust boundaries is essential. Start with an organisation-wide root key stored in an HSM or cloud KMS, and derive per-cluster and per-tenant keys from this root. This structure supports central auditing while limiting the impact of individual compromises[8]. For instance, a UK HSM-backed root can be used for production clusters, with separate roots for non-production environments.

Using distinct roots for production and non-production environments enhances security but adds complexity. Alternatively, shared roots with strongly separated intermediate keys simplify management but increase the risk if a root is compromised. For multi-tenant clusters, assign namespace or tenant-specific encryption keys, ensuring each tenant's data uses unique key material. Keep secret materials - such as Kubernetes etcd encryption keys, application envelope keys, and token-signing keys - in separate hierarchies to prevent cross-use and simplify revocation.

To establish trust between host and member clusters, implement a PKI hierarchy where a federation CA signs certificates for key-management components such as KMS plugins, Secrets Store CSI driver providers, controllers, and webhooks. Use mutual TLS between clusters with these certificates[4][5]. Register member clusters with the host control plane by providing kubeconfigs referencing the appropriate CA and client credentials. Limit the host cluster’s permissions to only what’s necessary, such as managing specific CRDs and Secrets in dedicated namespaces, rather than granting cluster-admin rights[6][7]. Secure network paths with private connectivity (e.g., VPC peering, VPN), and restrict API endpoints for KMS and federation traffic by IP and layer-7 authentication.

Centralising Key Policies Across Clusters

Once trust domains are in place, centralise key policies to ensure consistent enforcement across clusters. Define a KeyPolicy CRD on the host cluster, specifying algorithms, key lengths, usages, and rotation schedules[6][7]. These CRDs are then synchronised to member clusters using Kubernetes federation or synchronisation controllers, which convert them into provider-specific KMS resources, such as AWS KMS keys or GCP Cloud KMS key rings. GitOps tools can manage these CRDs declaratively, enabling audited and consistent updates, such as tightening algorithms or shortening rotation schedules[6][7].
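As a rough illustration of what such a policy object could look like, here is a minimal sketch of a KeyPolicy instance. No published KeyPolicy API is assumed: the API group `security.example.com`, the namespace, and every field under `spec` are hypothetical names chosen for this example, and a real controller would define its own schema.

```yaml
# Hypothetical custom resource: the group, kind, and all spec fields are
# illustrative names, not a published API.
apiVersion: security.example.com/v1alpha1
kind: KeyPolicy
metadata:
  name: etcd-envelope-baseline
  namespace: key-policies
spec:
  usage: etcd-envelope            # what the key protects
  algorithm: AES-256-GCM
  keyLengthBits: 256
  rotation:
    intervalDays: 90
    gracePeriodDays: 14           # how long the previous version may still decrypt
  placement:
    clusterSelector:
      matchLabels:
        environment: production   # only production member clusters receive it
```

Keeping the policy free of provider-specific identifiers lets each member cluster's controller translate it into whatever its local KMS expects.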

Policy controllers can block local key resources that don’t conform to the approved baseline. For instance, central configurations might require all etcd storage to use envelope encryption via a KMS plugin and prohibit storing unencrypted application Secrets in etcd. Instead, Secrets could be synced from an external vault using CRDs. Clusters in different regions or on different cloud providers can adapt this template to their local KMS services, while federation controllers ensure compliance with approved algorithms, rotation windows, and retention policies. Standard labels and annotations - like security.hokstad.co.uk/encryptionProfile: pci - help policy engines such as OPA or Gatekeeper enforce consistent encryption standards, even across clusters managed by different teams.
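One way to enforce such a label is a Gatekeeper constraint. The sketch below assumes the `K8sRequiredLabels` ConstraintTemplate from the community Gatekeeper library is already installed in the cluster; the constraint name and the allowed profile values are assumptions made for this example.

```yaml
# Assumes the K8sRequiredLabels ConstraintTemplate (Gatekeeper library) exists.
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: namespaces-need-encryption-profile   # illustrative name
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    message: "Every namespace must declare an approved encryption profile"
    labels:
      - key: security.hokstad.co.uk/encryptionProfile
        allowedRegex: "^(pci|standard)$"     # assumed set of profiles
```

Applying the same constraint from Git to every member cluster keeps the check identical even where different teams own the clusters.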

Identity and Access Control for Key Management

In federated setups, RBAC should clearly define roles and responsibilities. On the host cluster, create fine-grained roles (e.g., KeyPolicyAdmin, KeyOps, KeyAudit) within restricted namespaces to minimise privileges on sensitive resources[6][7]. On member clusters, separate application operators, who reference key IDs and configure encryption, from security or platform operators, who manage KMS plugins, EncryptionConfiguration, and ServiceAccount policies. Federation-specific RBAC should ensure only a select group can modify federated resources that affect multiple clusters.
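A read-only audit role on the host cluster might be sketched as follows. The RBAC kinds and fields are standard Kubernetes; the `security.example.com` group, the `keypolicies` resource, the namespace, and the `security-auditors` group are hypothetical placeholders.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: key-audit
  namespace: key-policies               # restricted namespace (assumed name)
rules:
  - apiGroups: ["security.example.com"] # hypothetical policy API group
    resources: ["keypolicies"]
    verbs: ["get", "list", "watch"]     # read-only: no create, update, or delete
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: key-audit-binding
  namespace: key-policies
subjects:
  - kind: Group
    name: security-auditors             # assumed identity-provider group
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: key-audit
  apiGroup: rbac.authorization.k8s.io
```

A KeyPolicyAdmin role would follow the same shape with write verbs added and a much smaller set of subjects.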

Workload identity federation is key to securing short-lived credentials for KMS access, reducing reliance on static secrets[3]. This involves setting up an identity pool or provider in the cloud KMS that trusts OIDC tokens issued by Kubernetes clusters or a central identity issuer. Map Kubernetes ServiceAccount identities - based on namespace, name, and labels - to cloud IAM roles that grant specific KMS permissions, such as Encrypt, Decrypt, or GenerateDataKey, for designated keys[3]. Configure member clusters to issue projected ServiceAccount tokens with the appropriate audience and lifetime. Applications can then use these tokens and a small credential configuration to seamlessly access KMS services. Standardising ServiceAccount naming and labels across clusters simplifies role mappings and aligns with UK security principles, such as least privilege and avoiding long-lived secrets.
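The projected token part of this flow can be sketched with a standard Pod spec. The names, image, and the `kms.example.com` audience below are assumptions; the audience in particular must match whatever the cloud identity provider is configured to accept.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: payments-api
  namespace: payments
spec:
  serviceAccountName: payments-kms-client   # identity mapped to a cloud IAM role
  containers:
    - name: app
      image: registry.example.com/payments-api:1.0.0   # placeholder image
      volumeMounts:
        - name: kms-token
          mountPath: /var/run/secrets/kms
          readOnly: true
  volumes:
    - name: kms-token
      projected:
        sources:
          - serviceAccountToken:
              path: token
              audience: kms.example.com     # assumed audience value
              expirationSeconds: 3600       # short-lived; the kubelet refreshes it
```

The application reads the token from `/var/run/secrets/kms/token` and exchanges it with the cloud provider, so no static key is stored in the cluster.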

For organisations managing complex hybrid environments, expert guidance can simplify these designs. Hokstad Consulting specialises in multi-cluster DevOps, secure Kubernetes environments, and automation for key management and workload identity. For more information, visit Hokstad Consulting.

Implementing Encryption at Rest with Federated Key Control

Figure: 5-Step Implementation Guide for Federated Kubernetes Encryption at Rest

To secure federated clusters, start by establishing trust domains and centralised policies. One essential step is enabling encryption at rest for etcd. This process involves configuring providers, deploying standardised templates, and verifying encryption.

Understanding Kubernetes Encryption at Rest

Kubernetes uses EncryptionConfiguration files to manage how data at rest is encrypted and decrypted. These files define a priority order for encryption providers. Common providers include:

- KMS: Integrates with external key management services like AWS KMS or Google Cloud KMS, using hardware-backed keys for added security.

- aescbc: Relies on software-based AES-CBC encryption with a static key held in the configuration file, often used for testing or isolated environments.

- secretbox: Utilises XSalsa20-Poly1305 for fast, authenticated encryption.

Here’s an example configuration:

    apiVersion: apiserver.config.k8s.io/v1
    kind: EncryptionConfiguration
    resources:
      - resources:
          - secrets
        providers:
          - aescbc:
              keys:
                - name: key1
                  # Example value only; a real key must be 16, 24, or 32 random bytes, base64-encoded
                  secret: cTEtMjUtYWVzLWNibC1rZXkxCg==

In federated setups, KMS is the preferred choice for production due to its hardware-backed key isolation. Meanwhile, aescbc can serve as a fallback. For industries with strict regulations, Hardware Security Modules (HSMs) provide tamper-resistant, FIPS-compliant storage.

To maintain consistency, distribute these configurations using a federated encryption template.

Federated Workflow for Encryption Templates

In a federated environment, encryption configurations are centrally managed through standardised templates. These templates enforce the key policies established earlier, ensuring consistent encryption practices across clusters. Tools like KubeFed or Cluster API can synchronise these templates.

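While the configuration itself (e.g., providers and resource types like Secrets) is shared, sensitive or cluster-specific details such as KMS key URIs and locally generated keys are handled locally. For instance, a template might include a parameter like a cache prefix (e.g., mycluster-) that is replaced with a cluster-specific value during deployment. The template is typically distributed via federated ConfigMaps or Deployments, but the kube-apiserver reads the EncryptionConfiguration from a file on the control-plane node, so a node-level step still has to place the rendered file on disk. The kms provider's endpoint field points at the gRPC socket of a KMS plugin running next to the API server (a unix:// address); it is the plugin that is configured with the regional service it calls (e.g., kms.us-east-1.amazonaws.com for AWS or cloudkms.googleapis.com for GCP).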

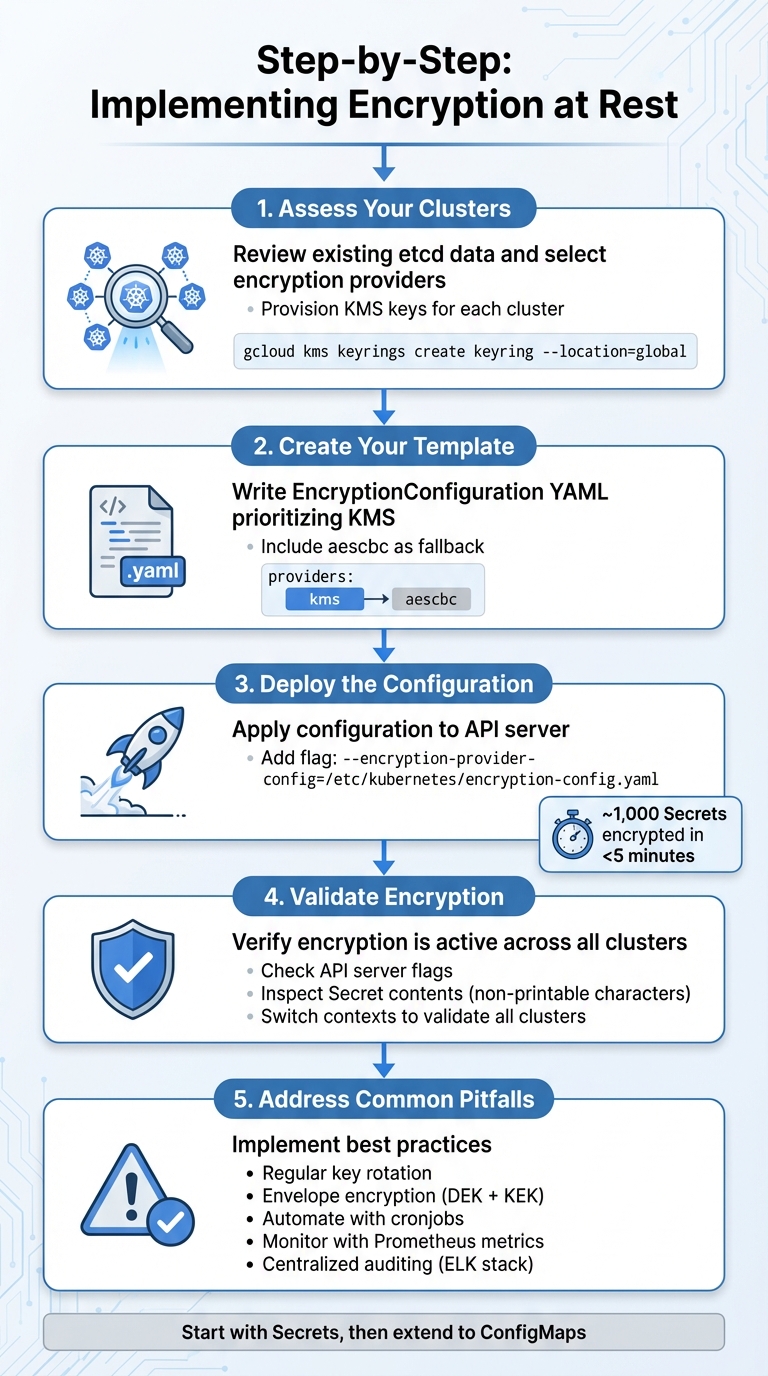

Step-by-Step Implementation Guide

Here’s how to implement encryption at rest across your clusters:

Assess Your Clusters

Start by reviewing existing etcd data and selecting encryption providers that align with your cloud environment and compliance needs. Provision the required KMS keys for each cluster. For example, in GCP, you can create a keyring using:

gcloud kms keyrings create keyring --location=global

Create Your Template

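Write an EncryptionConfiguration YAML file prioritising KMS, with aescbc as a fallback:

    apiVersion: apiserver.config.k8s.io/v1
    kind: EncryptionConfiguration
    resources:
      - resources:
          - secrets
        providers:
          # Listed first, so it is used for all new writes
          - kms:
              apiVersion: v2
              name: my-kms
              # UNIX socket of the local KMS plugin; this path is illustrative
              endpoint: unix:///var/run/kmsplugin/socket.sock
              timeout: 3s
          # Only tried when reading data written before KMS was enabled
          - aescbc:
              keys:
                - name: key1
                  secret: <base64-encoded-key>

Distribute this configuration to every cluster's control-plane nodes, for example through a federated deployment that targets the kube-apiserver hosts.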

Deploy the Configuration

Apply the configuration to each cluster's API server by adding the flag:

--encryption-provider-config=/etc/kubernetes/encryption-config.yaml

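Restart the kube-apiserver so that it loads the configuration. A restart only affects new writes; to re-encrypt existing data, rewrite the stored Secrets, for example with kubectl get secrets --all-namespaces -o json | kubectl replace -f -. Encrypting around 1,000 Secrets typically takes under five minutes [1][2].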
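On clusters where the API server runs as a static Pod, the flag is only useful if the file is also mounted into the container. The fragment below is a sketch that assumes a kubeadm-style layout (`/etc/kubernetes/manifests/kube-apiserver.yaml`); the KMS socket directory is an assumed path and is only needed when a KMS plugin is used.

```yaml
# Fragment of a kube-apiserver static Pod manifest (kubeadm layout assumed).
# Existing fields such as image, probes, and other flags are omitted.
apiVersion: v1
kind: Pod
metadata:
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        - --encryption-provider-config=/etc/kubernetes/encryption-config.yaml
      volumeMounts:
        - name: encryption-config
          mountPath: /etc/kubernetes/encryption-config.yaml
          readOnly: true
        - name: kms-socket                 # only for KMS plugins
          mountPath: /var/run/kmsplugin    # assumed socket directory
  volumes:
    - name: encryption-config
      hostPath:
        path: /etc/kubernetes/encryption-config.yaml
        type: File
    - name: kms-socket
      hostPath:
        path: /var/run/kmsplugin
        type: Directory
```

The kubelet restarts the static Pod when the manifest changes, which also serves as the API server restart.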

Validate Encryption

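Check that encryption is active by inspecting the API server flags. On kubeadm-style clusters, where the API server runs as a static Pod labelled component=kube-apiserver, this can be done with:

kubectl -n kube-system get pods -l component=kube-apiserver -o yaml | grep encryption-provider-config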

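Reading a Secret through the API always returns the decrypted value, so confirm encryption by reading the stored object directly from etcd (for example with etcdctl get /registry/secrets/<namespace>/<name>). An encrypted entry starts with a provider prefix such as k8s:enc:kms:v2: or k8s:enc:aescbc:v1:, followed by non-printable bytes. Then confirm that the API server can still decrypt every Secret:

kubectl get secrets --go-template='{{range .items}}{{.metadata.name}} {{.data}}{{end}}'

For federated clusters, switch contexts to validate each cluster (e.g., kubectl --context=cluster1 get secrets and kubectl --context=cluster2 get secrets). You can also test decryption by deploying a Pod that mounts a Secret and verifying the application can access it.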

Address Common Pitfalls and Best Practices

Be mindful of provider order: the first provider in the list encrypts all new writes, while the remaining providers are only tried when reading, so a misordered list can silently leave new data under the weaker fallback. Rotate keys regularly, and keep the previous key in the configuration until all data has been re-encrypted; otherwise older Secrets can no longer be decrypted. Implement envelope encryption, where each Secret is encrypted with a unique data encryption key (DEK), and the DEK is secured with a key encryption key (KEK) stored in KMS. Automate key rotation with cronjobs and monitor encryption activity via Prometheus, using API server metrics such as apiserver_storage_transformation_operations_total. Centralised auditing tools, such as an ELK stack, can help track encryption events across clusters. Start by encrypting Secrets, and once the process is stable, extend encryption to ConfigMaps to further minimise risks [6].

Federating Secrets and Application-Level Key Usage

Expanding on encryption at rest, managing distributed secrets and application keys helps maintain a secure environment across multiple clusters. The aim is to distribute secret definitions securely, without exposing sensitive data in plaintext or compromising security boundaries between clusters.

Synchronising Secrets in Federated Clusters

FederatedSecrets enable the synchronisation of secret templates across clusters while keeping the actual values encrypted and specific to each cluster. With KubeFed, you define a FederatedSecret that includes the secret's name, type, labels, and required keys - leaving out the actual secret values [6]. Each cluster then generates or retrieves its own secret values and encrypts them using its unique KMS key.

For example, a FederatedSecret template might require a secret (e.g., db-password) with keys like DB_USERNAME and DB_PASSWORD. Each cluster retrieves region-specific credentials from its designated secret backend. A per-cluster controller (such as the external-secrets operator) monitors the template, retrieves the necessary values, and populates the secret locally. This approach is especially useful in regulated environments in the UK - such as financial services or NHS deployments - where per-region or per-tenant key isolation is often mandatory to meet data residency and segregation standards.
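The per-cluster controller step could look like the following ExternalSecret, shown here as a sketch. It assumes the external-secrets operator is installed and that a ClusterSecretStore named `regional-backend` already points at the cluster's own secret backend; the store name, the remote key path, and the namespace are placeholders, and the `apiVersion` depends on the operator release in use.

```yaml
apiVersion: external-secrets.io/v1beta1   # newer operator releases may serve a later version
kind: ExternalSecret
metadata:
  name: db-password
  namespace: prod-shared                  # assumed namespace
spec:
  refreshInterval: 1h                     # re-read the backend hourly
  secretStoreRef:
    kind: ClusterSecretStore
    name: regional-backend                # assumed store, defined per cluster
  target:
    name: db-password                     # Kubernetes Secret to create locally
    creationPolicy: Owner
  data:
    - secretKey: DB_USERNAME
      remoteRef:
        key: prod/uk/db                   # placeholder path in the backend
        property: username
    - secretKey: DB_PASSWORD
      remoteRef:
        key: prod/uk/db
        property: password
```

Because the store is defined separately in each cluster, the same manifest resolves to different, region-specific credentials wherever it runs.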

To implement this, enable federation for Secrets by creating a FederatedTypeConfig for secrets.v1. Define a federated namespace (e.g., prod-shared) and apply the FederatedSecret YAML to the host cluster so KubeFed can distribute it [2][6]. Deploy controllers in each cluster to populate data from the appropriate secret backend. Test the setup by scaling clusters up or down to ensure new clusters receive the template but never plaintext values from other clusters. This synchronisation approach also supports envelope encryption, which is discussed in the next section.
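A value-free FederatedSecret might be sketched as below. The cluster names and label are placeholders. Whether KubeFed leaves locally populated data untouched depends on how its sync controller and the local controller are configured, so this is something to verify in a test environment rather than assume.

```yaml
apiVersion: types.kubefed.io/v1beta1
kind: FederatedSecret
metadata:
  name: db-password
  namespace: prod-shared
spec:
  template:
    metadata:
      labels:
        app.kubernetes.io/part-of: payments   # illustrative label
    type: Opaque
    # No data or stringData: values are expected to be filled in per cluster
  placement:
    clusters:
      - name: cluster1                        # placeholder member cluster names
      - name: cluster2
```

Listing clusters explicitly under `placement` keeps propagation selective; a `clusterSelector` with labels is the alternative when clusters join and leave frequently.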

Implementing Envelope Encryption for Applications

Envelope encryption provides an additional layer of security by using a two-tier key hierarchy. In this setup, a wrapping key (CMK) is defined once in the federated policy and provisioned separately in each cluster's KMS, while local Data Encryption Keys (DEKs) are generated for individual workloads. Applications request a DEK from a local key service, use it to encrypt data, and store the wrapped DEK alongside the ciphertext.

This method limits the impact of a compromised KMS key to a single cluster and supports compliance with UK data residency and segregation requirements.

To ensure application portability across cloud providers, use a key management abstraction layer. This could be a custom gRPC key service or a library that integrates with AWS KMS, Azure Key Vault, and GCP KMS through a unified interface [3]. Use a standard envelope-encryption format - storing {ciphertext, wrapped_DEK, key_id, algorithm, version} - so that any cluster can parse a record and locate the right key. Data encrypted in one cluster can then be decrypted in another only if both follow the same logical key policy and the reading cluster has been granted permission to unwrap the DEK with the physical CMK named in key_id. For authentication, rely on service accounts and workload identity federation instead of embedding API keys [3][9]. Externalise crypto configurations - like key IDs, KMS endpoints, and rotation schedules - into ConfigMaps or Secrets that can be federated at the definition level. Once application keys are secured, the focus shifts to managing ServiceAccount and token-signing keys across clusters.
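To make the envelope record and the externalised configuration concrete, here is a minimal sketch. The record is shown as YAML purely for readability (applications would more likely store it as JSON or a binary header), and the key identifier scheme, ConfigMap name, and setting names are all hypothetical.

```yaml
# Illustrative envelope record; field names follow the format described above,
# and every value is a placeholder.
version: 1
algorithm: AES-256-GCM
key_id: "kms://uk-london/payments/cmk"        # hypothetical identifier scheme
wrapped_DEK: "<base64 DEK, encrypted by the CMK named in key_id>"
ciphertext: "<base64 payload, encrypted by the DEK>"
---
# Crypto settings kept outside the application image (names are assumptions).
apiVersion: v1
kind: ConfigMap
metadata:
  name: crypto-config
  namespace: payments
data:
  KEY_ID: "kms://uk-london/payments/cmk"
  KMS_ENDPOINT: "kms.internal.example.com:443"
  ROTATION_DAYS: "180"
  ENVELOPE_FORMAT_VERSION: "1"
```

Storing `key_id` and `version` with every record is what allows old data to remain readable after a rotation, since the reader can tell which key wrapped the DEK.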

Managing Service Account and Token-Signing Keys

In a federated setup, service accounts require careful configuration to ensure secure and manageable key permissions. Assign unique ServiceAccounts for each application role and namespace, and avoid using the default account in production [9]. Align RBAC configurations across clusters by applying federated RBAC manifests (ClusterRoles and RoleBindings) using KubeFed or GitOps. This ensures that the same logical role has consistent permissions across all clusters [6][7].
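A dedicated ServiceAccount per application role can be sketched like this. The names and namespace are placeholders; the Role simply shows a narrowly scoped permission and would be replaced by whatever the workload actually needs.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: payments-kms-client
  namespace: payments
automountServiceAccountToken: false   # Pods opt in to tokens explicitly
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: read-crypto-config
  namespace: payments
rules:
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["crypto-config"]  # assumed ConfigMap name
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: payments-kms-client-config
  namespace: payments
subjects:
  - kind: ServiceAccount
    name: payments-kms-client
    namespace: payments
roleRef:
  kind: Role
  name: read-crypto-config
  apiGroup: rbac.authorization.k8s.io
```

Applying these manifests unchanged to every cluster, through KubeFed or GitOps, is what keeps the logical role identical across the federation.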

For token-signing keys, use projected service account tokens that are automatically rotated, instead of long-lived tokens based on secrets [9]. Manage the service-account token signing keypair - used by the API server to sign tokens - through a KMS or HSM. Assign one keypair per cluster and avoid sharing private keys between clusters. Implement a 90-day rotation policy for token-signing keys, with dual validation during transitions. Store rotation policies and schedules centrally (e.g., in GitOps or a security runbook) to ensure consistency across clusters, while automation tools handle the actual rotation. For clusters using cloud IAM integrated with OIDC issuers and workload identity federation, ensure OIDC metadata and JWKS endpoints are updated seamlessly so external systems continue to trust the new keys [3][9]. Regular key rotation aligns with UK security standards and NCSC guidance, without creating dependencies between clusters.
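Dual validation during a signing-key transition can be expressed with standard kube-apiserver flags, since `--service-account-key-file` may be repeated. The fragment below is a sketch with file-based keys; the issuer URL and file names are assumptions, and a KMS- or HSM-backed setup would supply the key material differently.

```yaml
# Fragment of the kube-apiserver container spec during a rotation window.
command:
  - kube-apiserver
  - --service-account-issuer=https://oidc.cluster1.example.com            # assumed issuer URL
  # New private key: signs every token issued from now on
  - --service-account-signing-key-file=/etc/kubernetes/pki/sa-new.key
  # Public keys accepted for verification: new and previous
  - --service-account-key-file=/etc/kubernetes/pki/sa-new.pub
  - --service-account-key-file=/etc/kubernetes/pki/sa-previous.pub
```

Once every token signed by the previous key has expired, the second `--service-account-key-file` entry can be removed and the old key retired.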

Operating and Automating Federated Key Lifecycles

Building on the principles of federated key management, managing the lifecycle of keys effectively is crucial for maintaining security across multiple clusters. Once keys are distributed, the challenge lies in keeping them updated, secure, and compliant without causing system downtime. Many security breaches aren't due to a lack of encryption but rather poor key management practices - like failing to rotate keys, granting excessive access rights, or storing keys alongside encrypted data [8]. In a federated environment, relying on manual processes or ad hoc scripts for key rotation quickly becomes impractical and error-prone. This is where automation and centralised policies become indispensable.

Coordinating Key Rotation Across Clusters

Key rotation intervals should align with a centralised policy, considering the type of key and the organisation's regulatory or risk tolerance [8]. For instance, token-signing keys might need rotation every 90 days, while database envelope keys could follow a 180-day schedule. These intervals should be defined in a single source of truth, such as a Git repository, particularly in GitOps-driven environments. Updates to KMS key versions, Kubernetes encryption settings, and secret versions can then be propagated to all clusters via the federation control plane or automation pipelines [2][6].
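A single source of truth for these intervals could be as simple as one file in the Git repository. The schema below is hypothetical; only the 90-day and 180-day values come from the examples above.

```yaml
# key-rotation-policy.yaml (hypothetical file name and schema)
rotationPolicies:
  - keyType: token-signing
    intervalDays: 90
  - keyType: database-envelope
    intervalDays: 180
appliesTo:
  clusterSelector:
    matchLabels:
      federation: enabled   # assumed cluster label
```

Pipelines and controllers then read this file rather than hard-coding intervals, so a policy change is a reviewed commit.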

A multi-phase rotation process is recommended. Start by introducing new keys in a dual-read/single-write mode, update workloads and API servers to work with both old and new keys, and only decommission the old keys after all clusters have successfully re-encrypted data and tokens using the new keys [8]. To ensure a smooth rotation, implement safeguards such as health checks, staged rollouts (e.g., using canary clusters or regions), and automated rollback mechanisms. When retiring old keys, mark them as retiring in the central registry or KMS to prevent new data encryption. Automation should handle cluster-level validation, ensuring Kubernetes API servers have re-encrypted secrets at rest, synchronised federated secrets and ConfigMaps, and confirmed that external systems no longer use tokens tied to retired keys [6]. Once validation is complete, disable the old keys for decryption, archiving them for a defined retention period for audit or recovery purposes. These steps naturally integrate into workflows that automate configuration updates and key transitions.
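For locally configured keys, the dual-read/single-write phase maps directly onto the order of keys in an EncryptionConfiguration. The sketch below uses the aescbc provider with placeholder key values; with a KMS provider, the equivalent step usually happens inside the KMS plugin instead.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            # New key: first in the list, so it encrypts all writes
            - name: key2
              secret: <base64-encoded 32-byte key>
            # Old key: kept only so existing data can still be read
            - name: key1
              secret: <base64-encoded 32-byte key>
```

With several API servers per cluster, the new key is normally added as a second entry everywhere first, so that every server can read it before any server starts writing with it. After all Secrets have been rewritten, `key1` can be removed.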

Automating Key Management Processes

Key references and policies should be stored declaratively in Git, while the actual key material resides securely in a KMS or secret manager [6]. CI/CD pipelines can handle updates to these manifests when new key versions are created, triggering controlled rollouts scoped by cluster labels, regions, or environments (e.g., starting with non-production clusters). To minimise exposure, pipelines must avoid logging key values and should use sealed-secrets, CSI drivers, or workload identities to retrieve secrets at runtime within the cluster rather than during build [3][6].

Event-driven workflows are key to effective automation. For example, creating a new key version in the KMS can trigger a pipeline to update Kubernetes encryption configurations, SecretProviderClass resources, or federated secret definitions. Git commits then drive reconciliation across clusters via GitOps [2][6]. Scheduled workflows, such as cron-based controllers or CI jobs, can enforce periodic rotation and run validation checks to ensure encryption coverage, address expired keys, or fix misaligned configurations across clusters.
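A scheduled validation check could be sketched as a standard CronJob. The image, its `--max-key-age-days` argument, the namespace, and the ServiceAccount are hypothetical; they stand in for whatever checker an organisation builds or adopts.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: key-rotation-check
  namespace: key-ops                     # assumed namespace
spec:
  schedule: "0 2 * * 1"                  # Mondays at 02:00
  concurrencyPolicy: Forbid              # never run two checks at once
  jobTemplate:
    spec:
      backoffLimit: 1
      template:
        spec:
          serviceAccountName: key-rotation-checker   # least-privilege identity
          restartPolicy: Never
          containers:
            - name: check
              image: registry.example.com/key-rotation-check:1.0.0   # hypothetical image
              args: ["--max-key-age-days=90"]                        # hypothetical flag
```

Running the same job in every member cluster gives a uniform signal that can be compared centrally.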

Auditing and Monitoring Federated Keys

Automation alone isn't enough; rigorous auditing and monitoring are critical for compliance and anomaly detection. Metrics such as key usage counts, decryption failures, authentication/authorisation failures, and token issuance/validation rates should be collected for each cluster and key ID [6]. Logs from the KMS, Kubernetes API servers, ingress controllers, and service meshes should be aggregated centrally to monitor which users or systems access which keys, from where, and at what times. This approach helps identify unusual patterns, such as spikes in access or cross-region usage, which could signal potential misuse. Alerts should flag issues like repeated decryption failures (indicating configuration drift or misuse of retired keys), unexpected access attempts, or incomplete rotation processes within defined SLAs.
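An alert on repeated decryption failures might look like the Prometheus rule below. It is a sketch under several assumptions: that the API server exposes `apiserver_storage_transformation_operations_total` with `transformation_type` and `status` labels in the Kubernetes version in use, and that a `cluster` label is attached to each cluster's metrics by the scrape or federation setup. Label names and values are worth checking against a live `/metrics` endpoint before relying on it.

```yaml
groups:
  - name: federated-key-alerts
    rules:
      - alert: SecretDecryptionFailures
        # Failed reads from storage suggest drift or use of a retired key
        expr: |
          sum by (cluster) (
            rate(apiserver_storage_transformation_operations_total{transformation_type="from_storage", status!="OK"}[10m])
          ) > 0
        for: 15m
        labels:
          severity: critical
        annotations:
          summary: "Secret decryption is failing on {{ $labels.cluster }}"
```

The `for: 15m` clause avoids paging on a brief blip during a planned rotation while still catching sustained failures.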

In the UK, federated key management must comply with UK GDPR requirements for data protection by design and default. This includes robust encryption, strict access controls, and demonstrable lifecycle management of keys for personal data [8]. Regulated sectors, such as financial services, must also adhere to FCA and PRA guidelines on operational resilience, which stress strong identity management, data sovereignty, and evidence of control over third-party services like cloud KMS providers [8]. A UK-specific checklist should include:

- Documented data classification and mapping of data types across clusters.

- An inventory of all keys, their purposes, and associated clusters.

- Defined and implemented rotation policies, with evidence of past rotations.

- Confirmation that encryption-at-rest and encryption-in-transit are enabled and tested across all clusters.

- RBAC and least-privilege principles for key administration and usage.

- Centralised logging of all key access and lifecycle events, with retention aligned to legal and business needs.

- Regular access reviews for identities managing or using keys.

- Tested incident response plans for suspected key compromises.

- Evidence of third-party risk assessments for external KMS or cloud services [6][8].

This checklist should be part of internal audit cycles and, for regulated organisations, mapped to frameworks like UK GDPR or FCA requirements to streamline regulatory reporting.

For organisations seeking to balance compliance with efficiency, working with specialists can be beneficial. For instance, Hokstad Consulting offers expertise in DevOps transformation, cloud infrastructure, and custom automation, helping teams implement CI/CD pipelines, Infrastructure as Code, and monitoring solutions that reduce manual errors and bottlenecks.

Conclusion

Federated key management strengthens the security of multi-cluster Kubernetes environments by bringing encryption keys, secrets, and policies under a single, centralised system. This eliminates inconsistencies and potential vulnerabilities. For UK organisations subject to regulations like the UK GDPR and the Data Protection Act 2018, this approach provides the added benefit of unified auditing and access controls across distributed systems, ensuring compliance.

By establishing trust domains with tools like mutual TLS, envelope encryption, and strict role-based access control (RBAC), organisations can build a strong security framework. Selective federation further enhances security by limiting the propagation of critical resources - such as deployments and secrets - across clusters, effectively reducing the attack surface. In fact, businesses adopting federated setups have reported operational overhead reductions of 20–30% thanks to automation of management tasks [6].

The benefits go beyond cost savings. Automating processes like key rotation, secret synchronisation, and failover mechanisms not only streamlines operations but also minimises the risk of human error. Considering that downtime can cost as much as £8,600 per minute, federated policies that allow for quick workload distribution and recovery provide a competitive edge [6]. Tools like Prometheus and ELK stacks enhance these systems by offering real-time monitoring, anomaly detection, and audit trail maintenance - key for meeting regulatory requirements. Together, these tools and practices pave the way for practical and efficient deployments.

A good starting point for implementing federated key management is a proof-of-concept with two or three clusters. Using tools like KubeFed Helm charts, teams can safely test scenarios such as key rotation and failover. During these tests, it’s essential to prioritise RBAC configurations, verify TLS certificates, and ensure dashboards display metrics in localised formats like gigabytes and DD/MM/YYYY [6][4]. Once initial trials are successful, expert guidance can help scale deployments securely and efficiently.

Hokstad Consulting provides valuable expertise in DevOps transformation, cloud infrastructure optimisation, and custom automation. Their guidance can help organisations streamline secure multi-cluster deployments while keeping cloud costs under control.

FAQs

How does federated key management support UK GDPR compliance in multi-cluster Kubernetes setups?

Federated key management enables organisations to align with UK GDPR standards by managing encryption keys centrally across multiple Kubernetes clusters. This approach not only secures access but also streamlines the management of keys and enhances audit processes, helping to minimise the chances of data breaches.

By enforcing uniform security policies and offering comprehensive visibility into key usage, federated key management safeguards sensitive information while ensuring adherence to strict data protection laws.

Why is workload identity federation better than using static credentials in Kubernetes?

Workload identity federation strengthens security by eliminating the reliance on static credentials, which are often at risk of being exposed or misused. Instead, it enables dynamic and precise access control, ensuring permissions are granted only when necessary and strictly to the appropriate workloads.

This method not only streamlines the management of credentials but also cuts down on administrative tasks. It works smoothly with identity providers, making it an efficient solution. In Kubernetes environments, federation enhances security, supports scalability, and aligns with current security standards.

How can organisations maintain consistent encryption standards across multiple cloud providers using federated key management?

To ensure consistent encryption standards across various cloud providers, organisations can turn to federated key management. This method uses a centralised system to handle encryption keys, allowing uniform policies, protocols, and lifecycle management across different cloud environments.

By doing so, organisations can strengthen their security, meet regulatory requirements, and reduce the chances of misconfigurations or inconsistencies in multi-cloud setups. Federated key management streamlines encryption oversight, making it more straightforward to safeguard sensitive information across multiple platforms.