CI/CD pipelines are essential for modern software development, but they consume more energy than you might think. Frequent runs, inefficient configurations, and idle resources can waste energy and inflate costs. Here’s why this matters and what you can do:

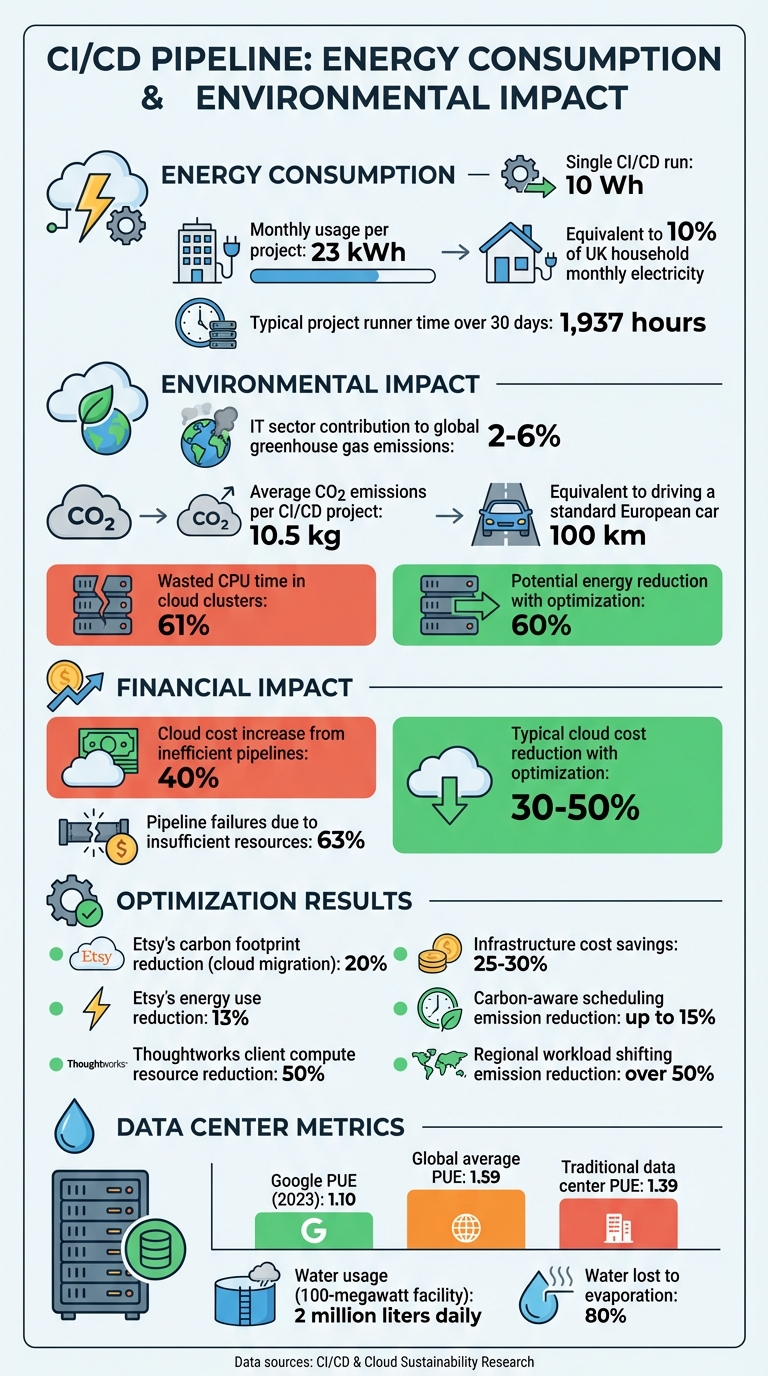

- Energy Use Adds Up: A single CI/CD run uses about 10 Wh, but frequent usage can total 23 kWh per month per project - 10% of a UK household's monthly electricity.

- Environmental Impact: The IT sector contributes 2–6% of global greenhouse gas emissions, comparable to aviation. Much of the underlying compute is wasted: around 61% of requested CPU time in cloud clusters goes unused.

- Financial Costs: Inefficient pipelines can increase cloud expenses by 40%, with oversized VMs, redundant builds, and failed runs being key culprits.

To reduce energy use, focus on optimising pipeline configurations, using lightweight container images, caching dependencies, and scheduling tasks during low-carbon energy periods. Tools like Carbon Aware SDK and Cloud Carbon Footprint can help track and minimise emissions.

Saving energy in CI/CD pipelines isn’t just about cutting costs - it’s a step towards reducing your carbon footprint and meeting upcoming UK carbon reporting requirements in April 2026.

::: @figure  {Energy Consumption and Environmental Impact of CI/CD Pipelines}

:::


How CI/CD Pipelines Consume Energy

What Drives Energy Usage in CI/CD

CI/CD pipelines draw energy from frequent triggers, such as running on every commit. Over time, this builds up quickly, with the total energy consumption reaching around 22 kWh per project [6].

One major culprit is inefficient container images. For instance, using a bulky Python image (352 MB) instead of a slim version (48 MB) increases energy use during transfers and decompression [8]. Similarly, redundant build steps, like rebuilding entire environments for minor changes, unnecessarily repeat energy-intensive tasks.

Another source of waste is idle resources. Build servers that run 24/7 but only handle tasks during business hours consume power without contributing any value. Poor resource allocation also plays a role - oversized virtual machines (VMs) used for simple tasks lead to underutilisation [3][4].

Testing phases are another energy drain. Lengthy integration tests often run for minor updates, like README changes, wasting significant compute power [1]. Running full test suites for trivial updates not only slows performance but also increases cloud costs.

How Resource Waste Increases Cloud Costs

The inefficiencies mentioned above don’t just waste energy - they also inflate cloud bills. Organisations that fail to optimise their cloud resources often overspend by as much as 40% [4]. This overspending is tied to oversized instances, redundant builds, and ineffective caching strategies.

Pipeline failures compound the issue. About 63% of pipeline failures occur due to insufficient resources [9], leading to expensive retries and manual fixes. Each failed run wastes the energy already spent and requires even more energy to rerun.

John Stocks, Head of Solution Engineering at Depot, highlights another hidden cost:

Lost productivity is a cost too... if a pipeline takes more than 5 or 6 minutes, it's not uncommon for an engineer to context switch a bunch and lose 20 minutes to socialising, snacking, walking the dog etc.[9]

Storage and artefact bloat also add to the problem. Without proper retention policies, organisations accumulate thousands of outdated container images in registries, which increases storage costs and the energy demands of data centres [8]. On top of that, moving large artefacts across regions without effective caching drives up network bandwidth expenses.


Environmental Impact of CI/CD Pipelines

The environmental consequences of CI/CD pipelines extend beyond financial costs, highlighting the importance of streamlining operations to reduce their impact.

Carbon Emissions from CI/CD Workflows

Every time a CI/CD pipeline runs, it contributes to CO2 emissions. The ICT sector is responsible for 2–6% of global greenhouse gas emissions [4][2], placing it on par with the aviation industry [4]. Automated workflows add to this total due to the electricity consumed by data centre servers during build, test, and deployment processes.

On average, a CI/CD project generates 10.5 kg of CO2 emissions, which is comparable to driving a standard European car for 100 kilometres [6]. While a single pipeline run might seem negligible, consuming roughly 10 Wh, frequent executions quickly compound the environmental toll.

What exacerbates this issue is the amount of wasted energy. Studies reveal that 61% of requested CPU time in cloud clusters goes unused [2], meaning a significant portion of emissions from CI/CD pipelines serves no productive purpose. Efficiency improvements, like running only affected tests, can slash CI energy usage by as much as 60% [2].
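As a rough illustration of "running only affected tests", the sketch below picks test files whose declared dependencies overlap with the files changed in a commit. The `TEST_DEPENDENCIES` mapping and all file names are hypothetical; real projects usually derive this mapping from build-graph or coverage data rather than maintaining it by hand.

```python
# Hypothetical mapping from each test file to the source files it exercises.
TEST_DEPENDENCIES = {
    "tests/test_auth.py": {"src/auth.py", "src/db.py"},
    "tests/test_billing.py": {"src/billing.py", "src/db.py"},
    "tests/test_ui.py": {"src/ui.py"},
}


def select_tests(changed_files, test_dependencies=TEST_DEPENDENCIES):
    """Return the tests whose dependencies include at least one changed file."""
    changed = set(changed_files)
    return sorted(
        test for test, deps in test_dependencies.items() if deps & changed
    )


if __name__ == "__main__":
    # A change to the UI module only needs the UI tests.
    print(select_tests(["src/ui.py"]))
    # A change to the shared database module affects two test files.
    print(select_tests(["src/db.py"]))
```

The trade-off is that an incomplete dependency mapping can skip a test that should have run, so teams often pair selective runs on branches with a full suite on the main branch.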

Moreover, the environmental cost of server manufacturing is often overlooked [2]. AI workloads add further pressure: training a single large language model - a growing trend in AI-powered CI/CD workflows - can produce CO2 emissions equivalent to flying a passenger aircraft over one million kilometres [2].

Next, let’s examine how the geographical location of CI/CD pipelines influences their environmental footprint.

How Location Affects Environmental Impact

The location of your CI/CD pipelines plays a crucial role in determining their environmental impact. This is largely influenced by the carbon intensity of the local electricity grid, which measures the CO2 emissions per unit of electricity consumed. Identical energy usage in different regions can result in vastly different carbon outputs.
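The relationship described above is a simple product: emissions equal energy used multiplied by grid carbon intensity. The sketch below applies it to the 23 kWh monthly figure quoted in this article. The three intensity values are hypothetical placeholders chosen only to show the spread, not measurements for any real region.

```python
def emissions_grams(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """CO2 emissions in grams = energy (kWh) x carbon intensity (gCO2/kWh)."""
    return energy_kwh * intensity_g_per_kwh


# Hypothetical grid intensities in gCO2/kWh (illustrative values only).
EXAMPLE_INTENSITIES = {
    "low-carbon grid (hypothetical)": 50.0,
    "mixed grid (hypothetical)": 250.0,
    "coal-heavy grid (hypothetical)": 700.0,
}

MONTHLY_ENERGY_KWH = 23.0  # monthly CI energy figure quoted in this article

if __name__ == "__main__":
    for region, intensity in EXAMPLE_INTENSITIES.items():
        kilograms = emissions_grams(MONTHLY_ENERGY_KWH, intensity) / 1000
        print(f"{region}: {kilograms:.2f} kg CO2")
```

With these placeholder values, the same workload emits 14 times more CO2 on the coal-heavy grid than on the low-carbon one, which is why region choice can matter as much as pipeline tuning.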

Regions that rely heavily on nuclear or hydropower, like France and Sweden, provide significantly cleaner energy compared to areas dependent on coal. For instance, Switzerland’s reliance on hydropower makes it far cleaner than coal-heavy regions. Facility efficiency matters too: between 2018 and 2019, Etsy cut its carbon footprint by 20% by moving from traditional data centres to the cloud, benefiting from a better Power Usage Effectiveness (PUE) ratio, improving from 1.39 to 1.10 [11].

Colder climates also offer an advantage by reducing the energy needed for cooling, which lowers overall emissions [5]. In 2023, Google reported a PUE of 1.10 for its data centres, far better than the global average of 1.59 [11][5]. Shifting compute-intensive CI/CD tasks to regions with cleaner grids can significantly reduce emissions, though this must be balanced against potential increases in latency [11].
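PUE is the ratio of total facility energy to the energy used by the IT equipment itself, so the PUE figures above translate directly into facility-level energy. The sketch below is plain arithmetic on the values quoted in this article. It assumes the IT load stays the same when the PUE changes, which is a simplification.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy = IT equipment energy x PUE (PUE is always >= 1)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def saving_fraction(pue_before: float, pue_after: float) -> float:
    """Fraction of facility energy saved for the same IT load at a lower PUE."""
    return 1.0 - pue_after / pue_before


if __name__ == "__main__":
    it_load_kwh = 100.0  # assumed IT load, for illustration only
    for label, pue in [("global average", 1.59), ("Google 2023", 1.10)]:
        total = facility_energy_kwh(it_load_kwh, pue)
        print(f"{label}: {total:.0f} kWh total")
    print(f"saving: {saving_fraction(1.59, 1.10):.1%}")
```

For the same IT load, moving from a PUE of 1.59 to 1.10 cuts facility energy by roughly 31%, before any difference in grid carbon intensity is taken into account.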

Beyond carbon emissions, water usage is another critical factor. Data centres consume enormous volumes of water for cooling, with a typical 100-megawatt facility using around 2 million litres daily [12]. Of this, approximately 80% is lost to evaporation [13]. By optimising CI/CD pipelines to minimise compute time, organisations can reduce the heat generated by servers, thereby lowering the water required for cooling [1][13].


Methods for Reducing Energy Use in CI/CD Pipelines

Cutting down energy consumption in CI/CD pipelines involves making deliberate adjustments to configurations and scheduling. These changes can help reduce carbon emissions while also lowering cloud costs - a win-win for both the environment and your budget.

Improving Pipeline Configurations

One effective way to save energy is by preventing unnecessary pipeline runs. Tools like GitHub Actions allow you to apply path filters so that only specific file changes trigger builds. Additionally, features like automatic cancellation of outdated runs (e.g., GitHub’s in-progress run cancellations or GitLab’s interruptible jobs) ensure that resources aren’t wasted on pipelines that are no longer relevant.
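The logic behind a path filter can be sketched in a few lines: skip the pipeline when every changed file matches an ignore pattern. This is an illustration of the idea, not how GitHub Actions or GitLab implement it. The patterns and file names are hypothetical, and Python's `fnmatchcase` lets `*` match across directory separators, which differs from the glob syntax used by CI platforms.

```python
from fnmatch import fnmatchcase

# Hypothetical ignore patterns: documentation-only changes should not trigger CI.
IGNORED_PATTERNS = ["*.md", "docs/*", "LICENSE"]


def should_run_pipeline(changed_files, ignored_patterns=IGNORED_PATTERNS):
    """Return True if at least one changed file is not covered by an ignore pattern."""
    return any(
        not any(fnmatchcase(path, pattern) for pattern in ignored_patterns)
        for path in changed_files
    )


if __name__ == "__main__":
    print(should_run_pipeline(["README.md"]))                # docs only: skip
    print(should_run_pipeline(["README.md", "src/app.py"]))  # code changed: run
```

In practice the same rule is normally declared in the CI platform's own configuration, so no runner has to start just to decide that nothing needs building.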

Another key step is to reuse cached dependencies. This avoids the energy-heavy process of re-downloading or rebuilding components. When working with Docker, you can optimise images using slim base images (like debian-slim) and multi-stage builds to reduce the energy needed for downloads.
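Dependency caches are usually keyed on a hash of the lockfile, so the cache is reused until the dependencies actually change. The sketch below shows that idea with an in-memory dictionary standing in for the cache store. The function names, the key prefix, and the `install` callback are hypothetical and do not correspond to any CI platform's API.

```python
import hashlib


def cache_key(prefix: str, lockfile_bytes: bytes) -> str:
    """Build a cache key that changes only when the lockfile content changes."""
    digest = hashlib.sha256(lockfile_bytes).hexdigest()[:16]
    return f"{prefix}-{digest}"


def restore_or_install(key, cache, install):
    """Return (dependencies, cache_hit). Runs install() only on a cache miss."""
    if key in cache:
        return cache[key], True
    result = install()
    cache[key] = result
    return result, False


if __name__ == "__main__":
    cache = {}  # stand-in for a real cache store
    key = cache_key("deps", b"left-pad==1.0.0\n")
    print(restore_or_install(key, cache, lambda: "installed packages"))
    print(restore_or_install(key, cache, lambda: "installed packages"))
```

The second call returns a cache hit, so the download and build work is skipped until the lockfile bytes change and produce a new key.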

A fail-fast strategy can also make a big difference. Running quick checks such as linting early in the process catches problems before slower, more expensive stages start. Setting conservative timeouts (instead of relying on the default six-hour job limit) also stops misbehaving jobs from hogging resources. The scale involved is easy to underestimate: a study by Matt Graham at University College London in November 2023 found that GitHub Actions consumed 1,937 hours of runner time over 30 days, equating to 23 kWh. That’s approximately 10% of the monthly electricity usage of an average UK household [1].
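The study figures quoted above can be cross-checked with simple arithmetic. The sketch below derives the average power per runner implied by 1,937 runner-hours and 23 kWh, and the household consumption implied by the 10% comparison. These are back-calculations from the quoted numbers only; the study's own estimation method may differ.

```python
RUNNER_HOURS = 1937      # runner time over 30 days, as quoted
ENERGY_KWH = 23.0        # estimated energy for that runner time, as quoted
HOUSEHOLD_SHARE = 0.10   # "approximately 10%" of a UK household's monthly use


def implied_average_power_watts(energy_kwh: float, hours: float) -> float:
    """Average power draw per runner-hour implied by the totals."""
    return energy_kwh * 1000 / hours


def implied_household_kwh(energy_kwh: float, share: float) -> float:
    """Monthly household consumption implied by the quoted share."""
    return energy_kwh / share


if __name__ == "__main__":
    watts = implied_average_power_watts(ENERGY_KWH, RUNNER_HOURS)
    household = implied_household_kwh(ENERGY_KWH, HOUSEHOLD_SHARE)
    print(f"Implied average power: {watts:.1f} W per runner")
    print(f"Implied household use: {household:.0f} kWh per month")
```

The implied draw of roughly 12 W per runner is small on its own, which is the point of the comparison: the total comes from the number of runner-hours, so cutting unnecessary runs is where the savings are.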

Finally, consider scheduling tasks to align with times when greener energy is available.

Using Carbon-Aware Scheduling

Carbon-aware scheduling focuses on running energy-intensive tasks during periods when the grid relies on cleaner energy sources. The Green Software Foundation’s Carbon Aware SDK provides a standardised way to access carbon intensity data, helping automate these scheduling decisions [14].

Carbon aware software does more when it can leverage greener energy sources, and less when the energy CO2 emissions are higher. – Green Software Foundation [14]

For non-urgent tasks, shifting workloads to low-carbon periods can reduce emissions by up to 15% [14]. For instance, replacing daily builds with weekly ones or using tools like the Grid Intensity CLI to align tasks with greener energy periods can yield significant benefits [15]. Additionally, moving workloads to regions with cleaner energy can cut emissions by over 50% [14]. The Carbon Aware SDK simplifies this process by standardising data from providers like WattTime and ElectricityMaps into a single gCO2/kWh metric, making integration straightforward and flexible.
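At its core, time-shifting means picking the window in a carbon intensity forecast with the lowest average value. The sketch below does this with a sliding window over a list of hourly values in gCO2/kWh. The forecast numbers are hypothetical, and the code does not call the Carbon Aware SDK or the Grid Intensity CLI; in a real setup one of those tools would supply the forecast.

```python
def best_start_index(forecast, duration_slots):
    """Return the start index of the contiguous window with the lowest total intensity."""
    if duration_slots <= 0 or duration_slots > len(forecast):
        raise ValueError("duration must be between 1 and the forecast length")
    window_sum = sum(forecast[:duration_slots])
    best_sum, best_start = window_sum, 0
    for start in range(1, len(forecast) - duration_slots + 1):
        # Slide the window: add the slot entering it, drop the slot leaving it.
        window_sum += forecast[start + duration_slots - 1] - forecast[start - 1]
        if window_sum < best_sum:
            best_sum, best_start = window_sum, start
    return best_start


if __name__ == "__main__":
    # Hypothetical hourly forecast in gCO2/kWh, starting from the current hour.
    hourly_forecast = [300, 280, 150, 120, 200, 310]
    start = best_start_index(hourly_forecast, duration_slots=2)
    print(f"Start the 2-hour job in {start} hour(s)")
```

This only suits jobs that can tolerate a delay, such as nightly builds or large batch test runs; anything blocking a developer should still run immediately.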

Once scheduling is optimised, it’s important to track and monitor pipeline performance to ensure these adjustments are effective.

Implementing Energy Monitoring Tools

Energy monitoring tools are invaluable for understanding energy consumption patterns and making data-driven improvements.

In March 2025, the BBC R&D team used LECCIE to analyse energy usage in AWS’s eu-west-2 region, enabling them to fine-tune their CI/CD processes [16]. For organisations operating across multiple cloud platforms, tools like Cloud Carbon Footprint provide a baseline for tracking emissions [10]. The Carbon Aware SDK further helps standardise emissions data across providers, while the Grid Intensity CLI identifies the best times to run energy-heavy tasks [14][15]. Additionally, tools like Scaphandre offer real-time monitoring at the server and application levels, which can be integrated with platforms such as Prometheus and Grafana for long-term analysis [7].

| Tool | Primary Function | Best Use Case |
|---|---|---|
| LECCIE (BBC) | Cloud energy benchmarking | UK-based AWS multi-account environments [16] |
| Carbon Aware SDK | Emission measurement & standardisation | Standardising data across multiple providers [14] |
| Grid Intensity CLI | Carbon-aware scheduling | Deciding when to run energy-intensive builds [15] |
| Cloud Carbon Footprint | Multi-cloud carbon/cost monitoring | Setting an emissions baseline for IT organisations [10] |

How Hokstad Consulting Improves Energy Efficiency

Hokstad Consulting takes energy-saving strategies to the next level by weaving them directly into CI/CD pipelines. Their unique blend of DevOps transformation and cloud cost engineering helps businesses build pipelines that consume less energy without sacrificing performance. The results? A typical reduction in cloud costs by 30–50% and a noticeable decrease in carbon emissions.

Custom Energy-Aware CI/CD Solutions

Hokstad Consulting designs pipeline configurations that cut down on wasted compute power. One standout feature they implement is the concurrency.cancel-in-progress property, which automatically stops older job runs when new commits are pushed. This avoids wasting energy on outdated builds.
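The effect of cancelling superseded runs can be modelled as keeping only the newest run in each concurrency group. The sketch below simulates that rule. The `Run` type and group names are hypothetical, and this is a model of the behaviour rather than the CI platform's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    run_id: int
    group: str  # hypothetical concurrency group, e.g. workflow name plus branch


def runs_to_cancel(runs_in_trigger_order):
    """Return the IDs of runs superseded by a later run in the same group."""
    latest_by_group = {}
    for run in runs_in_trigger_order:
        latest_by_group[run.group] = run.run_id  # later runs overwrite earlier ones
    return [
        run.run_id
        for run in runs_in_trigger_order
        if latest_by_group[run.group] != run.run_id
    ]


if __name__ == "__main__":
    runs = [Run(1, "ci-feature-x"), Run(2, "ci-main"), Run(3, "ci-feature-x")]
    print(runs_to_cancel(runs))  # run 1 was superseded by run 3
```

Grouping by branch matters here: a new push to one branch should cancel that branch's stale run without touching runs on other branches.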

They also fine-tune path-based filters, ensuring CI jobs only trigger when relevant source files are updated. For example, changes to documentation or README files won’t unnecessarily spin up testing environments. To further support energy efficiency, they optimise dependency caching, for example by caching npm packages and Docker layers. This avoids the repetitive and energy-heavy process of re-downloading or rebuilding dependencies for every pipeline run, aligning with green DevOps practices.

Cutting Costs While Supporting Environmental Goals

Hokstad Consulting’s cloud cost engineering services tackle both financial and environmental challenges. By right-sizing infrastructure, they align CPU, memory, and storage with actual workload needs, eliminating over-provisioning. They also select high-efficiency hardware, such as AWS Graviton ARM processors, and use Spot Instances for non-critical, fault-tolerant tasks. Serverless solutions like AWS Fargate or Lambda are another key strategy, ensuring compute resources are only used when necessary, rather than running continuously.

Adapting to Public, Private, and Hybrid Clouds

No matter the setup - AWS, Azure, private data centres, or hybrid environments - Hokstad Consulting tailors their energy efficiency strategies to fit. They embed sustainability metrics directly into DevOps workflows using tools like Scaphandre or Carbon Tracker, delivering real-time insights into energy usage. Their expertise includes optimising pipelines with features like automatic redundant-job cancellation, file-change filters, and smart build scheduling. These tailored approaches ensure energy efficiency improvements are seamlessly integrated across all types of cloud environments.

Conclusion

Improving energy efficiency in CI/CD pipelines isn't just good for the planet - it also directly reduces costs. Organisations that fail to optimise their public cloud usage often squander valuable resources, while cutting energy waste can shrink both cloud bills and carbon footprints. This dual benefit highlights the importance of adopting energy-efficient practices in CI/CD workflows.

It's not just about savings, though. Around 70% of employees prefer to work for companies with strong environmental commitments [4], and with the UK set to introduce mandatory carbon reporting from April 2026 [2], the pressure to act sustainably is mounting. As Thoughtworks aptly puts it:

The best carbon emissions are those that never happened [5].

However, many organisations face challenges in balancing green practices with rapid delivery. Lacking in-house GreenOps expertise, they often turn to professional consultants to help establish emissions baselines, implement carbon-conscious architectures, and pinpoint the most impactful areas for optimisation [4][10]. The benefits are clear: Etsy managed to cut energy use by 13% during its cloud migration while continuing to grow [4], and Thoughtworks enabled a news organisation to reduce compute resources by 50%, saving 25–30% on infrastructure costs [4].

Hokstad Consulting offers a compelling example of how technical efficiency can align with environmental goals. Their expertise in designing energy-conscious CI/CD pipelines has consistently delivered results, including reducing cloud costs by 30–50% and lowering carbon emissions - all without sacrificing performance.

Adopting energy-aware pipelines is a smart move for any organisation looking to cut costs, minimise emissions, and improve operational efficiency. For those ready to take the next step, partnering with experts who understand both the technical and environmental aspects of cloud infrastructure can make all the difference.

FAQs

How does optimising CI/CD pipelines help reduce energy usage?

Optimising CI/CD pipelines is a practical way to cut down on energy consumption. This can be achieved by removing unnecessary builds, cancelling idle jobs, and pausing non-essential resources when they're not actively needed. These tweaks ensure computing power is used only when it's absolutely required, avoiding waste.

Efficiently scheduling lightweight container workloads also plays a key role. By reducing the number of compute-hours needed, businesses can minimise their energy footprint. These streamlined processes not only help save money but also align with efforts to meet sustainability targets.

How can I monitor and reduce the carbon footprint of my CI/CD pipelines?

Monitoring and cutting down the carbon footprint of CI/CD pipelines is a crucial step towards meeting sustainability targets. Thankfully, there are tools designed to help you track and manage emissions effectively:

- Cloud Carbon Footprint: This open-source platform offers insights into the carbon emissions tied to your cloud usage, helping you understand where changes can be made.

- Kepler with Prometheus and Grafana: Kepler measures energy consumption, Prometheus gathers the relevant metrics, and Grafana turns that data into clear visualisations, making it easier to pinpoint areas for improvement.

- AWS Sustainability Scanner: A cloud-native tool that analyses your AWS workloads and provides recommendations to lower carbon emissions.

Incorporating these tools into your CI/CD processes allows you to make smarter, greener choices without compromising on performance.

How does the location of data centres impact the environmental footprint of CI/CD workflows?

The location of a data centre plays a big role in the environmental impact of CI/CD workflows, largely due to variations in regional electricity grids and climate conditions. Some electricity grids rely more on renewable energy, while others depend heavily on fossil fuels. This means the carbon footprint of running the same workload can vary significantly from one region to another.

Climate also matters. Cooler regions naturally make it easier and less energy-intensive to cool data centres, which improves their overall power usage effectiveness (PUE). By factoring in these regional differences, organisations can lower the emissions tied to their CI/CD activities, achieving a balance between reducing costs and supporting sustainability efforts.