End-to-end encryption (E2EE) keeps your data protected throughout its journey between microservices: it is encrypted at the source and decrypted only by the intended recipient. Unlike standard TLS, which often terminates at a load balancer, E2EE keeps traffic encrypted all the way to the destination service, guarding against interception even within internal networks.

Here’s why it matters:

- Security: Protects against man-in-the-middle attacks and makes lateral movement within your system harder.

- Compliance: Helps meet the encryption requirements of UK GDPR, PCI DSS, and NIS2, reducing the risk of data breaches.

- Zero Trust: Assumes all network components are untrusted, requiring strict authentication and encryption between services.

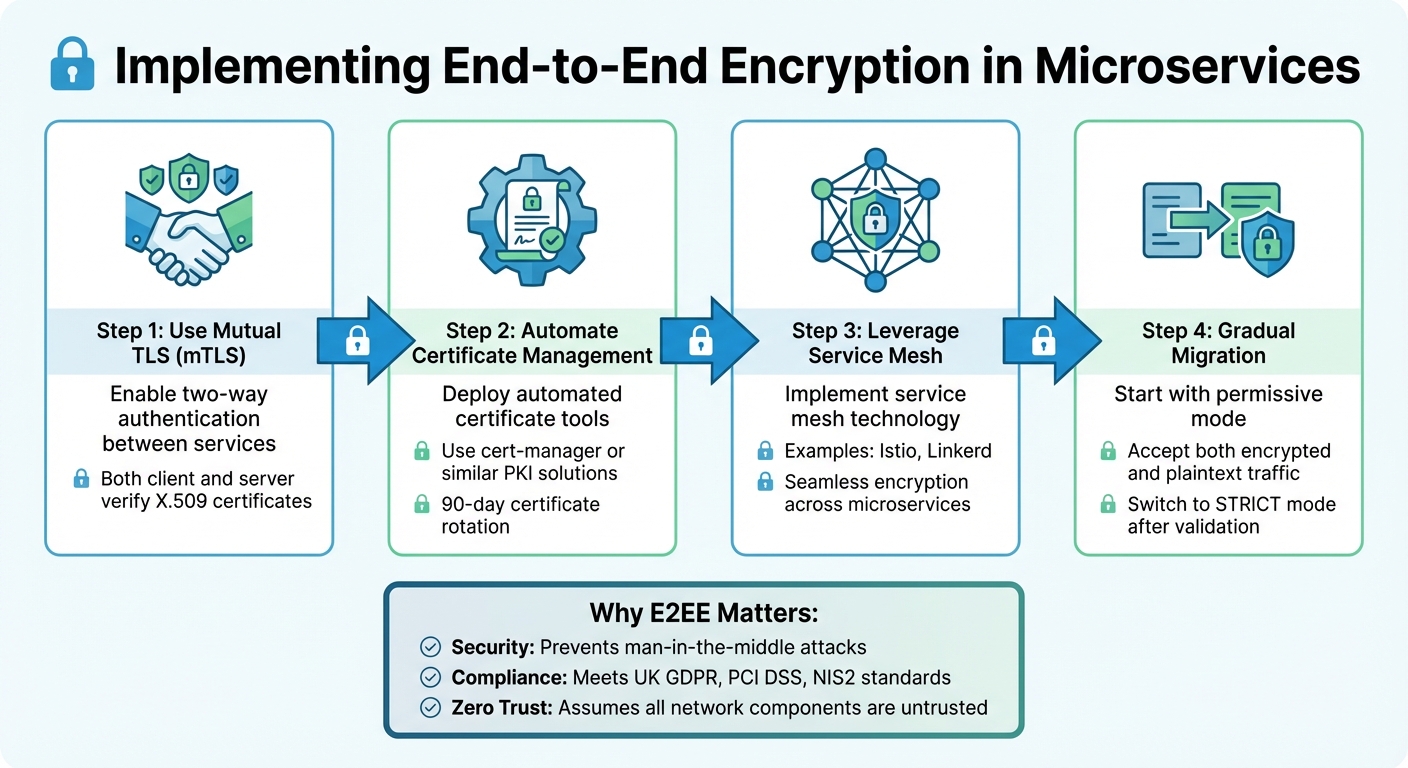

To implement E2EE:

- Use mutual TLS (mTLS) for two-way authentication.

- Automate certificate management with tools like cert-manager.

- Leverage service meshes (e.g., Istio) for seamless encryption across microservices.

- Start with permissive encryption modes during migration to avoid service disruptions.

This approach not only secures sensitive information but also aligns with modern best practices for microservices communication.

Figure: 4-Step Implementation Guide for End-to-End Encryption in Microservices


Cryptographic Foundations and Core Concepts

Implementing end-to-end encryption (E2EE) requires a strong grasp of cryptographic principles and a design that anticipates threats from both external and internal sources.

Threats and Trust Boundaries

In a microservices environment, the network is treated as inherently untrustworthy, adopting a zero-trust approach where every request must be authenticated and authorised [1]. Traditional defences like firewalls, VPNs, and private subnets are no longer sufficient, especially as services communicate across cloud regions, data centres, or hybrid setups.

"The goals of Istio security are: Security by default: no changes needed to application code and infrastructure; Defence in depth: integrate with existing security systems to provide multiple layers of defence; Zero-trust network: build security solutions on distrusted networks." – Istio Documentation [1]

Attackers often exploit vulnerabilities such as DNS spoofing, where DNS responses are manipulated to redirect traffic, or BGP hijacking, which compromises routing tables to intercept communications. However, mechanisms like secure naming protect against impersonation by linking a server's cryptographic identity (from its certificate) to the specific service it is authorised to run [1]. This ensures clients reject connections from services presenting invalid credentials, even if traffic is rerouted.
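The secure naming check described above can be sketched as a simple lookup. This is an illustrative model only, not how Istio implements it: the `SECURE_NAMING` table, the service hostname, and the function name are all hypothetical.

```python
# Hypothetical secure-naming table: service name -> identities allowed to run it.
# In a mesh this mapping is maintained by the control plane, not hard-coded.
SECURE_NAMING = {
    "payments.prod.svc.cluster.local": {
        "spiffe://example.com/ns/production/sa/payment-service",
    },
}


def is_authorised_server(service_name: str, presented_identity: str) -> bool:
    """Return True only if the identity taken from the server certificate
    is authorised to run the service the client intended to reach."""
    allowed = SECURE_NAMING.get(service_name, set())
    return presented_identity in allowed
```

Because the decision depends on the certificate identity rather than on where the traffic ended up, a rerouted connection to a server holding a different identity is rejected.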

Another serious threat is API gateway bypass, where attackers try to access internal services directly, bypassing perimeter defences. To counter this, NIST recommends mutual authentication [3]. Extending trust boundaries to individual microservices through mutual TLS (mTLS) ensures that every service verifies the identity of its caller, eliminating the assumption that internal traffic is inherently safe.

These threats highlight the need for robust cryptographic solutions, which are explored in the next section.

Cryptographic Basics for Microservices

A solid foundation for E2EE in microservices relies on mutual TLS (mTLS), ensuring that both client and server validate each other's X.509 certificates before establishing a connection [1][6].

"Mutual TLS (mTLS) is a security practice that provides encrypted communication between every workload and application in your infrastructure, regardless of location." – Smallstep [6]

TLS 1.2 should be the minimum version, with TLS 1.3 used where available [1]. With TLS 1.2, ECDHE cipher suites such as ECDHE-ECDSA-AES256-GCM-SHA384 and ECDHE-RSA-AES128-GCM-SHA256 provide forward secrecy: each session is protected by ephemeral keys, so even if a service's long-term private key is later compromised, previously recorded communications remain secure [1].
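For services that terminate TLS themselves rather than through a sidecar, the baseline above might look like the following sketch, which uses Python's standard `ssl` module. The function name and the optional file-path parameters are assumptions for illustration; certificate and CA files would come from your PKI.

```python
import ssl
from typing import Optional

# The two suites named in the text, in OpenSSL notation. They apply to TLS 1.2;
# set_ciphers() does not change the TLS 1.3 suites, which OpenSSL manages separately.
TLS12_CIPHERS = "ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES128-GCM-SHA256"


def make_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side context that requires a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse TLS 1.0 and 1.1
    ctx.set_ciphers(TLS12_CIPHERS)
    ctx.verify_mode = ssl.CERT_REQUIRED  # the "mutual" part of mTLS
    if cert_file and key_file:
        # This service's own certificate and private key.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The CA bundle used to validate client certificates.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

A client would use a matching `ssl.PROTOCOL_TLS_CLIENT` context with its own certificate loaded, so that each side validates the other.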

Managing certificates manually for multiple services is impractical. A Public Key Infrastructure (PKI) automates the creation, distribution, and renewal of keys and certificates [1][7]. Tools like cert-manager or Istiod streamline this process, while services like Let's Encrypt provide free certificates that are valid for 90 days [2]. Automated rotation is crucial: short-lived certificates limit the impact of credential theft because a stolen certificate soon expires.
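As a rough illustration of automated rotation, a renewal check can be expressed as a fraction of the certificate lifetime. The two-thirds threshold and the function name are assumptions chosen for the example; real tools expose their own renewal settings.

```python
from datetime import datetime, timedelta, timezone


def should_renew(not_before: datetime, not_after: datetime,
                 now: datetime, renew_fraction: float = 2 / 3) -> bool:
    """Return True once the chosen fraction of the certificate lifetime has elapsed."""
    lifetime = not_after - not_before
    renew_at = not_before + lifetime * renew_fraction
    return now >= renew_at


issued = datetime(2025, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(days=90)  # 90-day lifetime, as in the text

print(should_renew(issued, expires, issued + timedelta(days=30)))  # False
print(should_renew(issued, expires, issued + timedelta(days=61)))  # True
```

Renewing well before expiry leaves time to retry if issuance fails, which matters more as lifetimes get shorter.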

For end-user authentication at the application layer, JSON Web Tokens (JWT) are used to verify identities and carry context across the call chain [1][3]. While mTLS secures transport between services, JWTs enable granular authorisation by passing claims about the original caller downstream.
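To show what a signed token carrying caller claims looks like, here is a minimal standard-library sketch of the JWT compact format with HS256. It is a simplification under stated assumptions: a shared HMAC key stands in for the issuer's signing key, and the helper names are hypothetical. In practice you would normally rely on a maintained JWT library and asymmetric signatures from a trusted issuer.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the JWT format requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    """Restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_token(claims: dict, key: bytes) -> str:
    """Return a compact HS256-signed token carrying the given claims."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(key, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_token(token: str, key: bytes, now: Optional[float] = None) -> dict:
    """Check the signature and expiry; return the claims or raise ValueError."""
    header_b64, payload_b64, signature_b64 = token.split(".")
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        key, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```

A downstream service would call `verify_token` before trusting any claim, then base its authorisation decision on fields such as `sub`.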

| Cryptographic Component | Standard/Algorithm | Purpose |
|---|---|---|
| Transport Encryption | TLS 1.2 / 1.3 | Secures data in transit between services |
| Service Identity | X.509 Certificates | Provides strong, verifiable workload identity |
| Identity Framework | SPIFFE | Standardises identity across platforms |
| End-User Identity | JWT (JSON Web Token) | Propagates user context and claims |
| Certificate Management | ACME Protocol | Automates certificate issuance and renewal |

Service Identity and Authentication

Building on these cryptographic principles, service identity ensures that each microservice is uniquely and verifiably recognised.

In microservices, identity is the cornerstone of security. Unlike IP addresses, which can change as workloads scale or shift, modern architectures rely on service identity to define a workload's role and privileges [1][8].

"Identity is a fundamental concept of any security infrastructure." – Istio Documentation [1]

The SPIFFE (Secure Production Identity Framework for Everyone) standard provides a consistent way to define identity across diverse environments [5][7]. SPIFFE IDs use a URI format like spiffe://<domain>/ns/<namespace>/sa/<serviceaccount> [7]. For instance, a payment service in a production namespace might have the identity spiffe://example.com/ns/production/sa/payment-service. These identities are embedded in X.509 certificates, known as SPIFFE Verifiable Identity Documents (SVIDs), which are exchanged during mTLS handshakes.
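The URI layout above is easy to validate in code. The sketch below parses only the `ns/<namespace>/sa/<serviceaccount>` form shown in the text; the `WorkloadIdentity` type and `parse_spiffe_id` function are hypothetical names, and other SPIFFE path layouts are deliberately rejected.

```python
from typing import NamedTuple
from urllib.parse import urlsplit


class WorkloadIdentity(NamedTuple):
    trust_domain: str
    namespace: str
    service_account: str


def parse_spiffe_id(spiffe_id: str) -> WorkloadIdentity:
    """Split spiffe://<domain>/ns/<namespace>/sa/<serviceaccount> into its parts."""
    parts = urlsplit(spiffe_id)
    if parts.scheme != "spiffe" or not parts.netloc:
        raise ValueError("not a SPIFFE ID: " + spiffe_id)
    segments = parts.path.split("/")[1:]  # drop the empty string before the first "/"
    if (
        len(segments) != 4
        or segments[0] != "ns"
        or segments[2] != "sa"
        or not segments[1]
        or not segments[3]
    ):
        raise ValueError("unexpected SPIFFE ID path: " + parts.path)
    return WorkloadIdentity(parts.netloc, segments[1], segments[3])


identity = parse_spiffe_id("spiffe://example.com/ns/production/sa/payment-service")
print(identity.namespace, identity.service_account)  # production payment-service
```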

Authenticated identities form the basis for authorisation policies. Once a service proves its identity via mTLS, the system can determine what actions it is permitted to perform [1][8]. For external requests, identity propagation securely passes the original caller's context, such as an end-user identity, through the call chain using signed structures like JWTs or Netflix's Passport pattern [3]. These internal identity tokens should never be exposed to external clients and must be signed by trusted issuers to prevent tampering.

When introducing mTLS, start with permissive mode, allowing services to accept both encrypted and plaintext traffic during the transition [1]. This avoids disruptions while gradually moving to strict mTLS enforcement. Assign unique service accounts to workloads to enforce the principle of least privilege [1][7]. For example, a payment service should not share the same identity as a logging service, even if they operate within the same cluster.

Effective service identity verification and automated certificate management are crucial for implementing E2EE, laying the groundwork for the design patterns and best practices discussed in later sections.

Design Patterns for End-to-End Encryption

Once you've got the cryptographic basics down, the next step is selecting the right design pattern to implement end-to-end encryption within microservices. Since deployment models and communication styles vary, your encryption approach needs to fit the specific requirements of your architecture.

Encryption Patterns for Microservices

One effective approach is using mutual TLS (mTLS) to secure communication between microservices. In standard (one-way) TLS, as used for most browser-to-server connections, only the server presents a certificate. mTLS requires both parties to present and verify certificates. This two-way trust is a cornerstone of zero-trust security models.

"In this era of zero trust security, each individual microservice communication (request-response) must be authenticated, authorized and encrypted." – Jason Poole, NetScaler [9]

The sidecar pattern simplifies encryption by offloading tasks like mTLS handshakes, certificate management, and encryption to infrastructure components such as Envoy or NetScaler CPX [9]. This allows developers to concentrate on business logic while the sidecar handles the heavy lifting.

When it comes to edge-to-pod encryption, two common patterns are used:

- SSL termination at the load balancer: Here, traffic is decrypted at the load balancer, then either sent as plaintext or re-encrypted before reaching the gateway [4]. This creates a trust boundary at the load balancer.

- SSL passthrough: In this setup, encrypted traffic is forwarded directly to the backend microservice or ingress gateway, preserving end-to-end encryption [4].

Another approach involves identity-based security using SPIFFE IDs. Instead of relying on volatile IP addresses, this pattern assigns certificate-backed identities to workloads, ensuring their verifiability across cloud, bare-metal, or virtual environments [5][10]. This method ensures stability even during scaling or deployment changes.

Building on these encryption techniques, service meshes offer an additional layer of automation and enforcement for secure communication across clusters.

Service Mesh Solutions

Service meshes like Istio and Linkerd take encryption to the next level by automating mTLS across Kubernetes clusters without requiring changes to your application code. In these setups, the control plane (e.g., Istio's istiod) acts as a Certificate Authority, managing certificate issuance, distribution, and rotation [1].

A secure naming system ensures that server identities match the service names during the TLS handshake. This prevents impersonation attacks, even if DNS or routing are compromised [1].

Istio provides flexible security policies through its PeerAuthentication resource, which offers three modes:

- STRICT: Only encrypted traffic is accepted.

- PERMISSIVE: Both encrypted and plaintext traffic are allowed, useful during transitions.

- DISABLE: Encryption is turned off, and traffic remains unencrypted [1].

| Istio Peer Auth Mode | Description |
|---|---|
| STRICT | Only mTLS traffic is accepted by the workload |
| PERMISSIVE | Both mTLS and plaintext traffic are accepted |
| DISABLE | mTLS is disabled; traffic remains unencrypted |
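The effect of the three modes on an incoming connection can be modelled in a few lines. This is a conceptual sketch rather than Istio code: the enum and function names are hypothetical, and DISABLE is modelled as plaintext-only on the assumption that the proxy no longer terminates mTLS.

```python
from enum import Enum


class PeerAuthMode(Enum):
    STRICT = "STRICT"
    PERMISSIVE = "PERMISSIVE"
    DISABLE = "DISABLE"


def accepts(mode: PeerAuthMode, is_mtls: bool) -> bool:
    """Return True if a workload in the given mode accepts the connection."""
    if mode is PeerAuthMode.STRICT:
        return is_mtls          # plaintext is rejected
    if mode is PeerAuthMode.PERMISSIVE:
        return True             # both kinds are accepted during migration
    return not is_mtls          # DISABLE: modelled as plaintext only


for mode in PeerAuthMode:
    print(mode.value, accepts(mode, is_mtls=True), accepts(mode, is_mtls=False))
```

Only the STRICT row guarantees that every accepted connection was encrypted and mutually authenticated.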

For monitoring, tools like Kiali provide visual overviews of the service mesh, using indicators like lock icons to confirm mTLS encryption is active across your services.

Event-Driven and Asynchronous Patterns

Encryption isn't just for synchronous communication. Asynchronous messaging systems, like Kafka or RabbitMQ, also benefit from robust encryption to secure both the transport layer and message payload. Service meshes can enforce mTLS for plain TCP protocols, ensuring secure communication between producers, consumers, and brokers [1].

By using sidecar proxies, you can simplify encryption for messaging systems. Producers and consumers communicate through local sidecar proxies, which transparently establish mTLS connections with the message broker [9]. This eliminates the need for custom encryption logic.

To tighten security further, authorisation policies can restrict which microservices can interact with the messaging infrastructure. For example, only specific services might be allowed to publish to certain Kafka topics or subscribe to specific queues [1].

When transitioning event-driven systems to end-to-end encryption, it’s often best to start with PERMISSIVE mode. This allows for a gradual rollout while ensuring compatibility. Once all components are ready, you can switch to STRICT mode to enforce full encryption.


Implementation and Best Practices

Deploying encryption without disrupting services requires careful planning and a phased approach. Here's how to ensure a smooth transition.

Rollout and Migration Strategy

Start by setting up a solid trust framework. Deploy a Public Key Infrastructure (PKI) using tools like cert-manager with Let's Encrypt, AWS Private CA, or HashiCorp Vault to manage service identities. Configure your edge ingress with an Ingress Controller, such as NGINX, to enable SSL passthrough. This ensures traffic remains encrypted until it reaches either the service or its sidecar proxy [4].

The safest way to migrate is by initially enabling PERMISSIVE mode, which allows services to handle both plaintext and encrypted traffic simultaneously. This reduces the risk of downtime while sidecar proxies are gradually rolled out. As Istio's documentation explains:

"Istio supports authentication in permissive mode to help you understand how a policy change can affect your security posture before it is enforced." [1]

Once your service mesh (e.g., Istio) is deployed and sidecar proxies are injected alongside your application containers, validate the certificates for all workloads. Only then should you switch to STRICT mode for full encryption [1]. Keep in mind that permissive mode is a migration aid, not a suitable long-term setting for production environments [11].
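One way to decide when a switch to STRICT is safe is to look for workloads that still receive plaintext connections. The sketch below assumes a hypothetical record format (`ConnectionRecord`) that you would populate from your mesh telemetry; it does not correspond to any specific tool's output.

```python
from collections import Counter
from typing import Dict, Iterable, NamedTuple


class ConnectionRecord(NamedTuple):
    destination: str  # workload that received the connection
    mtls: bool        # True if the connection used mutual TLS


def plaintext_workloads(records: Iterable[ConnectionRecord]) -> Dict[str, int]:
    """Count plaintext connections per destination workload."""
    return dict(Counter(r.destination for r in records if not r.mtls))


observed = [
    ConnectionRecord("payment-service", True),
    ConnectionRecord("logging-service", False),
    ConnectionRecord("logging-service", False),
]
print(plaintext_workloads(observed))  # {'logging-service': 2}
```

An empty result over a representative time window suggests that enforcing STRICT mode should not break existing callers.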

With the rollout complete, it’s time to address the performance and cost challenges that encryption can introduce.

Performance and Cost Optimisation

Encryption inevitably adds processing overhead, but you can mitigate this with smart resource management. Leverage service mesh capabilities and offload tasks wherever possible, such as using PSPs (Programmable Switches) or SmartNIC hardware [4][12]. For example, proxies handling HTTPS services can downgrade from Layer 7 to Layer 4 processing, avoiding the inefficiencies of double-terminating HTTPS traffic [4].

Automating certificate management significantly reduces operational effort and errors. Tools like cert-manager can handle provisioning and rotation seamlessly. For authorisation, consider an embedded Policy Decision Point (PDP) approach, where access rules are stored locally at the microservice or sidecar level. This reduces the need for frequent network calls, keeping latency low [3].

Your choice of identity format also matters. X.509-SVIDs are ideal for secure service-to-service authentication, while JWT-SVIDs may be a better fit where Layer 7 proxies or load balancers sit between workloads and terminate the TLS connection [5]. Additionally, if your backend service already terminates TLS, configure SSL passthrough at the ingress gateway to avoid unnecessary decryption and re-encryption. For production environments, memory-based key management for SPIRE/mesh keys can enhance security, though it requires an upstream CA to maintain persistence during restarts [11].

After optimising for performance and cost, focus on rigorous testing and validation.

Testing and Continuous Validation

Testing should begin early in the process. Use tools like curl --verbose to check TLS handshakes and ensure certificates are being presented correctly [2]. Regularly monitor your CA infrastructure to confirm that SPIRE server and agent pods are functioning as expected and distributing certificates. Keep an eye out for 400 Bad Request errors in proxy logs, as these often signal issues with invalid or expired certificates [11].

To strengthen your zero-trust model, adopt a layered policy evaluation approach. Process custom actions first, followed by deny rules, and finally allow rules. This order prevents overly permissive rules from bypassing critical restrictions [1]. Identity-based auditing, such as extracting details from peer authentication and JWT principals, can help track user actions and maintain accountability.
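The evaluation order above can be sketched as follows. This is a simplified model, not Istio's implementation: the `Policy` type and its fields are hypothetical, and the rule that a workload with no ALLOW policies permits the request reflects Istio's documented default as understood here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Request = Dict[str, str]


@dataclass
class Policy:
    action: str                                   # "CUSTOM", "DENY" or "ALLOW"
    matches: Callable[[Request], bool]            # does the policy apply to this request?
    custom_allows: Callable[[Request], bool] = lambda request: True  # CUSTOM only


def evaluate(policies: List[Policy], request: Request) -> bool:
    """Return True if the request is permitted."""
    # 1. CUSTOM policies: a matching policy whose external check fails denies the request.
    for p in policies:
        if p.action == "CUSTOM" and p.matches(request) and not p.custom_allows(request):
            return False
    # 2. DENY policies: any match denies, regardless of ALLOW rules.
    if any(p.action == "DENY" and p.matches(request) for p in policies):
        return False
    # 3. ALLOW policies: if none exist, permit; otherwise at least one must match.
    allow = [p for p in policies if p.action == "ALLOW"]
    if not allow:
        return True
    return any(p.matches(request) for p in allow)


policies = [
    Policy("DENY", lambda r: r["path"].startswith("/admin")),
    Policy("ALLOW", lambda r: r["principal"] == "payment-service"),
]
print(evaluate(policies, {"path": "/admin/keys", "principal": "payment-service"}))  # False
```

Because DENY is checked before ALLOW, the broad ALLOW rule for `payment-service` cannot open up the `/admin` paths.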

Finally, ensure your logging practices prioritise data security. Configure logging agents to filter or mask sensitive information like passwords, personal data, or API keys before sending logs to central systems [3]. Enforce encryption policies within your CI/CD pipelines and use telemetry to identify any remaining plaintext traffic before fully switching to strict mode. Structured logging with correlation IDs and decoupled logging agents can improve traceability and help prevent data loss during outages.
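Masking sensitive values before logs leave the service might look like this sketch, built on Python's standard `logging` module. The key names in the pattern and the `MaskingFilter` class are illustrative assumptions; a real deployment would tailor the pattern to its own log formats.

```python
import logging
import re

# Hypothetical pattern: key=value pairs whose values must never reach central logs.
SENSITIVE = re.compile(r"(?i)\b(password|api_key|token)=(\S+)")


def mask(text: str) -> str:
    """Replace the value of each sensitive key with a fixed placeholder."""
    return SENSITIVE.sub(lambda m: m.group(1) + "=***", text)


class MaskingFilter(logging.Filter):
    """Logging filter that masks sensitive values in every record it sees."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = mask(record.getMessage())  # format first, then mask the result
        record.args = ()                        # arguments are already merged into msg
        return True


logger = logging.getLogger("payments")
logger.addFilter(MaskingFilter())

print(mask("login password=hunter2 user=bob"))  # login password=*** user=bob
```

Attaching the filter at the logger means the masking happens before any handler or shipping agent sees the message.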

Conclusion and Key Takeaways

End-to-end encryption (E2EE) is a cornerstone of securing microservices, built on the principles of zero trust. By maintaining encryption throughout the entire communication process - from sender to receiver - you can effectively block man-in-the-middle (MITM) attacks and adhere to the "never trust, always verify" approach required in modern cloud environments [1][8][4]. This strategy goes beyond compliance with frameworks like PCI DSS; it’s about creating systems where strong service identity replaces shared secrets and granular access control ensures each service has access only to the resources it genuinely needs [1][2][4].

Summary of Best Practices

Here’s a quick recap of the key practices for implementing E2EE:

- Use automated certificate management tools like cert-manager combined with Let's Encrypt to issue free 90-day certificates [2].

- Deploy service meshes such as Istio to handle cryptographic operations via sidecar proxies, ensuring robust encryption protocols [1].

- Transition carefully by starting in permissive mode (supporting both plaintext and encrypted traffic) before shifting to strict mode once all workloads are validated [1][8].

- Replace IP whitelisting with identity-based policies using SPIFFE/SVIDs for enhanced security [1][5][8].

- Adopt a layered defence strategy: enforce coarse-grained security at the API gateway, transport-level encryption within the service mesh, and fine-grained logic within the business code [3].

How Hokstad Consulting Can Help

Successfully implementing these best practices requires expert guidance, and Hokstad Consulting is here to help. They specialise in transforming DevOps and cloud infrastructure to create secure, efficient microservice architectures. Their cloud cost engineering services can reduce expenses by up to 50% while ensuring your microservices remain secure.

Hokstad Consulting excels at integrating service mesh technologies like Istio and automating certificate lifecycles with cert-manager, removing manual processes and embedding security as a default. Their tailored services include zero-downtime migrations, automated CI/CD pipelines, and custom development.

Whether you’re adopting zero-trust architecture, optimising hybrid cloud environments, or exploring AI-driven automation, Hokstad Consulting offers solutions that balance security with operational efficiency. Visit Hokstad Consulting to see how their expertise can accelerate your journey to implementing end-to-end encryption.

FAQs

What is the difference between end-to-end encryption and standard TLS in microservices?

End-to-end encryption locks down data right from the source microservice and keeps it encrypted until it reaches its final destination. This means that no middleman - whether it's an intermediary service, proxy, or any other component - can access the plaintext data.

On the other hand, standard TLS protects the communication channel between two points but can be terminated by intermediaries, making the data visible to those systems during transmission.

With end-to-end encryption, you’re ensuring that sensitive information stays secure for the entire journey within your microservices setup, offering a stronger shield against potential breaches.

What are the advantages of using mutual TLS (mTLS) in a microservices setup?

Mutual TLS (mTLS) provides each microservice with a verifiable identity, enabling them to securely authenticate one another. This ensures that all communication between services is encrypted, offering strong protection against data interception.

When mTLS is delivered through a service mesh or an automated PKI, the process of issuing, rotating, and revoking certificates is handled for you. This reduces the need to set up complex VPNs or rely on IP-based access controls. As a result, you can scale your architecture more easily while keeping your service mesh secure.

Why is automated certificate management essential for secure end-to-end encryption?

Automated certificate management takes the hassle out of maintaining end-to-end encryption by handling the issuance, renewal, and revocation of certificates across services with little or no manual input. This reduces the chance of human error and helps prevent outages caused by expired certificates, so communication between microservices stays secure.

With this process handled automatically, organisations can stay compliant with security standards while streamlining operations. Plus, it frees up time and resources, allowing teams to focus on more pressing priorities.