PCI DSS 4.0.1 introduces stricter encryption requirements for protecting cardholder data, replacing version 3.2.1. DevOps teams must now integrate encryption into CI/CD pipelines, automate compliance checks, and adopt advanced key management. Key updates include:

- Encryption for stored data (Requirement 3): Use strong cryptography (e.g., AES-128, RSA-2048) and techniques like tokenisation or keyed hashing.

- Encryption for data in transit (Requirement 4): Enforce TLS 1.2 or higher across public networks.

- Automated key rotation: Prevent long-lived keys to reduce breach risks.

- Tokenisation and masking: Minimise PCI scope by replacing sensitive data with non-sensitive tokens.

Challenges for DevOps teams include:

- Balancing encryption with CI/CD speed.

- Managing multiple encryption points and certificates.

- Ensuring compliance in containerised and cloud environments.


Challenges in Meeting PCI DSS Encryption Standards

Building on earlier discussions about the importance of automated encryption in dynamic environments, DevOps teams encounter several technical hurdles when implementing PCI DSS 4.0.1 encryption controls.

Integrating Encryption into CI/CD Pipelines

Adding encryption to CI/CD workflows can make operations more complicated. For example, a typical web application using Elastic Load Balancing might involve five encryption and decryption points, and introducing a Web Application Firewall bumps that number up to seven points [1]. Each of these points requires its own certificate and key management, which can slow down deployments significantly.

DevOps pipelines often handle sensitive data, such as database credentials, configuration files, and private keys. If secrets are retrieved manually, there’s a risk they could end up exposed in logs or source repositories [3]. The AWS Well-Architected Framework stresses this point clearly: "Key material should never be accessible to human identities" [4]. However, many legacy systems weren’t built to support the automated retrieval systems needed to meet this standard.

Key Management and Rotation Complexities

Rotating encryption keys without disrupting applications or causing outages is a major challenge. Many systems lack support for multiple key versions, which can lead to issues when decrypting historical data [5]. Manual processes also risk allowing keys to remain active beyond the limits set by PCI DSS [5]. Alarmingly, 86% of data breaches involve stolen credentials [7]. Traditional hardware security modules and manual provisioning methods struggle to keep up with the high operational demands of ephemeral microservices [8].

A stark example occurred in January 2025, when hackers used a compromised access key to breach Gravy Analytics' AWS storage, exposing location data for millions of individuals. This incident underscored the dangers of relying on long-lived, static credentials and the failure to properly monitor and isolate access keys from broader infrastructure controls [7]. Moreover, re-encrypting large datasets in real-time can overwhelm database connections, forcing teams to adopt a more gradual approach to re-encryption to balance security with operational needs [5].
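The gradual re-encryption approach mentioned above can be sketched roughly as follows. This is a minimal illustration, not a reference implementation: the `Record` type, the `reencrypt` callback, and the batch size and pause values are all hypothetical, and the actual decrypt-and-encrypt step is left to whatever key management service is in use.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, List


@dataclass
class Record:
    record_id: int
    key_version: int  # version of the key that encrypted this record (assumed field)


def stale_batches(records: Iterable[Record], current_version: int,
                  batch_size: int) -> Iterator[List[Record]]:
    """Yield batches of records still encrypted under an older key version."""
    batch: List[Record] = []
    for record in records:
        if record.key_version < current_version:
            batch.append(record)
            if len(batch) == batch_size:
                yield batch
                batch = []
    if batch:
        yield batch


def reencrypt_gradually(records: Iterable[Record], current_version: int,
                        reencrypt: Callable[[Record], None],
                        batch_size: int = 100, pause_seconds: float = 0.0) -> int:
    """Re-encrypt stale records batch by batch, pausing between batches."""
    migrated = 0
    for batch in stale_batches(records, current_version, batch_size):
        for record in batch:
            reencrypt(record)  # caller-supplied: decrypt with old key, encrypt with new
            record.key_version = current_version
            migrated += 1
        time.sleep(pause_seconds)  # throttle so database connections are not saturated
    return migrated
```

Because records carry their key version, old and new ciphertexts can coexist during the migration, which is the property that many legacy systems lack.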

Data in Transit and API Encryption Challenges

Enforcing TLS 1.2 or higher across all network boundaries is particularly challenging for legacy systems that don’t natively support modern protocols [9]. The overhead from multiple encryption and decryption points can also lead to noticeable performance drops and increased latency [1]. As Balaji from AWS Security explains: "Each additional encryption and decryption point adds key and certificate management overhead... organisations have to balance that number with application performance requirements" [1].

Securing microservices often requires mutual TLS through service meshes like Istio [2]. PCI DSS also prohibits weak ciphers, such as CBC-mode ciphers and MD5 hashes [9]. Configuring environments to exclude these weak ciphers while maintaining compatibility with essential services is a delicate task that can lead to deployment failures. Furthermore, defining the boundaries of a "private network", such as an Amazon VPC, involves meticulous logical isolation and strict configuration of network access control lists to meet auditor requirements [1].

Next, we’ll delve into how automation and cloud-based key management systems can address these challenges effectively.

Solutions for PCI DSS Encryption Compliance

Figure: Cloud Tools for PCI DSS Encryption Compliance: Features and Benefits

Meeting PCI DSS encryption requirements might seem challenging, but it’s far from impossible. By using automation and cloud-native tools, DevOps teams can ensure compliance without slowing down deployments or compromising efficiency. Here’s how encryption can be seamlessly integrated into DevOps workflows.

Automating Encryption Within CI/CD Pipelines

Manual processes can introduce errors and inefficiencies, so automating encryption within CI/CD pipelines is a smart move. Tools like Terraform allow teams to programmatically manage resources such as AWS Secrets Manager and KMS keys, ensuring sensitive data never appears in plain text within state files [11]. Additionally, enabling "encryption by default" on cloud services like Amazon EBS, RDS, and S3 helps prevent accidental deployment of unencrypted resources [10][13].

Automated compliance checks add another layer of security. For instance, AWS Config rules can monitor for unencrypted volumes or buckets and trigger immediate remediation if issues arise [13]. To handle certificate management, AWS Certificate Manager (ACM) automates the provisioning and renewal of TLS certificates, reducing the risk of outages and maintaining secure TLS 1.3 connections [12][4]. Centralising CloudTrail logs in a dedicated logging account and analysing them with tools like Athena can create a detailed audit trail for encryption events [6][10]. Once automation is in place, strong key management practices are essential for long-term security.
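To make the idea of an automated check concrete, here is a small sketch of a pipeline gate that flags unencrypted resources. The inventory format (dictionaries with `type`, `name`, and `encrypted` keys) is an assumption made for illustration; it is not the AWS Config data model, and a real check would read from the provider's API or a plan file.

```python
from typing import Dict, List


def find_unencrypted(resources: List[Dict]) -> List[str]:
    """Return 'type/name' for every resource not explicitly marked as encrypted."""
    return [
        f"{resource['type']}/{resource['name']}"
        for resource in resources
        if not resource.get("encrypted", False)  # a missing flag counts as non-compliant
    ]


# Hypothetical inventory, e.g. exported by an earlier pipeline step.
inventory = [
    {"type": "ebs_volume", "name": "orders-db-data", "encrypted": True},
    {"type": "s3_bucket", "name": "export-staging", "encrypted": False},
    {"type": "rds_instance", "name": "legacy-reporting"},
]

violations = find_unencrypted(inventory)
for violation in violations:
    print(f"NON-COMPLIANT: {violation}")
```

Treating a missing flag as a failure is a deliberate choice here: the check fails closed, so a resource cannot pass simply because its encryption status was never recorded.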

Using Cloud Key Management Systems (KMS)

Cloud-based key management systems (KMS) simplify the often complex task of managing encryption keys. For example, AWS KMS is certified to meet PCI DSS standards, including the encryption of Primary Account Number (PAN) data [10]. This service uses FIPS 140-3 Level 3 hardware security modules (HSMs) to protect key material, ensuring keys cannot be exported in plain text [6][10].

Key rotation is another critical feature. AWS KMS allows customer-managed keys (CMKs) to auto-rotate on a set schedule, typically every year, while retaining older versions to decrypt existing data [14]. To prevent accidental or malicious deletion, AWS KMS enforces a mandatory waiting period for key destruction, ranging from 7 to 30 days [6]. For application-level data, envelope encryption can be employed, where data encryption keys (DEKs) are encrypted by a master key managed within KMS [6][14]. When working with large datasets in Amazon S3, using S3 Bucket Keys can reduce the number of API calls to KMS, lowering costs and minimising audit log volume [10]. These practices ensure robust encryption while maintaining compliance.

Enforcing Strong Encryption for Data in Transit

Securing data in transit is another critical aspect of PCI DSS compliance. At a minimum, DevOps teams should enforce TLS 1.2, with TLS 1.3 recommended for even stronger security [12]. AWS set February 2024 as the deadline for ending support for TLS versions earlier than 1.2 on all of its API endpoints [12]. To ensure all traffic is encrypted, configure services like Amazon CloudFront or Application Load Balancers to redirect HTTP requests to HTTPS automatically [12].
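On the client side, the TLS 1.2 floor can also be enforced in application code. The sketch below uses Python's standard `ssl` module; the function name is illustrative, and the same idea applies to whichever HTTP client the pipeline uses.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client TLS context that refuses anything older than TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_context()
print(context.minimum_version.name)
```

Setting `minimum_version` rather than listing allowed protocols means newer versions such as TLS 1.3 are still negotiated when both sides support them.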

For heightened API security, mutual TLS (mTLS) can be implemented using client certificates through Amazon API Gateway or Application Load Balancer [4]. Additionally, Amazon S3 bucket policies can use the aws:SecureTransport condition to block uploads that don’t use HTTPS [12]. To further secure the environment, security group rules should be audited so that insecure HTTP traffic is blocked within a VPC [12]. Centralising API and encryption logs via AWS CloudTrail, combined with S3 integrity validation, helps ensure continuous compliance monitoring [4][10].
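As an illustration of the aws:SecureTransport condition, the following sketch generates a bucket policy document that denies any request made without TLS. The bucket name is a placeholder, and the statement denies all S3 actions over plain HTTP rather than uploads only, which is an assumption about how broadly a team would want to apply the rule.

```python
import json


def deny_insecure_transport_policy(bucket_name: str) -> str:
    """Return a JSON bucket policy that denies requests not sent over HTTPS."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
                # The condition key is "false" whenever the request did not use TLS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }
    return json.dumps(policy, indent=2)


policy_json = deny_insecure_transport_policy("example-cardholder-exports")
print(policy_json)
```

Generating the document in code makes it easy to apply the same statement to every bucket created by the pipeline instead of maintaining hand-edited copies.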

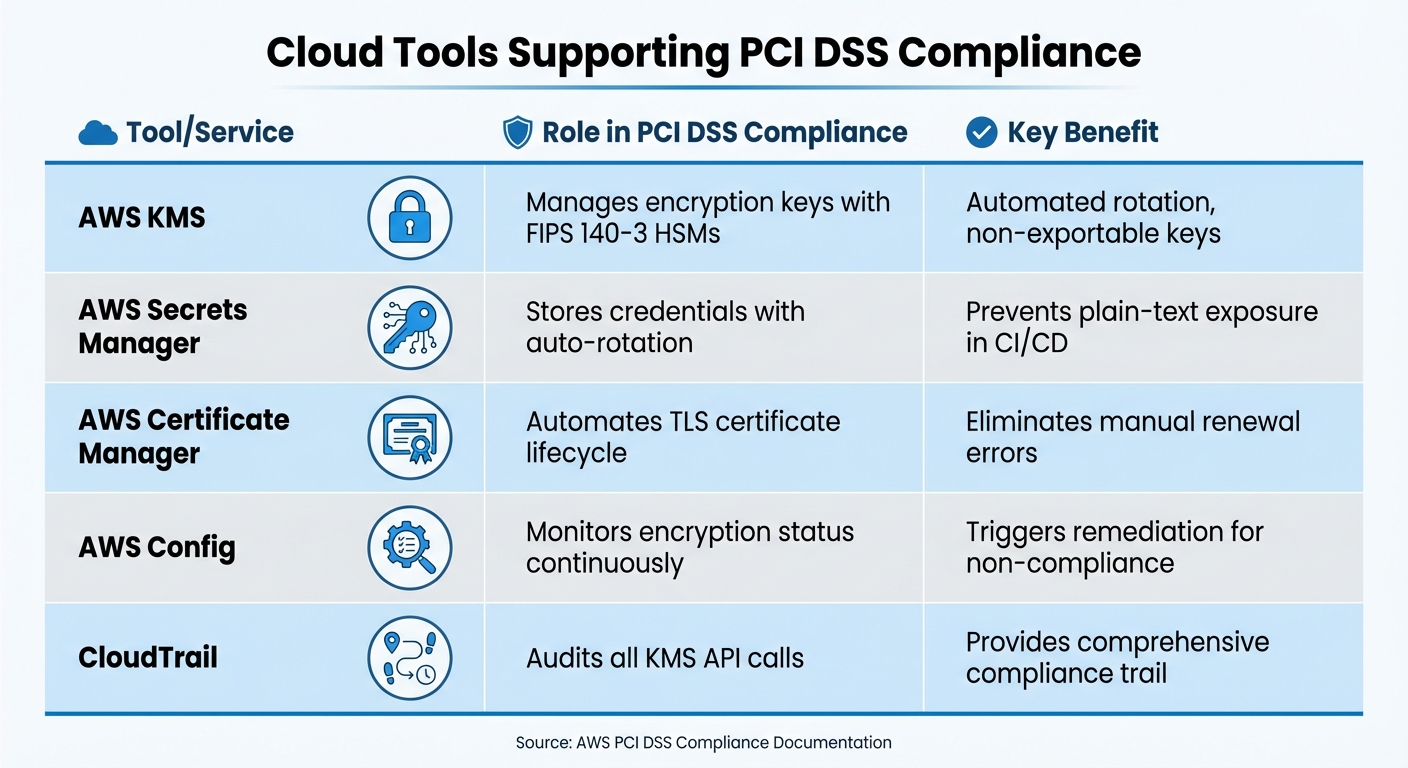

Cloud Tools Supporting PCI DSS Compliance

Several cloud tools play a key role in meeting PCI DSS encryption requirements. Here’s a quick overview:

| Tool/Service | Role in PCI DSS Compliance | Key Benefit |
|---|---|---|
| AWS KMS | Manages encryption keys with FIPS 140-3 HSMs | Automated rotation, non-exportable keys [6][10] |
| AWS Secrets Manager | Stores credentials with auto-rotation | Prevents plain-text exposure in CI/CD [11] |
| AWS Certificate Manager | Automates TLS certificate lifecycle | Eliminates manual renewal errors [4] |
| AWS Config | Monitors encryption status continuously | Triggers remediation for non-compliance [13] |
| CloudTrail | Audits all KMS API calls | Provides comprehensive compliance trail [10] |

These tools and practices offer a practical way for DevOps teams to integrate encryption into their workflows while maintaining PCI DSS compliance. By automating processes, managing keys securely, and enforcing strong encryption standards, organisations can protect sensitive data without sacrificing agility.


Reducing PCI Scope with Tokenisation and Data Masking

One of the most effective ways to simplify PCI DSS compliance is by reducing the number of systems that handle sensitive cardholder data. Tokenisation replaces Primary Account Numbers (PAN) with non-sensitive tokens [15][18]. Systems that store, process, or transmit these tokens - without the ability to reverse them - can often be excluded from PCI DSS assessment scope entirely [15][20].

Data masking, on the other hand, transforms sensitive data by replacing characters with symbols like "X" (e.g., turning 4111111111111111 into XXXXXXXXXXXX1111). This makes it ideal for displaying data in user interfaces or logs without exposing the full PAN [17][19].
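The masking example above can be reproduced with a few lines of code. This is a minimal sketch: the function name and the default of four visible digits are choices made for illustration, and real display rules should follow whatever the applicable PCI DSS requirement permits.

```python
def mask_pan(pan: str, visible: int = 4, mask_char: str = "X") -> str:
    """Mask all but the last `visible` digits of a card number."""
    digits = "".join(ch for ch in pan if ch.isdigit())  # drop spaces and dashes
    if len(digits) <= visible:
        raise ValueError("value is too short to be masked safely")
    return mask_char * (len(digits) - visible) + digits[-visible:]


print(mask_pan("4111111111111111"))
```

Masking is one-way and display-oriented: unlike tokenisation, there is no mechanism to recover the original value from the masked string.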

Tokenization is also a form of segmentation by data flow, because it separates systems that store and transmit cardholder data from those that can perform operations using only tokens.

– Google Cloud Architecture Centre [20]

The benefits to compliance are notable. A PCI-validated tokenisation solution can reduce the compliance burden significantly. For example, merchants using such a solution may only need to meet 21 sub-requirements under SAQ P2PE, compared to the 277 sub-requirements outlined in the 360-page PCI DSS v4.0.1 [21]. This reduction in scope leads to lower audit costs, fewer systems to secure, and less operational strain on DevOps teams.

Vaultless Tokenisation for DevOps Pipelines

Tokenisation and masking help reduce scope, but vaultless tokenisation takes it a step further by improving performance and scalability. Traditional tokenisation relies on a central database (or "vault") to store mappings between tokens and original PANs. This creates a bottleneck, as each tokenisation or de-tokenisation request requires a database lookup, which can slow down high-performance systems.

Vaultless tokenisation, powered by Format-Preserving Encryption (FPE), avoids this issue. FPE is stateless, meaning it doesn’t rely on a central database. Instead, it uses cryptographic keys to transform data. The original data's length and character set are preserved, so a 16-digit credit card number remains 16 digits, ensuring compatibility with legacy systems [15][17].

FPE is stateless, which means that Vault does not store the protected secret. Instead, Vault protects the encryption key needed to decrypt the ciphertext.

– HashiCorp Developer [17]

This stateless approach allows for horizontal scaling, eliminating database bottlenecks [17]. For DevOps teams managing fast-paced CI/CD pipelines, tokenisation can occur as data is ingested, without slowing down deployments.

Convergent tokenisation is another valuable feature. It ensures that the same input consistently produces the same token, enabling statistical analysis, fraud monitoring, and sorting without the need to de-tokenise data [17]. This is especially useful for analytics pipelines that need to identify patterns or count unique users while keeping sensitive cardholder data out of scope.
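The convergent property can be demonstrated with a keyed hash. Note the assumptions: this sketch uses HMAC-SHA256 from the standard library as a stand-in, so it is neither format-preserving nor reversible, and it is not the FPE mechanism or the Vault API described in this section. It only shows why identical inputs yielding identical tokens makes counting and joining possible without de-tokenisation.

```python
import hashlib
import hmac


def convergent_token(pan: str, key: bytes) -> str:
    """Derive a deterministic token: the same PAN and key always give the same token."""
    digest = hmac.new(key, pan.encode("ascii"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]


# Hypothetical key; in practice it would come from a key management service.
demo_key = b"0" * 32

purchases = ["4111111111111111", "5555555555554444", "4111111111111111"]
tokens = [convergent_token(pan, demo_key) for pan in purchases]

# Unique cardholders can be counted from tokens alone.
print(len(set(tokens)))
```

The trade-off is that determinism also lets anyone holding the tokens see which records share a card, so access to tokenised datasets still needs to be controlled.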

The best practice is to implement tokenisation at the source - right where data enters your environment. This prevents sensitive PANs from ever reaching data lakes, backend systems, or DevOps environments [15]. Use NIST-approved algorithms like FF3-1 for FPE to guard against known attack vectors, and automate provisioning with Infrastructure as Code (IaC) tools like Terraform to ensure "compliance as code" from the outset [16][17][19]. This scalable, stateless model integrates seamlessly with strict access controls, which we’ll discuss next.

Best Practices for Role-Based Access Control

While tokenisation reduces PCI scope, securing access to de-tokenisation is critical. Role-Based Access Control (RBAC) ensures that although many systems can handle tokens, only specific authorised roles can access the underlying PAN data.

Tokenization can be used to add a layer of explicit access controls to de-tokenization of individual data items, which can be used to implement and demonstrate least-privileged access to sensitive data.

– AWS Security Blog [15]

In April 2023, AWS outlined a strategy where tokenisation adds explicit access controls to de-tokenisation. This ensures that only those with a "need-to-know" can view sensitive data in a data lake, supporting PCI DSS 3.2.1 compliance [15]. Similarly, Google Cloud offers a reference architecture where a "Tokenisation Service User" service account is created with the roles/cloudkms.cryptoKeyEncrypterDecrypter role, restricting access to only the necessary Cloud KMS keys for tokenisation [20].

Short-lived credentials play a crucial role in securing access. Using short-lived service tokens or dynamic secrets minimises the risk of compromised credentials. Multi-factor authentication (MFA) is a must for users accessing the Cardholder Data Environment (CDE) or de-tokenisation keys, aligning with PCI DSS requirement 8.4.1 [19].

To ensure accountability, automate monitoring for every de-tokenisation event. Real-time alerts can detect potential misuse of high-privilege roles, while detailed logs track "who accessed what" [15][18]. Group PCI-scoped projects into dedicated folders or projects using resource hierarchies, and apply IAM policies at the folder level to create logical trust boundaries [20].

Finally, limit network access for functions handling tokenisation. Use settings like --no-allow-unauthenticated to require valid identity tokens for access. This ensures that only authorised components in your pipeline can request tokens, reducing the risk of unauthorised access [16].
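The combination of role checks and per-event logging described in this section could look roughly like the sketch below. Everything here is hypothetical: the role name, the in-memory token store, and the audit record fields are placeholders for a real identity provider, tokenisation service, and log pipeline.

```python
from datetime import datetime, timezone
from typing import Dict, List

# Hypothetical role allowed to see real PANs; everyone else only handles tokens.
DETOKENIZE_ROLES = {"payments-settlement"}


class AccessDenied(Exception):
    """Raised when a caller's role may not de-tokenise data."""


def detokenize(token: str, caller: str, role: str,
               token_store: Dict[str, str], audit_log: List[dict]) -> str:
    """Return the PAN for a token if the role is authorised; log every attempt."""
    allowed = role in DETOKENIZE_ROLES
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "caller": caller,
        "role": role,
        "token": token,  # log the token, never the PAN
        "allowed": allowed,
    })
    if not allowed:
        raise AccessDenied(f"role {role!r} may not de-tokenise")
    return token_store[token]
```

Writing the audit entry before the authorisation decision is enforced means denied attempts are recorded too, which is what makes alerting on misuse possible.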

DevOps Best Practices for Maintaining PCI DSS Compliance

To address the challenges of integration, continuous monitoring and automation are critical for maintaining PCI DSS compliance. With the future-dated requirements of PCI DSS 4.0.1 becoming mandatory from 31 March 2025, organisations must transition from point-in-time assessments to ongoing, automated validation. Compliance is no longer a one-off task - it’s a continuous operational metric.

Compliance-as-code is a game changer. By leveraging Infrastructure as Code (IaC) tools like Terraform or AWS CloudFormation, DevOps teams can embed security policies and encryption settings directly into version-controlled templates. This ensures that every resource is compliant from the moment it’s deployed. Automated key rotation simplifies compliance further, while managed identities, such as those in Azure or GKE, allow applications to access encryption keys securely - eliminating the need for static credentials in source code. These automated practices create a strong foundation for real-time governance and continuous compliance monitoring.

Real-time governance tools play a crucial role. Solutions like AWS Config or Azure Monitor can continuously scan for non-compliant encryption configurations - such as outdated TLS versions or weak cipher suites - and fix issues automatically. Additionally, integrating container scanning and vulnerability detection into CI/CD pipelines helps identify risks before they reach production. Kubernetes Network Policies add another layer of security by restricting pod-to-pod communication, ensuring only authorised services can access the Cardholder Data Environment (CDE).

PCI-DSS 4.0 demands security controls baked directly into your CI/CD pipelines.

– Abhay Bhargav, CEO, SecurityReview.ai

These continuous checks are best supported by automated dashboards, which simplify compliance reporting and monitoring.

Building Automated Compliance Dashboards

Automated dashboards turn PCI DSS monitoring into a real-time process, saving teams from the burden of lengthy audit preparations. They enable continuous tracking of encryption health, key rotation status, and configuration drift. For instance, dashboards can alert teams when production environments deviate from compliant IaC templates - whether due to changes in firewall rules, IAM permissions, or outdated TLS protocols. They also serve as repositories for audit-ready artefacts, including key rotation logs, access records, vulnerability scan results, and evidence of automated secret injection.

Advanced platforms take this a step further by mapping tokenisation and masking directly to PCI DSS 4.0 controls, such as Requirement 3.4.1, which covers masking PAN when displayed, and Requirement 3.5.1, which mandates rendering stored PAN unreadable. This alignment removes guesswork during audits, cutting preparation time from weeks to just days.

PCI-DSS 4.0 kills the scan-once-and-forget approach. Your quarterly vulnerability scan? Worthless if you're deploying code daily.

– Abhay Bhargav, CEO, SecurityReview.ai

Dashboards can monitor specific encryption signals, such as unexpected traffic in the CDE, unauthorised changes to IAM permissions for encryption keys, or attempts to bypass web application firewall (WAF) rules. They also help track performance metrics like Mean Time to Remediation (MTTR) for vulnerabilities and the percentage of code covered by automated security tests. Additionally, it’s essential to ensure logging pipelines automatically remove sensitive data - like Primary Account Numbers (PAN) or CVV information - to prevent unintentional scope expansion. Integration with secret management tools can further verify that key rotation processes are functioning as intended.
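One way to keep PANs out of logs is a redaction filter in the logging pipeline itself. The sketch below uses Python's standard `logging` module; it assumes PANs appear as unbroken runs of 13 to 19 digits and uses a Luhn check to limit false positives, so card numbers written with spaces or dashes, and CVV values, would need additional handling.

```python
import logging
import re

PAN_CANDIDATE = re.compile(r"\b\d{13,19}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for index, ch in enumerate(reversed(number)):
        digit = int(ch)
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def redact_pans(text: str) -> str:
    """Replace Luhn-valid digit runs with a fixed placeholder."""
    def _replace(match: re.Match) -> str:
        candidate = match.group(0)
        return "[REDACTED-PAN]" if luhn_valid(candidate) else candidate
    return PAN_CANDIDATE.sub(_replace, text)


class PanRedactionFilter(logging.Filter):
    """Logging filter that scrubs PAN-like values before records are emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact_pans(record.getMessage())
        record.args = ()  # message is already formatted
        return True
```

Attaching the filter to a handler, for example with `handler.addFilter(PanRedactionFilter())`, applies it to every record that handler emits.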

| Monitoring Signal | PCI DSS Relevance | Dashboard Action |
|---|---|---|
| TLS Configuration | Requirement 4.2 | Alert if weak TLS versions or cipher suites are used |
| Key Rotation | Requirement 3.7.4 | Track key expiry and log rotation events |
| IAM Changes | Requirement 7.2.1 | Monitor for unauthorised access to key management |
| Configuration Drift | Requirement 1.2.2 | Detect changes to security groups or network policies |
| Vulnerability Status | Requirement 6.3.2 | Display real-time results from security scans |
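The key rotation signal in the table can be computed with a simple age check. This sketch assumes the dashboard already has each key's last rotation timestamp; the key identifiers and the 365-day cryptoperiod are illustrative values, not figures taken from the standard.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List


def keys_due_for_rotation(last_rotated: Dict[str, datetime],
                          max_age_days: int, now: datetime) -> List[str]:
    """Return the IDs of keys whose last rotation is older than the allowed age."""
    cutoff = now - timedelta(days=max_age_days)
    return sorted(
        key_id for key_id, rotated_at in last_rotated.items()
        if rotated_at <= cutoff
    )


# Hypothetical inventory of keys and their last rotation times.
key_inventory = {
    "pan-encryption-key": datetime(2024, 1, 1, tzinfo=timezone.utc),
    "token-service-key": datetime(2025, 3, 1, tzinfo=timezone.utc),
}

overdue = keys_due_for_rotation(
    key_inventory, max_age_days=365,
    now=datetime(2025, 6, 1, tzinfo=timezone.utc),
)
print(overdue)
```

Passing `now` as a parameter rather than reading the clock inside the function keeps the check deterministic, which makes it straightforward to test in a pipeline.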

Hokstad Consulting's Role in DevOps Optimisation

For organisations navigating the complexities of PCI DSS encryption compliance while maintaining rapid deployment cycles, Hokstad Consulting offers tailored DevOps solutions. Their expertise spans cloud cost optimisation, strategic migrations, and custom automation, ensuring security is seamlessly integrated into your CI/CD pipelines from the outset.

Hokstad Consulting excels in implementing automated key management, compliance-as-code frameworks, and real-time monitoring dashboards that align with PCI DSS 4.0.1 standards. Whether migrating to public, private, or hybrid cloud setups, their solutions can reduce cloud costs by 30–50% while upholding strict encryption requirements. They also provide ongoing DevOps support, infrastructure monitoring, and cloud security audits to ensure your compliance posture remains strong as your environment evolves. With their help, you can build scalable, PCI-compliant systems that integrate continuous monitoring into every stage of your CI/CD pipeline.

Conclusion

Achieving PCI DSS encryption compliance doesn’t have to be a resource-draining process or disrupt deployment cycles. With PCI DSS 4.0.1, DevOps teams are encouraged to go beyond periodic scans and adopt continuous, automated compliance practices. By integrating encryption controls into CI/CD pipelines through compliance-as-code, automating key rotation with cloud-native Key Management Systems (KMS), and enforcing TLS 1.2 or higher for all data in transit, organisations can uphold security without sacrificing agility. These steps ensure that security measures are seamlessly embedded into the deployment pipeline, allowing speed and safety to work hand in hand.

Tokenisation stands as the most effective way to minimise compliance scope. By replacing plaintext cardholder data with irreversible tokens, organisations can significantly reduce the complexity of compliance. As Evervault highlights, "The greatest reduction in burden is achieved by ensuring you never handle plaintext card data in your environment" [22]. This aligns with earlier discussions on leveraging automated, token-based methods to simplify PCI scope management.

To secure PCI compliance, centralised key management, automated dashboards, and identity-based access controls are essential. These tools minimise human error, provide real-time monitoring, and generate audit-ready records continuously. Non-compliance can result in fines ranging from £2.40 to £14.40 per card breached [22], while achieving compliance could require an engineering investment exceeding £80,000 over a 12-month period [22].

For organisations looking to streamline their compliance efforts, Hokstad Consulting offers tailored solutions. They specialise in integrating compliance-as-code frameworks, automated key management, and real-time monitoring dashboards aligned with PCI DSS 4.0.1. Their expertise can help reduce cloud costs by 30–50%, all while maintaining stringent encryption standards. Whether transitioning to public, private, or hybrid cloud environments, their approach ensures compliance becomes a natural, cost-efficient part of your deployment process.

FAQs

How can DevOps teams maintain PCI DSS encryption compliance without slowing down CI/CD pipelines?

DevOps teams can uphold PCI DSS encryption compliance while keeping their CI/CD pipelines running smoothly by weaving automation and security best practices into their processes. For example, automating tasks like key management and data encryption not only ensures compliance but also avoids unnecessary delays. Using tools designed to securely handle secrets and encryption keys can significantly cut down on manual work and prevent workflow bottlenecks.

To make compliance even more seamless, it's essential to establish clear security controls right from the start. This includes encrypting data both at rest and in transit. Automated compliance checks are another game-changer - they help spot vulnerabilities early, reducing the risk of last-minute disruptions during deployment. By embracing a DevSecOps mindset, teams can fully integrate security into their CI/CD workflows, allowing for deployments that are fast, secure, and compliant.

How does tokenisation help DevOps teams simplify PCI DSS compliance?

Tokenisation allows DevOps teams to minimise the scope of PCI DSS compliance by substituting sensitive cardholder data with non-sensitive tokens. These tokens are essentially meaningless on their own, ensuring that actual card data isn't unnecessarily stored or transmitted.

By reducing the exposure of sensitive information, tokenisation strengthens security while simplifying compliance efforts. It makes audits and reporting much easier, freeing up teams to concentrate on improving workflows without sacrificing data security.

How can automated compliance checks improve security in CI/CD pipelines?

Automated compliance checks play a crucial role in boosting security within CI/CD pipelines by embedding PCI DSS encryption requirements directly into the development workflow. This integration ensures that security standards are consistently upheld at every stage, significantly lowering the chances of human error or lapses in compliance.

By flagging and halting non-compliant changes automatically, these checks help close security gaps and prevent potential exploits before they become an issue. This proactive method keeps both infrastructure and application code secure while ensuring they meet regulatory requirements throughout the entire development lifecycle.