Managing deployments across multiple cloud platforms like AWS, Azure, and Google Cloud can be complex and error-prone without automation. Infrastructure as Code (IaC) simplifies this process by treating infrastructure as version-controlled code, enabling consistent, automated, and repeatable setups. Multi-cloud CI/CD pipelines combine IaC with tools like Terraform, Pulumi, and Kubernetes to streamline deployments, reduce vendor lock-in, and improve cost efficiency.

Key takeaways:

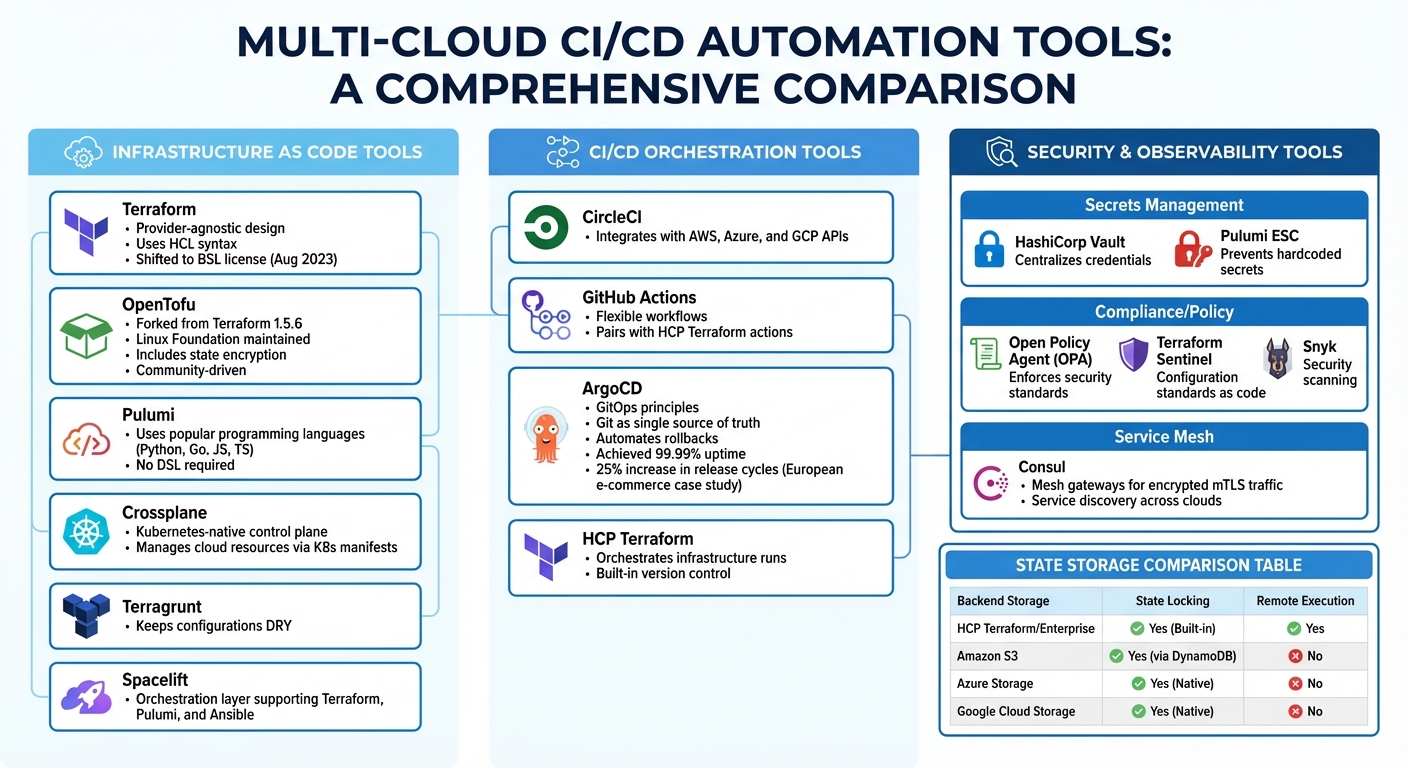

- IaC Tools: Terraform, OpenTofu, Pulumi, and Crossplane help manage resources across clouds.

- CI/CD Orchestration: Tools like CircleCI, GitHub Actions, and ArgoCD automate deployments.

- Security: Use HashiCorp Vault, Open Policy Agent (OPA), and short-lived credentials for safe pipelines.

- Cost Management: Integrate cost estimation and enforce tagging policies to control expenses.

Automating multi-cloud pipelines with IaC ensures scalable, secure, and cost-effective deployments across cloud environments.


Tools and Components for Multi-Cloud CI/CD Automation

[Figure: Multi-Cloud CI/CD Tools Comparison: IaC, Orchestration, and Security Solutions]

Building a multi-cloud CI/CD pipeline requires a carefully chosen set of tools that work together seamlessly. These include infrastructure provisioning solutions, orchestration platforms, and security tools. Below, we explore how each category contributes to creating efficient pipelines in multi-cloud environments.

Infrastructure as Code Tools

When it comes to provisioning infrastructure across multiple clouds, Terraform is a go-to choice. Its provider-agnostic design allows users to manage resources across different platforms using plugins that are regularly updated [5]. However, in August 2023, HashiCorp shifted Terraform's licence to the Business Source Licence (BSL), prompting some organisations to explore alternatives [6].

One such alternative is OpenTofu, which was forked from Terraform 1.5.6 and is now maintained under the Linux Foundation [5][6]. OpenTofu retains Terraform's HCL syntax but introduces additional features such as state encryption, offering a community-driven approach for those wary of vendor lock-in [6].

Pulumi takes a different route by allowing infrastructure to be defined using popular programming languages like Python, Go, JavaScript, or TypeScript [6]. This eliminates the need to learn a domain-specific language, making it easier for developers to integrate infrastructure code into their existing workflows.

For Kubernetes-heavy environments, Crossplane serves as a universal control plane. It enables Kubernetes clusters to manage cloud resources - like databases, storage, and networking - using familiar Kubernetes manifests [6]. This Kubernetes-native approach is gaining traction among teams looking to manage non-containerised resources alongside their container workloads.

Other tools worth noting include Terragrunt, which helps keep configurations DRY (Don't Repeat Yourself), and Spacelift, an orchestration layer that supports workflows combining Terraform, Pulumi, and Ansible [6][5]. As Gareth Lowe, Director of Technology at Airtime Rewards, puts it:

Spacelift has fundamentally changed how we think about infrastructure - for the better [6].

These tools simplify the complexities of managing infrastructure in multi-cloud setups, aligning with the overarching principles of unified pipeline management.

CI/CD Orchestration Tools

Once infrastructure is defined, orchestration tools handle the deployment process across different clouds. CircleCI integrates effectively with AWS, Azure, and GCP APIs, while GitHub Actions offers flexible workflows, especially when paired with HashiCorp's HCP Terraform actions for pre- and post-run checks.

For Kubernetes deployments, ArgoCD is a standout choice. It applies GitOps principles by using Git repositories as the single source of truth, ensuring consistent application states across clusters and automating rollbacks when needed. A European e-commerce platform led by CTO Maria Jensen implemented ArgoCD across Google Cloud and Azure in 2023, achieving 99.99% uptime and a 25% increase in release cycles.

HCP Terraform also plays a role here, orchestrating infrastructure runs with built-in version control. To run Terraform commands unattended in CI/CD pipelines, pass the -input=false flag so that no command waits on a manual prompt, and set the TF_IN_AUTOMATION environment variable to tailor the output for automation [2].

Security and Observability Tools

Security is non-negotiable in multi-cloud pipelines. Tools like HashiCorp Vault and Pulumi ESC centralise secret management, reducing the risk of exposing sensitive credentials such as API keys or passwords [8][3]. As Julie Peterson, Sr. Product Marketing Manager at Cycode, explains:

A seemingly harmless code change that makes its way through a compromised pipeline could lead to security breaches, system compromise, and significant operational disruptions [8].

Policy-as-code frameworks, such as Open Policy Agent (OPA) and Terraform Sentinel, allow teams to enforce security and compliance standards before infrastructure is deployed [3][7]. For secure service-to-service communication, Consul uses mesh gateways to provide encrypted mTLS traffic and service discovery across clouds [4].

| Tool Category | Example Tools | Role in Multi-Cloud CI/CD |
|---|---|---|
| Secrets Management | HashiCorp Vault, Pulumi ESC | Centralises credentials and prevents hardcoded secrets [8][3] |
| Compliance/Policy | OPA, Terraform Sentinel, Snyk | Enforces security and configuration standards as code [3][7] |
| Service Mesh | Consul (Mesh Gateways) | Ensures secure, encrypted mTLS traffic between clouds [4] |

To simplify troubleshooting, configure all resources to send logs to a centralised destination, such as a shared CloudWatch log group or a Prometheus namespace [9]. Additionally, use least-privilege access controls - whether through cloud provider IAM roles or federated identity management - to limit the impact of potential security breaches [8][3].

Building a Multi-Cloud IaC Architecture

Designing a multi-cloud infrastructure requires a modular and standardised approach to minimise errors and maintain consistency over time [10]. As Rifki Karim aptly puts it:

Infrastructure-as-Code (IaC) isn't just helpful, it's foundational [10].

The choices you make today will directly impact your ability to scale, troubleshoot, and maintain your infrastructure pipelines in the future.

Modular Code Design Principles

Breaking infrastructure into reusable modules is a cornerstone of effective multi-cloud architecture. These modules should follow principles like encapsulation (grouping related resources), privileges (aligning with team responsibilities), and volatility (separating long-lived resources like databases from short-lived ones like application servers) [16].

To keep things manageable, limit root configurations to fewer than 100 resources per state file. This not only speeds up execution but also reduces the impact of potential issues [15]. For clarity, name modules using the format terraform-<cloud_provider>-<function>, such as terraform-aws-vpc or terraform-azure-database [16]. Avoid creating modules that encapsulate a single resource type - this adds unnecessary complexity without meaningful abstraction [17].
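The naming format and the resource ceiling above lend themselves to an automated check. The following is a minimal sketch, assuming a small set of provider slugs (`KNOWN_PROVIDERS`) and hypothetical helper names; it is not part of Terraform itself.

```python
import re

# Assumed provider slugs; extend this set to match your own module registry.
KNOWN_PROVIDERS = {"aws", "azure", "google"}

# Ceiling taken from the guidance above: fewer than 100 resources per state file.
MAX_RESOURCES_PER_STATE = 100

MODULE_NAME = re.compile(
    r"^terraform-(?P<provider>[a-z0-9]+)-(?P<function>[a-z0-9]+(?:-[a-z0-9]+)*)$"
)


def check_module_name(name: str) -> list[str]:
    """Return a list of problems; an empty list means the name follows the format."""
    match = MODULE_NAME.match(name)
    if match is None:
        return [f"{name!r} does not match terraform-<cloud_provider>-<function>"]
    if match["provider"] not in KNOWN_PROVIDERS:
        return [f"{name!r} uses unknown provider {match['provider']!r}"]
    return []


def state_is_too_large(resource_count: int) -> bool:
    """True when a root configuration reaches the 100-resource ceiling."""
    return resource_count >= MAX_RESOURCES_PER_STATE
```

A check like this could run in a pre-merge job so that badly named modules or oversized root configurations are flagged before review.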

Each module should include a standard file set:

- main.tf for the entry point

- variables.tf for inputs

- outputs.tf for exported values

- versions.tf for provider requirements

- README.md for documentation [17]
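The file set listed above can be verified mechanically. This is a small sketch with a hypothetical helper name (`missing_module_files`); the module path you pass in is your own.

```python
from pathlib import Path

# The standard file set described in the list above.
REQUIRED_FILES = ("main.tf", "variables.tf", "outputs.tf", "versions.tf", "README.md")


def missing_module_files(module_dir: Path) -> list[str]:
    """List the standard files that are absent from a module directory."""
    return [name for name in REQUIRED_FILES if not (module_dir / name).is_file()]
```

For example, `missing_module_files(Path("modules/terraform-aws-vpc"))` returns an empty list only when all five files are present.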

Always define at least one output for every resource in a module. This ensures that dependencies between modules are correctly inferred [17]. With these robust modules in place, managing state becomes the next critical task.

Managing State and Version Control

In multi-cloud setups, remote state storage is a must. It ensures a single source of truth and facilitates team collaboration. Popular options include Amazon S3, Azure Blob Storage, Google Cloud Storage, or HCP Terraform [11][14]. For added safety, choose backends that support state locking - for example, S3 with DynamoDB, Azure Storage, or Google Cloud Storage [11][14].

| Backend Storage | State Locking Support | Remote Execution Support |
|---|---|---|
| HCP Terraform / Enterprise | Yes (Built-in) | Yes [14] |
| Amazon S3 | Yes (via DynamoDB) | No [14] |
| Azure Storage | Yes (Native) | No [14] |
| Google Cloud Storage | Yes (Native) | No [14] |

When managing environments, adopt a directory-based structure for configurations that differ significantly (e.g., dev, staging, prod). This approach helps contain the impact of changes [13]. For nearly identical environments, workspaces can be used, but they require careful CLI management [13][2]. Use branching strategies to manage changes: keep stable code in a main branch and use environment-specific branches for root configurations. For example, promote changes from dev to prod by merging branches [12].

For handling secrets, rely on dedicated secret managers like Google Secret Manager and reference them via data sources [12]. In CI/CD pipelines, use the -input=false flag to avoid interactive prompts, and set the TF_IN_AUTOMATION environment variable to optimise log output [2].

Proper state management is the backbone of consistent deployments, ensuring that your infrastructure remains reliable across clouds.

Directory Structure and Naming Conventions

To maintain clarity and scalability, organise your code systematically. Use a top-level modules/ directory for reusable components and an environments/ directory for root configurations, such as environments/dev/main.tf [15]. Align repositories with team boundaries, such as a dedicated networking repository, to establish clear ownership [12].

Adopt snake_case for resource names [17]. For numeric variables, include units in the names (e.g., ram_size_gb or disk_size_mb), and use positive phrasing for Booleans (e.g., enable_external_access) [17]. Tagging resources consistently is also critical - untagged resources can become invisible for cost tracking and management.
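The naming rules above can be expressed as a simple lint. This sketch is a heuristic only: the unit suffixes and negative prefixes are assumptions chosen for illustration, and the function name is hypothetical.

```python
import re

SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(?:_[a-z0-9]+)*$")

# Assumed suffixes and prefixes; adjust both tuples to your own style guide.
UNIT_SUFFIXES = ("_gb", "_mb", "_kb", "_seconds", "_minutes", "_percent", "_count")
NEGATIVE_PREFIXES = ("disable_", "no_", "not_")


def lint_variable(name: str, var_type: str) -> list[str]:
    """Return naming problems for one variable; var_type is 'number', 'bool', etc."""
    problems = []
    if not SNAKE_CASE.match(name):
        problems.append("name is not snake_case")
    if var_type == "number" and not name.endswith(UNIT_SUFFIXES):
        problems.append("numeric variable has no unit suffix")
    if var_type == "bool" and name.startswith(NEGATIVE_PREFIXES):
        problems.append("boolean uses negative phrasing")
    return problems
```

Dedicated linters such as tflint cover far more ground; the point here is only to show how small each individual rule is.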

In root modules, pin provider versions to a minor version (e.g., ~> 4.0.0). This allows for patch updates while avoiding breaking changes [15]. Use pre-commit hooks like terraform fmt, validate, and tflint to enforce coding standards [17]. Finally, keep module nesting shallow - ideally no more than one or two levels deep - to simplify both troubleshooting and maintenance [17].
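To make the effect of a constraint like ~> 4.0.0 concrete, here is a simplified sketch of how the pessimistic operator behaves: only the rightmost component may increase. It handles plain numeric versions only and is an illustration, not Terraform's own implementation.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '4.0.7' into (4, 0, 7)."""
    return tuple(int(part) for part in text.strip().split("."))


def satisfies_pessimistic(constraint: str, version: str) -> bool:
    """Check a numeric version against a '~> X.Y' or '~> X.Y.Z' constraint."""
    if not constraint.startswith("~>"):
        raise ValueError("only '~>' constraints are handled in this sketch")
    lower = parse_version(constraint[2:])
    if len(lower) < 2:
        raise ValueError("expected at least major.minor after '~>'")
    # Upper bound: drop the last component and bump the one before it.
    upper = lower[:-2] + (lower[-2] + 1,)
    candidate = parse_version(version)
    # Pad short versions with zeros so '4.0' compares like '4.0.0'.
    candidate += (0,) * (len(lower) - len(candidate))
    return lower <= candidate < upper
```

Under these rules, ~> 4.0.0 accepts 4.0.7 but rejects 4.1.0, whereas the looser ~> 4.0 would accept any 4.x release.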

Automating Multi-Cloud CI/CD Pipelines

Creating a CI/CD pipeline that deploys infrastructure across multiple clouds can look challenging, but leveraging Infrastructure as Code (IaC) principles makes it manageable. The process typically involves a few key steps: initialising the working directory, generating an execution plan, reviewing proposed changes, and finally, applying the changes [2]. However, multi-cloud setups come with their own set of challenges, like handling state management, managing credentials, and orchestrating deployments effectively.

Pipeline Stages and Workflow

A well-structured multi-cloud pipeline starts with validation and linting, which acts as the first checkpoint. This step identifies syntax errors or formatting issues before any resources are created [19]. Following this, the testing and policy-as-code stage ensures compliance and security. Tools like Open Policy Agent (OPA) can automatically block resources that fail to meet requirements, such as missing metadata tags or open security group rules [19][1].
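In practice these rules would be written in Rego for OPA. The Python sketch below is a stand-in that shows the same logic against the JSON form of a plan (as produced by terraform show -json), assuming a simplified view of its resource_changes structure. The required tag keys are assumptions.

```python
# Assumed tag keys; replace with the metadata your organisation requires.
REQUIRED_TAGS = {"owner", "project"}


def policy_violations(plan: dict) -> list[str]:
    """Scan a plan's resource_changes for missing tags and open ingress rules."""
    violations = []
    for change in plan.get("resource_changes", []):
        address = change.get("address", "<unknown>")
        # 'after' is null for resources being destroyed, so fall back to {}.
        after = (change.get("change") or {}).get("after") or {}

        # Only resources that expose a 'tags' attribute are checked for tags.
        if "tags" in after:
            missing = REQUIRED_TAGS - set(after.get("tags") or {})
            if missing:
                violations.append(f"{address}: missing tags {sorted(missing)}")

        if change.get("type") == "aws_security_group":
            for rule in after.get("ingress") or []:
                if "0.0.0.0/0" in (rule.get("cidr_blocks") or []):
                    violations.append(f"{address}: ingress open to 0.0.0.0/0")
    return violations
```

A pipeline step could fail the build whenever the returned list is non-empty, which mirrors how a policy engine blocks a non-compliant plan.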

The planning stage is where a preview of changes is generated for cloud providers like AWS, Azure, or GCP. These previews should be saved as build artefacts for later review [1][4]. The final step, the deployment stage, applies the approved changes. For production environments, it’s common to include a manual approval step [1].

To streamline execution, run init, plan, and apply with the -input=false flag and set the TF_IN_AUTOMATION environment variable, so that no command waits on a prompt and the output suits CI logs [2]. If the plan and apply stages are executed on separate machines, make sure to archive the entire working directory, including the .terraform folder, and restore it to the same absolute path to avoid pathing errors [2].
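The non-interactive sequence described above can be sketched as a small wrapper. The helper names and the plan file name (tfplan) are assumptions for illustration; the flags are the ones named in the text, with the plan saved to a file so that apply executes exactly what was reviewed.

```python
import os
import subprocess


def automation_env(base=None) -> dict:
    """Copy the environment and mark the run as automated."""
    env = dict(os.environ if base is None else base)
    env["TF_IN_AUTOMATION"] = "1"  # any non-empty value is enough
    return env


def pipeline_commands(plan_file: str = "tfplan") -> list[list[str]]:
    """Return the init, plan, and apply commands for an unattended run."""
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-out={plan_file}"],
        ["terraform", "apply", "-input=false", plan_file],
    ]


def run_pipeline(workdir: str) -> None:
    """Run each command in order, stopping at the first failure."""
    env = automation_env()
    for command in pipeline_commands():
        subprocess.run(command, cwd=workdir, env=env, check=True)
```

In a real pipeline the plan and apply steps would usually sit in separate jobs with an approval gate between them, so `run_pipeline` is best read as a local illustration of the ordering.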

With these pipeline stages in place, focus shifts to how deployments are handled across multiple clouds.

Deployment Across Multiple Clouds

When deploying across clouds, you can choose between two strategies: sequential deployment for scenarios where resources are interdependent, or parallel deployment to speed up provisioning when resources are independent [9].
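The two strategies can be combined: independent stacks run in parallel, and dependent stacks wait for their prerequisites. Below is a minimal sketch using Python's standard graphlib module. The stack names and the `deploy` callable are hypothetical placeholders for whatever actually provisions each stack.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter


def deployment_waves(dependencies: dict[str, set[str]]) -> list[list[str]]:
    """Group stacks into waves; every stack in a wave has its prerequisites met."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


def deploy_all(dependencies: dict[str, set[str]], deploy) -> None:
    """Deploy wave by wave: parallel within a wave, sequential between waves."""
    for wave in deployment_waves(dependencies):
        with ThreadPoolExecutor(max_workers=len(wave)) as pool:
            # list() forces evaluation so an exception in any stack is raised here.
            list(pool.map(deploy, wave))
```

With a mapping such as `{"aws-eks": set(), "azure-aks": set(), "consul-mesh": {"aws-eks", "azure-aks"}}`, the two clusters form the first wave and the mesh follows once both have finished.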

For instance, in August 2023, HashiCorp showcased a project that provisioned an EKS cluster in AWS (us-east-2) and an AKS cluster in Azure using Terraform. The deployment used the terraform_remote_state data source to share cluster IDs across configurations, enabling the clusters to integrate via Consul mesh gateways. This setup involved managing 59 resources in AWS and 3 in Azure, all handled through a unified Terraform workflow [4].

Credential management is another critical aspect. Using OpenID Connect (OIDC), CI/CD runners can assume temporary IAM roles. HashiCorp explains:

HCP Terraform's dynamic provider credentials allow Terraform runs to assume an IAM role through native OpenID Connect (OIDC) integration and obtain temporary security credentials for each run... These credentials are usable for only one hour by default [18].

This approach reduces the risk window for potential attackers.

Once resources are deployed across clouds, maintaining visibility and monitoring becomes essential.

Integration with Monitoring and Alerts

To ensure smooth operations, centralise logs and metrics from tools like Terraform and Pulumi into a single destination, such as AWS CloudWatch or Grafana [9]. Using a shared metrics namespace (e.g., InfrastructureDeployment) allows teams to monitor error rates across clouds and tools from one dashboard [9].
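One way to picture the shared namespace is as a common payload shape that every tool emits after a run. The sketch below builds a CloudWatch-style metric payload. The metric name and dimension names are assumptions, and the function only constructs the dictionary rather than sending it.

```python
NAMESPACE = "InfrastructureDeployment"  # shared namespace named in the text


def deployment_metric(cloud: str, tool: str, succeeded: bool) -> dict:
    """Build a CloudWatch-style payload recording one deployment result."""
    return {
        "Namespace": NAMESPACE,
        "MetricData": [
            {
                "MetricName": "DeploymentErrors",  # hypothetical metric name
                "Dimensions": [
                    {"Name": "Cloud", "Value": cloud},
                    {"Name": "Tool", "Value": tool},
                ],
                "Value": 0.0 if succeeded else 1.0,  # 1.0 marks a failed run
                "Unit": "Count",
            }
        ],
    }
```

With boto3 installed and credentials configured, a payload like this could be passed to `boto3.client("cloudwatch").put_metric_data(**payload)`; averaging the metric per dimension then gives an error rate per cloud and tool.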

Post-deployment, implement drift detection to regularly check for discrepancies between your live resources and the IaC state. This automated process helps catch manual changes that might otherwise go unnoticed and ensures future deployments don’t encounter unexpected failures [1].
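A scheduled drift check can be built on terraform plan with the -detailed-exitcode flag, which exits with 0 when there is nothing to change, 1 on error, and 2 when changes are pending. The following is a minimal sketch; the function names and status labels are assumptions.

```python
import subprocess

# Exit codes documented for `terraform plan -detailed-exitcode`.
EXIT_MEANING = {0: "in_sync", 1: "error", 2: "drift"}


def classify_plan_exit(code: int) -> str:
    """Translate the plan exit code into a status label."""
    return EXIT_MEANING.get(code, "error")


def detect_drift(workdir: str) -> str:
    """Run a plan in workdir and report whether live resources match the code."""
    result = subprocess.run(
        ["terraform", "plan", "-detailed-exitcode", "-input=false"],
        cwd=workdir,
        capture_output=True,
        text=True,
    )
    return classify_plan_exit(result.returncode)
```

Running `detect_drift` on a schedule and alerting on "drift" is one way to surface manual changes before the next deployment trips over them.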

Security, Governance, and Cost Optimisation

Building security and cost controls directly into your multi-cloud CI/CD pipeline isn't just a good idea - it's essential. Gartner predicts that through 2025, 99% of cloud security failures will be the customer's fault [21]. The good news? Many of these issues can be avoided by treating security and cost management as part of your code, not as an afterthought.

Security and Compliance

At the heart of multi-cloud governance lies Policy-as-Code (PaC). Using OPA policies, you can enforce rules across multiple clouds to prevent violations before they happen. For instance, you can block public storage buckets on AWS, Azure, and GCP simultaneously [20]. These policies integrate into your CI/CD pipeline, catching issues before deployment.

Dynamic credential management is another must. Replace static, long-lived secrets with dynamic, short-lived OIDC tokens that automatically expire after about an hour [21]. Additionally, configure CI/CD pipelines to use stage-specific service accounts. For example, the plan stage should only have read permissions, while the apply stage requires write access [23].

To maintain security without slowing developers down, implement a golden module strategy. This involves creating a private registry of pre-approved, secure IaC templates - like a secure S3 bucket or a compliant database instance - that already follow best practices [30, 31]. Developers can self-serve with these modules, while governance remains intact. Also, ensure sensitive files (e.g., .tfstate, .tfvars, .terraform) are added to .gitignore to avoid accidental leaks [22].
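As a safety net behind .gitignore, a pipeline can also scan the list of tracked files for the sensitive paths mentioned above. This sketch uses hypothetical helper names and expects POSIX-style paths, such as those printed by git ls-files.

```python
from pathlib import PurePosixPath

# File endings treated as sensitive, based on the examples in the text.
SENSITIVE_SUFFIXES = (".tfstate", ".tfstate.backup", ".tfvars")


def is_sensitive(path: str) -> bool:
    """True for state files, variable files, and anything under a .terraform directory."""
    parts = PurePosixPath(path).parts
    return ".terraform" in parts or path.endswith(SENSITIVE_SUFFIXES)


def leaked_files(tracked_paths: list[str]) -> list[str]:
    """Return the tracked paths that should never have been committed."""
    return [path for path in tracked_paths if is_sensitive(path)]
```

Note that the dependency lock file, .terraform.lock.hcl, is not flagged: it is meant to be committed, and the check only matches a path component named exactly .terraform.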

Once security is in place, managing costs becomes the next challenge.

Cost Management Strategies

Automation and IaC can simplify deployments, but they should also help you keep costs under control. By integrating cost estimation tools into your pipeline, you can make spending visible before decisions are final. For example, HCP Terraform can provide cost estimates between the plan and apply stages [24]. As HashiCorp's Michael Fonseca explains:

Engineers are becoming the new cloud financial controllers as finance teams begin to lose some of their direct control over new fast-paced, on-demand infrastructure consumption models driven by cloud [26].

Sentinel policies are another way to manage costs effectively. Use them to enforce rules like restricting non-production environments to smaller instance types (e.g., t2.micro or t2.small) and requiring mandatory resource tagging (e.g., owner, cost centre, project ID). This makes it easier to track resources across multiple cloud billing systems [35, 36]. This is especially important since 94% of organisations report avoidable cloud costs [25].
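Sentinel policies are written in HashiCorp's own policy language. The Python sketch below is only a stand-in showing the instance-size rule against the JSON form of a plan, assuming a simplified view of its resource_changes structure and an environment label supplied by the pipeline.

```python
# Instance types permitted outside production, taken from the example in the text.
ALLOWED_NON_PROD_TYPES = {"t2.micro", "t2.small"}


def oversized_instances(plan: dict, environment: str) -> list[str]:
    """List EC2 instances in a non-production plan that exceed the allowed sizes."""
    if environment == "prod":
        return []  # the size restriction applies to non-production only
    offenders = []
    for change in plan.get("resource_changes", []):
        if change.get("type") != "aws_instance":
            continue
        # 'after' is null for resources being destroyed, so fall back to {}.
        after = (change.get("change") or {}).get("after") or {}
        instance_type = after.get("instance_type")
        if instance_type is not None and instance_type not in ALLOWED_NON_PROD_TYPES:
            offenders.append(f"{change.get('address')}: {instance_type}")
    return offenders
```

The same pattern extends to other providers by adding their resource types and size attributes, which is where a shared policy library starts to pay off across clouds.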

For those struggling with cloud expenses, Hokstad Consulting offers expertise in cloud cost engineering. Their services - like automated scaling, regular cost audits, and infrastructure optimisation - can help businesses cut costs by 30–50%.

Conclusion

Automating multi-cloud CI/CD with Infrastructure as Code (IaC) creates systems that are scalable, dependable, and cost-effective. Tools like Terraform and Pulumi allow you to codify your infrastructure, eliminating manual drift and ensuring consistent provisioning across platforms like AWS, Azure, and GCP. This consistency streamlines operations, as teams can rely on a single source of truth rather than managing multiple cloud interfaces.

Automated pipelines, built on consistent infrastructure delivery, bring even greater reliability when combined with GitOps principles and self-healing tools like ArgoCD and Kubernetes. For example, in 2022, a global fintech company using Kubernetes and Crossplane reduced incident downtime from 2 hours to under 5 minutes, while cutting operational costs by 40% [4].

Incorporating Policy-as-Code and automated cost controls into your pipeline is another key step. By embedding cost estimation and compliance checks directly into your workflows, you can avoid budget overruns before they occur.

To complete the automation framework, integrate security and cost management measures. Use drift detection, implement zero-downtime strategies like blue-green or canary deployments, and centralise secrets management with tools such as HashiCorp Vault. For businesses grappling with cloud complexity or rising expenses, Hokstad Consulting offers DevOps transformation and cloud cost engineering services, helping organisations reduce costs by 30–50% while enhancing deployment speed and infrastructure resilience.

FAQs

What are the benefits of using Infrastructure as Code (IaC) for automating multi-cloud CI/CD pipelines?

Using Infrastructure as Code (IaC) in multi-cloud CI/CD pipelines brings several important benefits.

One major advantage is consistent resource provisioning. With IaC, you can standardise configurations across various cloud providers, reducing the likelihood of errors and ensuring smooth integration between different platforms. This consistency makes deployments more reliable and simplifies overall management.

Another benefit is the boost to automation. By defining infrastructure through code, teams can version, review, and deploy it directly through CI/CD pipelines. This approach cuts down on manual tasks, accelerates deployment processes, and allows for faster iterations. Plus, it provides better control over infrastructure changes, reducing the chances of unintended alterations.

Lastly, IaC plays a crucial role in resilience and disaster recovery. It allows for the consistent deployment of infrastructure across multiple regions and clouds. This capability ensures that systems can recover quickly from failures, improving fault tolerance and making multi-cloud management more straightforward.

How can I maintain security and compliance in multi-cloud CI/CD pipelines?

To keep multi-cloud CI/CD pipelines secure and compliant, it's essential to follow key practices centred on infrastructure security, policy enforcement, and continuous monitoring. By using Infrastructure as Code (IaC) tools like Terraform or Pulumi, you can standardise resource provisioning across different cloud providers. This approach not only minimises risks but also supports compliance requirements.

Protecting the pipeline itself is just as critical. Focus on access controls, secret management, and automated security testing to safeguard sensitive data and workflows. Applying policy guardrails and running compliance checks on a regular basis helps ensure your pipelines align with regulatory standards. On top of that, integrating monitoring tools gives you real-time visibility, making it easier to respond quickly to any potential issues.

What are the best ways to manage costs in a multi-cloud environment?

Managing costs in a multi-cloud environment requires a thoughtful mix of strategies and automation. One effective approach is using Infrastructure as Code (IaC) tools like Terraform or Pulumi. These tools ensure consistent resource provisioning across different cloud providers, helping to avoid over-provisioning and cutting down on unnecessary expenses.

Automation plays a crucial role too. By automating deployments and workflows - such as linking IaC tools with version control systems - you can minimise manual errors and ensure infrastructure changes are predictable. On top of that, resource tagging combined with real-time cost monitoring provides a clear view of cloud spending, making it easier to identify and address inefficiencies.

Another essential step is adopting policy-driven governance. This approach enforces spending limits and resource usage policies automatically, reducing the risk of overspending. By blending these methods, organisations can keep their multi-cloud costs under control while still enjoying the flexibility and scalability they need.